Zeo sleep self-experiments

EEG recordings of sleep and my experiments with things affecting sleep quality or durations: melatonin, potassium, vitamin D etc.

I discuss my beliefs about Quantified Self, and demonstrate with a series of single-subject design self-experiments using a Zeo. A Zeo records sleep via EEG; I have made many measurements and performed many experiments. This is what I have learned so far:

the Zeo headband is wearable long-term

melatonin improves my sleep

one-legged standing does little

Vitamin D at night damages my sleep & Vitamin D in morning does not affect my sleep

potassium (over the day but not so much the morning) damages my sleep and does not improve my mood/productivity

small quantities of alcohol appear to make little difference to my sleep quality

I may be better off changing my sleep timing by waking up somewhat earlier & going to bed somewhat earlier

lithium orotate does not affect my sleep

Redshift causes me to go to bed earlier

ZMA: inconclusive results slightly suggestive of benefits

Quantified Self (QS) is a movement with many faces and as many variations as participants, but the core of everything is this: experiment with things that can improve your life.

What Is QS?

Quantified Self is not expensive devices, or meet-ups, or videos, or even ebooks telling you what to do. Those are tools to an end. If reading this page does anything, my hope is to pass on to some readers the Quantified Self attitude: a playful thoughtful attitude, of wondering whether this thing affects that other thing and what implications could be easily tested. “Science” without the capital “S” or the belief that only scientists are allowed to think.

That’s all Quantified Self is, no matter how simple or complicated your devices, no matter how automated your data collection, no matter whether you found a pedometer lying around or hand-engineered your own EEG headset.

Quantified Self is simply about having ideas, gathering some data, seeing what it says, and improving one’s life based on the data. If gathering data is too hard and would make your life worse off—then don’t do it! If the data can’t make your life better—then don’t do it! Not every idea can or should be tested.

The QS cycle is straightforward and flexible:

Have an idea

Gather data

Test the data

Make a change; GOTO 1

Any of these steps can overlap: you may be collecting sleep data long before you have the idea (in the expectation that you will have an idea), or you may be making the change as part of the data in an experimental design, or you may inadvertently engage in a “natural experiment” before wondering what the effects were (perhaps the baby wakes you up on random nights and lets you infer the costs of poor sleep).

The point is not publishable scientific rigor. If you are the sort of person who wants to run such rigorous self-experiments, fantastic! The point is making your life better, for which scientific certainty is not necessary: imagine you are choosing between equally priced sleep pills and equal safety; the first sleep pill will make you go to sleep faster by 1 minute and has been validated in countless scientific trials, and while the second sleep pill has in the past week has ended the sweaty nightmares that have plagued you every few days since childhood but alas has only a few small trials in its favor—which would you choose? I would choose the second pill!

To put it in more economic/statistical terms, what we want from a self-experiment is for it to give us a confidence just good enough to tell whether the expected value of our idea is more than the idea will cost. But we don’t need more confidence unless we want to persuade other people! (So from this perspective, it is possible to do a QS self-experiment which is “too good”. Much like one can overpay for safety and buy too much insurance—like extra warranties on electronics such as video game consoles, a notorious rip-off.)

What QS Is Not: (Just) Data Gathering

One failure mode which is particularly dangerous for QSers is to overdo the data collection and collect masses of data they never use. Famous computer entrepreneur & mathematician Stephen Wolfram exemplified this for me in March 201214ya with his lengthy blog post “The Personal Analytics of My Life” in which he did some impressive graphing and exploration of data 1989–23201214ya: a third of a million (!) emails, full keyboard logging, calendar, phone call logs (with missed calls include), a pedometer, revision history of his tome A New Kind of Science, file types accessed per date, parsing scanned documents for dates, a treadmill, and perhaps more he didn’t mention.

Wolfram’s dataset is well-depicted in informative graphs, breathtaking in its thoroughness, and even more impressive for its duration. So why do I read his post with sorrow? I am sad for him because I have read the post several times, and as far as I can see, he has not benefited in any way from his data collection, with one minor exception:

Very early on, back in the 1990s, when I first analyzed my e-mail archive, I learned that a lot of e-mail threads at my company would, by a certain time of day, just resolve themselves. That was a useful thing to know, because if I jumped in too early I was just wasting my time.

Nothing else in his life was better 1989–23201214ya because he did all this, and he shows no indication that he will benefit in the future (besides having a very nifty blog post). And just reading through his post with a little imagination suggests plenty of experiments he could do:

He mentions that 7% of his keystrokes are the Backspace key.

This seems remarkably high—vastly higher than my own use of backspace—and must be slowing down his typing by a nontrivial amount. Why doesn’t he try a typing tutor to see if he can improve his typing skill, or learn the keyboard shortcuts in his text editor? If he is wasted >7% of all his typing (because he had to type what he is Backspacing over, of course), then he is wasting typing time, slowing things done, adding frustration to his computer interactions and worst, putting himself at greater risk of crippling RSI.

How often does he access old files? Since he records access to all files, he can ask whether all the logging is paying for itself.

Is there any connection between the steps his pedometer records and things like his mood or emailing? Exercise has been linked to many benefits, both physical and mental, but on the other hand, walking isn’t a very quick form of exercise. Which effect predominates? This could have the practical consequence of scheduling a daily walk just as he tries to make sure he can have dinner with his family.

Does a flurry of emails or phone calls disrupt his other forms of productivity that day? For example, while writing his book would he have been better off barricading himself in solitude or working on it in between other tasks?

His email counts are astonishingly high in general:

Is answering so many emails really necessary? Perhaps he has put too much emphasis on email communication, or perhaps this indicates he should delegate more—or if running Mathematica is so time-consuming, perhaps he should re-evaluate his life and ask whether that is what he truly wants to do now. I have no idea what the answer to any of these questions are or whether an experiment of any kind could be run on them, but these are key life decisions which could be prompted by the data—but weren’t.

Another QS piece(“It’s Hard to Stay Friends With a Digital Exercise Monitor”) struck me when the author, Jenna Wortham, reflected on her experience with her Nike+ FuelBand motion sensor:

The forgetfulness and guilt I experienced as my FuelBand honeymoon wore off is not uncommon, according to people who study behavioral science. The collected data is often interesting, but it is hard to analyze and use in a way that spurs change. “It doesn’t trigger you to do anything habitually,” said Michael Kim, who runs Kairos Labs, a Seattle-based company specializing in designing social software to influence behavior…Mr. Kim, whose résumé includes a stint as director of Xbox Live, the online gaming system created by Microsoft, said the game-like mechanisms of the Nike device and others like it were “not enough” for the average user. “Points and badges do not lead to behavior change,” he said.

Finally, Neal Stephenson, in discussing his treadmill desk use focuses on estimating mileage & caloric expenditure and showing the effects of bad posture he developed—but he entirely ignores issues of whether it affected his typing, his writing, or anything that might actually matter.

One thinks of a saying of W. Edwards Deming: “Experience by itself teaches nothing.” Indeed. A QS experiment is a 4-legged beast: if any leg is far too short or far too long, it can’t carry our burdens.

And with Wolfram and Wortham, we see that 2 legs of the poor beast have been amputated. They collected data, but they had no ideas and they made no changes in their life; and because QS was not part of their life, it soon left their life. Wortham seems to have dropped the approach entirely, and Wolfram may only persevere for as long as the data continues to be useful in demonstrating the abilities of his company’s products.

Zeo QS

On Christmas 201016ya, I received one of Zeo Inc’s (founded 200323ya, shutting down 2013) Zeo bedside unit after long coveting it and dreaming of using it for all sorts of sleep-related questions. (As of February 201313ya, the bedside unit seems to’ve been discontinued; the most comparable Zeo Inc. product seems to be the Zeo Sleep Manager Pro, ~$135.1$902013.) With it, I begin to apply my thoughts about Quantified Self.

A Zeo is a scaled-down (one-electrode) EEG sensor-headband, which happens to have an alarm clock attached. The EEG data is processed to estimate whether one is asleep and what stage of sleep one is in. Zeo breaks sleep down into waking, REM, light, and deep. (The phases aren’t necessarily that physiologically distinct.) It’s been compared with regular polysomnography by Zeo Inc and others1 and seems to be reasonably accurate. (Since regular sleep tests cost hundreds to thousands of dollars per session and are of questionable external validity since they are a different uncomfortable setting than your own bedroom, I am fine with a Zeo being just “reasonably” accurate in predicting PSG ratings.)

The data is much better than what you would get from more popular methods like cellphones with accelerometers, since an accelerometer only knows if you are moving or not, which isn’t a very reliable indicator of sleep2. (You could just be lying there staring at the ceiling, wide awake. Or perhaps the cat is kneading you while you are in light sleep.) As well, half the interest is how exactly sleep phases are arranged and how long the cycles are; you could use that information to devise a custom polyphasic schedule or just figure out a better nap length than the rule-of-thumb of 20 minutes. And the price isn’t too bad—$228.54$1502012 for the normal Zeo as of February 201214ya. (The basic mobile Zeo is much cheaper, but I’ve seen people complain about it and apparently it doesn’t collect the same data as more expensive mobile version or the original bedside unit.)

Tests

A thinker sees his own actions as experiments & questions—as attempts to find out something. Success and failure are for him answers above all.

I personally want the data for a few distinct purposes, but in the best Quantified Self vein, mostly experimenting:

more thoroughly quantifying the benefits of melatonin

and dose levels: 1.5mg may be too much. I should experiment with a variety: 0.1, 0.5, 1.0, 1.5, and 3mg?

quantifying the costs of modafinil

testing benefits of huperzine-A3

designing & starting polyphasic sleep

assisting lucid dreaming

reducing sleep time in general (better & less sleep)

investigating effects of n-backing:

do n-backing just before sleep, and see whether percentages shift (more deep sleep as the brain grows/changes?) or whether one sleeps better (fewer awakenings, less light sleep).

do n-backing after waking up, to look for correlation between good/bad sleeps and performance (one would expect good sleep → good scores).

test the costs of polyphasic sleep on memory4

(positive) effect of Seth Roberts’s one-legged standing on sleep depth/efficiency

possible sleep reductions due to meditation

serial cable uses:

quantifying meditation (eg. length of gamma frequencies)

rank music by distractibility?

measure focus over the day and during specific activities (eg. correlate frequencies against n-backing performance)

Measure negative effect of nicotine on sleep & determine appropriate buffer

test claims of sleep benefits from magnesium

I have tried to do my little self-experiments as well as I know how to, and hopefully my results are less bogus than the usual anecdotes one runs into online. What I would really like is for other people (especially Zeo owners) to replicate my results. To that end I have taken pains to describe my setups in complete detail so others can use it, and provided the data and complete R or Haskell programs used in analysis. If anyone replicates my results in any fashion, please contact me and I would be happy to link your self-experiment here!

First Impressions

First Night

Christmas morning, I unpacked it and admired the packaging, and then looked through the manual. The base-station/alarm-clock seems pretty sturdy and has a large clear screen. The headband seemed comfortable enough that it wouldn’t bother me. The various writings with it seemed rather fluffy and preppy, but I did my technical homework before hand, so could ignore their crap.

Late that night (quite late, since the girls stayed up playing Fable 3 and Xbox Kinect dancing games and what not), I turn in wearily. I had noticed that the alarm seemed to be set for ~3:30 AM, but I was very tired from the long day and taking my melatonin, and didn’t investigate further—I mean, what electronic would ship with the alarm both enabled and enabled for a bizarre time? It wasn’t worth bothering the other sleeper by turning on the light and messing with it. I put on the headband, verified that the Zeo seemed to be doing stuff, and turned in. Come 3 AM, and the damn music goes off! I hit snooze, too discombobulated to figure out how to turn off the alarm.

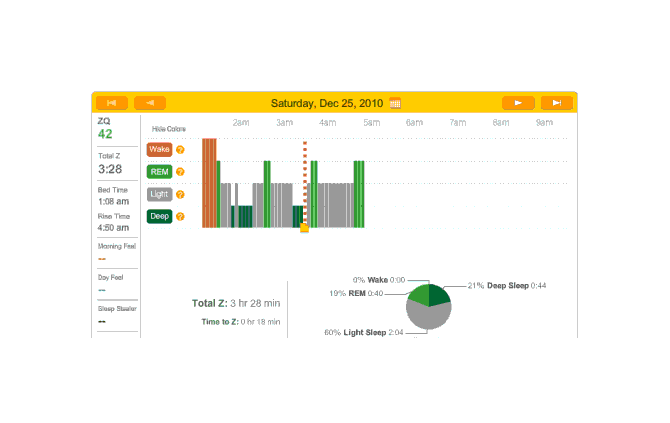

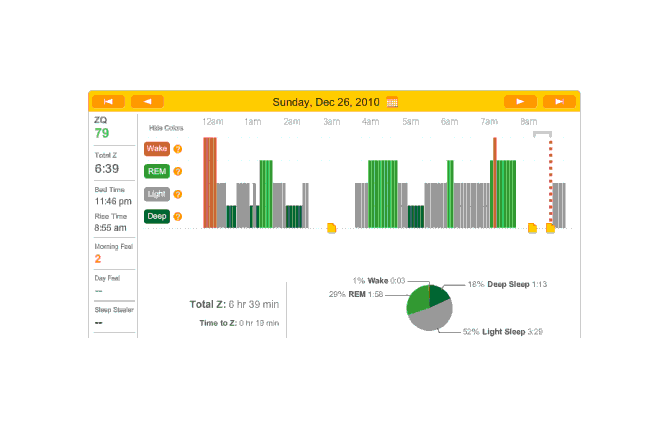

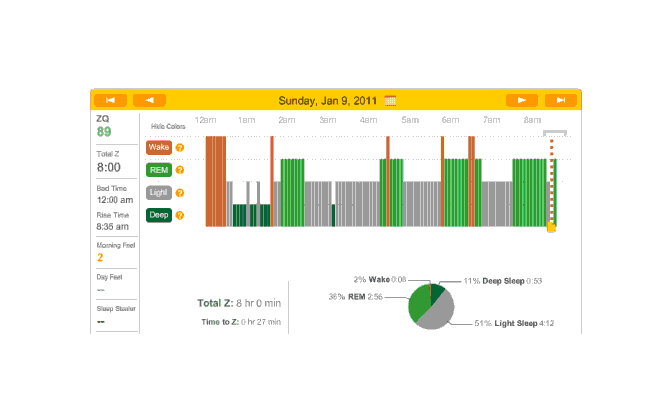

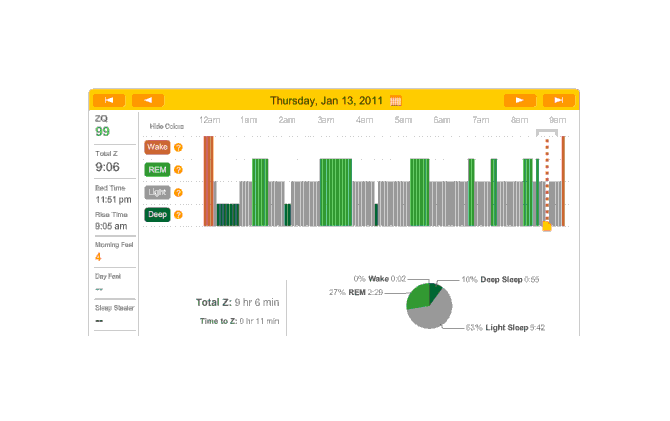

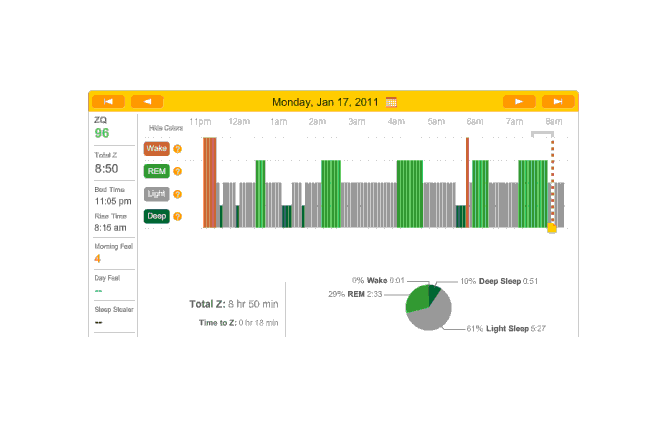

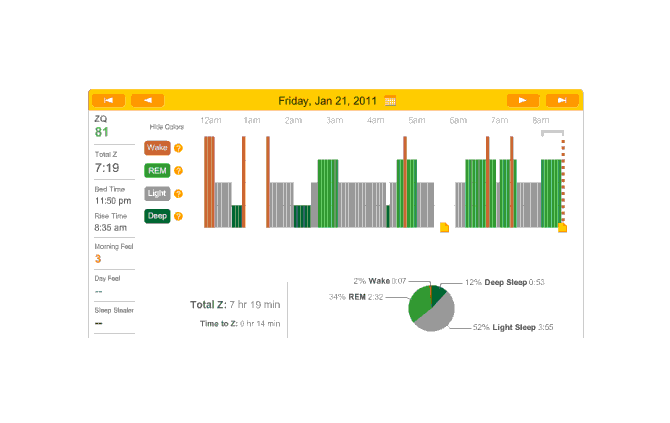

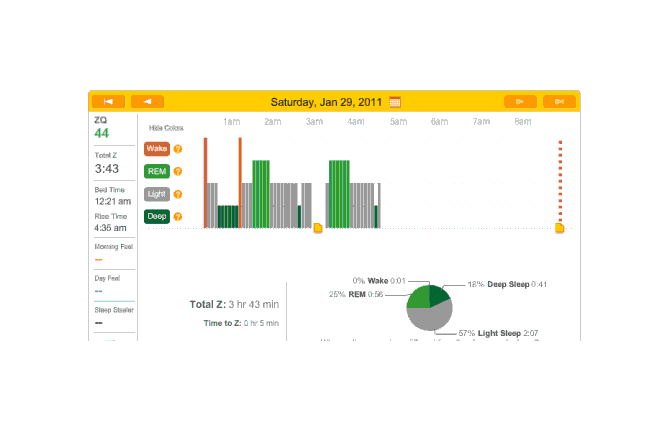

So that explains the strange Zeo data for the first day:

First night

The major surprise in this data was how quickly I fell asleep: 18 minutes. I had always thought that I took much longer to fall asleep, more like 45 minutes, and had budgeted accordingly; but apparently being deluded about when you are awake and asleep is common—which leads into an interesting philosophical point: if your memories disagree with the Zeo, who should you believe? The rest of the data seemed too messed up by the alarm to learn anything from.

Uses

Meditation

One possible application for Zeo was meditation. Most meditation studies are very small & methodologically weak, so it might be worthwhile to verify for oneself any interesting claims. If Zeo’s measuring via EEG, then presumably it’s learning something about how relaxed and activity-less one’s mind is. I’m not seeking enlightenment, just calmness, which would seem to be in the purview of an EEG signal. (As Charles Babbage said. errors made using insufficient data are still less than errors made using no data at all.) But alas, I meditated for a solid 25 minutes and the Zeo stubbornly read at the same wake level the entire time; I then read my Donald Keene book, Modern Japanese diaries, for a similar period with no change at all. It is possible that the 5-minute averaging (Zeo measures every 2 seconds) is hiding useful changes, but probably it’s simply not picking up any real differences. Oh well.

Smart Alarm

The second night I had set the alarm to a more reasonable time, and also enabled its smart alarm mode (“SmartWake”), where the alarm will go off up to 30 minutes early if you are ever detected to be awake or in light sleep (as opposed to REM or deep sleep). One thing I forgot to do was take my melatonin; I keep my supplements in the car and there was a howling blizzard outside. It didn’t bother me since I am not addicted to melatonin.

In the morning, the smart alarm mode seemed to work pretty well. I woke up early in a good mode, thought clearly and calmly about the situation—and went back to sleep. (It’s a holiday, after all.)

Replacing Headband

Around 2011-05-15, I gave up on the original headband—it was getting too dirty to get good readings—and decided to rip it apart to see what it was made of, and to order a new set of three for $54.34$352011 (which seems reasonable given the expensive material that the contacts are made of—silver fabric); they then cost $50. A little googling found me a coupon, FREESHIP, but apparently it only applied to the Zeo itself and so the pads were actually $62.1$402011, or ~$20.18$132011 a piece. I won’t say that buying replacement headbands semi-annually is something that thrills me, but $31.05$202011 a year for sleep data is a small sum. Certainly it’s more cost-effective than most of the nootropics I have used. (Full disclosure: 9 months after starting this page, Zeo offered me a free set of headbands. I used them and when the news broke about Zeo going out of business, I bought another set.)

The old headband, with electrical tape residue

/

/

Said headband with plastic removed; notice discoloration of metal despite cleaning

/

/

The new headband’s wrapper

/

/

In the future, I might try to make my own; eok.gnah claims that buying the silver fabric is apparently cheaper than ordering from Zeo, marciot reports success in making headbands, and it seems one can even hook up other sensors to the headband. Another alternative is, since the Zeo headband is a one-electrode EEG headset, to take an approach similar to the EEG people and occasionally add small dabs of conductive paste, since fairly large quantities are cheap (eg. 12oz for $45.71$302012). There was a disposable adhesive gel ECG electrodes with offset press-stud connections being experimented with by Zeo Inc, but they never entered wide use before it shut down.

One problem with the sensor mounted on the headband is that the lithium battery inside it can stop holding a charge. The casing is extremely difficult to open without damaging the circuitry or connections, and the battery inside is soldered to the circuit board:

An opened Zeo headband sensor

Once safely opened, the battery can be replaced by another one of similar size. For details, see the Quantified Self forum thread.

Melatonin

Before writing my melatonin advocacy article, I had used melatonin regularly for 6+ years, ever since I discovered (somewhen in high school or college) that it was useful for enforcing bedtimes and seemed to improve sleep quality; when I posted my writeup to LessWrong people were naturally a little skeptical of my specific claim that it improved the quality of my sleep such that I could reduce scheduled time by an hour or so. Now that I had a Zeo, wouldn’t it be a good idea to see whether it did anything, lo these many years later?

The following section represents 5 or 6 months of data (raw CSV data; guide to Zeo CSV). My basic dosage was 1.5mg of melatonin taken 0-30 minutes before going to sleep.

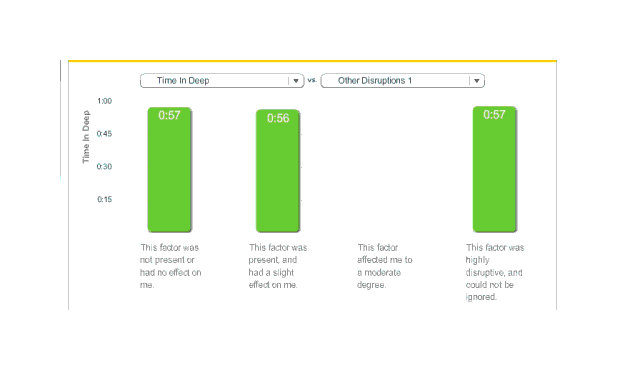

Graphic

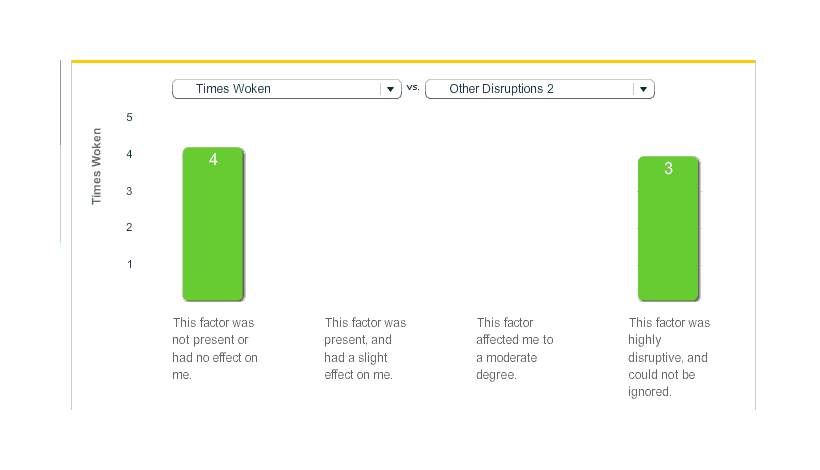

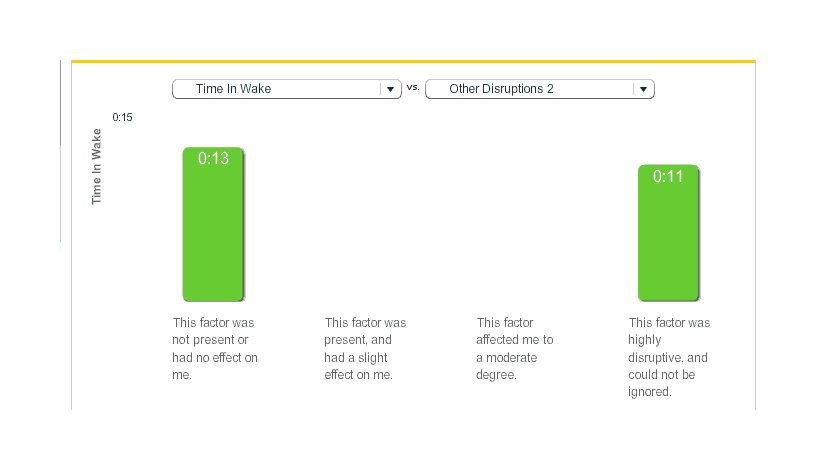

Deep sleep and ‘time in wake’ were both apparently unaffected; ‘time in wake’ apparently had too small a sample to draw much conclusion:

Melatonin, time in deep over five months,

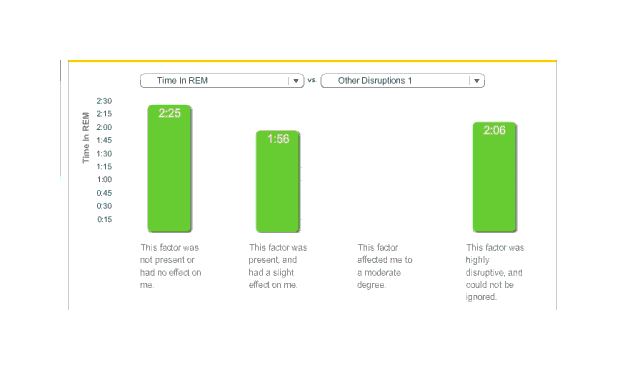

Surprisingly, total REM sleep fell:

Melatonin, time in REM

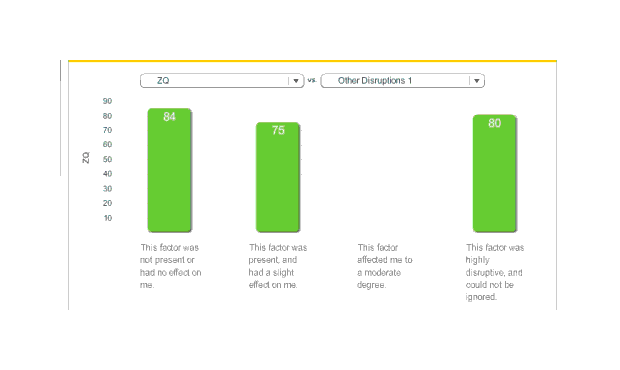

While the raw ZQ falls, the regression takes into account the correlated variables and indicates that this is something of an

Melatonin, ZQ

REM’s average fell by 29 minutes, deep sleep fell by 1 minute, but total sleep fell by 54 minutes; this implies that light sleep fell by 24 minutes. (The averages were 254.2 & 233.3) I am not sure what to make of this. While my original heuristic of a one hour reduction turns out to be surprisingly accurate, I had expected light and deep sleep to take most of the time hit. Do I get enough REM sleep? I don’t know how I would answer that.

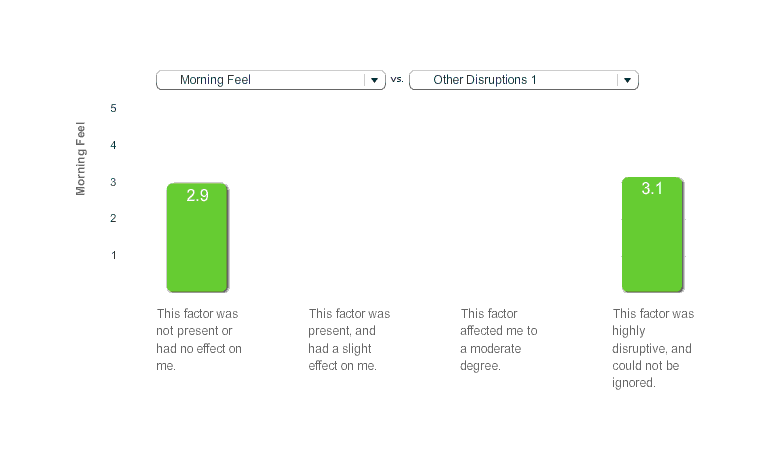

I did feel fine on the days after melatonin use, but I didn’t track it very systematically. The best I have is the ‘morning feel’ parameter, which the Zeo asks you on waking up; in practice I entered the values as: a ‘2’ means I woke feeling poor or unrested, ‘3’ was fine or mediocre, and ‘4’ was feeling good. When we graph the average of morning feel against melatonin use or non-use, we find that melatonin was noticeably better (2.95 vs 3.17):

Melatonin, Morning Feel

Graphing some more of the raw data:

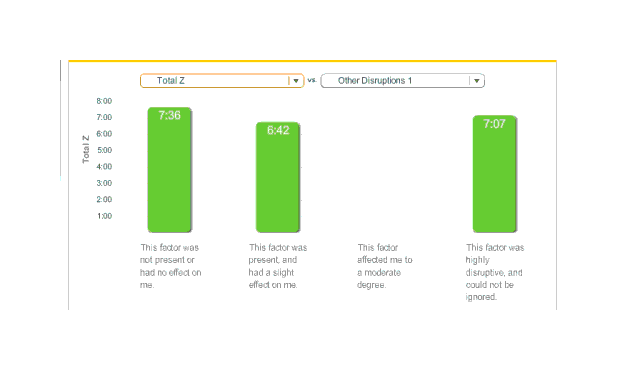

Melatonin, Total Time Asleep (Total Z)

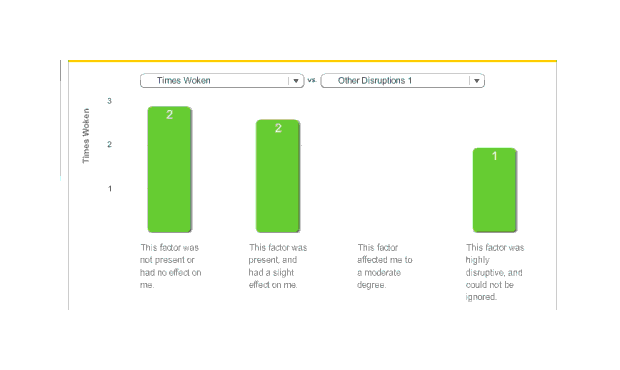

Melatonin, Times Woken per Night

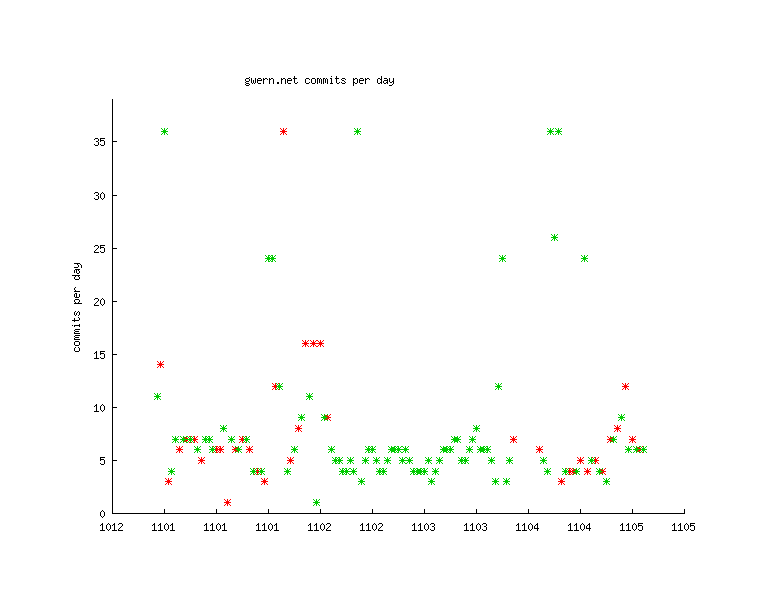

Unfortunately, during this period, I didn’t regularly do my n-backing either, so there’d be little point trying to graph that. What I spent a lot of my free time doing was editing Gwern.net, so it might be worth looking at whether nights on melatonin correspond to increased edits the next day. In this graph of edits, the red dots are days without melatonin and the green are days with melatonin; I don’t see any clear trend, although it’s worth noting almost all of the very busy days were melatonin days:

Days versus # of edits versus melatonin on/off

Melatonin Analysis

The data is very noisy (especially towards the end, perhaps as the headband got dirty) and the response variables are intercorrelated which makes interpretation difficult, but hopefully the overall conclusions from the multivariate linear analysis are not entirely untrustworthy. Let’s look at some average. Zeo’s website lets you enter in a 3-valued variable and then graph the average day for each variable against a particular recorded property like ZQ or total length of REM sleep. I defined one dummy variable, and decided that a ‘0’ would correspond to not using melatonin, ‘1’ would correspond to using it, and ‘2’ would correspond to using a double-dose or more (on the rare occasions I felt I needed sleep insurance). The following additional NHST-style5 analyses of p-values is done by importing the CSV into R; given all the issues with self-experimentation (these melatonin days weren’t even blinded), the p-values should be treated as gross guesses, where <0.01 indicates I should take it seriously, <0.05 is pretty good, <0.10 means I shouldn’t sweat it, and anything bigger than 0.20 is, at most, interesting while >0.5 means ignore it; we’ll also look at correcting for multiple comparisons6, for the heck of it. A mnemonic: p-values are about whether the effect exists, and d-values are whether we care. For a visualization of effect sizes, see “Windowpane as a Jar of Marbles”.

The analysis session in the R interpreter:

# Read in data w/ variable names in header; uninteresting columns deleted in OpenOffice.org

zeo <- read.csv("https://gwern.net/doc/zeo/2011-zeo-melatonin.csv")

# "Melatonin" was formerly "SSCF 10";

# I also edited the CSV to convert all '3' to '1' (& so a binary)

l <- lm(cbind(ZQ, Total.Z, Time.to.Z, Time.in.Wake, Time.in.REM,

Time.in.Deep, Awakenings, Morning.Feel, Time.in.Light)

~ Melatonin, data=zeo)

summary(manova(l))

# Df Pillai approx F num Df den Df Pr(>F)

# Melatonin 1 0.102 0.717 9 57 0.69

# Residuals 65

summary(l)

# Response ZQ :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 83.52 4.13 20.21 <2e-16

# Melatonin 2.43 4.99 0.49 0.63

#

# Response Total.Z :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 452.38 22.86 19.79 <2e-16

# Melatonin 9.68 27.59 0.35 0.73

#

# Response Time.to.Z :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 19.48 2.59 7.52 2.1e-10

# Melatonin -5.04 3.13 -1.61 0.11

#

# Response Time.in.Wake :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 7.095 1.521 4.66 1.6e-05

# Melatonin -0.247 1.836 -0.13 0.89

#

# Response Time.in.REM :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 144.62 9.38 15.41 <2e-16

# Melatonin -3.73 11.32 -0.33 0.74

#

# Response Time.in.Deep :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 54.33 3.26 16.68 <2e-16

# Melatonin 5.56 3.93 1.41 0.16

#

# Response Awakenings :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 3.095 0.524 5.90 1.4e-07

# Melatonin -0.182 0.633 -0.29 0.77

#

# Response Morning.Feel :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 2.952 0.142 20.78 <2e-16

# Melatonin 0.222 0.171 1.29 0.2

#

# Response Time.in.Light :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 253.86 13.59 18.68 <2e-16

# Melatonin 7.93 16.40 0.48 0.63The MANOVA indicates no statistically-significant difference between the groups of days, taking all variables into account (p = 0.69). To summarize the regression:

Variable |

Correlate/Effect |

p-value |

Coefficient’s sign is… |

|---|---|---|---|

|

5.04 |

0.11 |

better |

|

0.18 |

0.77 |

better |

|

0.25 |

0.89 |

better |

|

.56 |

0.16 |

better |

|

.93 |

0.63 |

worse |

|

3.73 |

0.74 |

worse |

|

9.68 |

0.73 |

better |

|

2.43 |

0.63 |

better |

|

.22 |

0.20 |

better |

Part of the problem is that too many days wound up being useless, and each day costs us information and reduces our true sample size. (None of the metrics are strong enough to survive multiple correction7, sadly.)

And also unfortunately, this dataseries doesn’t distinguish between addition to melatonin or benefits from melatonin—perhaps the 3.2 is my ‘normal’ sleep quality and the 2.9 comes from a ‘withdrawal’ of sorts. The research on melatonin doesn’t indicate any addiction effect, but who knows?

If I were to run further experiments, I would definitely run it double-blind, and maybe even test <1.5mg doses as well to see if I’ve been taking too much; 3mg turned out to be excessive, and there are one or two studies indicating that <1mg doses are best for normal people. I wound up using 1.5mg doses. (There could be 3 conditions: placebo, 0.75mg, and 1.5mg. For looking at melatonin effect in general, the data on 2 dosages could be combined. Melatonin has a short half-life, so probably there would be no point in random blocks of more than 2–3 days8: we can randomize each day separately and assume that days are independent of each other.)

Worth comparing are Jayson Virissimo’s preliminary results:

According to the preliminary [Zeo] data, while on melatonin, I seemed to get more total sleep, more REM sleep, less deep sleep, and wake up about the same number of times each night. Because this isn’t enough data to be very confident in the results, I plan on continuing this experiment for at least another 4 months (2 on and 2 off of melatonin) and will analyze the results for the [statistical] significance and magnitude of the effects (if there really are any) while throwing out the outliers (since my sleep schedule is so erratic).

Value of Information (VoI)

See also the discussion as applied to ordering modafinil and testing nootropics

We all know it’s possible to spend more time figuring out how to “save time” on a task than we would actually save time like rearranging books on a shelf or cleaning up in the name of efficiency (xkcd even has a cute chart listing the break-even points for various possibilities,“Is It Worth The Time?”), and similarly, it’s possible to spend more money trying to “save money” than one would actually save; less appreciated is that the same thing is also possible to do with gaining information.

The value of an experiment is the information it produces. What is the value of information? Well, we can take the economic tack and say value of information is the value of the decisions it changes. (Would you pay for a weather forecast about somewhere you are not going to? No. Or a weather forecast about your trip where you have to make that trip, come hell or high water? Only to the extent you can make preparations like bringing an umbrella.)

Wikipedia says that for a risk-neutral person, value of perfect information is “value of decision situation with perfect information”—“value of current decision situation”. (Imperfect information is just weakened perfect information: if your information was not 100% reliable but 99% reliable, well, that’s still worth a lot.)

The decision is the binary take or not take. Melatonin costs ~$15.01$102013 a year (if you buy in bulk during sales, as I did). Suppose I had perfect information it worked; I would not change anything, so the value is $0. Suppose I had perfect information it did not work; then I would stop using it, saving me $15.01$102013 a year in perpetuity, which has a net present value9 (at 5% discounting) of $307.72$2052013. So the best-case value of perfect information—the case in which it changes my actions—is $307.72$2052013, because it would save me from blowing $15.01$102013 every year for the rest of my life. My melatonin experiment is not perfect since I didn’t randomize or double-blind it, but I had a lot of data and it was well powered, with something like a >90% chance of detecting the decent effect size I expected, so the imperfection is just a loss of 10%, down to $276.2$1842013. From my previous research and personal use over years, I am highly confident it works—say, 80%10 . If the experiment says melatonin works, the information is useless to me since I continue using melatonin, and if the experiment says it doesn’t, then let’s assume I decide to quit melatonin11 and then save $15.01$102013 a year or $276.2$1842013 total. What’s the expected value of obtaining the information, given these two outcomes? (0.80 × 0) + (0.20 × 184) = 36.8. Or another way, redoing the net present value: (10 − 0) ⧸ ln 1.05 × 0.9 × 0.2. At minimum wage opportunity cost of $10.51$72013 an hour, $55.24$36.82013 is worth 5.25 hours of my time. I spent much time on screenshots, summarizing, and analysis, and I’d guess I spent closer to 10–15 hours all told.

This worked out example demonstrates that when a substance is cheap and you are highly confident it works, a long costly experiment may not be worth it. (Of course, I would have done it anyway due to factors not included in the calculation: to try out my Zeo, learn a bit about sleep experimentation, do something cool, and have something neat to show everyone.)

Melatonin Data

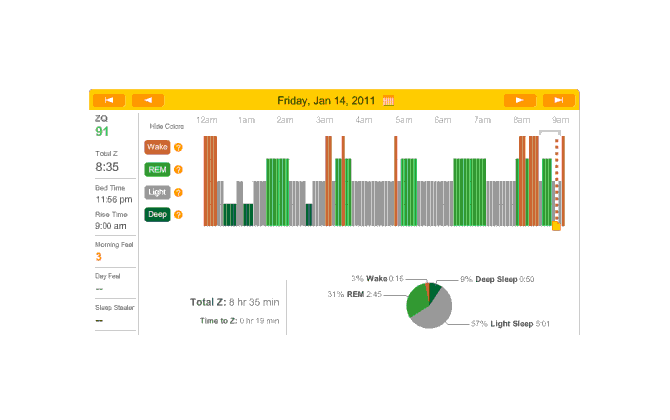

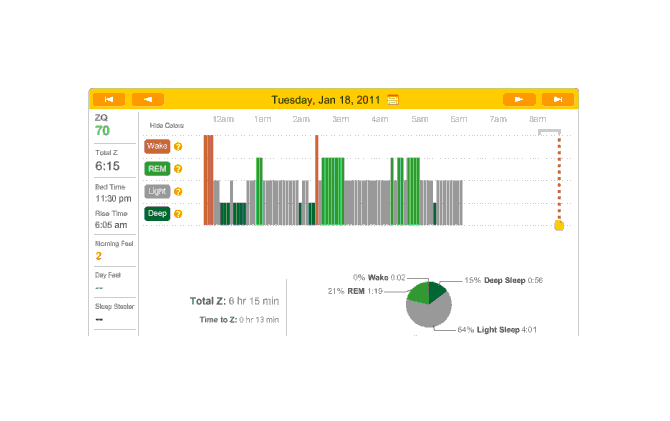

The data looked much better than the first night, except for a big 2-hour gap where I vaguely recall the sensor headband having slipped off. (I don’t think it was because it was uncomfortable but due to shifting positions or something.) Judging from the cycle of sleep phases, I think I lost data on a REM peak. The REM peaks interest me because it’s a standard theory of polyphasic sleeping that thriving on 2 or 3 hours of sleep a day is possible because REM (and deep sleep) is the only phase that truly matters, and REM can dominate sleep time through REM rebound and training.

Second night

Besides that, I noticed that time to sleep was 19 minutes that night. I also had forgotten to take my melatonin. Hmm…

Since I’ve begun this inadvertent experiment, I’ll try continuing it, alternating days of melatonin usage. I claim in my melatonin article that usage seems to save about 1 hour of sleep/time, but there’s several possible avenues. One could be quicker to fall asleep; one could awake fewer times; and one could have greater percentage of REM or deep sleep, reducing light sleep. (Light sleep doesn’t seem very useful; I sometimes feel worse after light sleep.)

During the afternoon, I took a quick nap. I’m not a very good napper, it seems—only the first 5 minutes registered as even light sleep.

A dose of melatonin (1.5mg) and off to bed a bit early. I’m a little more impressed with the smart alarm; since I’m hard-of-hearing and audio alarms rarely if ever work, I usually use a Sonic Alert vibrating alarm clock. But in the morning I woke up within a minute of the alarm, despite the lack of vibration or flashing lights. (The chart doesn’t reflect this, but as a previous link says, distinguishing waking from sleeping can be difficult and the transitions are the least trustworthy parts of the data.)

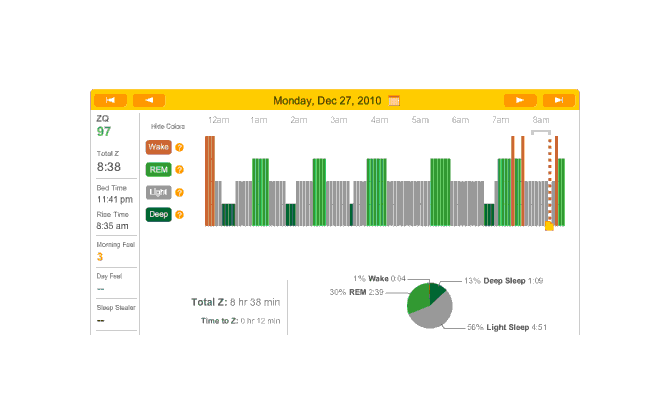

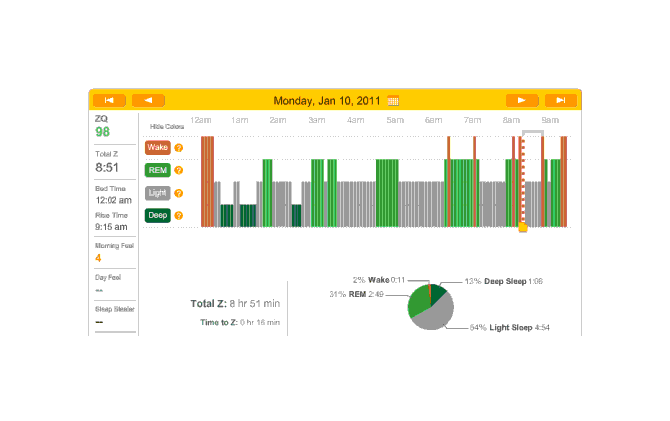

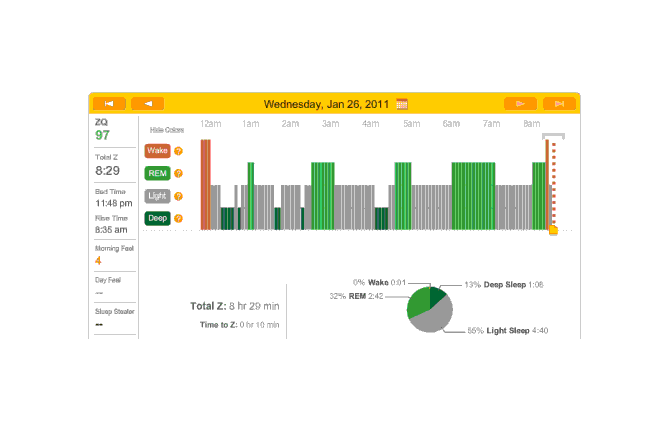

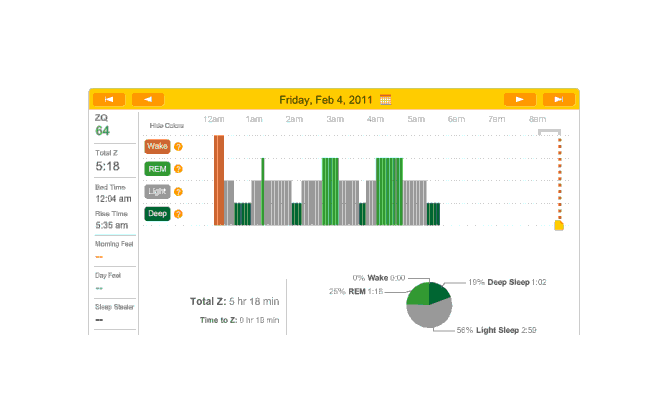

The data was especially good today, with no big gaps:

2010-12-27 ZQ sleep logs

You can see an impressively regular sleep cycle, cycling between REM and light sleep. What’s disturbing is the relative lack of deep sleep—down 4-5% (and there wasn’t a lot to begin with). I suspect that the lack of deep sleep indicates I wasn’t sleeping very well, but not badly enough to wake up, and this is probably due either to light from the Zeo itself—I only figured out how to turn it off a few days later—or my lack of regular blankets and use of a sleeping bag. But the awakenings around 4-6 AM and on other days has made me suspicious that one of the cats is bothering me around here and I’m just forgetting it as I fall asleep.

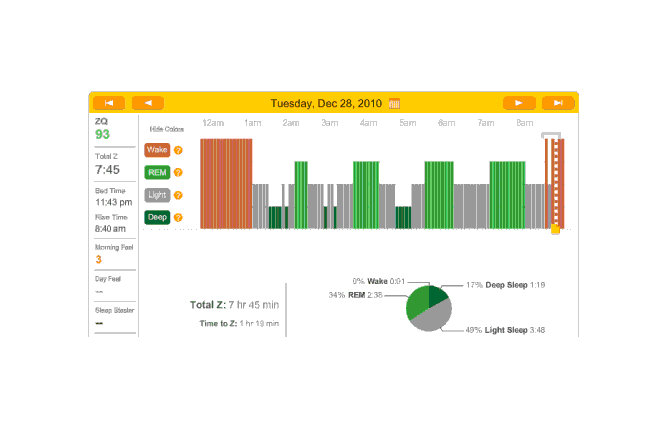

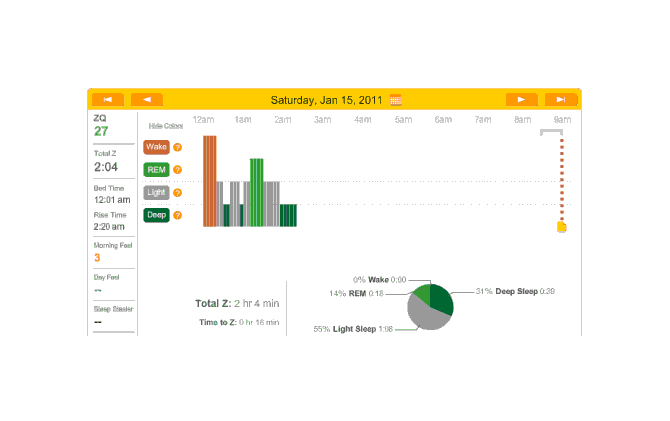

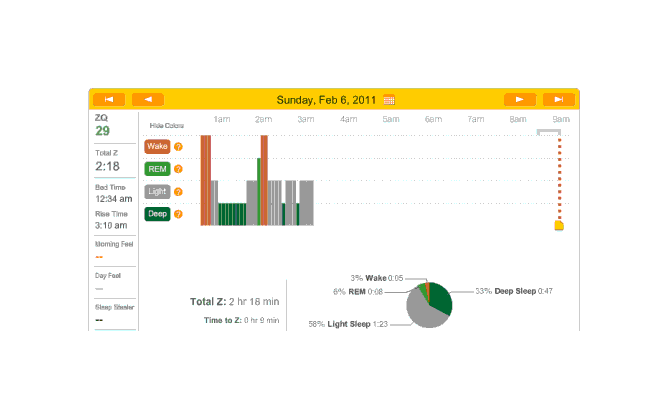

The next night is another no-melatonin night. This time it took 79 minutes to fall asleep. Very bad, but far from unprecedented; this sort of thing is why I was interested in melatonin in the first place. Deep sleep is again limited in dispersion, with a block at the beginning and end, but mostly a regular cycle between light and REM:

2010-12-28 ZQ sleep logs

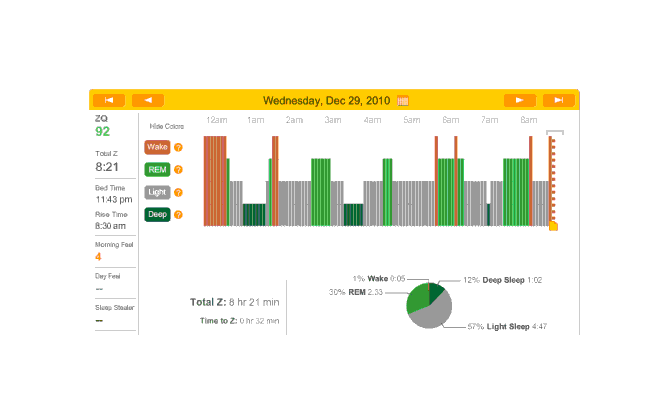

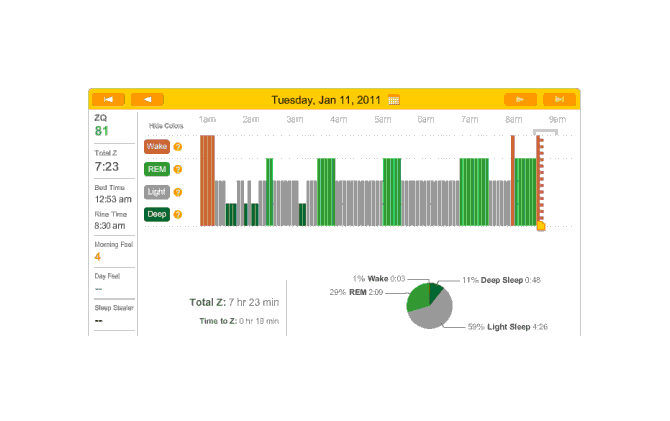

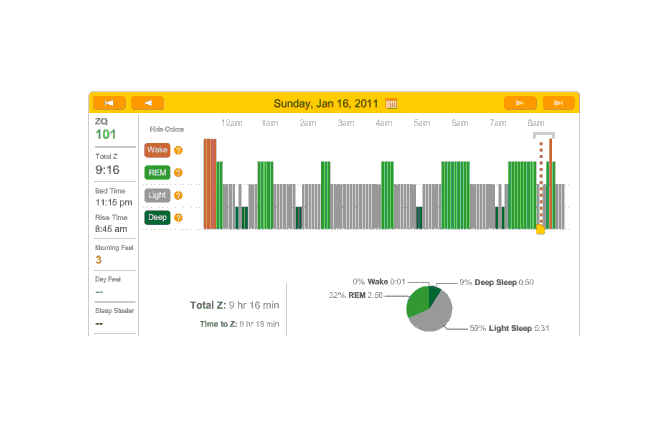

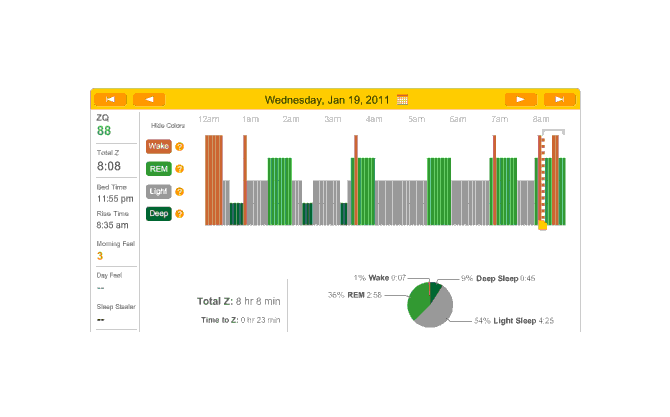

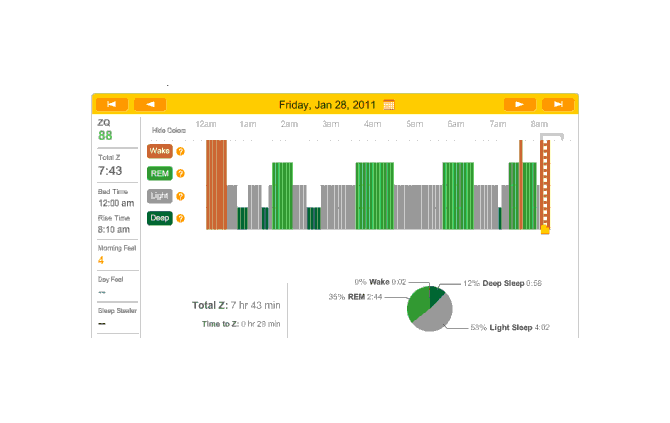

Melatonin night, and 32 minutes to sleep. (I’m starting to notice a trend here.) Another fairly regular cycle of phases, with some deep sleep at the beginning and end; 32 minutes to fall asleep isn’t great but much better than 79 minutes.

2010-12-29 ZQ sleep logs

Perhaps I should try a biphasic schedule where I sleep for an hour at the beginning and end? That’d seem to pick up most of my deep sleep, and REM would hopefully take care of itself with REM rebound. Need to sum my average REM & deep sleep times (that sum seems to differ quite a bit, eg. one fellow needs 4+ hours. My own need seems to be similar) so I don’t try to pick a schedule doomed to fail.

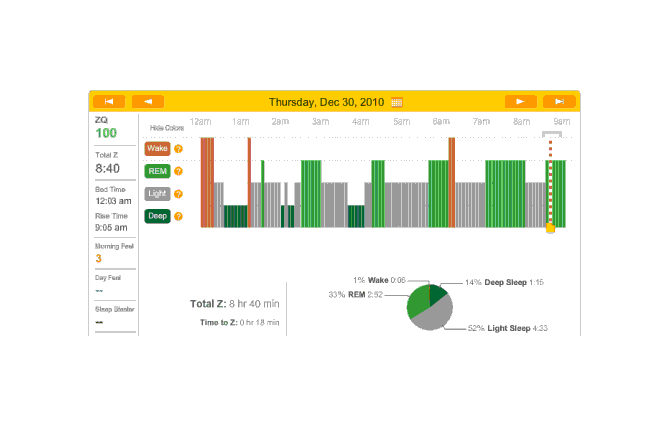

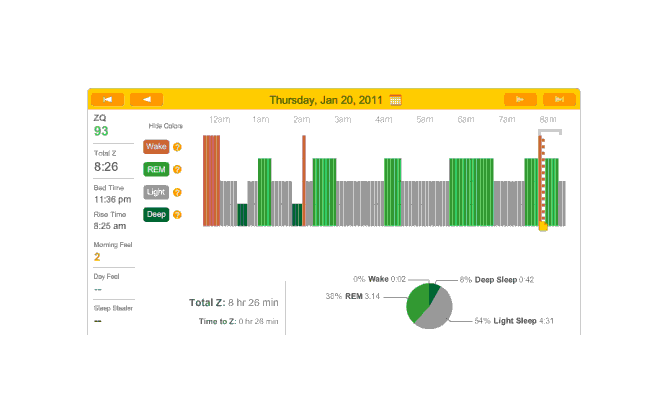

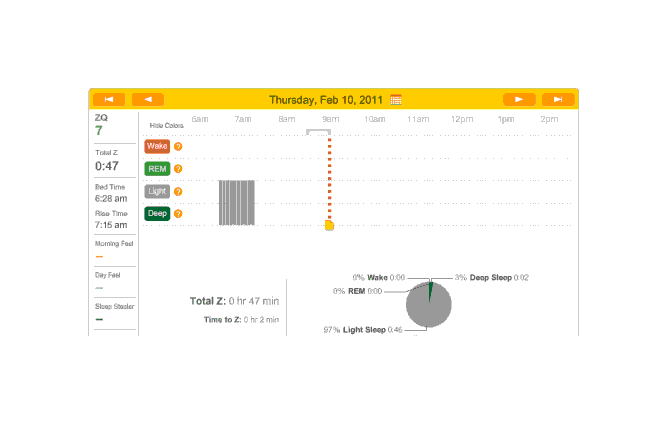

Another night, no melatonin. Time to sleep, just 18 minutes and the ZQ sets a new record even though my cat Stormy woke me up in the morning12:

2010-12-30 ZQ sleep logs

I personally blame this on being exhausted from 10 hours working on my transcription of The Notenki Memoirs. But a data point is a data point.

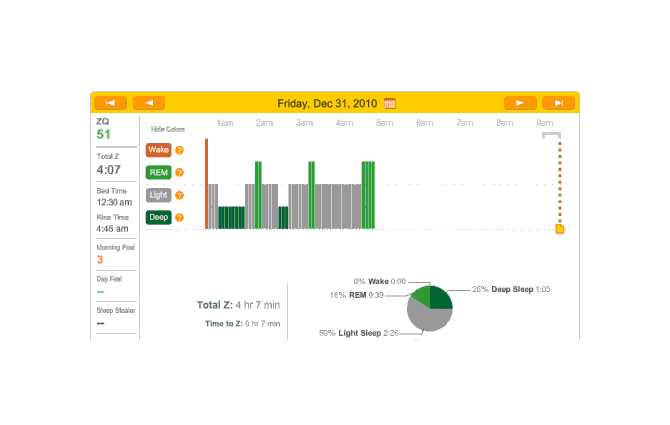

I spend New Year’s Eve pretty much finishing The Notenki Memoirs (transcribing the last of the biographies, the round-table discussion, and editing the images for inclusion), which exhausts me a fair bit as well; the champagne doesn’t help, but between that and the melatonin, I fall asleep in a record-setting 7 minutes. Unfortunately, the headband came off somewhere around 5 AM:

2010-12-31 ZQ sleep logs

A cat? Waking up? Dunno.

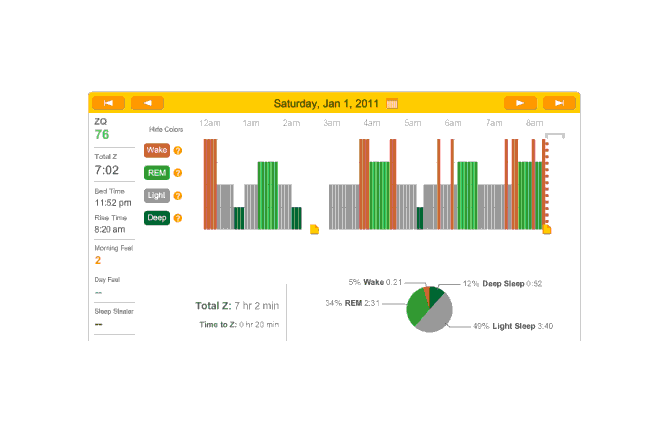

Another relatively quick falling asleep night at 20 minutes. Which then gets screwed up as I simply can’t stay asleep and then the cat begins bothering the heck out of me in the early morning:

2011-01-01 ZQ sleep logs

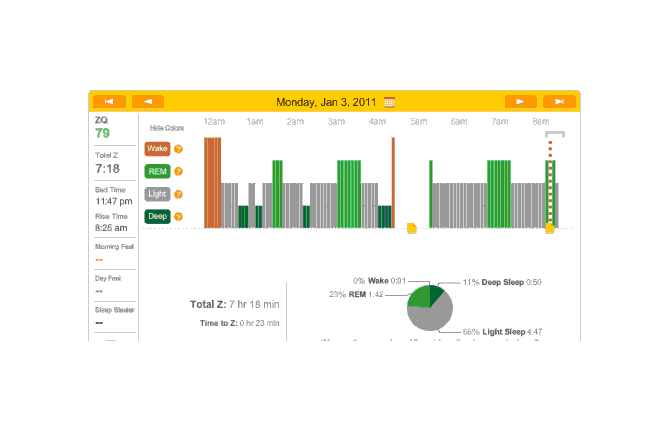

Melatonin night, which subjectively didn’t go too badly; 20 minutes to sleep. But lots of wake time (long enough wakes that I remembered them) and 2 or 3 hours not recorded (probably from adjusting my scarf and the headband):

2011-01-03 ZQ sleep logs

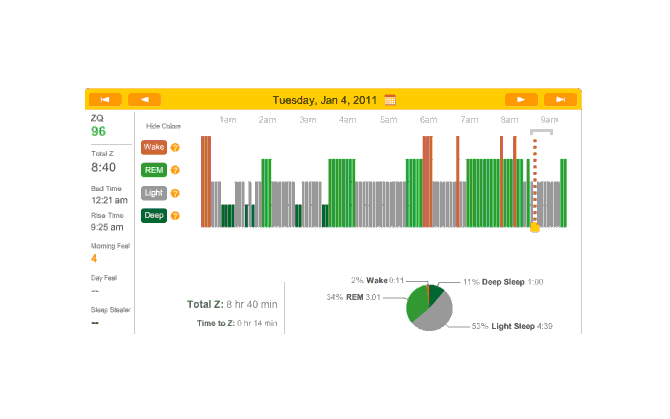

Accidentally did another melatonin night (thought Monday was a no-melatonin night). Very good sleep—set records for REM especially towards the late morning which is curious. (The dreams were also very curious. I was an Evangelion character (Kaworu) tasked with riding that kind of carnival-like ride that goes up and drops straight down.) Also another quick falling asleep:

2011-01-04 ZQ sleep logs

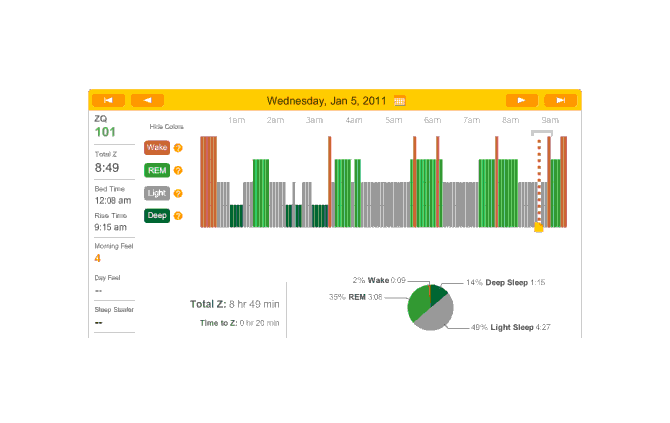

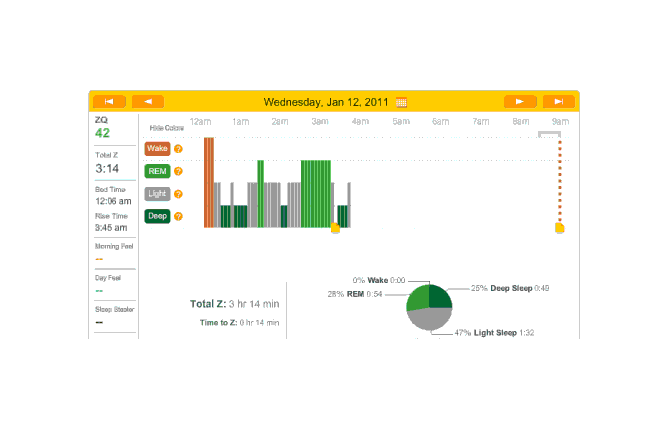

Rather than 3 melatonin nights in a row, I skipped melatonin this night (and thus will have it the next one). Perhaps because I went to sleep so very late, and despite some awakenings, this was a record-setting night for ZQ and TODO deep sleep or REM sleep? :

2011-01-05 ZQ sleep logs

I also switched the alarm sounds 2 or 3 days ago to ‘forest’ sounds; they seem somewhat more pleasant than the beeping musical tones. The next night, data is all screwed up. What happened there? It didn’t even record the start of the night, though it seemed to be active and working when I checked right before going to sleep. Odd.

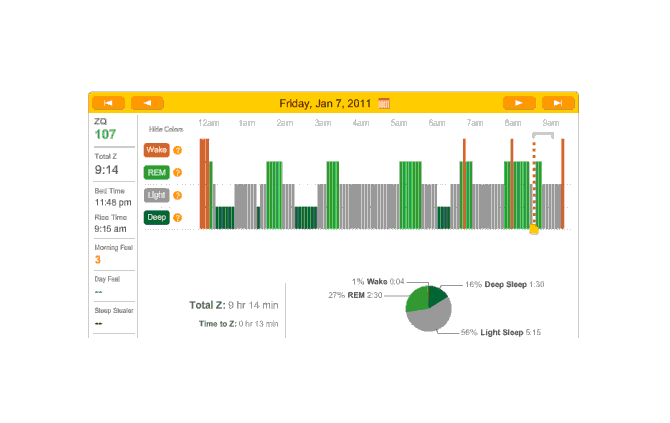

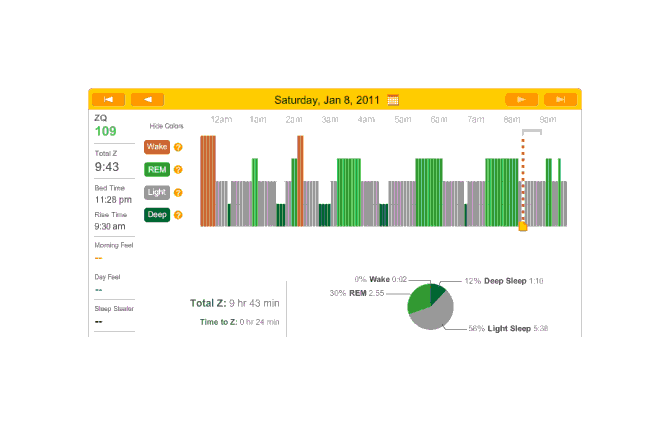

Next 2 days aren’t very interesting; first is no-melatonin, second is melatonin:

2011-01-07 ZQ sleep logs

2011-01-08 ZQ sleep logs

Off

On

Off

One of my chief Zeo complaints was the bright blue-white LCD screen. I had resorted to turning the base station over and surrounding it with socks to block the light. Then I looked closer at the labels for the buttons and learned that the up-down buttons changed the brightness and the LCD screen could be turned off. And I had read the part of the manual that explained that. D’oh!

On

?

Off

Off (forgot)

On

Off

On

Off

On

Off

Off, but no data on the 22nd. No idea what the problem is—the headset seems to have been on all night.

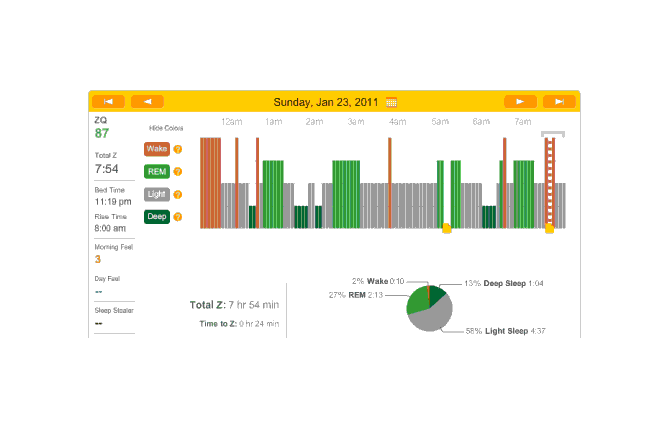

On with a double-dose of melatonin because I was going to bed early; as you can see, didn’t work:

2011-01-23 ZQ sleep logs

Off, no data on the 24th. On, no data on the 25th. I don’t know what went wrong on these two nights.

Off

The 27th (on for melatonin) yielded no data because, frustratingly, the Zeo was printing a ‘write-protected’ error on its screen; I assumed it had something to do with uploading earlier that day—perhaps I had yanked it out too quickly—and put it back in the computer, unmounted and went to eject it. But the memory card splintered on me! It was stuck and the end was splintering and little needles of plastic breaking off. I couldn’t get it out and gave up. The next day (I slept reasonably well) I went back with a pair of needle-nose pliers. I had a backup memory card. After much trial and error, I figured out the card had to be FAT-formatted and have a directory structure that looked like ZEO/ZEOSLEEP.DAT. So that’s that.

Off

On

30: on

31: off

1: on

2: off

3: on

Unfortunately, this night continues a long run of no data. Looking back, it doesn’t seem to have been the fault of the new memory card, since some nights did have enough data for the Zeo website to generate graphs. I suspect that the issue is the pad getting dirty after more than a month of use. I hope so, anyway. I’ll look around for rubbing alcohol to clean it. That night initially starts badly—the rubbing alcohol seemed to do nothing. After some messing around, I figure out that the headband seems to have loosened over the weeks and so while the sensor felt reasonably snug and tight and was transmitting, it wasn’t snug enough. I tighten it considerably and actually get some decent data:

Off

5: on

Off

7: on

8: off

9: on

Off

11: on?

The previous night, I began paying closer attention to when it was and was not reading me (usually the latter). Pushing hard on it made it eventually read me, but tightening the headband hadn’t helped the previous several nights. Pushing and not pushing, I noticed a subtle click. Apparently the band part with the metal sensor pad connects to the wireless unit by 3 little black metal nubs; 2 were solidly in place, but the third was completely loose. Suspicious, I try pulling on the band without pushing on the wireless unit—leaving the loose connection loose. Sure enough, no connection was registered. I push on the unit while loosing the headband—and the connection worked. I felt I finally had solved it. It wasn’t a loose headband or me pulling it off at night or oils on the metal sensors or a problem with the SD card. I was too tired to fix it when I had the realization, but resolved the next morning to fix it by wrapping a rubber band around the wireless unit and band. This turned out to not interfere with recharging, and when I took a short nap, the data looked fine and gapless. So! The long data drought is hopefully over.

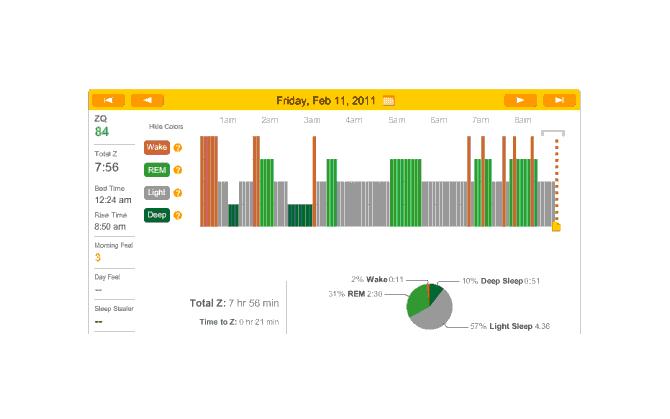

2011-02-11 ZQ sleep logs

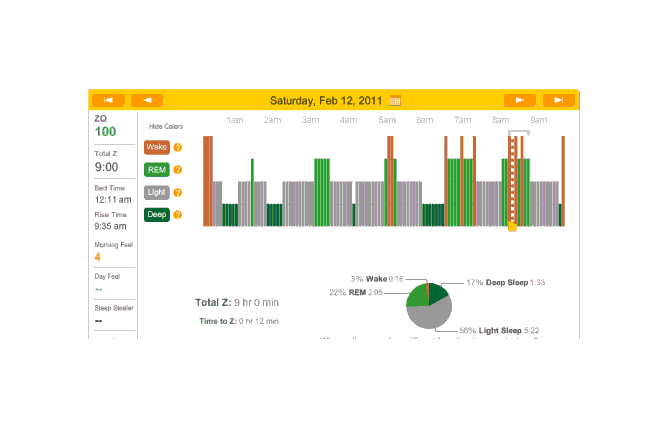

Off

On

Off

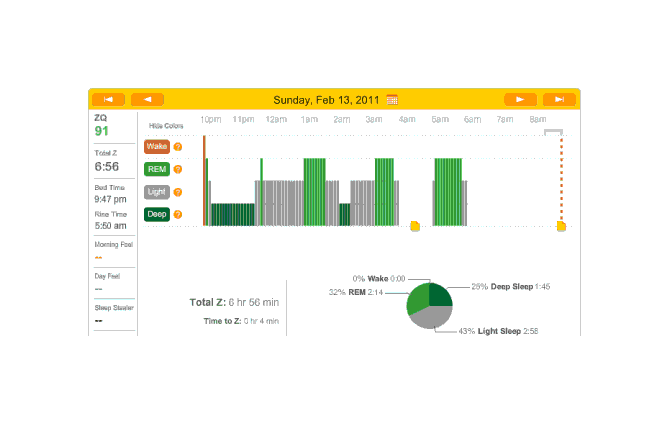

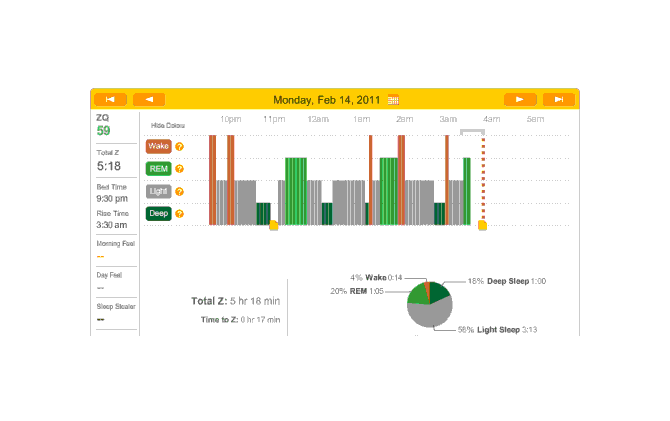

On the 15th of February, I had a very early flight to San Francisco. That night and every night from then on, I was using melatonin, so we’ll just include all the nights for which any sensible data was gathered. Oddly enough, the data and ZQs seem bad (as one would expect from sleeping on a couch), but I wake up feeling fairly refreshed. By this point we have the idea how the sleep charts work, so I will simply link them rather than display them.

Then I took a long break on updating this page; when I had a month or two of data, I uploaded to Zeo again, and buckled down and figured out how to have ImageMagick crop pages. The shell script (for screenshots of my browser, YMMV) is for file in *.png; do mogrify +repage -crop 700x350+350+285 $file; done;

General observations: almost all these nights were on melatonin. Not far into this period, I realized that the little rubber band was not working, and I hauled out my red electrical tape and tightened it but good; and again, you can see the transition from crappy recordings to much cleaner recordings. The rest of February:

March:

April:

April 4th was one of the few nights that I was not on melatonin during this timespan; I occasionally take a weekend and try to drop all supplements and nootropics besides the multivitamins and fish oil, which includes my melatonin pills. This night (or more precisely, that Sunday evening) I also stayed up late working on my computer, getting in to bed at 12:25 AM. You can see how well that worked out. During the 2 AM wake period, it occurred to me that I didn’t especially want to sacrifice a day to show that computer work can make for bad sleep (which I already have plenty of citations for in the Melatonin essay), and I gave in, taking a pill. That worked out much better, with a relatively normal number of wakings after 2 AM and a reasonable amount of deep & REM sleep.

Exercise

One-Legged Standing

Seth Roberts found that for him, standing a lot helped him sleep. This seems very plausible to me—more fatigue to repair, closer to ancestral conditions of constant walking—and tallied with my own experience. (One summer I worked at a summer camp, where I spent the entire day on my feet; I always slept very well though my bunk was uncomfortable.) He also found that stressing his legs by standing on one at a time for a few minutes also helped him sleep. That did not seem as plausible to me. But still worth trying: standing is free, and if it does nothing, at least I got a little more exercise.

Roberts tried a fairly complicated randomized routine. I am simply alternating days as with melatonin (note that I have resumed taking melatonin every day). My standing method is also simple; for 5 minutes, I stand on one leg, rise up onto the ball of my foot (because my calves are in good shape), and then sink down a foot or two and hold it until the burning sensation in my thigh forces me to switch to the other leg. (I seem to alternate every minute.) I walk my dog most every day, so the effect is not as simple as ‘some moderate exercise that day’; in the next experiment, I might try 5 minutes of dumbbell bicep curls instead.

One-Legged Standing Analysis

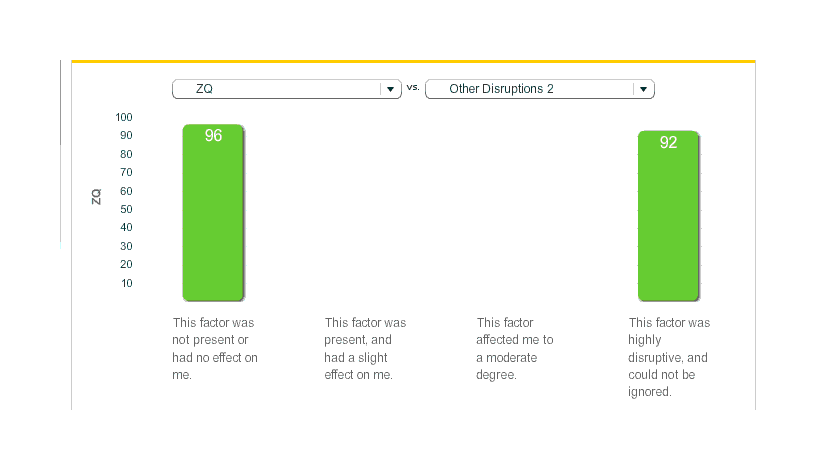

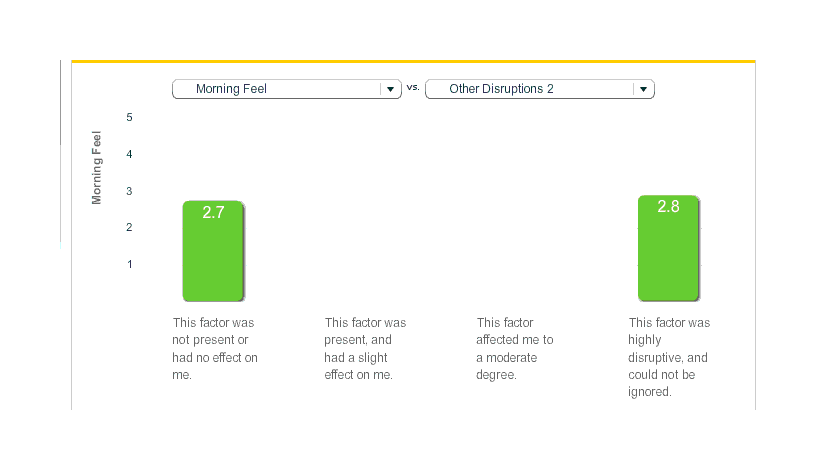

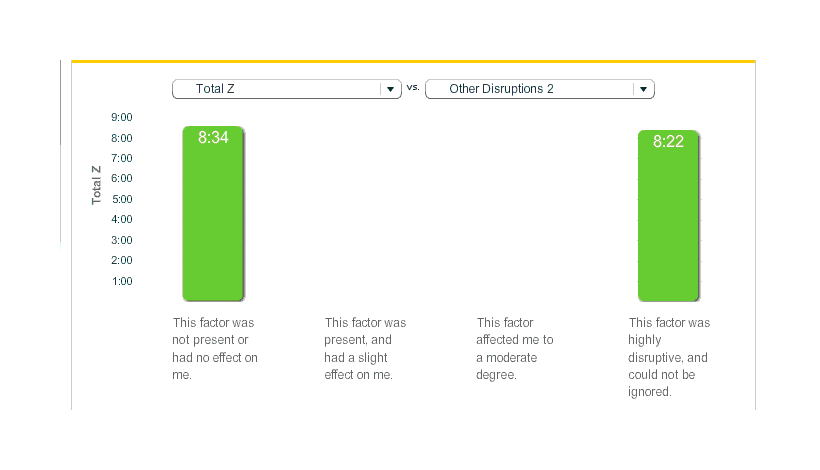

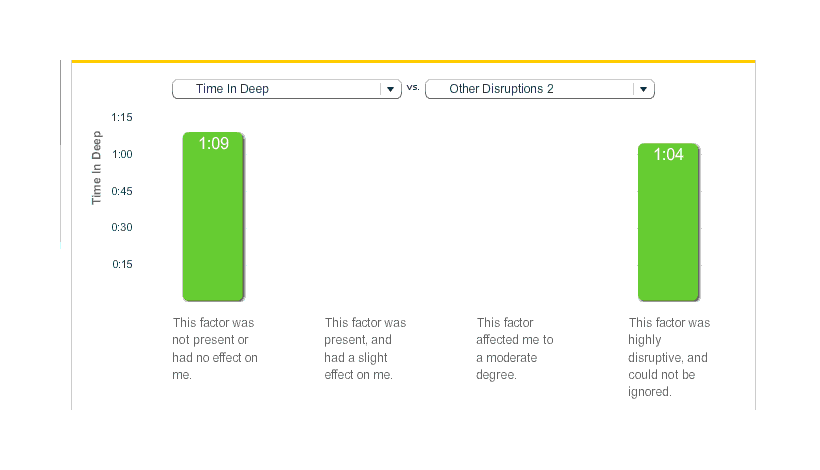

The initial results were promising. Of the first 5 days, 3 are ‘on’ and 2 are off; all 3 on-days had higher ZQs than the 2 off-days. Unfortunately, the full time series did not seem to bear this out. Looking at the ~70 recorded days between 2011-06-11 and 2011-08-27 (raw CSV data), the raw uncorrected averages looked like this (as before, the ‘3’ means the intervention was used, ‘0’ that it was not):

Standing ZQ vs non-standing

Morning feel rating

Total sleep time

Total deep sleep time

Total REM sleep time

Number of times woken

Total time awake

R analysis, using multivariate linear regression13 turns in a non-statistically-significant value for one-leggedness in general (p = 0.23); by variable:

Variable |

Effect |

p-value |

Coefficient’s sign is… |

|---|---|---|---|

|

-1.24 |

0.16 |

worse |

|

-4.09 |

0.37 |

worse |

|

0.47 |

0.51 |

worse |

|

-0.37 |

0.80 |

better |

|

-5.33 |

0.02 |

worse |

|

2.76 |

0.38 |

worse |

|

-1.56 |

0.10 |

worse |

|

-0.05 |

0.79 |

better |

|

-0.05 |

0.32 |

worse |

No p-values survived multiple-correction14:.

While I did not replicate Roberts’s setup exactly in the interest of time and ease, and it was not blinded, I tried to compensate with an unusually large sample: 69 nights of data. This was a mixed experiment: there seems to be an negative effect, but none of the changes seem to have large effect sizes or strong p-values.

The one-legged standing was not in exclusion to melatonin use, but I had used it most every night. I thought I might go on using one-legged standing, perhaps skipping it on nights when I am up particularly late or lack the willpower, but I’ve abandoned it because it is a lot of work to use and the result looked weak. In the future, I should look into whether walks before bedtime help.

Vitamin D

Potassium

LSD Microdosing

Alcohol

Suspicious that alcohol was delaying my sleep and worsening my sleep when I did finally go to bed, I recorded my alcohol consumption for a year. Correlating alcohol use against when I go to bed shows no interesting correlation, nor with any of the other sleep variables Zeo records, even after correcting for a shift in my sleep patterns over that year. So it would seem I was wrong.

In May 201313ya, I began to wonder if alcohol was damaging my sleep; I don’t drink alcohol too often and never more than a glass or two, so I don’t have any tolerance built up. I noticed that on nights when I drank some red wine or had some of my mead, it seemed to take me much longer to fall asleep and I would regularly wake up in the middle of the night. So I began noting down days on which I drank any alcohol, to see if it correlated with sleep problems (and probably then just refrain from alcohol in the evening, since I don’t care enough to run a randomized experiment).

In May 201412ya, I ran out of all my mead and also a gallon of burgundy wine I had bought to make beef bourguignon with, so that marked a natural close to the data collection. I compiled the alcohol data along with the Zeo data in the relevant time period, and looked at the key metrics with a multivariate multiple regression. The main complexity here is that I earlier discovered that I had gradually shifted my sleep down and now Start.of.Night looks like a sigmoid, so to control for that, I fit a sigmoid to the Date using nonlinear least squares, and then plugged the estimated values in. The code, showing only the results for the Alcohol boolean:

drink <- read.csv("https://gwern.net/doc/zeo/2014-gwern-alcohol.csv")

library(minpack.lm)

summary(nlsLM(Start.of.Night ~ Alcohol + as.integer(Date) + (a / (1 + exp(-b * (as.integer(Date) - c)))),

start = list(a = 6.15e+05, b = -1.18e-04, c = -5.15e+04),

control=(nls.lm.control(ftol = sqrt(.Machine$double.eps)/4.9, maxfev=1024, maxiter=1024)),

data=drink))

# Parameters:

# Estimate Std. Error t value Pr(>|t|)

# a 5.61e+06 6.49e+09 0.00 1.00

# b -1.00e-03 2.44e-04 -4.10 4.8e-05

# c -8.26e+03 1.16e+06 -0.01 0.99

summary(lm(cbind(Start.of.Night, Time.to.Z, Time.in.Wake, Awakenings, Morning.Feel, Total.Z, Time.in.REM, Time.in.Deep) ~

Alcohol +

as.integer(Date) + I(5.61e+06 / (1 + exp(-(1.00e-03) * (as.integer(Date) - (-8.26e+03))))),

data=drink))

# Response Start.of.Night :

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# AlcoholTRUE -8.96e-01 4.75e+00 -0.19 0.85

#

# Response Time.to.Z :

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# AlcoholTRUE -2.50e+00 1.41e+00 -1.77 0.077

#

# Response Time.in.Wake :

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# AlcoholTRUE -2.04e+00 2.40e+00 -0.85 0.3956

#

# Response Awakenings :

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# AlcoholTRUE -2.03e-01 2.85e-01 -0.71 0.48

#

# Response Morning.Feel :

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# AlcoholTRUE -5.03e-02 9.16e-02 -0.55 0.5836

#

# Response Total.Z :

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# AlcoholTRUE 1.04e+01 7.89e+00 1.32 0.19

#

# Response Time.in.REM :

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 7.59e+05 9.83e+05 0.77 0.44

# AlcoholTRUE 1.84e+00 3.58e+00 0.51 0.61

#

# Response Time.in.Deep :

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# AlcoholTRUE 1.14e+00 1.41e+00 0.80 0.42Zilch. No correlation is at all interesting.

So it looks like alcohol—at least in the small quantities I consume—makes no difference.

Timing

Bed Time for Better Sleep

Someone asked if I could turn up a better bedtime using their Zeo data. I accepted, but the sleep data comes with quite a few variables and it’s not clear which variable is the ‘best’—for example, I don’t think much of the ZQ variable, so it’s not as simple as regressing ZQ ~ Bedtime and finding what value of Bedtime maximizes ZQ. I decided that I could try finding the optimal bedtime by two strategies:

look for some underlying factor of good sleep using factor analysis—I’d expect maybe 2 or 3 factors, one for total sleep, one for insomnia, and maybe one for REM sleep—and maximize the good ones and minimize the bad ones, equally weighted

just do a multivariate regression and weight each variable equally

So, setup:

zeo <- read.csv("https://gwern.net/doc/zeo/gwern-zeodata.csv")

zeo$Sleep.Date <- as.Date(zeo$Sleep.Date, format="%m/%d/%Y")

## convert "05/12/2014 06:45" to "06:45"

zeo$Start.of.Night <- sapply(strsplit(as.character(zeo$Start.of.Night), " "), function(x) { x[2] })

## convert "06:45" to 24300

interval <- function(x) { if (!is.na(x)) { if (grepl(" s",x)) as.integer(sub(" s","",x))

else { y <- unlist(strsplit(x, ":")); as.integer(y[[1]])*60 + as.integer(y[[2]]); }

}

else NA

}

zeo$Start.of.Night <- sapply(zeo$Start.of.Night, interval)

## correct for the switch to new unencrypted firmware in March 2013;

## I don't know why the new firmware subtracts 15 hours

zeo[(zeo$Sleep.Date >= as.Date("2013-03-11")),]$Start.of.Night

<- (zeo[(zeo$Sleep.Date >= as.Date("2013-03-11")),]$Start.of.Night + 900) %% (24*60)

## after midnight (24*60=1440), Start.of.Night wraps around to 0, which obscures any trends,

## so we'll map anything before 7AM to time+1440

zeo[zeo$Start.of.Night<420 & !is.na(zeo$Start.of.Night),]$Start.of.Night

<- (zeo[zeo$Start.of.Night<420 & !is.na(zeo$Start.of.Night),]$Start.of.Night + (24*60))

## keep only the variables we're interested in:

zeo <- zeo[,c(2:10, 23)]

## define naps or nights with bad data as total sleep time under ~1.5 hours (100m) & delete

zeo <- zeo[zeo$Total.Z>100,]

write.csv(zeo, file="bedtime-factoranalysis.csv", row.names=FALSE)Let’s begin with a simple factor analysis, looking for a ‘good sleep’ factor. Zeo Inc apparently was trying for this with the ZQ variable but I’ve always been suspicious of it because it doesn’t seem to track Morning.Feel or Awakenings very well but simply be how long you slept (Total.Z):

zeo <- read.csv("https://gwern.net/doc/zeo/2014-07-26-bedtime-factoranalysis.csv")

library(psych)

nfactors(zeo)

# VSS complexity 1 achieves a maximimum of 0.8 with 6 factors

# VSS complexity 2 achieves a maximimum of 0.94 with 6 factors

# The Velicer MAP achieves a minimum of 0.09 with 1 factors

# Empirical BIC achieves a minimum of 466.5 with 5 factors

# Sample Size adjusted BIC achieves a minimum of 39396 with 5 factors

#

# Statistics by number of factors

# vss1 vss2 map dof chisq prob sqresid fit RMSEA BIC SABIC complex eChisq eRMS eCRMS eBIC

# 1 0.71 0.00 0.090 35 41394 0 6.4648 0.71 0.99 41145 41256 1.0 1.8e+03 0.12926 0.15 1577

# 2 0.77 0.85 0.099 26 40264 0 3.3366 0.85 1.13 40079 40162 1.2 9.4e+02 0.09275 0.12 755

# 3 0.78 0.89 0.139 18 40323 0 2.1333 0.91 1.36 40195 40253 1.4 9.0e+02 0.09075 0.14 772

# 4 0.75 0.89 0.216 11 39886 0 1.3401 0.94 1.73 39808 39843 1.5 8.0e+02 0.08560 0.17 722

# 5 0.78 0.89 0.280 5 39415 0 0.7267 0.97 2.56 39380 39396 1.4 5.0e+02 0.06779 0.20 467

# 6 0.80 0.94 0.450 0 38640 NA 0.3194 0.99 NA NA NA 1.2 2.2e+02 0.04479 NA NA

# 7 0.80 0.92 0.807 -4 37435 NA 0.1418 0.99 NA NA NA 1.2 1.0e+02 0.03075 NA NA

# 8 0.78 0.91 4.640 -7 30474 NA 0.0002 1.00 NA NA NA 1.3 2.5e-02 0.00048 NA NA

# 9 0.78 0.91 NaN -9 30457 NA 0.0002 1.00 NA NA NA 1.3 2.5e-02 0.00048 NA NA

# 10 0.78 0.91 NA -10 30440 NA 0.0002 1.00 NA NA NA 1.3 2.5e-02 0.00048 NA NA

## BIC says 5 factors, so we'll go with that:

factorization <- fa(zeo, nfactors=5); factorization

# Standardized loadings (pattern matrix) based upon correlation matrix

# MR1 MR2 MR5 MR4 MR3 h2 u2 com

# ZQ 0.87 -0.14 -0.01 0.25 -0.04 0.99 0.013 1.2

# Total.Z 0.96 0.04 -0.01 0.07 -0.04 0.99 0.011 1.0

# Time.to.Z 0.05 -0.03 0.92 0.03 0.10 0.84 0.159 1.0

# Time.in.Wake -0.18 0.90 -0.02 0.04 -0.15 0.83 0.168 1.1

# Time.in.REM 0.87 0.05 0.03 0.05 0.09 0.78 0.215 1.0

# Time.in.Light 0.94 0.02 -0.04 -0.20 -0.14 0.84 0.158 1.1

# Time.in.Deep 0.02 0.03 0.01 0.99 -0.02 0.98 0.023 1.0

# Awakenings 0.35 0.75 0.08 -0.03 0.26 0.79 0.209 1.7

# Start.of.Night -0.21 0.00 0.10 -0.05 0.86 0.84 0.162 1.2

# Morning.Feel 0.22 -0.13 -0.55 0.11 0.46 0.66 0.343 2.5

#

# MR1 MR2 MR5 MR4 MR3

# SS loadings 3.65 1.44 1.21 1.16 1.08

# Proportion Var 0.37 0.14 0.12 0.12 0.11

# Cumulative Var 0.37 0.51 0.63 0.75 0.85

# Proportion Explained 0.43 0.17 0.14 0.14 0.13

# Cumulative Proportion 0.43 0.60 0.74 0.87 1.00

#

# With factor correlations of

# MR1 MR2 MR5 MR4 MR3

# MR1 1.00 0.03 -0.18 0.34 -0.03

# MR2 0.03 1.00 0.27 -0.09 0.00

# MR5 -0.18 0.27 1.00 -0.09 0.09

# MR4 0.34 -0.09 -0.09 1.00 0.03

# MR3 -0.03 0.00 0.09 0.03 1.00

#

# Mean item complexity = 1.3

# Test of the hypothesis that 5 factors are sufficient.

#

# The degrees of freedom for the null model are 45 and the objective function was 40.02 with Chi Square of 48376

# The degrees of freedom for the model are 5 and the objective function was 32.69

#

# The root mean square of the residuals (RMSR) is 0.07

# The df corrected root mean square of the residuals is 0.2

#

# The harmonic number of observations is 1152 with the empirical chi square 473.1 with prob < 5.1e-100

# The total number of observations was 1214 with MLE Chi Square = 39412 with prob < 0

#

# Tucker Lewis Index of factoring reliability = -6.359

# RMSEA index = 2.557 and the 90 % confidence intervals are 2.527 2.569

# BIC = 39377

# Fit based upon off diagonal values = 0.97This looks like MR1=overall sleep; MR2=insomnia/bad-sleep; MR5=difficulty-falling-asleep?; MR4=deep-sleep-(not part of MR1!); MR3=dunno. MR1 and MR4 correlate 0.34, and MR2/MR5 0.27, which makes sense. I want to maximize overall sleep and deep sleep (deep sleep seems connected to health), so MR1 and M4.

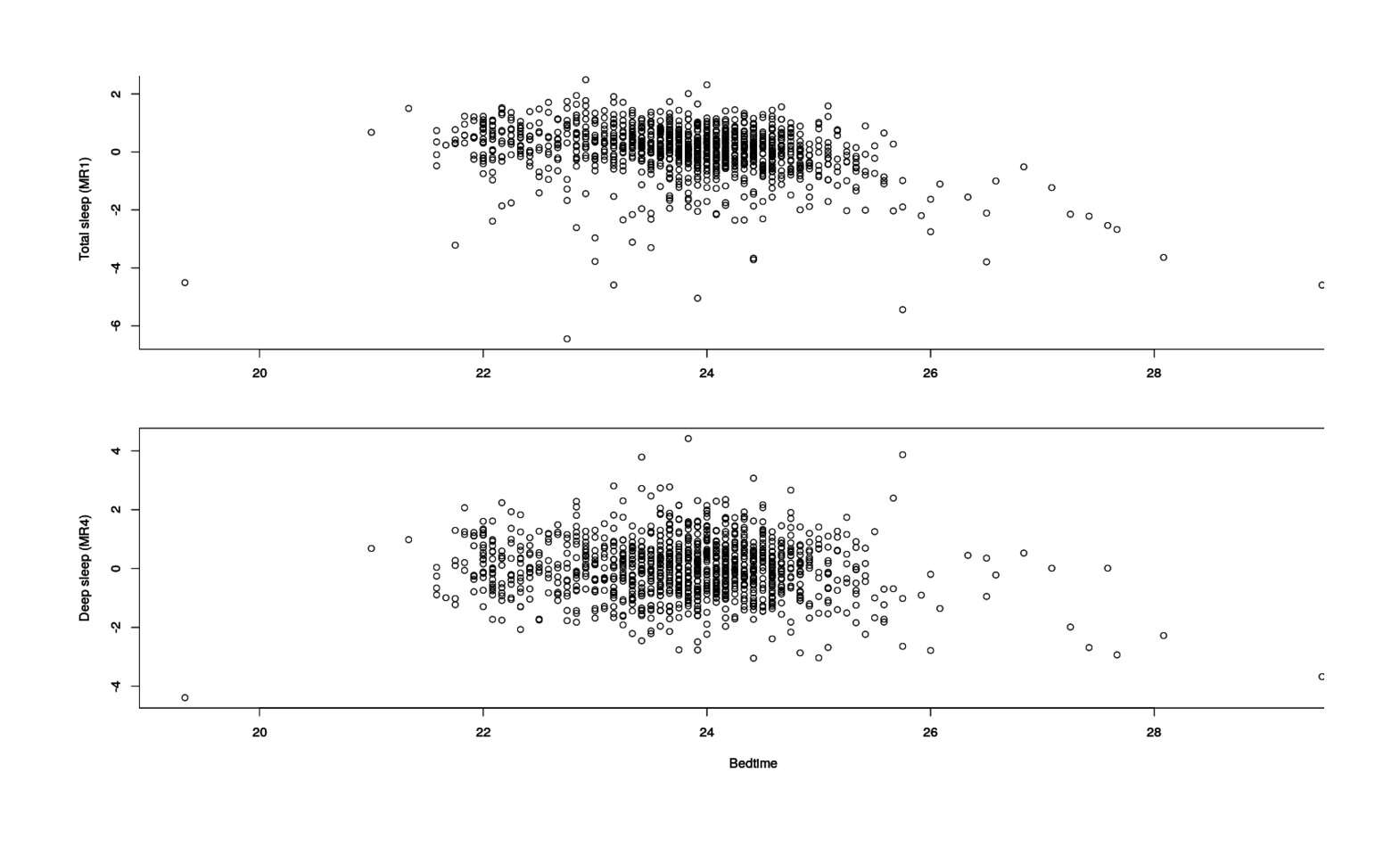

Now that we have our factors, we can extract them and plot them over time for a graphical look:

MR1 <- predict(factorization, data=zeo)[,1]

MR4 <- predict(factorization, data=zeo)[,4]

par(mfrow=c(2,1), mar=c(4,4.5,1,1))

plot(MR1 ~ I(Start.of.Night/60), xlab="", ylab="Total sleep (MR1)", data=zeo)

plot(MR4 ~ I(Start.of.Night/60), xlab="Bedtime", ylab="Deep sleep (MR4)", data=zeo)

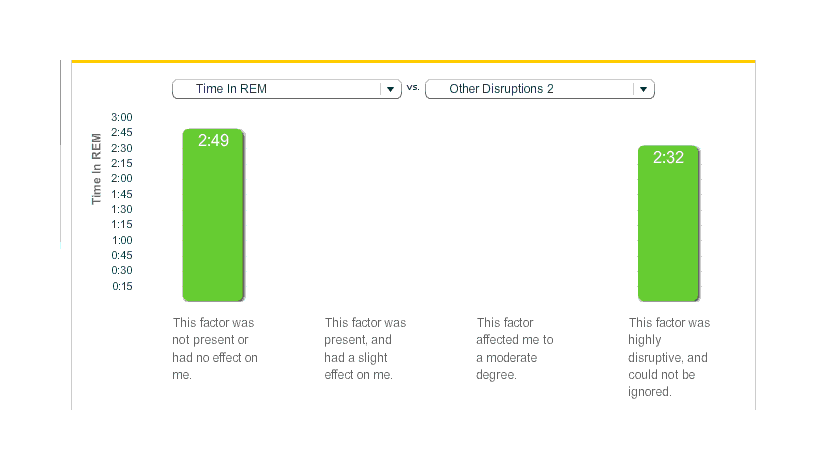

Total & deep sleep factors vs bedtime

looks like an overall linear decline (later=worse), but possibly with a peak somewhere looking like a quadratic.

So we’ll try fitting quadratics:

factorModel <- lm(cbind(MR1, MR4) ~ Start.of.Night + I(Start.of.Night^2), data=zeo); summary(factorModel)

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) -6.63e+01 7.65e+00 -8.67 <2e-16

# Start.of.Night 9.74e-02 1.07e-02 9.13 <2e-16

# I(Start.of.Night^2) -3.56e-05 3.72e-06 -9.57 <2e-16

#

# Residual standard error: 0.829 on 1127 degrees of freedom

# (84 observations deleted due to missingness)

# Multiple R-squared: 0.152, Adjusted R-squared: 0.15

# F-statistic: 101 on 2 and 1127 DF, p-value: <2e-16

#

#

# Response MR4 :

#

# Call:

# lm(formula = MR4 ~ Start.of.Night + I(Start.of.Night^2), data = zeo)

#

# Residuals:

# Min 1Q Median 3Q Max

# -3.057 -0.651 -0.017 0.600 4.329

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) -5.06e+01 8.97e+00 -5.64 2.1e-08

# Start.of.Night 7.23e-02 1.25e-02 5.79 9.3e-09

# I(Start.of.Night^2) -2.58e-05 4.36e-06 -5.92 4.2e-09

#

# Residual standard error: 0.971 on 1127 degrees of freedom

# (84 observations deleted due to missingness)

# Multiple R-squared: 0.0384, Adjusted R-squared: 0.0367

# F-statistic: 22.5 on 2 and 1127 DF, p-value: 2.57e-10

## on the other hand, if we had ignored the quadratic term, we'd

## get a much worse fit

summary(lm(cbind(MR1, MR4) ~ Start.of.Night, data=zeo))

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 6.643744 0.653047 10.2 <2e-16

# Start.of.Night -0.004613 0.000457 -10.1 <2e-16

#

# Residual standard error: 0.861 on 1128 degrees of freedom

# (84 observations deleted due to missingness)

# Multiple R-squared: 0.0829, Adjusted R-squared: 0.0821

# F-statistic: 102 on 1 and 1128 DF, p-value: <2e-16

#

# Response MR4 :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 2.337279 0.747401 3.13 0.0018

# Start.of.Night -0.001627 0.000523 -3.11 0.0019

#

# Residual standard error: 0.986 on 1128 degrees of freedom

# (84 observations deleted due to missingness)

# Multiple R-squared: 0.00851, Adjusted R-squared: 0.00764

# F-statistic: 9.69 on 1 and 1128 DF, p-value: 0.0019So we want to use the quadratic. Given this quadratic model, what’s the optimal bedtime?

estimatedFactorValues <- predict(factorModel, newdata=data.frame(Start.of.Night=1:max(zeo$Start.of.Night, na.rm=TRUE)))

## when is MR1 maximized?

which(estimatedFactorValues[,1] == max(estimatedFactorValues[,1]))

# 1368

1368 / 60

# [1] 22.8

## 10:48 PM seems reasonable

## when is MR3 maximized?

which(estimatedFactorValues[,2] == max(estimatedFactorValues[,2]))

# 1401

## 11:21 PM seems reasonable

## summing the factors isn't quite the average of the two time, but it's close:

combinedFactorSums <- rowSums(estimatedFactorValues)

which(combinedFactorSums == max(combinedFactorSums))

# 1382

## 11:02PMMaybe using factors wasn’t a good idea? We can try a multivariate regression on the variables directly:

quadraticModel <- lm(cbind(ZQ, Total.Z, Time.to.Z, Time.in.Wake, Time.in.REM,

Time.in.Light, Time.in.Deep, Awakenings, Morning.Feel)

~ Start.of.Night + I(Start.of.Night^2), data=zeo)

summary(quadraticModel)

# Response ZQ :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) -7.84e+02 1.06e+02 -7.38 3.1e-13

# Start.of.Night 1.29e+00 1.48e-01 8.68 < 2e-16

# I(Start.of.Night^2) -4.70e-04 5.16e-05 -9.10 < 2e-16

#

# Residual standard error: 11.5 on 1127 degrees of freedom

# (84 observations deleted due to missingness)

# Multiple R-squared: 0.139, Adjusted R-squared: 0.137

# F-statistic: 90.9 on 2 and 1127 DF, p-value: <2e-16

#

# Response Total.Z :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) -4.48e+03 5.54e+02 -8.08 1.7e-15

# Start.of.Night 7.32e+00 7.73e-01 9.47 < 2e-16

# I(Start.of.Night^2) -2.67e-03 2.69e-04 -9.91 < 2e-16

#

# Residual standard error: 60 on 1127 degrees of freedom

# (84 observations deleted due to missingness)

# Multiple R-squared: 0.158, Adjusted R-squared: 0.156

# F-statistic: 106 on 2 and 1127 DF, p-value: <2e-16

#

# Response Time.to.Z :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) -6.09e+02 1.22e+02 -4.98 7.3e-07

# Start.of.Night 8.43e-01 1.71e-01 4.94 8.8e-07

# I(Start.of.Night^2) -2.81e-04 5.95e-05 -4.73 2.6e-06

#

# Residual standard error: 13.2 on 1127 degrees of freedom

# (84 observations deleted due to missingness)

# Multiple R-squared: 0.0431, Adjusted R-squared: 0.0415

# F-statistic: 25.4 on 2 and 1127 DF, p-value: 1.61e-11

#

# Response Time.in.Wake :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) -1.26e+02 1.76e+02 -0.72 0.47

# Start.of.Night 2.15e-01 2.45e-01 0.88 0.38

# I(Start.of.Night^2) -7.83e-05 8.55e-05 -0.92 0.36

#

# Residual standard error: 19.1 on 1127 degrees of freedom

# (84 observations deleted due to missingness)

# Multiple R-squared: 0.00149, Adjusted R-squared: -0.000283

# F-statistic: 0.84 on 2 and 1127 DF, p-value: 0.432

#

# Response Time.in.REM :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) -1.43e+03 2.69e+02 -5.32 1.2e-07

# Start.of.Night 2.32e+00 3.75e-01 6.19 8.6e-10

# I(Start.of.Night^2) -8.39e-04 1.31e-04 -6.42 2.0e-10

#

# Residual standard error: 29.1 on 1127 degrees of freedom

# (84 observations deleted due to missingness)

# Multiple R-squared: 0.0608, Adjusted R-squared: 0.0592

# F-statistic: 36.5 on 2 and 1127 DF, p-value: 4.37e-16

#

# Response Time.in.Light :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) -2.45e+03 3.43e+02 -7.15 1.5e-12

# Start.of.Night 4.07e+00 4.78e-01 8.50 < 2e-16

# I(Start.of.Night^2) -1.50e-03 1.67e-04 -9.00 < 2e-16

#

# Residual standard error: 37.2 on 1127 degrees of freedom

# (84 observations deleted due to missingness)

# Multiple R-squared: 0.164, Adjusted R-squared: 0.162

# F-statistic: 110 on 2 and 1127 DF, p-value: <2e-16

#

# Response Time.in.Deep :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) -5.88e+02 1.10e+02 -5.34 1.1e-07

# Start.of.Night 9.27e-01 1.53e-01 6.04 2.1e-09

# I(Start.of.Night^2) -3.30e-04 5.35e-05 -6.17 9.5e-10

#

# Residual standard error: 11.9 on 1127 degrees of freedom

# (84 observations deleted due to missingness)

# Multiple R-squared: 0.0398, Adjusted R-squared: 0.0381

# F-statistic: 23.4 on 2 and 1127 DF, p-value: 1.12e-10

#

# Response Awakenings :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) -1.18e+02 2.71e+01 -4.36 1.4e-05

# Start.of.Night 1.68e-01 3.77e-02 4.46 9.0e-06

# I(Start.of.Night^2) -5.67e-05 1.32e-05 -4.31 1.7e-05

#

# Residual standard error: 2.93 on 1127 degrees of freedom

# (84 observations deleted due to missingness)

# Multiple R-squared: 0.0274, Adjusted R-squared: 0.0256

# F-statistic: 15.9 on 2 and 1127 DF, p-value: 1.62e-07

#

# Response Morning.Feel :

#

# Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) -2.12e+01 7.02e+00 -3.01 0.00266

# Start.of.Night 3.32e-02 9.79e-03 3.39 0.00073

# I(Start.of.Night^2) -1.15e-05 3.41e-06 -3.37 0.00079

#

# Residual standard error: 0.761 on 1127 degrees of freedom

# (84 observations deleted due to missingness)

# Multiple R-squared: 0.0103, Adjusted R-squared: 0.0085

# F-statistic: 5.84 on 2 and 1127 DF, p-value: 0.00301

## Likewise, what's the optimal predicted time?

estimatedValues <- predict(quadraticModel, newdata=data.frame(Start.of.Night=1:max(zeo$Start.of.Night, na.rm=TRUE)))

# but what time is best? we have so many choices of variable to optimize.

# Let's simply sum them all and say bigger is better

# first, we need to negate 'Time.in.Wake', 'Time.to.Z', 'Awakenings',

# as for those, bigger is worse

estimatedValues[,3] <- -estimatedValues[,3] # Time.to.Z

estimatedValues[,4] <- -estimatedValues[,4] # Time.in.Wake

estimatedValues[,8] <- -estimatedValues[,8] # Awakenings

combinedSums <- rowSums(estimatedValues)

which(combinedSums == max(combinedSums))

# 1362Or 10:42PM, which is almost identical to the MR1 estimate. So just like before.

Both approaches suggest that I go to bed somewhat earlier than I do now. This has the same correlation ≠ causality issue as the rise-time analysis does (perhaps I am especially sleepy on the days I go to bed a bit early and so naturally sleep more), but on the other hand, it’s not suggesting I go to bed at 7PM or anything crazy, so I am more inclined to take a chance on it.

Rise Time for Productivity

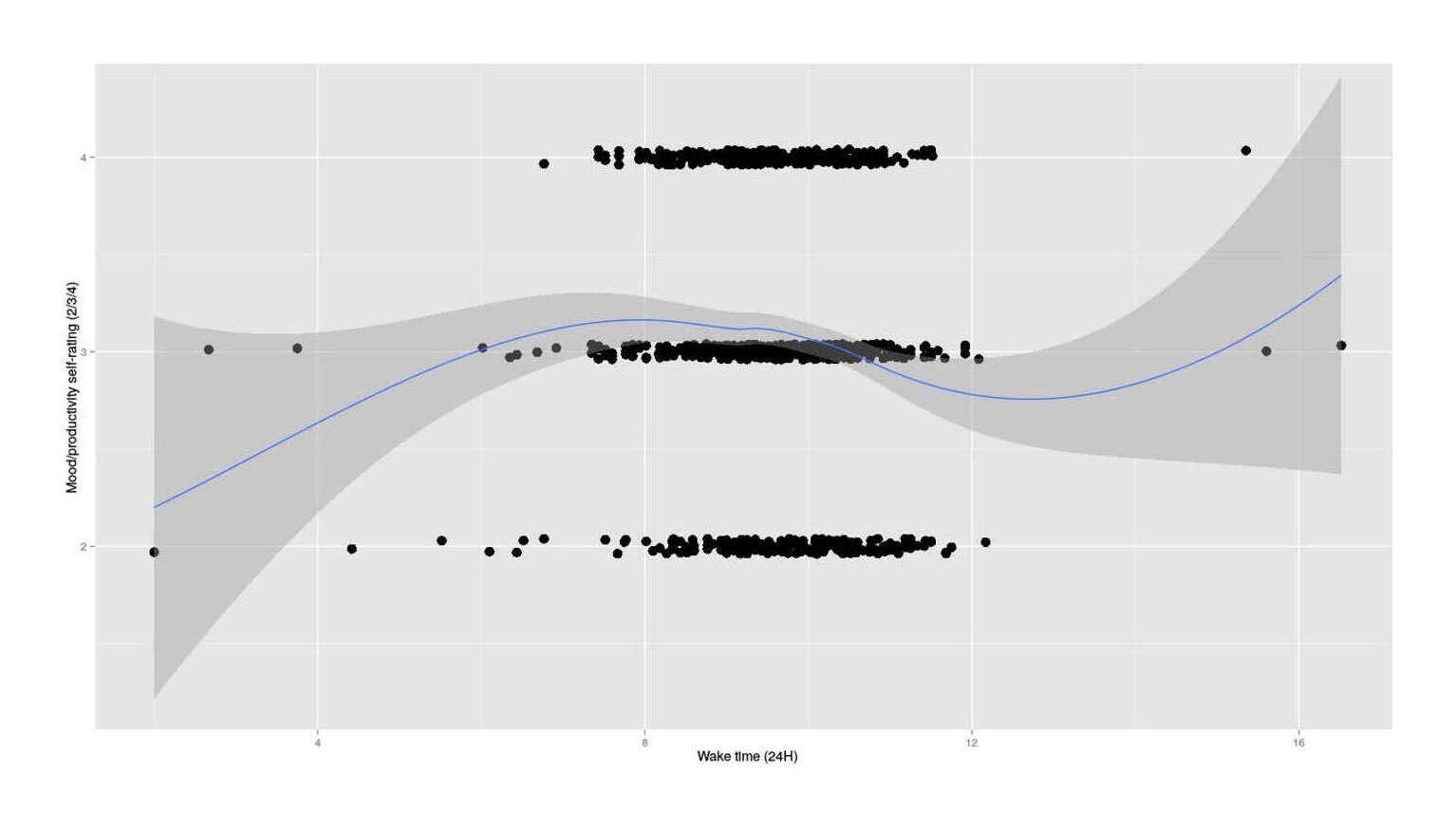

I noticed a claim that for one person, rising at 3-5AM (!) seemed to improve their days “because the morning hours have no distractions” and I wondered whether there might be any such correlation for myself, so I took my usual MP daily self-rating and plotted against rise-time that day:

Self-rating vs rise time, n = 841

It looks like a cubic suggesting one peak around 8:30AM and then a later peak, but that’s based on so little I ignore it. The causal relationship is also unclear: maybe getting up earlier really does cause higher MP self-ratings, but perhaps on days I don’t feel like doing anything I am more likely to sleep in, or some other common cause. The available samples suggest that earlier than that is worse, possibly much worse, so I am not inclined to try out something I expect to make me miserable.

The source code of the graph & analysis; preprocessing:

mp <- read.csv("~/selfexperiment/mp.csv", colClasses=c("Date","integer"))

zeo <- read.csv("https://gwern.net/doc/zeo/gwern-zeodata.csv")

## we want the date of the day sleep ended, not started, so we ignore the usual 'Sleep.Date' and construct our own 'Date':

zeo$Date <- as.Date(sapply(strsplit(as.character(zeo$Rise.Time), " "), function(x) { x[1] }), format="%m/%d/%Y")

## convert "05/12/2014 06:45" to "06:45"

zeo$Rise.Time <- sapply(strsplit(as.character(zeo$Rise.Time), " "), function(x) { x[2] })

## convert "06:45" to the integer 24300

interval <- function(x) { if (!is.na(x)) { if (grepl(" s",x)) as.integer(sub(" s","",x))

else { y <- unlist(strsplit(x, ":")); as.integer(y[[1]])*60 + as.integer(y[[2]]); }

}

else NA

}

zeo$Rise.Time <- sapply(zeo$Rise.Time, interval)

## doesn't always work, so delete missing data:

zeo <- zeo[!is.na(zeo$Date),]

## correct for the switch to new unencrypted firmware in March 2013;

## I don't know why the new firmware changed things; adjustment of 226 minutes was estimated using:

# library(changepoint); cpt.mean(na.omit(zeo$Rise.Time)); '$mean [1] 566.7 340.2'; 566.7 - 340.2 = 226

zeo[(zeo$Date >= as.Date("2013-03-11")),]$Rise.Time <-

(zeo[(zeo$Date >= as.Date("2013-03-11")),]$Rise.Time + 226) %% (24*60)

allData <- merge(mp,zeo)

morning <- data.frame(MP=allData$MP, Rise.Time=allData$Rise.Time)

morning$Rise.Time.Hour <- morning$Rise.Time / 60

write.csv(morning, file="morning.csv", row.names=FALSE)Graphing and fitting:

morning <- read.csv("https://gwern.net/doc/zeo/2014-07-26-risetime-mp.csv")

library(ggplot2)

ggplot(data = morning, aes(x=Rise.Time.Hour, y=jitter(MP, factor=0.2)))

+ xlab("Wake time (24H)")

+ ylab("Mood/productivity self-rating (2/3/4)")

+ geom_point(size=I(4))

## cross-validation suggests 0.8397 but looks identical to auto-LOESS span choice

+ stat_smooth(span=0.8397)

## looks 100% like a cubic function

linear <- lm(MP ~ Rise.Time, data=morning)

cubic <- lm(MP ~ poly(Rise.Time,3), data=morning)

anova(linear,cubic)

# Model 1: MP ~ Rise.Time

# Model 2: MP ~ poly(Rise.Time, 3)

# Res.Df RSS Df Sum of Sq F Pr(>F)

# 1 839 442

# 2 837 437 2 5.36 5.14 0.0061

AIC(linear,cubic)

# df AIC

# linear 3 1852

# cubic 5 1846

summary(cubic)

# ...Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 3.0571 0.0249 122.70 <2e-16

# poly(Rise.Time, 3)1 -0.9627 0.7225 -1.33 0.183

# poly(Rise.Time, 3)2 -1.4818 0.7225 -2.05 0.041

# poly(Rise.Time, 3)3 1.7795 0.7225 2.46 0.014

#

# Residual standard error: 0.723 on 837 degrees of freedom

# Multiple R-squared: 0.0142, Adjusted R-squared: 0.0107

# F-statistic: 4.02 on 3 and 837 DF, p-value: 0.00749

# plot(morning$Rise.Time,morning$MP); points(morning$Rise.Time,fitted(cubic),pch=19)

which(fitted(cubic) == max(fitted(cubic))) / 60

# 516 631 762

# 8.60 10.52 12.70Magnesium Citrate

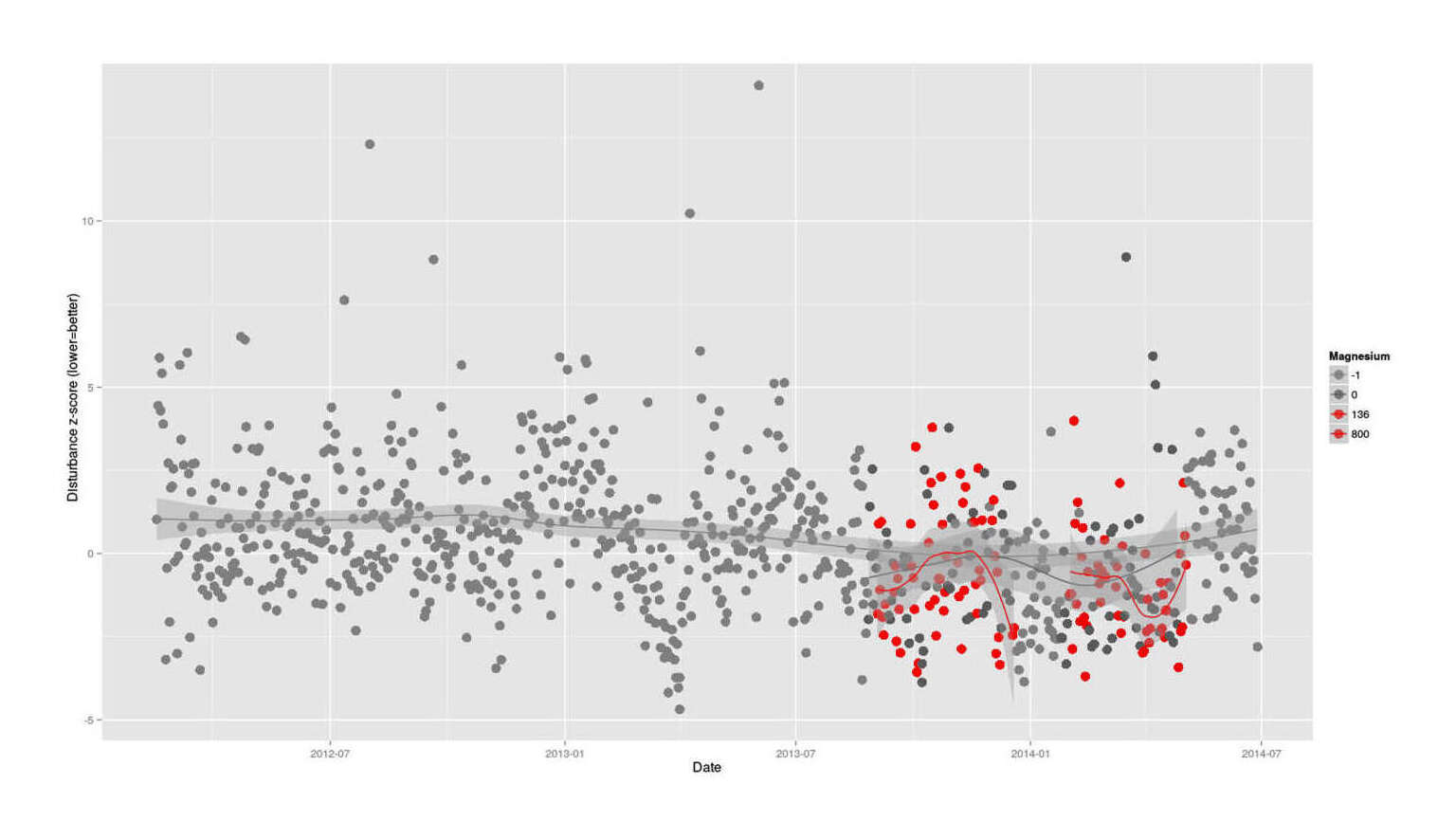

Re-analyzing data from a magnesium self-experiment, I find both positive and negative effects of the magnesium on my sleep. It’s not clear what the net effect is.

I became interested in magnesium after noting a possible effect on my productivity from TruBrain (which among other things included a magnesium tablet), and then a clear correlation from some magnesium l-threonate. I’d also long heard of magnesium helping sleep, and was curious about that too. So I began a large (~207 days) RCT trying out 136mg then 800mg of elemental magnesium per day in late 201313ya—early 201412ya. (This was not a large enough experiment to definitively answer questions about both productivity and sleep, but since I have all the data on hand, I thought I’d look.)

The results of the main were surprising: it seemed that the magnesium caused an initial large boost to my productivity, but the boost began to fade and after 20 days or so, the effect became negative, and the period with the larger dose had a worse effect, suggesting a cumulative overdose.

With the differing effect of the doses in mind, I looked at the effect on my sleep data.

Analysis

Prep:

magnesium <- read.csv("https://gwern.net/doc/nootropic/quantified-self/2013-2014-magnesium.csv")

magnesium$Date <- as.Date(magnesium$Date)

zeo <- read.csv("https://gwern.net/doc/zeo/gwern-zeodata.csv")

zeo$Sleep.Date <- as.Date(zeo$Sleep.Date, format="%m/%d/%Y")

zeo$Date <- zeo$Sleep.Date

rm(zeo$Sleep.Date)

# create an equally-weighted index of bad sleep: a z-score of the 3 bad things

zeo$Disturbance <- scale(zeo$Time.to.Z) + scale(zeo$Awakenings) + scale(zeo$Time.in.Wake)

magnesiumSleep <- merge(zeo, magnesium)

write.csv(magnesiumSleep, file="2014-07-27-magnesium-sleep.csv", row.names=FALSE)(I then hand-edited the CSV to delete unused columns.)

Graphing Disturbance:

Sleep disturbance over time, colored by magnesium dose, with LOESS-smoothed trend-lines

magnesiumSleep <- read.csv("https://gwern.net/doc/zeo/2014-07-27-magnesium-sleep.csv")

magnesiumSleep$Date <- as.Date(magnesiumSleep$Date)

## historical baseline:

magnesiumSleep[is.na(magnesiumSleep$Magnesium.citrate),]$Magnesium.citrate <- -1

library(ggplot2)

ggplot(data = magnesiumSleep, aes(x=Date, y=Disturbance, col=as.factor(magnesiumSleep$Magnesium.citrate))) +

ylab("Disturbance z-score (lower=better)") +

geom_point(size=I(4)) +

stat_smooth() +

scale_colour_manual(values=c("gray49", "grey35", "red1", "red2" ),

name = "Magnesium")Analysis (first disturbances, then all variables):

magnesiumSleep <- read.csv("https://gwern.net/doc/zeo/2014-07-27-magnesium-sleep.csv")

l0 <- lm(Disturbance ~ as.factor(Magnesium.citrate), data=magnesiumSleep)

summary(l0)

# ...Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) -0.5020571 0.1862795 -2.69518 0.0076218

# as.factor(Magnesium.citrate)136 -0.0566556 0.3101388 -0.18268 0.8552318

# as.factor(Magnesium.citrate)800 -0.5394708 0.3259212 -1.65522 0.0994178So it seems that magnesium citrate may decrease sleep problems.

l1 <- lm(cbind(ZQ, Total.Z, Time.to.Z, Time.in.Wake, Time.in.REM, Time.in.Light,

Time.in.Deep, Awakenings, Morning.Feel)

~ as.factor(Magnesium.citrate),

data=magnesiumSleep)

summary(l1)

# Response ZQ : ...Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 95.85149 1.29336 74.11065 < 2e-16

# as.factor(Magnesium.citrate)136 -3.27254 2.15332 -1.51976 0.13012

# as.factor(Magnesium.citrate)800 1.49545 2.26290 0.66086 0.50945

#

# Response Total.Z : ...Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 536.35644 6.59166 81.36898 < 2e-16

# as.factor(Magnesium.citrate)136 -27.37398 10.97453 -2.49432 0.013414

# as.factor(Magnesium.citrate)800 15.86805 11.53300 1.37588 0.170367

#

# Response Time.to.Z : ...Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 12.59406 1.24108 10.14766 < 2e-16

# as.factor(Magnesium.citrate)136 4.26559 2.06629 2.06437 0.040247

# as.factor(Magnesium.citrate)800 -2.43079 2.17144 -1.11944 0.264269

#

# Response Time.in.Wake : ...Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 24.09901 1.87720 12.83776 < 2e-16

# as.factor(Magnesium.citrate)136 -3.66041 3.12537 -1.17119 0.24289

# as.factor(Magnesium.citrate)800 -4.16023 3.28441 -1.26666 0.20672

#

# Response Time.in.REM : ...Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 171.45545 2.99387 57.26889 < 2e-16

# as.factor(Magnesium.citrate)136 -6.45545 4.98452 -1.29510 0.19675

# as.factor(Magnesium.citrate)800 2.27925 5.23818 0.43512 0.66393

#

# Response Time.in.Light : ...Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 304.54455 4.08746 74.50709 < 2.22e-16

# as.factor(Magnesium.citrate)136 -23.33403 6.80525 -3.42883 0.00073338

# as.factor(Magnesium.citrate)800 20.51667 7.15156 2.86884 0.00455323

#

# Response Time.in.Deep : ...Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 60.88119 1.20888 50.36152 < 2e-16

# as.factor(Magnesium.citrate)136 2.48723 2.01268 1.23578 0.21796

# as.factor(Magnesium.citrate)800 -6.81996 2.11510 -3.22441 0.00147

#

# Response Awakenings : ...Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 6.039604 0.238675 25.30475 < 2e-16

# as.factor(Magnesium.citrate)136 -0.548376 0.397372 -1.38001 0.16910

# as.factor(Magnesium.citrate)800 -0.427359 0.417594 -1.02338 0.30734

#

# Response Morning.Feel : ...Coefficients:

# Estimate Std. Error t value Pr(>|t|)

# (Intercept) 2.7227723 0.0762575 35.70497 < 2e-16

# as.factor(Magnesium.citrate)136 0.1193330 0.1269620 0.93991 0.34837

# as.factor(Magnesium.citrate)800 -0.1513437 0.1334229 -1.13432 0.25799

l2 <- lm(cbind(ZQ, Total.Z, Time.to.Z, Time.in.Wake, Time.in.REM, Time.in.Light,

Time.in.Deep, Awakenings, Morning.Feel) ~ Magnesium.citrate,

data=magnesiumSleep)

summary(manova(l1))

# Df Pillai approx F num Df den Df Pr(>F)

# as.factor(Magnesium.citrate) 2 0.3265357 4.271083 18 394 2.3902e-08

# Residuals 204

summary(manova(l2))

# Df Pillai approx F num Df den Df Pr(>F)

# Magnesium.citrate 1 0.1815233 4.85456 9 197 7.1454e-06

# Residuals 205

which(p.adjust(c(0.3483,0.2579,0.1752,0.1301,0.5094,0.3344,0.0134,0.1703,0.0632,0.1967,

0.6639,0.4895,0.0007,0.0045,0.0005,0.2179,0.0014,0.0004,0.0402,0.2642,

0.1262,0.2428,0.2067,0.2673,0.1691,0.3073,0.4144),

method="BH")

< 0.05)

# [1] 13 14 15 17 18A table summarizing the results by dose (‘all’ is the net effect from the non-factor version):

Variable |

Dose (mg) |

Coef |

p |

Effect |

|---|---|---|---|---|

|

136 |

0.11933 |

0.3483 |

better |

|

800 |

-0.15134 |

0.2579 |

worse |

|

all |

-0.00022 |

0.1752 |

worse |

|

136 |

-3.27254 |

0.1301 |

worse |

|

800 |

1.49545 |

0.5094 |

better |

|

all |

0.00270 |

0.3344 |

better |

|

136 |

-27.3739 |

0.0134 |

worse |

|

800 |

15.8680 |

0.1703 |

better |

|

all |

0.02698 |

0.0632 |

better |

|

136 |

-6.45545 |

0.1967 |

worse |

|

800 |

2.27925 |

0.6639 |

better |

|

all |

0.00447 |

0.4895 |

better |

|

136 |

-23.3340 |

0.0007 |

worse |

|

800 |

20.5166 |

0.0045 |

better |

|

all |

0.03202 |

0.0005 |

better |

|

136 |

2.48723 |

0.2179 |

better |

|

800 |

-6.81996 |

0.0014 |

worse |