Nenex: A Neural Personal Wiki Idea

Proposal for a personal wiki built on neural nets: all edits are logged & used to finetune a NN assistant in realtime.

Existing personal wikis or personal knowledge management tools (eg. Roam, Obsidian, org-mode) make highly limited use of the wave of powerful language & code-generating neural networks (LLMs like GPT-4), limited to minor improvements such as suggesting relevant links or offering copyediting suggestions.

This is due less to a lack of neural network capabilities than the difficulty of integrating them into document systems all designed in paradigms long predating LLMs. If Vannevar Bush or Douglas Engelbart were designing a ‘neural wiki’ from the ground up to be a ‘tool for thought’, taking GPT-4-level LLMs for granted, what would that look like?

It would probably not look like existing tools, which take a hypertext approach of a collection of independent nodes referencing each other and version-controlled as text files. Simple text-file-based approaches like copying in entire documents quickly run into performance limits or small but non-trivial error rates.

A more natural approach would be to draw inspiration from DL scaling paradigms in treating ‘everything as a sequence prediction task’: in this LLM-centric wiki paradigm (Nenex), the wiki would not be file/node-centric but edit-centric.

Instead of being hobbled by cloud providers optimizing for simplicity & jealous of their genericized chatbot models, you log all actions to train a local LLM to imitate you, and use the system alternating between taking actions and approving/disapproving execution of predicted actions. As data accumulates, the LLM learns not simply tool usage or generic text prediction, but prediction of your text, with your unique references, preferences, and even personality/values.

The wiki is represented not as a set of static files with implicit history, but in more of a revision-control system or functional programming style as a history of edits in a master log; the LLM simply learns to predict the next action in the log (using ‘dynamic evaluation’ finetuning for scalability).

All user edits, reference additions, spellchecks or new vocabulary addition, summarization, updates of now-outdated pages etc., are just more actions for the LLM to learn to predict on the fly. It can flexibly use embeddings & retrieval, simple external tools (such as downloading research papers), & operate over an API. A Nenex’s LLM can be easily upgraded by training new models on the Nenex log, additionally trained on all relevant information (private or public), and incorporate arbitrary feedback from the user.

A Nenex would interactively tailor itself to a user’s writing style, knowledge, existing corpus, and enable semantic features unavailable in other systems, such as searching a personal wiki for pages that need updating given updates to other pages.

As of September 2023, LLMs have revolutionized my code writing, but I would have to admit that they have not revolutionized my natural-language writing—as part of my Gwern.net work, I’ve integrated LLMs in a few modest ways like handling annoying formatting tasks (eg. converting LaTeX math expressions to HTML+Unicode) or for doing vector search of annotations (as you can see in most Gwern.net popups as the similars tab). And sometimes I can pop an essay into GPT-4 or Claude-2 and get some good suggestions. Not impressive. There is just not that much I can get out of an LLM if all it can do is implement short code requirements I can express in a chat format without too much friction, and I have to do everything else for it.

Background: Lifeless Corpuses

…Writing shares a strange feature with painting. The offsprings of painting stand there as if they are alive, but if anyone asks them anything, they remain most solemnly silent. The same is true of written words. You’d think they were speaking as if they had understanding, but if you question anything that has been said because you want to learn more, it continues to signify just that same thing forever…it always needs its father’s support; alone, it can neither defend itself nor come to its own support.

“Socrates”, Plato’s Phaedrus

Something I find depressing about writing, as compared to programming, is how inert it is. Writing does not do anything and when one writes, the words simply accumulate. The ideas expressed in those writings will link & build on each other, but the writing process itself remains unaffected: no matter how many millions of words one has written, each time, one confronts the same empty page and blinking text cursor. No matter how many times one corrals notes into an essay or expands an outline or runs spellcheck, it takes the same amount of work the next time, and even the fanciest text editor like Emacs or Scrivener offers little help. (In part because the benefit of many features—especially keybindings—is less than the effort it would take to learn & configure & remember how to use them.)

And this is true for other formats, like periodicals. No matter how famous and influential a periodical is, it is only as good as its latest issue, and the periodical as an organization & corpus, has shockingly little value. For example, magazines like Time or Life or Newsweek became nearly worthless overnight thanks to the Internet, and what gets published under those brand names are but pale shadows of the original globe-shaking colossi. It doesn’t matter how many extraordinary writers dedicated their lives to them or how vast their reportage was or how large their archives (tellingly called “morgues”) are—they have all sold for a song. The writing itself, the editors, the technical equipment, the printing presses, the culture—all now worthless. (Much of that residual value reflected the IP copyright licensing value of their photographic archives to stock photography giants like Getty Images.) Nothing is more useless than yesterday’s news.

And yet, why is it? Why isn’t the accumulated wisdom of decades of writing more useful?

What is it about such intellectual work like writing that there is so little ‘infrastructure’ or ‘capital’ such that one could ‘plug a new intern into Time’ and they suddenly become a far better journalist? Why couldn’t the spirit of Time, the genius loci of a century of reporting, possess an intern, imbuing them with vast knowledge & all the secrets of the newsroom and skills like knowing where to dig up dead bodies or how to read the hidden clues a lying politician is giving you?

Well, obviously, because all of that is either locked in another human’s head (who is probably long gone), or it’s sitting inert and hidden in a mountain of dead trees. No matter how large that corpus gets, no matter what treasures are buried within, it still does nothing and it will never speak for itself, and we have no tools to levitate it with.1

What I want is to animate my dead corpus so it can learn & think & write.

Wanted: Real Writing Assistants

LLMs are convenient, as far as they go, but that is not far. I’m disappointed how little use I’ve made of such astonishingly powerful technology: these are brains in boxes that could almost write my essays for me! There have to be more useful writing LLM tools than these—like there are in writing code, where GPT-4 has rapidly become indispensable for quickly writing or reviewing my code.2 But it doesn’t seem like there are any writing tools which have gone much further.3 Certainly, popular writing tools like Roam, Obsidian, org-mode/Logseq etc. do not seem to have made much progress incorporating LLMs in any profound way.4

It’s not like there aren’t plenty of things about the standard tool-for-thought experience which could be improved by LLMs. For example, tag management is a perennial pain point: adding tags to pages or URLs, splitting up overgrown tags, naming the new sub-tags… All extremely tedious chores which cause users to abandon tagging, but also straightforwardly automatable using LLM embeddings+prompts. Naming & titling short pages is a hassle that discourages creating them in the first place. Breaking up a long essay into a useful hierarchy of section headers is not the most fun one could have on one’s laptop in bed. Or consider writing good revision/patch summaries for all that—annoying!5

Writing Assistant Limitations

“Ginny!” said Mr. Weasley, flabbergasted. “Haven’t I taught you anything? What have I always told you? Never trust anything that can think for itself if you can’t see where it keeps its brain?”

Harry Potter and the Chamber of Secrets, J. K. Rowling

But it’s easy to see barriers to a deep integration of LLMs. The first question is: how do you even get your text into an LLM for it to do anything with it? You immediately run into the problem that most LLM use can accept a small amount of text (a small fraction of an essay), and only temporarily—that text will be forgotten as soon as that request finishes. This means that an LLM is ignorant of the rest of your personal wiki, never mind even larger feasible text datasets that would be highly useful. (For example, an LLM personal assistant would benefit from studying all your emails & chats, and the text of every paper or web page you’ve read.) You also can’t update the LLM: it would be great if you could compile a list of every error your LLM assistant made (like flagging a new name or vocabulary term as a spelling error), and feed that back in; but you can’t do that either, because if you put the list of errors & corrections into the LLM input, it wouldn’t fit. And you can forget about ideas like feeding a livestream of your typing to the LLM to teach it how you think & revise text.

Putting stuff into LLMs is such a barrier that most uses revolve around figuring out how to dice up a large text corpus in a way which isn’t too error-prone to feed in a small morsel of text and get back something useful that can then be blindly inserted back into the corpus. (And because these can’t be all that useful, they must be extremely cheap, and one may not bother with them at all.)

We also have security issues: our LLM assistant is too easily turned into a confused deputy, executing orders that come from attackers via prompt injection, rather than our own commands, because they tend to look the same, and our LLM assistant just doesn’t know that much about us—if “we” command it to delete all our files, how should it know that that’s not something we’d ever do? It was simply trained to be ‘helpful’, without any particular details about helpful to whom.

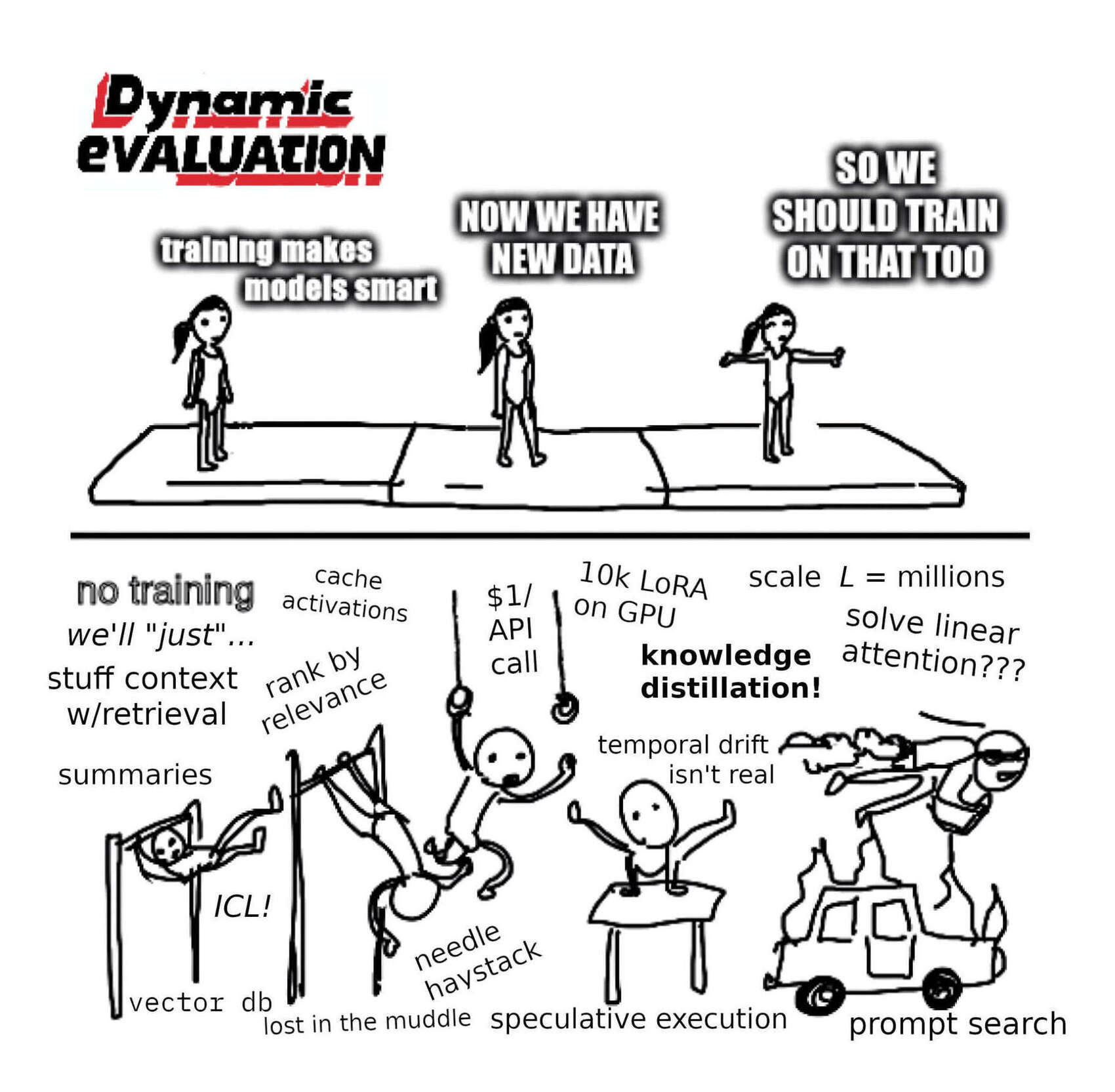

Dynamic Evaluation

The Moving Finger writes; and, having writ,

Moves on: nor all thy Piety nor Wit

Shall lure it back to cancel half a Line,

Nor all thy Tears wash out a Word of it.

Edward FitzGerald (#51, Rubáiyát of Omar Khayyám)

The biggest problem with LLMs is the processing bottleneck of attention limiting its ability to flexibly learn from any kind of corpus.

We could wait for better attention & memory mechanisms. It’s a hot area of research, and publicly-accessible models like Claude-2 can, as of September 2023, digest up to hundreds of thousands of tokens. Perhaps that is what neural wikis are waiting for? Or some further tweaks to retrieval?

But Claude-2 is still extremely expensive, the attention seems to routinely fail or result in confabulations, and it seems likely that a Transformer over hundreds of thousands of tokens will be inherently slow if it must reprocess the entire input for every token output. And we want millions or tens of millions of tokens, with as little fancy architecture engineering as possible—a real LLM maximalist wants to program NNs using not code, but data (and pre-existing data at that).

There is an alternative to context windows, if we look back in DL history to the now-forgotten age of RNNs. If we drop the usual unstated assumption that one must use stateless API calls which cannot be personalized beyond the prompt, the idea of finetuning becomes an obvious one. As NN models are so vastly overparameterized and store vast amounts of memorized facts in their weights, they can easily accommodate anything that a user might write, or their reference database—after all, they generally saw billions or trillions of tokens during training, so what’s a few million more? Large NNs are increasingly sample-efficient, learning more from fewer data points than smaller models, often memorizing while still generalizing; in fact, they can sometimes memorize text after only one exposure. And finetuning doesn’t need to be one-and-done, or limited to running offline in a batch process overnight while the user sleeps.

When RNN users wanted the best performance possible on a new corpus of text, what did they do? They used dynamic evaluation (Transformer version, w/retrieval)6: essentially, just finetuning the model (using ordinary ‘online’ SGD) each timestep on each input as it arrived.7 (For example, a char-RNN might predict the next character, receive the actual next character, do a backprop step, and predict the next character after that by feeding the current hidden state into the newly-weight-updated RNN.) This lets the model learn from the latest data, in a more profound way than simply being in a context window & doing in-context/meta-learning, because the weights can change repeatedly over many timesteps, building on prior weight updates, and permanently encoding knowledge into its weights. Language models can memorize samples seen only a few times during training, or even once, but still benefit from training for multiple epochs, so we can have our cake and eat it too: have smart models which memorize—indeed, we can deliberately train models to memorize large sets of facts to serve as a long-term memory, such as the unique IDs of relevant documents. (For more background on this paradigm, see my AUNN proposal which takes the memorizing-data paradigm to its limit.)
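The predict, observe, update loop described above can be sketched in a few lines. This is only a toy under stated assumptions: a bigram softmax table stands in for the char-RNN or Transformer (so there is no hidden state), and the class name, learning rate, and sample text are all hypothetical. What it shows is the ordering that defines dynamic evaluation: the loss is measured on each prediction before the weights are updated on that same character.

```python
import math

class BigramModel:
    """Toy next-character model: one row of logits per previous character."""

    def __init__(self, text):
        self.vocab = sorted(set(text))
        self.index = {c: i for i, c in enumerate(self.vocab)}
        n = len(self.vocab)
        self.logits = [[0.0] * n for _ in range(n)]  # uniform predictions at start

    def probs(self, prev):
        row = self.logits[self.index[prev]]
        m = max(row)
        exps = [math.exp(x - m) for x in row]
        z = sum(exps)
        return [e / z for e in exps]

    def sgd_step(self, prev, actual, lr):
        """One online SGD step on the cross-entropy loss of a single observed character."""
        p = self.probs(prev)
        row = self.logits[self.index[prev]]
        target = self.index[actual]
        for i in range(len(row)):
            # gradient of cross-entropy w.r.t. logit i is p[i] - onehot[i]
            row[i] -= lr * (p[i] - (1.0 if i == target else 0.0))

def evaluate(model, text, lr=0.0):
    """Mean next-character loss in nats; with lr > 0 the model is updated after each prediction."""
    total = 0.0
    for prev, actual in zip(text, text[1:]):
        total += -math.log(model.probs(prev)[model.index[actual]])  # predict first
        if lr > 0:
            model.sgd_step(prev, actual, lr)                        # then learn from it
    return total / (len(text) - 1)

text = "nenex logs edits; nenex learns edits; " * 20
static_loss = evaluate(BigramModel(text), text, lr=0.0)   # frozen model
dynamic_loss = evaluate(BigramModel(text), text, lr=0.5)  # dynamically evaluated model
```

The frozen model stays at its initial uniform loss, while the dynamically evaluated one improves as the repeated phrases recur, which is the effect the paragraph describes at a much larger scale.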

Challenges in using a frozen model with large new time-varying datasets.

Dynamic evaluation is particularly good at updating a model to a rather different data distribution, handling repeated or rare/novel (or both) tokens, at a constant factor cost (which can be dropped as necessary), while in principle being able to improve indefinitely. This makes dynamic evaluation much more suitable than other techniques like vector retrieval for highly-personalized long-form writing, where one might introduce many new concepts, terms (eg. ‘Nenex’), or references, which a pretrained model might not know and which might not be in the context window either (because the previous instances were in a different file, or just too far away in the current file either before or after the focus): as soon as it is used, it is infused into the model’s weights, and the more surprising or novel the unpredicted text is, the larger the update. At the beginning, when text still fits into the context window & the Transformer can learn in-context, dynamic evaluation won’t help too much because it is still ‘warming up’, but it will gradually improve the more text it sees, while an amnesiac Transformer cannot; few-shot prompting can, with enough examples, catch up to finetuning, but may require thousands of examples and use up a context window of even millions of tokens at staggering expense & latency; and a batch-finetuned Transformer may be competitive, but it will be so only at greatly staggered intervals—too late to assist the user in writing their current essay.

This would be a natural way to implement a personal writing assistant: finetuning is not that expensive when amortized over heavy use8, a user doesn’t type fast in terms of tokens per second, so a good Transformer can keep up while being dynamically evaluated (and even train on the same minibatch repeatedly or go back to do multiple epochs), and the Transformer will be able to predict substantially better over large corpuses.

The naive approach would be to just predict one’s writing inside the text editor, with some tab-completion assistance. That’s fine, but we can do better. If it can predict the user’s next typed word action, why not predict the user’s next action, period? Not just Backspace to correct a typo, but all actions: spellcheck, writing commit messages, switching text buffers…

(Our goal here is not ‘superintelligence’, but ‘superknowledge’.)

Performance

Because users type slowly on average, if the LLM is fast enough to provide completions in a reasonable timeframe at all, then it can probably be dynamically evaluated as well; if it is not, then one can begin optimizing more heavily.

For example, one could run models in parallel: train a model one place while running a model (not necessarily identical) elsewhere. Lightweight finetuning methods like LoRA may be helpful, although not a panacea, because the point is not to hardwire the model to a pre-existing capability, exploiting the linearity & low-dimensionality of most tasks, but to have the model memorize everything about the user, ideally everything they’ve ever written or read, and all data available from sources like emails or calendars, which is a large amount of detailed brute facts that must be memorized & will not fit in a ‘lightweight adaptor’ of a few kilobytes (or even megabytes)—so eg. LoRA works fine for instruction-tuning in English, but lightweight finetuning methods do not generalize or scale well, and to do it in a different language altogether requires true finetuning. The sampling-only model could use heavy performance optimizations like quantization (which can be done quickly).

Transformer models are inherently latency-tolerant in this sort of parallel setup, because their large context window provides a buffer for training; the model does not need to be up-to-the-second to work, because the most recent user behavior will still be in the context window. The in-context learning can substitute for dynamicism as long as the context window doesn’t ‘overflow’.

Even if it overflows, it will probably be hard for the user to notice the decay immediately—they would need to be invoking behavior uniquely dependent on unpredictable text in the gap between the context window (which the user’s actions are constantly repopulating with relevant text) and the already-dynamically-evaluated model constantly being synced over from the training process.

And the further behind the training model becomes, the more opportunity it has to use n > 1 minibatches to switch from serial training to parallel batch training, and leapfrog to the present, and do one big sync with the sampling model. (And if GPU VRAM permits sampling but not any training, then the alternate training instance can be located on CPU, where there may be enough RAM to slowly train.)

If the user slows down, then the problem will solve itself.

Immutability

We can do this by stepping back from simply recording typed words, to recording all actions. The central object is no longer a static text file sitting on disk, but a sequence of actions. Every text file is built up by a sequence of actions taken by the user: inserting other files, deleting words, spellchecking, typing, running built-in functions to do things like sort lines… Each action can be logged, and the LLM dynamically trained to predict the next action.9
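To make the edit-centric representation concrete, here is a minimal sketch in which a buffer is never stored, only rebuilt by replaying a log of actions. The action names (`insert-text`, `delete-range`, `sort-lines`) and their argument conventions are assumptions made for illustration, not part of the proposal.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Action:
    """One immutable log entry: an action name plus its arguments (hypothetical schema)."""
    name: str
    args: tuple = ()

def apply_action(buffer: str, action: Action) -> str:
    """Return the new buffer text after applying one logged action."""
    if action.name == "insert-text":
        pos, text = action.args
        return buffer[:pos] + text + buffer[pos:]
    if action.name == "delete-range":
        start, end = action.args
        return buffer[:start] + buffer[end:]
    if action.name == "sort-lines":
        return "\n".join(sorted(buffer.split("\n")))
    raise ValueError(f"unknown action: {action.name}")

def replay(log) -> str:
    """Rebuild the buffer from scratch by replaying the log in order."""
    buffer = ""
    for action in log:
        buffer = apply_action(buffer, action)
    return buffer

log = [
    Action("insert-text", (0, "pear\napple")),
    Action("insert-text", (10, "\nfig")),
    Action("sort-lines"),
    Action("delete-range", (0, 6)),
]
current = replay(log)       # state after every action
earlier = replay(log[:3])   # any earlier state is just a prefix of the log
```

Because every state is a prefix of the log, the same sequence serves both as version history and as the training sequence for next-action prediction.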

This would work particularly well with a Lisp approach, as Lisp systems like Emacs can easily serialize all executed user actions to textual S-expressions, and then call the LLM to generate a new S-expression, and interpret that to achieve anything that the user could do.10
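As a rough illustration of the textual form such a log could take, the sketch below writes an action as a flat S-expression and reads it back. It is written in Python rather than Emacs Lisp only to stay self-contained; the function names and the restriction to integer and string arguments are assumptions. A real Emacs-based system would presumably rely on the Lisp printer and reader instead.

```python
def to_sexp(name, *args):
    """Serialize an action as a flat S-expression: (name arg ...)."""
    def fmt(arg):
        if isinstance(arg, str):
            return '"' + arg.replace("\\", "\\\\").replace('"', '\\"') + '"'
        return str(arg)
    return "(" + " ".join([name] + [fmt(a) for a in args]) + ")"

def parse_sexp(text):
    """Parse a flat (name arg ...) form with integer and double-quoted string arguments."""
    if not (text.startswith("(") and text.endswith(")")):
        raise ValueError("expected a parenthesized form")
    body, i, tokens = text[1:-1], 0, []
    while i < len(body):
        ch = body[i]
        if ch.isspace():
            i += 1
        elif ch == '"':
            i += 1
            chars = []
            while body[i] != '"':
                if body[i] == "\\":  # escaped character: take the next one literally
                    i += 1
                chars.append(body[i])
                i += 1
            i += 1  # skip the closing quote
            tokens.append("".join(chars))
        else:
            j = i
            while j < len(body) and not body[j].isspace():
                j += 1
            word = body[i:j]
            tokens.append(int(word) if word.lstrip("-").isdigit() else word)
            i = j
    return tokens[0], tokens[1:]

entry = to_sexp("insert-text", 0, 'say "hi"')
name, args = parse_sexp(entry)
```

An interpreter would then look up `name` in a table of editor functions and call it with `args`, so that an S-expression generated by the LLM goes through the same path as one produced by the user.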

Imitation Learning

This gives a new paradigm for a ‘text editor’. Where Emacs is “everything is a buffer or Lisp function”, and vi is “everything is a keystroke”, for a neural assistant, “everything is user imitation”. All user actions and state transitions are stored as a sequence, and predicted by the LLM.

The LLM is now an imitation learning deep reinforcement learning agent, similar to a Decision Transformer like Gato or Toolformer. It is both the operating system and the user: it is training to predict the user’s actions in the text editor ‘environment’ to achieve good states (like a high-quality essay).

And because it is supervised by the user, its errors are corrected immediately, leading to a DAgger-like bootstrap: if the NN errs frequently in a particular way, it gets feedback on that class of errors and fixes it. This is because if a prediction doesn’t quite work, the user’s subsequent actions correct it: they either undo it and try again until they get the right thing, edit the wrong response into a right response (this can be a pervasive idiom, perhaps bound to a specific should-have-been function to edit anything that can be edited), or do the right thing manually. Each of these fixes the model to varying degrees, and ideally, will fix not just that specific instance or similar errors, but gradually improve the model’s responses as a whole by learning the joint function of user+corpus.

Because the model is being dynamically evaluated, these fixes happen immediately and these improvements stick—they do not simply disappear out the context window (or disappear into some distant cloud database to perhaps someday improve a model which may be deployed next year).

The more the user writes or does, the more the model learns to imitate it and the user switches from ‘doing’ to ‘approving’, and the more can be delegated to the NN. This goal of imitating the user also helps resolve security concerns about destruction or exfiltration.11

Warm-Starting

The LLM can be taught by demonstration to do many things, and new features created by the user simply by ‘hand-editing’ the feature. But it would be burdensome for the user if a Nenex came as a tabula rasa where they had to actually do so for every feature they might want (as interesting an exercise as ‘bootstrapping your own unique knowledge-base/wiki/editor from scratch’ might be).

So a Nenex’s LLM would come pretrained on a public ‘demo corpus’, a log which ran through demonstrations of all standard functionality. Users could contribute back additional demonstrations to ‘patch’ problems.12

Implementing Features

With this paradigm, we can now accomplish many ‘tools for thought’ goals, by expressing them as appropriately-formatted log items and then using the LLM to predict actions conditional on the current log and/or prompts.

Text completion remains as before, simply now wrapped in some sort of insert-text wrapper.

The suggested text completion is presented to the user through some sort of GUI or TUI interface, like grayed-out text or listed in a side pane. (A side pane for all the LLM ‘commentary’, akin to how streaming websites implement chat in a pane next to or below the streamed video, would be the obvious first stab at a GUI.)

If the user ignores the presented completions and continues writing, this automatically corrects the LLM in the usual way: those completions were not likely after all, and should not have been predicted; and vice-versa if the user does accept a completion. Nothing special need be done, merely the usual text-insertion recorded.

Copying/pasting: cut/copy/paste, in the simple familiar form and the more powerful forms supported by text editors (like Emacs’s kill-ring and registers), are the most common tasks after typing. In most cases, a few keystrokes is enough to specify everything, and involving an LLM would not help.

In more complex cases, of the sort that one might make heavy use of registers to store multiple different copied pieces of text while pasting them at various times, powerful text completion can provide the same functionality: the user ‘cuts’ several pieces of text (without any explicit decision about register storage), creating discrete atomic sections of text in the log, and then types the first word or two, and accepts a tab-completion of the rest of it from the LLM. This avoids the cognitive overhead of naming and remembering and specifying individual registers.

Abbreviations/templates: another common text editor power user feature is various sorts of ‘text expansion’: typing an acronym might expand out into the full spelled-out word or phrase, or an ID might expand out to some template like a form letter. These are generally subsumed by an LLM doing ordinary text-completion with sufficiently literal access to history, especially if the user made a habit of typing an ID or keyword first before the rote text.

Auto-Links: one of the greatest hassles of densely hyperlinked text is simply adding relevant links. Auto-linking is one of the annoying problems where past automated methods aren’t quite smart enough to make it work without a lot of effort, but doing it manually is both too easy & too hard. Each link has to be recalled & looked up, stressing fallible memory. A website like English Wikipedia can count on editors using specialized link-adding tools, but not a personal wiki. It is also not as simple as setting up regexp/URL pairs, as that will often misfire (or fail to fire), and even when all the text hits are valid, result in over-linking as well—usually, one only wants the first instance linked, well, unless the first instance is distant in the text or a reader might not have noticed it, in which case maybe one wants some later instances as well, depending on the context…

However, an LLM can easily memorize the full set of hyperlinks a user ever writes, and it can easily understand subtle contextual rules like ‘link the first one only—usually’. And link prediction is simply a subset of text prediction.
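For contrast, the regexp/URL-pair baseline criticized above can be sketched as follows. The rule table, the example.com URL, and the Markdown link syntax are all hypothetical; the point is only to show how rigid the ‘first instance only’ rule is when hard-coded.

```python
import re

# Hypothetical rule table: (pattern, URL) pairs.
RULES = [
    (r"dynamic evaluation", "https://example.com/dynamic-evaluation"),
]

def link_first_instances(text, rules):
    """Link only the first match of each pattern, using Markdown link syntax."""
    for pattern, url in rules:
        text = re.sub(
            pattern,
            lambda m, u=url: f"[{m.group(0)}]({u})",  # function avoids escape issues in URLs
            text,
            count=1,
        )
    return text

sample = ("Dynamic evaluation helps. Later, dynamic evaluation helps again; "
          "dynamic evaluation is cheap.")
linked = link_first_instances(sample, RULES)
```

Here the capitalized first mention is missed (a failure to fire), and the rule has no way to decide that a distant later mention deserves a link as well. Those are the contextual judgments the essay expects an LLM to learn from the user's own linking history.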

Spellcheck is automatically learned by logs of the user running spellcheck or simply editing erroneous words.

Like completions, spellcheck fixes itself. If the user rejects corrections, then that is logged and the model learns to predict the rejection and that it was not useful, and the word in question should be preserved & predicted.

Summarization/abstraction: most LLMs can be prompted to write abstracts for an essay.

Should this not be the case, or should customized abstracts be desired, abstraction can be trained by log entries which take the existing essays, the abstract removed, an abstraction prompt prefixed, and the abstract suffixed.

Sectionizing: creating a good set of hierarchical headers for an essay can be tedious.

This may not be promptable, but can be trained like summarization: construct the training examples by defining a sectionizing prompt, strip the sections from existing essays, and append them as the answer.

This learns online in the usual way: eg. the user runs the sectionize function, notes that one section header is bad, fixes it, and now there’s a new example in the log of ‘here is a bad header that the user corrected to a good header’, and the concept of ‘good header’ improves for next time.
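The construction of sectionizing training examples might look like the sketch below. It assumes essays are stored with Markdown-style `#` headers, that the prompt wording is free to choose, and that the answer records each header with the index of the body line it precedes. None of these details are specified in the proposal.

```python
import re

HEADER = re.compile(r"^#+ .+$")  # assumption: Markdown-style section headers
SECTIONIZE_PROMPT = "Add a hierarchy of section headers to this essay:"

def make_sectionizing_example(essay):
    """Strip headers from an essay; return (prompt + stripped body, headers as the answer)."""
    body, headers = [], []
    for line in essay.splitlines():
        if HEADER.match(line):
            # remember which body line the header came before
            headers.append(f"{len(body)}\t{line}")
        else:
            body.append(line)
    prompt = SECTIONIZE_PROMPT + "\n\n" + "\n".join(body)
    answer = "\n".join(headers)
    return prompt, answer

essay = "# Intro\nFirst paragraph.\n## Details\nSecond paragraph."
prompt, answer = make_sectionizing_example(essay)
```

Each existing essay yields one such pair for the log, and a user's later correction of a bad header is simply another pair of the same shape.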

Navigation actions: switching between different files (or ‘buffers’ in Emacs terminology) is another action to be logged and predicted.

It would not necessarily be useful to let the LLM autonomously switch buffers in the text editor, but it can predict a ‘default’ buffer to switch to. Given the context up to the present instant (which the LLM knows, because it is being dynamically evaluated & can see the latest log entries in its context window), the desired buffer is usually obvious—the user has switched to it repeatedly, or there is a regular cycle of buffer switches, or one buffer contains text relevant to the current paragraph.

As the LLM gets good, the user can simply tap a short easy keybinding to ‘do what I mean’.

Change control: selecting edits and summarizing them as a patch can be automated given user examples.

A user can designate a set of edits as a ‘patch’, write a summary, and ‘commit’ it to the log as an entry. The edits might take the form of a diff, or they might simply specify a range of log entries, like the previous 1000 log entries combined with the summary “wrote up today’s bike trip”.
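A commit of this second kind, a range of log entries plus a summary, could be recorded roughly as follows. The entry layout and function names are hypothetical.

```python
def commit_patch(log, summary, n_entries):
    """Append a 'commit' entry naming the previous n_entries log entries and a summary."""
    end = len(log)
    start = max(0, end - n_entries)
    entry = {"action": "commit", "range": (start, end), "summary": summary}
    log.append(entry)
    return entry

def patch_training_pair(log, entry):
    """Turn a commit into (edits, summary): the input/output pair a model would learn from."""
    start, end = entry["range"]
    return log[start:end], entry["summary"]

log = [
    {"action": "insert-text", "text": "Rode 40 km"},
    {"action": "insert-text", "text": " along the river."},
    {"action": "spellcheck"},
]
entry = commit_patch(log, "wrote up today's bike trip", n_entries=3)
edits, summary = patch_training_pair(log, entry)
```

Since the commit is itself a log entry, predicting a summary for a given range is the same next-entry prediction as everything else.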

Then this becomes promptable.

Arbitrary knowledge can be added into the log, wrapped in a null-op command.

For example, if we wish to teach the LLM about useful references, we could issue some sort of download-paper https://arxiv.org/abs/2023.1234 command, which would download the fulltext of the paper and insert that into the log. The LLM will train on the paper text, learning all about it, and will now understand our reference to “as Smith et al 2023 shows, it takes 20 GPUs to frobnicate a doodad”, and perhaps will point out that Smith actually said “10 GPUs”.

Or we could add in emails, or chats, or web page snippets, or keyboard logs. These inputs do not necessarily have direct uses, but the more knowledge about/from the author, the better.

(As the model is private and customized for the user locally, assuming no API use, this poses no privacy risks—presumably the author is reading their own essays before publishing them and would see any accidental leaks like publishing their address! We can also note that finetuning a remote model is a powerful form of obfuscation, which is a fact of particular interest to companies worried about subpoenas & legal discovery over training data corpuses; if you finetune the model and throw away the data, the model, like a human employee, keeps the capability/knowledge but has to be explicitly ‘questioned’ to extract any information…)

Plugging in better models: one source of ‘arbitrary knowledge’ particularly worth highlighting is bigger, better models.

When I talk about using the Nenex local model vs using a remote SaaS API model (especially an expensive frontier LLM), this is not a strict xor: we can use both! There is no reason that our local model cannot learn to call, when uncertain, out to a large model for assistance.

Let’s call these advisor models. You can simply dump the last n million tokens of context into another model(s), get the output (which will be highly intelligent, but limited by lack of personalization), and put that into the local model’s log to use, and then train on.

The more you do this, the more you ‘distill’ the relevant knowledge from the advisors into the local model, and the more the local model also learns when it would be good to call out to an advisor.
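A minimal sketch of the advisor pattern, under strong assumptions: the local model is imagined to return an answer together with a probability distribution, uncertainty is measured by entropy against a fixed threshold, and both models are stub functions. In the proposal the decision to call out would itself be learned from the log rather than hard-coded like this.

```python
import math

def entropy(probs):
    """Shannon entropy in nats; higher means the local model is less sure."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def answer_with_advisor(query, local_model, advisor, log, threshold=1.0):
    """Answer locally when confident; otherwise ask the advisor and log its output."""
    text, probs = local_model(query)
    if entropy(probs) <= threshold:
        log.append(("local-answer", query, text))
        return text
    advice = advisor(query)
    # Logged advisor output becomes training data, distilling it into the local model.
    log.append(("advisor-answer", query, advice))
    return advice

# Stub models standing in for a local LLM and a remote frontier LLM.
def local_model(query):
    known = {"what is Nenex?": ("a neural personal wiki", [0.97, 0.01, 0.01, 0.01])}
    return known.get(query, ("?", [0.25, 0.25, 0.25, 0.25]))

def advisor(query):
    return "advisor answer to: " + query

log = []
first = answer_with_advisor("what is Nenex?", local_model, advisor, log)
second = answer_with_advisor("what is frobnication?", local_model, advisor, log)
```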

Learned retrieval:

One of the advantages of an imitation-learning approach is that the NN can learn to approximate the output of arbitrarily complicated processes, such as larger NNs or humans or automated tools.

One example of something useful to approximate is vector search. Vector search tends to be inflexible because the embeddings were generated for generic purposes, and suffer from being a jack-of-all-trades.

A Nenex LLM, however, can learn customized retrieval. It has memorized the URLs of relevant works, and can suggest them in response to a query; as the relevant links get inserted in essays, this becomes more feedback.

It can even learn to imitate an actual retrieval database’s responses! When retrieval queries are executed and the results logged, that is training data. This can be combined with post-retrieval filtering: “look up Transformer papers related to (retrieve 'https://arxiv.org/abs/2023.1234')”, where the retrieval happens in the normal vector search way, and then the LLM filters out the non-Transformer papers, and returns the final matches URL1–n. This turns into a log item of (“look up Transformer papers…” → URL1–n), and at some point the user can just ask a query like “what’s the Transformer stuff for https://arxiv.org/abs/2023.1234?” and it knows.

Aside from being useful in its own right, such embedding imitation can help instill more general knowledge into the NN, better organizing its understanding of retrieved material.
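The retrieve, filter, and log sequence can be sketched with a toy in-memory index. Everything concrete here is an assumption: the two-dimensional vectors, the example.com URLs, the `topic` field, and the `keep` predicate that stands in for the LLM's filtering step.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve(query_vec, index, k):
    """Ordinary vector search: the k documents most similar to the query."""
    ranked = sorted(index, key=lambda doc: cosine(query_vec, doc["vec"]), reverse=True)
    return ranked[:k]

def retrieve_and_log(query, query_vec, index, keep, log, k=3):
    """Retrieve, filter with `keep` (stand-in for the LLM), and log (query -> URLs)."""
    hits = [doc["url"] for doc in retrieve(query_vec, index, k) if keep(doc)]
    log.append((query, hits))  # this pair is what the model later trains on
    return hits

index = [
    {"url": "https://example.com/a", "vec": [1.0, 0.0], "topic": "transformer"},
    {"url": "https://example.com/b", "vec": [0.9, 0.1], "topic": "rnn"},
    {"url": "https://example.com/c", "vec": [0.0, 1.0], "topic": "transformer"},
    {"url": "https://example.com/d", "vec": [0.8, 0.2], "topic": "transformer"},
]
log = []
hits = retrieve_and_log(
    "look up Transformer papers related to this one",
    [1.0, 0.0],
    index,
    keep=lambda doc: doc["topic"] == "transformer",
    log=log,
)
```

Once enough such pairs accumulate in the log, the model can be asked the query directly and answer from its weights, which is the imitation of the retrieval database described above.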

Outdated Pages: as you edit, and time passes, inevitably there’s ‘drift’, the natural language equivalent of bitrot. Can you solve this in Nenex? Of course.

You simply take the NN (which has memorized your wiki), and run it over each context window’s worth of text (perhaps packed with retrieval of similar text ranges), with the prompt “Is there any outdated or incorrect information in this passage? If there is, generate an edit fixing it”, and for each time that it predicts an edit, prompt the user with the context and the edit; if the user accepts it, it happens immediately, and regardless, it is added to the log and the model finetunes on it and restarts the site-wide update.

Each time it makes an edit or the user rejects an edit, it learns, and the site as a whole quickly updates any outdated passages. The user can’t read the whole site every time he updates something or a week passes… but the model can!
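One pass of this update loop could be organized as in the sketch below. The `propose_edit` and `ask_user` callables are stand-ins for the prompted model and the approval UI, and the log entry format is hypothetical. The finetune-and-restart step after each logged outcome is left out for brevity.

```python
def update_pass(passages, propose_edit, ask_user, log):
    """One site-wide pass: propose a fix per passage, ask the user, log the outcome."""
    for key in list(passages):
        edit = propose_edit(passages[key])  # None means nothing looks outdated
        if edit is None:
            continue
        accepted = ask_user(passages[key], edit)
        if accepted:
            passages[key] = edit
        # Accepted or rejected, the outcome is logged so the model can learn from it.
        log.append(("propose-edit", key, edit, "accepted" if accepted else "rejected"))
    return passages

# Stubs: a rule-based 'model' and a user who approves everything.
def propose_edit(text):
    if "does not handle JSON" in text:
        return text.replace("does not handle JSON", "handles JSON")
    return None

def ask_user(old, new):
    return True

passages = {
    "tool.page": "The tool does not handle JSON.",
    "intro.page": "A tool for logs.",
}
log = []
update_pass(passages, propose_edit, ask_user, log)
```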

You can imagine many optimizations to this. An embedding defines a graph, so any time the user edits a piece of text, this iterative update process can kick off at the edited text and do breadth-first traversal looking for text to update based on what the user just wrote. (eg. you have a bunch of pages documenting some software tool and you fire up the editor and write ‘version 1.0 can now handle JSON’ and then all the passages which mention ‘JSON’ get inspected by the model which says ‘well obviously this page which says “the tool does not handle JSON” is now out of date, let’s ask the user for an edit to [−not ].’)

Commentary/critique13: prompts can elicit different personas in the model to make writing suggestions.

Since the full power of the LLM is available, we can ask for writing advice & critiques from any angle. A user could package up a set of prompts into a single command, like M-x criticize-paragraph. The personas could be specified by the user, but one could just as well prompt the model to retrieve or generate a list to use.

The criticize function could be intelligently run by Nenex only when it sees a paragraph is finished and the user moved on to the next paragraph: if it’s a meaty paragraph, then it’s worth writing the critique, but if it’s a short paragraph, like a rhetorical statement, then it can be skipped. This would happen naturally as the user makes use of it.

Brainstorming: Since we have paid for a GPU, we might as well do more background tasks. One example of commentary/critique is trying to brainstorm new ideas; one could do things like a “day-dreaming loop” and retrieve random fragments of the corpus to attempt to generate novel outputs, and pass the most promising up to the user for evaluation.

External Links

“The Turing-Complete User” design philosophy