GPT-2 Neural Network Poetry

Demonstration tutorial of retraining OpenAI’s GPT-2 (a text-generating Transformer neural network) on large poetry corpuses to generate high-quality English verse.

- GPT-2-117M: Generating Poetry

- Training GPT-2-117M To Generate Poetry

- Training

GPT-2-poetry - Training

GPT-2-poetry-prefix - GPT-2-1.5b

- Overall

- Improvements

- External Links

- Appendix

In February 2019, following up on my 2015–2016 text-generation experiments with char-RNNs, I experimented with the cutting-edge Transformer NN architecture for language modeling & text generation.

Using OpenAI’s GPT-2-117M (117M) model pre-trained on a large Internet corpus and nshepperd’s finetuning code, I retrain GPT-2-117M on a large (117MB) Project Gutenberg poetry corpus. I demonstrate how to train 2 variants: “GPT-2-poetry”, trained on the poems as a continuous stream of text, and “GPT-2-poetry-prefix”, with each line prefixed with the metadata of the PG book it came from. In May 2019, I trained the next-largest GPT-2, GPT-2-345M, similarly, for a further quality boost in generated poems. In October 2019, I & Shawn Presser retrained GPT-2-117M on a Project Gutenberg corpus with improved formatting, and combined it with a contemporary poem dataset based on Poetry Foundation’s website; finally, we retrained the newly-released GPT-2-1.5b, which did not fit in our GPUs so we used TRC-supplied TPUs in a “swarm” to slowly finetune it.

With just a few GPU-days on NVIDIA 1080ti GPUs, GPT-2-117M finetuning can produce high-quality poetry which is more thematically consistent than my char-RNN poems—capable of modeling subtle features like rhyming, and sometimes even a pleasure to read.

I list some of the many possible ways to improve poem generation and further approach human-level poems. For the highest-quality AI poetry to date, see my followup pages, “GPT-3 Creative Writing”.

See Also: For anime plot summaries, see TWDNE; for generating ABC-formatted folk music, see “GPT-2 Folk Music” & “GPT-2 Preference Learning for Music and Poetry Generation”; for playing chess, see “A Very Unlikely Chess Game”; for the Reddit comment generator, see SubSimulatorGPT-2; for fanfiction, the Ao3; and for video games, the walkthrough model. For OpenAI’s GPT-3 followup, see “GPT-3: Language Models are Few-Shot Learners”.

OpenAI announced in February 2019 in “Better Language Models and Their Implications” their creation of “GPT-2-1.5b”, a Transformer¹ neural network 10× larger than before trained (like a char-RNN with a predictive loss) by unsupervised learning on 40GB of high-quality text curated by Redditors. GPT-2-1.5b led to large improvements over GPT-1’s natural language generation, is close to or SOTA on natural language modeling, and demonstrated high performance on untrained NLP tasks (see the paper for more details: “Language Models are Unsupervised Multitask Learners”, Radford et al 2019). By large improvements, one means that the best samples like the ones included in the OA announcement have started to reach an uncanny valley of text, capable of telling entire semi-coherent stories which can almost fool a sloppy reader; certainly, the verisimilitude is better than any char-RNN output I’ve seen. (A dump of many more samples is available on GitHub. There is also an interactive word-by-word “GPT-2-Explorer”.) The full GPT-2-1.5b model was not released, but a much smaller one a tenth the size was released in February 2019, which I call “GPT-2-117M” to avoid confusion.

GPT-2-117M was used in most initial experiments with GPT-2-based text generation. OA’s next largest models, GPT-2-355M & GPT-2-774M, were released in May & August 2019, and the final, largest, GPT-2-1.5b model was released in November 2019 (too late to be used in most of these experiments); 355M–774M turn out to just barely be trainable on commodity GPUs.² Also worth noting is the release of 2019’s independently-trained GPT-2-1.5b model, which produces good samples if perhaps not quite as good as the OpenAI GPT-2-1.5b, but which was not trainable at the time on desktop GPUs³ although it does still at least run (allowing for sampling/prompting if not training). OpenAI notes that “Since February, we’ve spoken with more than five groups who have replicated GPT-2”, and some have gone further than GPT-2-1.5b: replications/extensions include 2019, GROVER, Hugging Face (a NLP startup), XLNet, Nvidia’s MegatronLM, Google’s T5 (finetuning Colab), and MS’s DialoGPT.

GPT-2-117M: Generating Poetry

‘I don’t speak’, Bijaz said. ‘I operate a machine called language. It creaks and groans, but is mine own.’

Naturally, people immediately used GPT-2-117M for all sorts of things, and I applied it myself to generate surreal anime plot summaries & dialogue for “This Waifu Does Not Exist”.⁴ Even more naturally, just as with char-RNNs, GPT-2 models, even unfinetuned, work well for poetry:

GPT-2-117M completions of Allen Ginsberg’s “Howl”: “An Eternal Howl” (comments: 1); Rob Miles

Shelley’s “Ozymandias”: “GPT-2 Writes a Shelley Poem”

Alexander Pope’s Essay On Criticism: “GPT-2 As Step Toward General Intelligence”

8 famous opening lines from Tennyson, Yeats, Shakespeare, Henley, Whitman, T.S. Eliot: Peter Krantz

Kyle McDonald provided a tool around GPT-2-117M demonstrating ~154 prompts

“Ask GPT-2: Helpful Advice From A Confused Robot”: T.S. Eliot’s “Wasteland”

Samuel Taylor Coleridge’s “The Rime of the Ancient Mariner”: “FridAI: ‘Water, water, everywhere’, as read by Artificial Intelligence”

verse from a GPT-2-1.5b trained on a Google News corpus (‽) (using Grover)

CTRL appears capable of generating verse when prompted with the “books” genre, see the Github repository’s “Weary with toil…” example (CTRL uses a ‘prefix’ approach similar to mine, and the “books” prefix corresponds to Project Gutenberg text, so it is not surprising that its samples would resemble my GPT-2-poetry samples)

Transformer Poetry: Poetry classics reimagined by artificial intelligence, Kane 2019⁵

Kenyon College class projects: James Wright/John Donne/Taylor Swift

Poetry is a natural fit for machine generation because we don’t necessarily expect it to make sense or have standard syntax/grammar/vocabulary, and because it is often as much about the sound as the sense. Humans may find even mediocre poetry quite hard to write, but machines are indefatigable and can generate many samples to select from, so the final results can be pretty decent.

The quality of the results is limited by sometimes only having access to smaller models and difficulty in running larger models at all; that can’t be fixed (yet). But quality is also reduced by GPT-2-117M being trained on all kinds of text, not just poetry, which means sampling may quickly diverge into prose (as seems to happen particularly easily if given only a single opening line, which presumably makes it hard for it to infer that it’s supposed to generate poetry rather than much more common prose), and it may not have learned poetry as well as it could have, as poetry presumably made up a minute fraction of its corpus (Redditors not being particularly fond of as unpopular a genre these days as poetry). Finetuning or retraining the released GPT-2-117M model on a large poetry corpus would solve the latter two problems.

The poetry samples above did not exploit finetuning because OpenAI did not provide any code to do so and declined to provide any when asked. Fortunately, nshepperd wrote a simple finetuning training implementation, which I could use for adding more interesting samples to my TWDNE and for retraining on poetry corpuses to compare with my previous char-RNN poetry attempts back in 2015–2016 (see the top of this page). An alternative GPT-2 training implementation with support for training on GCP TPUs has been created by Connor Leahy (technical details), who trained a GPT-2-1.5b (albeit to substantially worse performance).

Training GPT-2-117M To Generate Poetry

Data: The Project Gutenberg Poetry Corpus

My heart, why come you here alone?

The wild thing of my heart is grown

To be a thing,

Fairy, and wild, and fair, and whole

GPT-2

For the poetry corpus, Allison Parrish’s public domain “A Gutenberg Poetry Corpus” (“approximately three million lines of poetry extracted from hundreds of books from Project Gutenberg”) will serve admirably. A few other possibilities surface in Google Dataset Search, like “Poems from poetryfoundation.org”, but nothing particularly compelling.

As far as the text formatting goes, GPT-2-117M is flexible: you can dump pretty much any text into a text file to use as the corpus, but some text formats are better than others. You want something which is as regular as possible (in both syntax & semantics), which is as close as possible to the kind of text you want generated, and which wastes as few symbols as possible. Regularity makes learning easier, and you don’t want to have to massage the output too much, but on the other hand, GPT-2-117M has a narrow ‘window’ and no memory whatsoever, so if each line is padded out with a lot of formatting or even just whitespace, one would expect that to considerably damage output coherence, as most of the fixed ‘window’ is wasted on meaningless repetitive whitespace, while other changes like replacing newlines with the poetic convention of ‘ / ’ are worse than nothing (since newline is 1 character vs 3 and maximally dense). Minimizing formatting also makes the cross-entropy loss easier to interpret or compare across datasets/runs: if there is a lot of formatting which is easy to predict or little formatting, the loss can look misleadingly good (or bad) as it easily predicts the formatting but struggles on more meaningful content.⁶

The PG corpus has a strange format: each line is a separate JSON object, consisting of one line of poetry and a numeric ID for the work it’s from. Fortunately, the file as a whole is in order (if the lines were out of order, training on them would destroy the long-range language modeling which is the Transformer’s raison d’être!), so to turn it into a clean text file for training on, we can simply query it with jq and strip out the remaining formatting. This provides a pretty good format overall: the newlines are meaningful, no symbols are wasted on leading or trailing whitespace, and it looks like what we want. It is imperfect in that metadata/formatting we would like to be there, such as author or poem title, is not there, and things we would prefer not to be there, like the prose prefaces of books or annotations, are, but it is hard to see how to fix those easily. Another flaw I learned about only afterwards is that the PG corpus has been censored ad usum Delphini to remove “egregiously offensive content” such as “racist/sexist/ableist” words like “Pakistan” or “homogenous” (while, of course, permitting innocuous words like “s—t” or “f—k”). This corpus is unsuited for any serious academic work, but it should be fine for playing around with generating poems.
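As a rough Python equivalent of the jq step described above, the following sketch reads newline-delimited JSON and emits one poetry line per output line. It assumes each JSON object stores the verse text under the key `s` (as the jq query used in this tutorial implies); the function name `ndjson_to_lines`, the `gid` field, and the two sample records are made up for illustration.

```python
import io
import json

def ndjson_to_lines(ndjson_stream, text_key="s"):
    """Yield the poetry line stored under `text_key` in each JSON record.

    Order is preserved, which matters: shuffled lines would destroy the
    long-range structure the Transformer is supposed to learn.
    """
    for raw in ndjson_stream:
        raw = raw.strip()
        if not raw:
            continue  # tolerate blank lines
        record = json.loads(raw)  # json.loads also decodes JSON escapes
        yield record[text_key]

# Hypothetical records in the assumed format (not a real corpus excerpt).
sample = io.StringIO(
    '{"s": "It is strange--my heart is heavy,", "gid": "1"}\n'
    '{"s": "\\"We will fight in bloody scuffle.\\"", "gid": "1"}\n'
)
lines = list(ndjson_to_lines(sample))
corpus_text = "\n".join(lines) + "\n"
print(corpus_text, end="")
```

For the real file, an open file handle would be passed instead of the `io.StringIO` stand-in. One difference from the sed approach: a JSON parser decodes escape sequences rather than deleting every backslash.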

Setting up the GPT-2-117M training environment & obtaining the poetry corpus:

git clone 'https://github.com/nshepperd/gpt-2.git'

cd gpt-2

source activate $MY_TENSORFLOW_ENVIRONMENT # set up your particular virtualenv

sh download_model.sh 117M # download original OA model

wget 'http://static.decontextualize.com/gutenberg-poetry-v001.ndjson.gz'

gunzip gutenberg-poetry-v001.ndjson.gz

cat gutenberg-poetry-v001.ndjson | jq .s | sed -e 's/^.//' -e 's/.$//' -e 's/\\//g' \

>> gutenberg-poetry-v001.txt ## delete JSON quoting

shuf gutenberg-poetry-v001.txt | head ## random poetry lines:

# For the black bat, night, has flown,

# The other's fate, Gaville, still dost rue.

# That make me mad. Oh, save me from those eyes!

# eyes, "w'y don't you talk straight out from the

# Make all there is in love so true.

# But p'r'aps I couldn't.

# And the soft ground turned to gravel,

# "'We will fight in bloody scuffle.'"

# For the fever'd dreams on thy rest that throng!"

# It is strange--my heart is heavy,

du -h gutenberg-poetry-v001.txt; wc gutenberg-poetry-v001.txt

# 117M gutenberg-poetry-v001.txt

# 3085117 21959786 121730091 gutenberg-poetry-v001.txt

There is an additional step before beginning training. GPT-2-117M works with text in a “byte-pair encoding”, which is somewhere in between a character embedding & a word embedding. The point of this BPE encoding is that it is somewhat more efficient than raw characters, because it can chunk more common sub-words or phrases & this gets more complete words or phrases into the Transformer’s fixed ‘window’ of n symbols, but BPE still assigns symbols to individual letters, and thus arbitrary outputs can be generated, unlike word-level NNs which are more compact but trade this off by having a restricted vocabulary of m words seen in the training corpus and must treat everything else as the unknown token <UNK> (especially bad for rare words like proper names or variants of words like pluralization or tenses). The training code will encode the text corpus at startup if necessary, but for 117MB of text this is so slow that it is worth the extra work to run the encoding process in advance & store the results before training on it:

PYTHONPATH=src ./encode.py gutenberg-poetry-v001.txt gutenberg-poetry-v001.txt.npz

Training GPT-2-poetry

The temptation of CPU training after a bad Tensorflow upgrade.

I assume you have a fully-working Nvidia CUDA & GPU-enabled Tensorflow installation, and have either run other DL code successfully or run the TF installation checklist’s MNIST toy example to verify that you have working GPU training. If you do not, I can give you no advice other than “good luck”. Debugging CUDA problems is the worst, and once you get a working setup, you should stick with it. If you just can’t solve the inscrutable crashes, you should look into using the free Google Colab GPU/TPU notebooks, or renting a cloud VM. (Training in the cloud is not as hard or complicated as it may seem, since you can pick a VM OS image which comes with CUDA/Tensorflow preinstalled and which will always work with the available GPUs.) You may be tempted to train on CPU to avoid the GPU-support mess entirely, but I advise against this: it will be at least 20× slower even if you have a many-core CPU like Threadripper, and it’ll save you time in the short run to switch to Colab or cloud or some alternative.

Then training proper can begin; my 1080ti⁷ can fit a minibatch size of 2 (GPT-2-117M is still a large model), and I’d rather not see too much output so I reduce the frequency of checkpointing & random text generation:

PYTHONPATH=src ./train.py --model_name 117M --dataset gutenberg-poetry-v001.txt.npz \

--batch_size 2 --save_every 10000 --sample_every 1000

Check your CLI options

The Python library “fire” used in the OA GPT-2 code is treacherous: it will not error out or even warn you if you typo a command-line option! Double or triple-check any new options you set against the available arguments defined by train_main in train.py, and keep this gotcha in mind if setting an option doesn’t appear to be doing anything.⁸ While nshepperd has removed use of “fire” in favor of saner CLI options, watch out for this if you are using the original OA code or other derivatives.
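One way to guard against silently ignored options in your own wrapper scripts is to parse arguments with Python’s standard `argparse`, which rejects unrecognized flags by default. The sketch below is illustrative only: the option names mirror the ones used in this tutorial, but `build_parser` and `parse_strict` are hypothetical helpers and are not the code in train.py.

```python
import argparse

def build_parser():
    """Hypothetical strict CLI; option names mirror those used above."""
    parser = argparse.ArgumentParser(description="toy training CLI (illustration)")
    parser.add_argument("--model_name", default="117M")
    parser.add_argument("--batch_size", type=int, default=1)
    parser.add_argument("--save_every", type=int, default=1000)
    parser.add_argument("--sample_every", type=int, default=100)
    return parser

def parse_strict(argv):
    """Return parsed options, or None if argv contains an unknown option."""
    try:
        return build_parser().parse_args(argv)
    except SystemExit:
        # argparse prints a usage message to stderr and exits on unknown flags.
        return None

good = parse_strict(["--batch_size", "2", "--save_every", "10000"])
typo = parse_strict(["--batch_sise", "2"])  # misspelled on purpose
print(good.batch_size if good else None, typo)
```

In a real script you would normally let the `SystemExit` propagate, so that a typo stops the run immediately instead of training with default settings.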

Some hyperparameters could use tweaking:

runtime, Temperature:

‘Temperature’ (0–∞) is used in sampling: the top-k most likely words are generated, and then selected randomly from; at 0, the most likely word is always chosen, while 1 means each is selected according to its likelihood, and it degenerates to a uniform 1 in k probability with higher values. In other words, the higher the temperature, the more chaotic or unlikely the generated sequences will be.

In the original nshepperd code release, the default temperature setting for the samples during training, 1.0, is not the usual 0.7 everyone uses for GPT-2 prose sampling—although it turns out for poetry we don’t want it at 0.7 as that forces too many repeated lines & 0.9–1 turns out to be much better, so use temperature in that range when generating samples. (Higher still may be better but I have not experimented with >1.)

If you are sampling after 2019-05-15, it may be a better idea to use a new sampling strategy, “nucleus sampling” (which essentially sets a different k at each step to avoid sampling extremely unlikely words and greatly reduces the repetition problem), which can be enabled like --top_p 0.9. (An interesting but untested sampling strategy is “tail free sampling”.)

train time, Learning Rate (LR):

A key NN hyperparameter as always.

In nshepperd’s code, the Adam SGD learning rate is left at its TensorFlow default of 0.001, which works initially, but appears to be much too high for this purpose (perhaps because the minibatch is so tiny on 1 GPU). After training overnight, the loss was not decreasing below 2.5, so I decayed it manually to 0.0001 & resumed training (editing line 136 of train.py to read tf.train.AdamOptimizer(learning_rate = 0.001*0.10)), eventually decaying it again (to 0.001*0.0001) to get it down to a loss of ~1.95. (nshepperd has since added a --learning_rate option so manual editing of the source is no longer necessary.)
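The sampling settings discussed above (temperature, top-k, and nucleus/top-p) can be illustrated with a toy sketch in plain Python. This is not the sampling code from the GPT-2 repository: the function names and the 4-token `toy_logits` are invented for illustration, and a real implementation would operate on tensors of logits over the full BPE vocabulary.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities after dividing by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def filter_top_k(probs, k):
    """Keep only the k most likely tokens, then renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep = set(order[:k])
    kept = [p if i in keep else 0.0 for i, p in enumerate(probs)]
    total = sum(kept)
    return [p / total for p in kept]

def filter_top_p(probs, top_p):
    """Nucleus sampling: keep the smallest set of tokens whose mass reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, mass = set(), 0.0
    for i in order:
        keep.add(i)
        mass += probs[i]
        if mass >= top_p:
            break
    kept = [p if i in keep else 0.0 for i, p in enumerate(probs)]
    total = sum(kept)
    return [p / total for p in kept]

def sample_token(logits, temperature=1.0, top_k=None, top_p=None, rng=random):
    """Pick one token index from toy logits using the settings discussed above."""
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])  # greedy choice
    probs = softmax(logits, temperature)
    if top_k is not None:
        probs = filter_top_k(probs, top_k)
    if top_p is not None:
        probs = filter_top_p(probs, top_p)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

toy_logits = [4.0, 3.0, 1.0, -2.0]  # made-up scores for a 4-token vocabulary
cold = softmax(toy_logits, temperature=0.7)  # sharper: favors the top token
hot = softmax(toy_logits, temperature=5.0)   # flatter: closer to uniform
nucleus = filter_top_p(softmax(toy_logits), top_p=0.9)
print([round(p, 3) for p in cold])
print([round(p, 3) for p in hot])
print([round(p, 3) for p in nucleus])
```

Lower temperatures concentrate probability on the most likely token (hence more repetition), higher ones flatten the distribution, and the nucleus filter drops the unlikely tail regardless of how many tokens that turns out to be.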

GPT-2-poetry Samples

After training GPT-2-117M for an hour or two, a sample:

# ...[14999 | 3855.62] loss=3.77 avg=3.62

# ======== SAMPLE 1 ========

# in her place before

# We could not see her smile.

# If, the dear one there are,

# That thou dost hide thy face--

# In thy own eyes, too, I shall see--

# A gentle smile, and smile.

# And I shall see thee, even as in that place,

# There be whom death shall be. By some, at least in some,

# Not to them I shall say; wherefore

# I have, to whom I will not say.

# For though I should be sad,

# 'Tis not to see them--no,

# No voice I can convey

# Or voice to me or voice--no,

# In a single case my own.

# They who, in all this state,

# Celestial are. The world

# Of heaven must have its own:

# And when that thou canst tell--

# That which I cannot tell,

# No matter whose.

# If I should tell thee then--

# That I should tell thee then--

# This man--but this man!

# That I should say to thee,

# That thou shouldst say to thee,

# It cannot be without,

# But that I shall speak to thee--

# Thou knowest not the way.

# Whence no speech! No tongue!

# In that I am not weak in thy power.

# No, no, I will speak to thee--

# I will speak for thee:

# For if it still do need

# I shall speak a word or an act.

# In that place, far away,

# On this sea, and that place!

# I will tell thee, and I will speak to thee.

# ...

Overnight samples during training:

# ...[115999 | 2375.96] loss=1.10 avg=2.31

# ======== SAMPLE 1 ========

# The sun is gone, and the night is late,

# The lights are few, and the chimneys close,

# And yet the moon hangs lonely

# Above the town, where the tides divide,

# On the shores of the silent tide.

# The tide is still, and the tide is at rest,

# And the sands are many, and many a breast

# And breast of the tide is weary,

# And far away the tide's last foam

# Stands silent under the waves;

# But the restless tide creeps slowly,

# And the tide is strong, and the tide is deep,

# And the sea, like a white-faced phantom,

# Floats motionless over the deep.

# The tide is past, and the tide is at rest,

# And the sands are many, and far apart,

# And the sands are many, and far apart,

# And the sea, like a white-faced phantom,

# Floats motionless over the sea.

# 'Tis evening, and he comes to me,

# With scent of flowers, from the village lane;

# And the wind is blowing faint and low,

# And the dew, like tears, on the hill-tops pale,

# Is drifting faint and cold from the eastern sky,

# As he floats adown the western wave;

# And the trees that bend their branches sigh,

# And the winds that waft a sigh,

# Are fading dimly away,

# As the tide goes wandering,

# Tossing waves of sand.

# And the cloud that floats and swells

# Has passed, like the wave on the bay,

# And the moon is slowly sinking low,

# As he sinks adown the western bay.

# There are waters blue and bright

# On the breast of the silent sea;

# And the foam is like a bright

# And lingering pearl of the sea.

# Oh, I would my heart might still

# Ever be where the waves are free.

# Where the foam-flakes fall and swell,

# Falling into the silent sea;

# Where the foam-flakes fall and swell,

# Falling into the silent sea;

# Where the foam-flakes fall and swell

# Falling into the silent sea.

# Oh, I would my heart might still

# Ever be where the waves are free.

# Far away from the sea and the tide,

# In a quiet little bay,

# Where the foam-flakes fall and swell,

# And the rocks are telling of a day

# When I would my heart could stay;

# Where the foam-flakes fall and swell

# Falling into the silent sea;

# Where the foam-flakes fall and swell

# Falling into the silent sea.

# Oh, I would my heart might still

# Ever be where the waves are free.

# With a love that never will,

# With a passion never still,

# With a memory never still

# For a love that never will,

# With a sorrow never still

# For a love that never will,

# With a passion never still,

# With a passion never still,

# With a passionate love that never will,

# With a sorrow never still

# For a love that never will.

# Oh, I would my heart might still

# Ever be where the waves are free.

# With a love that never will,

# With a passion never still,

# With a sorrow never still,

# With a passion never will,

# With a passion never will,

# With a sorrow never still,

# With a love that never will.

# With a love that never will,

# With a passion never will,

# With a sorrow never still,

# With a love that never will,

# With a sorrow never will,

# With a sorrow never still,

# With a love that never will,

# With a grief that never will,

# With a sorrow never will,

# With a love that never will.

# Oh, I would my heart might still

# Ever be where the waves are free.

# With a love that never will,

# With a sorrow never still,

# With a sorrow never still,

# With a sorrow never will,

# With a love that never will,

# With a sorrow never will,

# With a sorrow never will.

# With a love that never will,

# With a sorrow never will,

# With a sorrow never still,

# With a sorrow never will,

# With a sorrow never will,

# With a love that never will.

# With a love that never will,

# With a sorrow never will,

# With a sorrow never still,

# With a sorrow never will,

# With a sorrow never will,

# With a sorrow never will,

# With a sorrow never will,

# With a sorrow never will,

# With a love that never will,

# With a sorrow never will.

# With a love that never will,

# With a sorrow never will,

# With a sorrow never still,

# With a sorrow never will,

# With a sorrow never will,

# With a grief that never

# [116000 | 2385.67] loss=3.33 avg=2.32

# ...

# [133999 | 5919.61] loss=2.89 avg=2.21

# ======== SAMPLE 1 ========

#

# "When the night is gone and the sun goes out,

# "There goes my bride and my lover:

# "Can she never come to her wedding-ring

# "With the light of the seven candles?

# "Oh! to bed with the bridegroom and bride,

# "Or to lie with my lover!

# "He shall not come to my bed at night

# "For a little while longer.

# "She shall not come to my chamber

# "For a little longer."

# The night is spent, the sun has gone;

# The bridal of the bride hath done;

# The bridal of the bridegroom comes

# To wed the bridegroom's attour.

# I come to see the wedding-feast.

# He cries to me--"Wilt seek the bride,

# "Wilt seek the bridal?"

# "Oh! I have sought her mother's bower

# "And never found her! oh! my flower!

# "Would we should love as brides should do!

# "I shall not find her!"

# The bridegroom at the bridegroom's door

# Gave his bride a ring and a prayer.

# "Now, bridegroom, sing a bridegroom loud!

# "I shall not find her!"

# The bridegroom at the bridegroom's door

# Gave his bride a ring and a prayer.

# What means the bridegroom or the bride?

# The bridegroom's bridegroom waits to ride.

# And looks with wonder at the bride;

# "And does she dream?"

# "Oh! I have dreamed!

# "She dreams of my youth,

# "As one that hears--it cannot be -

# "The story of a marriage vow!"

# And a voice answers:

# "The story of a marriage vow!"

# And the words reach the bridegroom's door

# As the bridegroom at the bridegroom's door

# Kisses the ring with a bridegroom's kiss on his cheek.

# They are wed! They are wedded!

# Each is in his bridegroom's bower;

# Each hath his bride in his bosom now!

# And each hath his bride in his heart.

# She is wedded!

# With each is the bridegroom's bride!

# And love is a bridegroom's bride!

# She is wedded!

# With each is the bridegroom's bride!

# "They are wedded!

# They are wedded!

# They are wedded!"

# A man from a fair tower, where the birds of the air

# Fairer and fainter and fairer the flowers and the trees,

# When the sweet light fades from the gardens of day he found

# Came he in quest of a maiden, whose form was wildly wild,

# So she came at the summons. He came, and stood by her side;

# And he gazed on the dream of her marvellous face, and smiled;

# And he said: "I came by the river's side, when the day was still

# And I think of my bridegroom--he--whom ye all have a will!

# She is wedded!

# And he loves me not, my bride!

# And he looks at her eyes with a love that seems to him divine,

# Then he pines, and thinks of her eyes with an inward passionate shine

# They are wed! They are wedded!

# By the river's side they are, bridegroom, led!"

# He hath summoned her maiden with the will, and she answers

# That he needs her for his bridegroom still.

# A man from a fair tower, where the birds of the air

# Fairer and fainter and fairer the flowers and the trees,

# What is the meaning of that--no--is it, Lord, where ye bear

# All the wonders of the world in this fair maiden's hair?

# What doth the meaning of that--no--nor what doth she know,

# She is wedded!

# What doth the meaning of that--no--she hath chosen so?

# She is wedded!

# She is wedded!

# She is wedded!

# She is wedded!

# She is wedded!

# She is wedded!

# She is wedded!

# And the will is good,

# In the light of days,

# In the heat and stress,

# It is wise and wise

# To be wedded!

# She is wedded!

# And the love is good,

# In the light of days,

# It is hard to live

# And bear the wreath!

# And he girdeth her to his bosom, and thinks of her

# As she stands, all white, in the open air,

# With the full moon facing her face; and her breath

# Cries and whispers and wails and cries

#

# ...[134000 | 5929.46] loss=2.12 avg=2.21

#

# ...[135998 | 6934.07] loss=1.96 avg=2.16

# [135999 | 6934.58] loss=3.06 avg=2.17

# ======== SAMPLE 1 ========

# re

# Their love and beauty are as one in dream,

# A visible sign of the things that were.

# But I have seen these things by all men's eyes,

# Felt them as kindred of man's earthly life,

# And with the instinct of the unseen spirit

# Been caught by likeness of the thing he is.

# My love is but the wind, and I have blown

# From earth to where I am, and I have seen

# The things that no man dreamed of; yet at last

# I know by my soul's sense of a sense of things

# That are not, and may be, but the things that were;

# And yet I know these things are not, but are

# As earth and heaven, if earth and heaven and hell

# Are but the same things that it seems. Yea, then

# I am the wind. God knows the ways of men,

# He knows the insensate secrets of delight,

# And they are mysteries, if there be any to be seen."

# But with that word the wind in wonder strode.

# He heard the rustle of the leaves, and saw

# The shadows move about him, and he leaned

# Against the doorway like a god, and knew

# The inner meanings of the leaves and streams.

# There where the trees lie down at their root-holes,

# There where the wind smells of the blossoming boughs,

# He saw the grass, and felt the green blades come,

# As if it were the buds and boughs upon air,

# And heard the green birds sing. He saw the fields,

# The trees, the rivers, and the flowers within,

# The birds, the grasses, and the living things,

# And the strange river on the shore that rolls

# Through all its quiet marge into the sky.

# There let him live till time should come, and then

# Let love be like the heaven, and we be one

# To love, and not be one, being all in all.

# And if he had not done me the good work

# Had it been well not I. The things that he said

# Should never be fulfilled by simple sense;

# For all must have a meaning in themselves.

# But he that works out of his mind is one

# With whom the things that are and are not are,

# And makes them meet and good. 'T were a good thing

# For him to work and win for me, and so

# If he were not I would have it all.'

# But he that lives and not lives in the world

# Was not more worthy of the hand of Fate,

# And knows life's meaning, and would seek for it

# Through failure, and in death's despite. For him,

# Who hath been stricken with me through the brain,

# Forget to tell me how his brother, he

# Whom he had saved and murdered--so let it be

# By some great memory left.

# But at last,

# As I said this, he saw me, and he said

# To one, whose face was grey with tears in me,

# "What is it? let me tell you who I am.

# Do you see the things that you have seen before?

# What is it?"

# "They are more wise

# Than wise men think of wisdom and good will,"

# Replied the other. "What I deem is good.

# The gods are good to mortals as they are,

# And they know well whereby we are born: but they

# Who have loved God and died to him the most

# Of all the gods are fallen into ill things:

# For God we know is good, and hath not been,

# And therefore must be, so it be, with men

# Who love, and love because we loved them not.

# Alas, I do not think that God alone

# Hath power over the earth to let the gods

# Face to face with the world. I hate at times

# The gods that made them: the gods that knew

# Their names are our own gods, and would not know

# One other reason, for I have the power,

# And all the gods are fallen into ill things."

# Then she said to me, "What may have been

# To have known, before I came into this land

# To find you in some other place and knew you,

# And know you, seeing so many and strange,

# And knowing such a godlike way to go

# Among the gods and suffer such long-sought.

# I can take my crown of gold and wear a garland,

# Take some crown for my sake, and the happy crown

# And let it be for all the years long held

# That I have known, and felt so like a god

# Some few suns live. My heart is all in all

# To live again, my life upon earth dead."

# So I said to the god that loved me well

# And longed to have him come back into my prayers,

#

# [136000 | 6944.39] loss=3.15 avg=2.18

# ...

The loss here is the usual cross-entropy we often see in architectures like a char-RNN. Typically, the best text generation results come when the model has trained down to a cross-entropy of <1, and 2–4 tend to be incoherent gibberish. (For example, in Andrej Karpathy’s Tiny Shakespeare.) That loss is per character, while GPT-2 operates on BPEs, which usually encode multiple characters, so are harder to predict; it seems to me that the conversion factor is ~2–3, so a GPT-2 model should aim for a loss of <2 if a good char-RNN would reach losses like <1. In this case, GPT-2-117M’s original poetry modeling capability is not too shabby (as demonstrated by the various prompted samples), and it shows decent poetry samples starting ~3.5. (Gibberish seems to set in at losses >6.) Given how large & powerful GPT-2-117M is, even with this much poetry to work with, overfitting remains a concern: memorizing poetry is not amusing, we want creative extrapolation or mashups.
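To make the per-character vs per-BPE comparison concrete, here is a small sketch of the conversion. It assumes the reported losses are natural-log cross-entropies (nats), and it takes the ~2–3 characters-per-BPE factor estimated above as given rather than measured; the helper names are hypothetical.

```python
import math

def per_char_loss(per_token_loss, chars_per_token):
    """Spread a per-BPE-token cross-entropy (nats) over the characters it covers."""
    return per_token_loss / chars_per_token

def perplexity(loss_nats):
    """Perplexity is exp(cross-entropy) when the loss is measured in nats."""
    return math.exp(loss_nats)

# Conversion factors of 2 and 3 bracket the rough estimate in the text.
for factor in (2.0, 3.0):
    print(factor, round(per_char_loss(2.0, factor), 3))

# Per-token perplexity at a loss of 2.0: roughly how many equally likely
# next tokens the model is effectively choosing between.
print(round(perplexity(2.0), 2))
```

Under these assumptions, a per-BPE loss of 2 corresponds to a per-character loss somewhere between about 0.67 and 1, which is why a target of <2 for GPT-2 lines up with <1 for a char-RNN.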

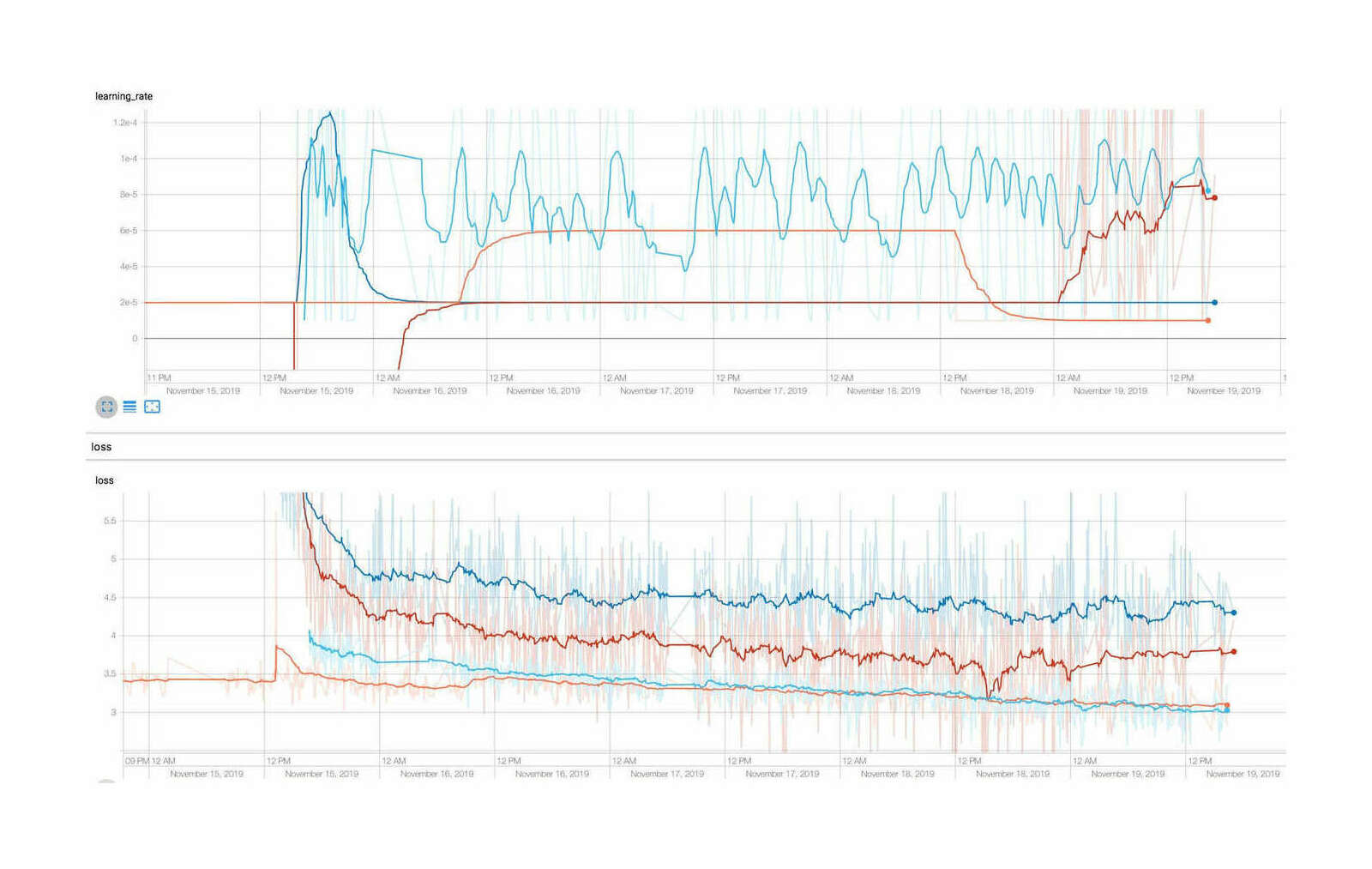

For this model & dataset, I trained for 519,407 steps to a final loss of ~2 in 72 GPU-hours; almost all of the learning was achieved in the first ~16 GPU-hours, and training it additional days did not do any apparent good in terms of the loss itself.9 This suggests that GPT-2-poetry was underfitting the poetry corpus & would benefit from an even larger model size.
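To inspect how the loss evolves over a run like this, one could parse the training log lines of the form shown in the excerpts on this page (`[step | time] loss=… avg=…`). The following is a minimal sketch; the function names are hypothetical, and reading the second field as elapsed seconds is an assumption based on how the logs look.

```python
import re

# Matches lines such as "[136000 | 6944.39] loss=3.15 avg=2.18",
# optionally preceded by "# " as in the excerpts on this page.
LOG_LINE = re.compile(
    r"\[(\d+)\s*\|\s*([\d.]+)\]\s*loss=([\d.]+)\s*avg=([\d.]+)"
)


def parse_log_line(line):
    """Return (step, elapsed, loss, avg), or None if this is not a log line."""
    m = LOG_LINE.search(line)
    if m is None:
        return None
    step, elapsed, loss, avg = m.groups()
    return int(step), float(elapsed), float(loss), float(avg)


def parse_log(lines):
    """Collect all parseable log records, skipping sample text."""
    return [rec for rec in map(parse_log_line, lines) if rec is not None]


example = [
    "# [136000 | 6944.39] loss=3.15 avg=2.18",
    "# And all the gods are fallen into ill things.",
]
print(parse_log(example))
```

The resulting tuples can be fed to any plotting tool to see where the running average flattens out.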

Downloads:

Before sampling from any new finetuned version of GPT-2-117M, remember to copy encoder.json/hparams.json/vocab.bpe from the GPT-2-117M model directory into the new model’s directory. I find higher temperature settings work better for poetry (perhaps because poetry is inherently more repetitive than prose), and top-k appears to work fine at OA’s top-40. So unconditional sampling can be done like this to generate 2 samples:

python src/generate_unconditional_samples.py --top_k 40 --temperature 0.9 --nsamples 2 --seed 0 \
    --model_name 2019-03-06-gwern-gpt2-poetry-projectgutenberg-network519407

# ======================================== SAMPLE 1 ========================================

# --

# And it must be decided.

# It must be decided,

# And it must be decided.

# It must be decided,

# And it must be considered.

# It will be decided,

# Though the hill be steep,

# And the dale and forest

# Hold the land of sheep.

# And it must be decided,

# There's a jolt above,

# And its paths are narrow,

# And its paths are long.

# Yes, it is decided,

# And it is completely.

# All the hills are covered

# With grey snowdrifts,

# Shaded with a shimmer of misty veils,

# And the hills have a shimmer of hills between,

# And the valleys are covered with misty veils,

# And there lie a vast, grey land, like a queen,

# And they are not, in truth, but many and many streams,

# O'er the purple-grey sea whose waves are white

# As the limbs of a child of ten. And there

# The river stands, like a garden-fair

# In the valleys of the north, the valleys of the west,

# Blue and green in the summer, and runneth softly forth

# To the blue far upland beyond the sea;

# And over the high white upland far away

# Floats a white and tender water, and wearily

# Through the trees the rosiest water-lilies play

# In the sun, and rise and fall--the purple and red

# Of the streams. The waters are hidden in their bed

# By the stone o'er the darkling hills. The waters run

# Like a ringlet under the stone. The water flows

# Through the rocks like a river, and the stream

# Is a ribbon of gold spun by the sun. It gleams

# Like a gold sunbeam shining through the gleam

# Of a sudden silver, and silently falls

# On the pool, and is lost in the darkling deeps--

# Sink, sink in the shadows, ere it flee

# Into the darkling depths. And the waters sleep

# In the light of the moon and the silver of dawn,

# And silently float past the mountains of heaven.

# As we gazed the city fades into the clouds

# Of the sky, and we are above the roofs.

# And suddenly as the moon, flurrying,

# Dazzles the sea with her swan-throated song,

# And there is a faint far singing of birds,

# And a sound from the land, as of swarming seas,

# The grey sea, and the land that hideth rest,

# And the sky that hides the lovely green of God.

# So we are caught, like the moving sea,

# That calleth unto its sleeping

# Soft and still, like the moon that calleth

# In the twilight depths vast and hoary--

# Till we see the City changing toward the dark,

# And its changing towers in the distance darken.

# In the city is a calm and quiet street,

# Full of sunlight, and a smell of rain,

# That falls from unseen towers like soft white feet

# On sleeping city's rue and misty pane.

# There is peace, and a vague peace over death,

# And a far-off singing in the city's breath.

# And all fair cities must go to dust,

# And every body be one tomb--

# And all white houses dwindle and grow dull,

# And the city's breath is a dull death-blow.

# But this place is a place of peace and trust,

# And it is but a little street,

# Whose idle heads and sunken faces

# Are bright with light that makes them bright.

# Then it is not alone fair Town that lies,

# With open pillared streets beneath a sun,

# And many a weary world and dusty town,

# And a sunflowers and a great tide onward run

# In the blue of the heavens that are not gray,

# But only blue and pale, like tender wings

# Sailing with wide-spread, languid, luminous eyes.

# This place is the very heart of it,

# Whose quiet hours with its peace throng

# The silent nights and the perpetual sea.

# The City slept with her silent towers,

# A stream that ran in an idle stream,

# And a mist hung at the windows of the tower.

# And it was a street--a sunlit dream,

# A dream of a world that lay

# Open in the summer morning,

# And in its heart a joy all gay.

# For its sunshines and palaces were there,

# Till a wind came softly here.

# And it was a new, new city,

# A city that arose in the early morning;

# That opened its gates on June morning,

# With a sunset and a moonrise sweet.

# The city was a cathedral;

# And out of the sound of the bells and t

# ======================================== SAMPLE 2 ========================================

# of the world

# The best, that, when once dead, is found again.

# And what is this? Where can we find a place,

# Save in the solitude, where he may be

# The friend of all beneath the sun, and be

# An unseen presence, if the traveller's eye

# Can follow where he cannot: there he stands

# Dark in majestic pomp, like those whom owls

# Could once have told down with a lion's maw.

# His form is like his fathers, and the crown

# Of all his race: the very colors are

# As his to-day, which we must see and bear;

# The only parent is the creature's he.

# His face, where we have marked it, is but veiled

# In twilight, when we see, and he appears

# Himself in all his nature--where, if man

# Can recollect, he saw it in the frame:

# 'Tis clay wherever found--and so is called,

# When nature gives him back her clay. It means

# That clay was form'd; but clay is form'd elsewhere;

# He needs must feel through all this frame, and, lo,

# The horse he rears, is human in his mind.

# So too, his nature is a thing apart

# From the great Nature, which has made him thus

# A likeness of himself: and he beholds

# The creatures that he knows, and not intends

# To visit them, and only in their hearts

# Deserts them; and if they come indeed,

# And if the sea doth bring them, then the man

# Is still a child of theirs. He can recall

# His mother's features and the father's look.

# And often he has said that he foresaw

# The sea, the winds, that he may all at will

# Be sea. In short, the man is all he sees.

# He fears the sea may hurt him.

# Lashed to the helm,

# The ship was in the sea, and, on its moor

# And the sails furled, in silence sat the maid

# Motionless, like a star; no sound was heard

# Save of the distant ocean's fitful hum;

# The sounds of tempest came to him, his ears

# Mercurially listless, and his heart

# Disturbed like a distempered sea; he stood,

# And gazed from heaven in an unblest thought;

# He had not heard his mother's voice; he gazed;

# The mother's look was of a loftier mood;

# He had not heard his own; he had not heard

# What ever was, where his own heart has been;

# He had not understood the very thought

# Of his own heart, where life could find no shore.

# The sea beats on: the vessel's bell strikes six:

# Dive down, O death! to earth, to heaven! to heaven!

# And it is sweet thus to be two souls alone:

# Dive down for home, and to the air renounce

# The galling bonds of everlasting life

# In some lone bark, that, dying, to the last

# Are still as death without her: so to him,

# The mother's voice, still sweeter, spoke of home;

# And as the young man fell upon her breast,

# The mother's oracle, the words of death,

# Even as he spoke, a living death arose:

# He feels his heart rise, and ascend the sky.

# The wreck shall surely reach the sea; he dies,

# A mortal change, as earth, in which it was;

# And God, though dead, had still a dying man.

# But when they parted, he can never die.

# There are thousands, yes, there are thousands who,

# Without a mother, could not die unheard

# Of by a hand unseen: yet some are sad,

# Lonely and wretched here, without a mate;

# Or if the grave touch, the great hearts' light

# Have no soft touch, even of a brother's grief

# Scarce suffered, they shall each a new life yield;

# And one, once more on earth, to heaven, or God,

# Shall meet his father's face, or bless his grave.

# Not vainly on these mocking thoughts he breathes;

# They sink to nothing when he sinks to rise:

# The tears of fatherly compassion reach

# The mother's eyelids, her, but not her eyes.

# And now a voice was heard by the wild bird,

# With words of comfort from the infant boy.

# Oh, had it stayed the angel's birth, and then

# Those tresses streaming, would have felt the strain

# For the bright star, and for a glorious man.

# It is a noble deed: and, through the world,

# Doth woman triumph, though she suffer loss

# And poverty and pain, and,

Not bad.
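For readers unfamiliar with the `--top_k 40 --temperature 0.9` settings used in the sampling command above, here is a minimal pure-Python sketch of what temperature scaling plus top-k truncation do to a vector of next-token scores. It is an illustration under simplifying assumptions (a plain list of logits, a hypothetical `sample_top_k` helper), not the sampling code actually used by the GPT-2 scripts.

```python
import math
import random


def sample_top_k(logits, k=40, temperature=0.9, rng=random):
    """Sample an index from `logits` using temperature scaling and top-k truncation."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Keep only the k highest-scoring candidates.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Temperature < 1 sharpens the distribution; > 1 flattens it.
    scaled = [logits[i] / temperature for i in top]
    # Softmax weights over the survivors (subtract the max for stability).
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(top, weights=weights, k=1)[0]


toy_logits = [2.0, 1.0, 0.5, -1.0, -3.0]
rng = random.Random(0)
draws = [sample_top_k(toy_logits, k=3, rng=rng) for _ in range(10)]
print(draws)
```

With `k=3`, only the three highest-scoring indices can ever be drawn; with `k=1` the procedure reduces to greedy decoding.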

Cleaning Project Gutenberg & Contemporary Poetry

In the dark the sun doth gleam,

And in the dark the moon doth seem

But now the evening is begun—

Gone is the sun upon the earth!

The silver moon doth like a cup

Of blood-red wine, and as that cup

Is drained of life, doth quench no drop.

What man will drink such wine?

GPT-2

Shawn Presser cleaned the Project Gutenberg poetry by using a heuristic on line numbers to guess where poems begin/end. This provides useful semantic metadata to the GPT-2-117M model, reducing “runon” or “ramblingness”, as it sees many discrete texts rather than a few book-length texts. I combined this improved PG poetry dataset with a new dataset on Kaggle, which scraped the Poetry Foundation website for modern/contemporary poetry, fixing the post-1920s emptiness of PG. The generated poems are much better.

Shawn Presser noted the issues with the Project Gutenberg corpus and, since book-level transitions are already solved by the book IDs, suggested a heuristic for reconstructing the blank lines that (presumably) denote stanzas: use the book IDs (GIDs) from the original JSON for book-level transitions, and treat gaps in the otherwise-consecutive line numbers as likely transitions at which to insert newlines. (Imperfect, but better than nothing!) Since stanzas within a book are still connected, <|endoftext|> is used for the book-level transitions and a blank line for the stanza-level ones, preserving as much of the semantics as possible.

Presser’s Python script:

import json
import os
import sys

def split_by_book(poems):
    # Group consecutive entries that share the same Project Gutenberg book ID.
    prev_gid = -1
    r = []
    for entry in poems:
        gid = entry['gid']
        if gid != prev_gid:
            if prev_gid != -1 and len(r) > 0:
                yield r
                r = []
        prev_gid = gid
        r += [entry]
    if len(r) > 0:
        yield r

def split_by_transition(poems):
    # Start a new group wherever the line numbers stop being consecutive.
    prev_line = -2
    r = []
    for entry in poems:
        line = entry['line']
        if line != prev_line + 1:
            if prev_line != -2 and len(r) > 0:
                yield r
                r = []
        prev_line = line
        r += [entry]
    if len(r) > 0:
        yield r

def extract(entries):
    return '\n'.join(list(map(lambda x: x['s'], entries)))

def final(poems):
    for book in split_by_book(poems):
        # Book-level transition.
        yield '<|endoftext|>'
        for stanza in split_by_transition(book):
            # Stanza-level transition: only longer runs get a preceding break.
            if len(stanza) > 5:
                yield '\n'
            for entry in stanza:
                line = entry['s'] # {
                line = line.rstrip('} ')
                yield line

def output(poems, fname):
    with open(fname, "w") as f:
        for line in final(poems):
            f.write(line + '\n')

def main():
    print('Loading poems...')
    with open("gutenberg-poetry-v2.ndjson") as f:
        poems = [json.loads(line) for line in f]
    print('Loaded.')
    output(poems, "corpus.txt")

if __name__ == "__main__":
    main()

This is converted to the NPZ and trained as usual. I retrained the previous non-prefix GPT-2-117M PG poetry model for ~30k steps (>16h?) down to a loss of ~1.73. (I used GPT-2-117M instead of GPT-2-345M for compatibility with my concurrent experiment in preference-learning training.)

The results are quite nice, and competitive even with 345M. Some selected samples:

The gods are they who came to earth

And set the seas ablaze with gold.

There is a breeze upon the sea,

A sea of summer in its folds,

A salt, enchanted breeze that mocks

The scents of life, from far away

Comes slumbrous, sad, and quaint, and quaint.

The mother of the gods, that day,

With mortal feet and sweet voice speaks,

And smiles, and speaks to men: "My Sweet,

I shall not weary of thy pain."

...Let me drink of the wine of pain

And think upon the agonies of hope,

And of the blessed Giver of all good things;

For, man for man, mine is the deepest love

That sorrow takes upon the humblest soul;

But who hath learned how sorrow turns to gall

The places where my feet have trod before.

...And 'stead of light, o'er earth, o'er rocky mountains,

A slowly falling star,

Its pointed pointed splendor far uplifting,

Heaven's flowery path bore down;

Each cranny of the air a gracious feeling,

It waved divinely round,

It called us hence, "Come what wouldst thou here?"--

Sweet mountain, that I love,

With that bright tint of heaven above,

'Twould make me still to see

One like to thee,

As fades the light that seeks the wandering eye.

...The skies are smiling sweetly on,

And summer's fairest hours are gone.

Oh, blessed Mercy! how the blest

Taste life itself can truly taste.

Thy morn of days, with all its past,

May on life's tempest paint the last.

...When you come to die,

Every nerve and bone

Soon lulled in sleep,

Secure and free,

Sleep will seize on you.

When you come to die,

Every nerve and bone

Soon lulled in sleep,

Sleep will seize on you.

When you come to die,

Every nerve and bone

Soon lulled in sleep,

We'll still be free,

And you'll never escape from our woe!

...I would be all that I can do

And this to carry with me

Along with me, O brother,

And bid my lagging days relent

For every worthy deed done,

And glorious though the world be,

They never will repent me,

But in God's name endureth ever,

Whose blessed hope my soul abides

For refuge through the awful doors of death.

...We are old men, who pass

On the sands with gaze

Out of the narrow world of fashion;

We are old men, who stay

On a river's flow

And a common day

Where the life of youth is waiting,

And a longing grows

For the world of youth and beauty

Where the old man goes.

...When I am dead, my dearest,

Sing no sad songs for me;

Plant thou no roses at my head,

That by that token may grow cold.

My dirge shall be a muffled noise,

My trentals stiff with dread,

For he who once his faith hath won

Will never know it read.

...O beautiful, golden-bosomed ships!

O sunburned ships on the sea; O ship which breams

Above the waves and beams; O songs of love

Sent from the wide West, that shall sing us songs

In our hearts afar, as a summer star.

While training that, I recalled that my other major beef with the PG corpus was its absence of more contemporary poetry. I didn’t really feel like trying to scrape the Poetry Foundation (PF) website myself, but I gave Google Dataset Search another try and to my surprise, discovered a scrape had surfaced on Kaggle. Aside from being large, it comes with interesting metadata: the title and author, but also a somewhat eccentric set of ‘tags’ describing the poem. They would be nice to use via the inline metadata trick, allowing some degree of controllability (like my use of author or book ID prefixes, or CTRL’s use of subreddits).

The Kaggle Poetry Foundation scrape has numerous issues I had to fix before its format was acceptable for GPT-2:

- removed prefixed whitespace, trimming leading/trailing whitespace in all fields
- replaced 3+ spaces with newlines
- deleted all 2+ spaces
- dropped poems with <100 characters (generally a scrape error)
- removed Unicode junk
- serialized it as title+author+tags (if any) / poem / <|endoftext|> (ie. the inline metadata trick, allowing for potentially better learning and a small degree of control in conditional generation)

The final formatted corpus is available for download.

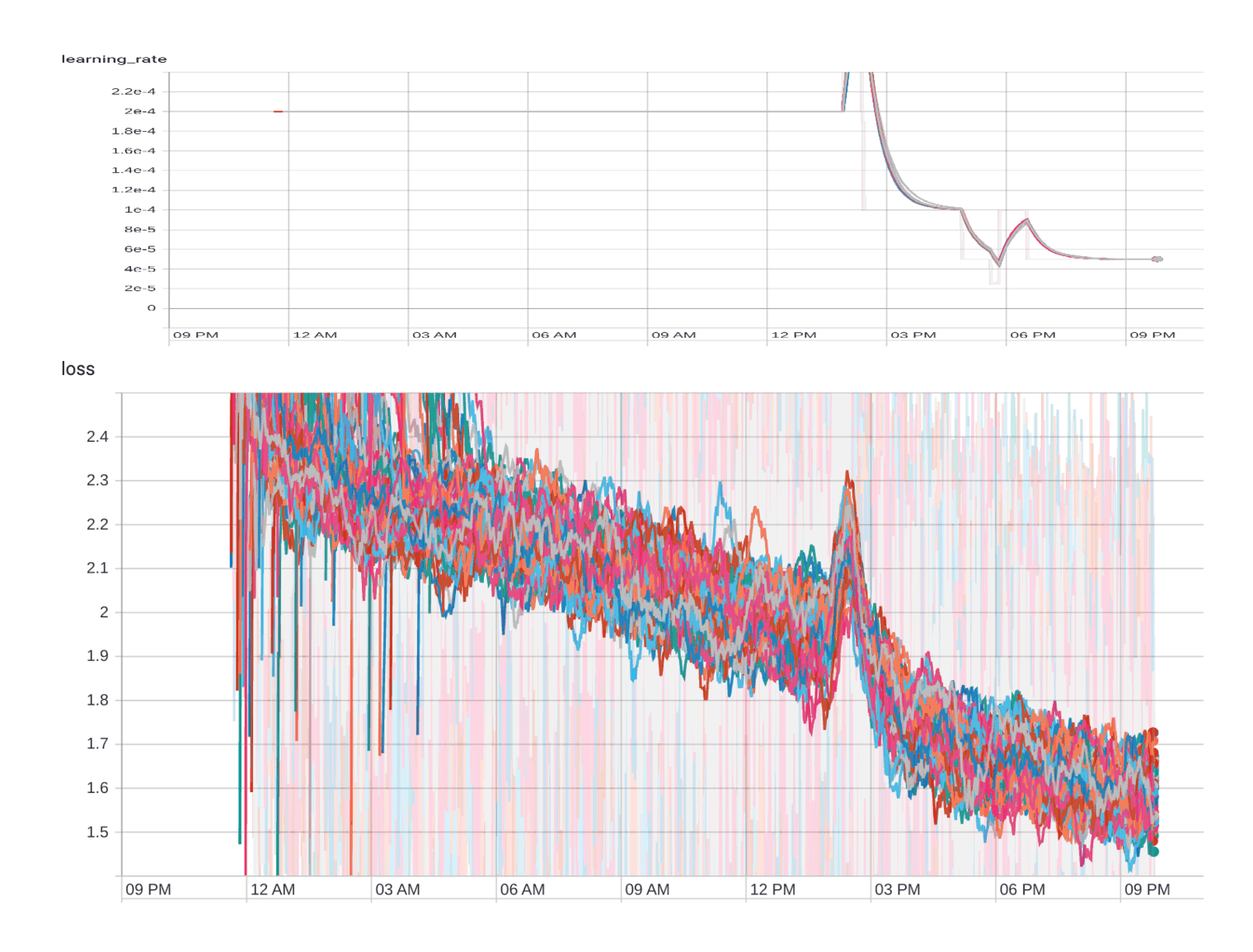
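The Poetry Foundation cleaning steps listed above can be sketched roughly as follows. This is not the script actually used: the function names are hypothetical, "deleted all 2+ spaces" is read here as collapsing runs to a single space, "Unicode junk" is approximated as control/format characters, and the header layout (`"Title", by Author [tags]`) is inferred from the generated samples.

```python
import re
import unicodedata


def clean_field(text):
    """Apply the whitespace fixes described above to one field."""
    text = text.strip()
    # Runs of 3+ spaces appear to stand in for lost line breaks.
    text = re.sub(r" {3,}", "\n", text)
    # Assumption: remaining runs of 2+ spaces are collapsed to one space.
    text = re.sub(r" {2,}", " ", text)
    # Assumption: "Unicode junk" means control/format characters (newlines kept).
    text = "".join(
        ch for ch in text
        if ch == "\n" or unicodedata.category(ch) not in ("Cc", "Cf")
    )
    return "\n".join(line.strip() for line in text.split("\n"))


def format_poem(title, author, tags, poem, min_chars=100):
    """Return one serialized training example, or None for too-short poems."""
    poem = clean_field(poem)
    if len(poem) < min_chars:
        return None  # generally a scrape error
    header = '"{}", by {}'.format(clean_field(title), clean_field(author))
    if tags:
        header += " [{}]".format(", ".join(clean_field(t) for t in tags))
    return "{}\n{}\n<|endoftext|>\n".format(header, poem)


demo = format_poem(
    "  Title ", "Author", ["Nature", "Fall"],
    "line one   line  two " + "x" * 100,
)
print(demo)
```

Putting the metadata on the first line of each example is what later allows a title/author/tag line to be supplied as a prompt.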

Once the cleaned PG was done, I then swapped out the PG for PF dataset and began finetuning. (I could train on the combined dataset, but the PF dataset is only 20MB and at 1⁄6 the size of PG, training on that would take a long time to pick up on PF’s details.) Surprisingly, the PF dataset trained down to ~0.60 loss after ~10k steps, as compared to PG’s ~1.73, a decrease much greater than I had expected from providing some metadata, suggesting that modern poetry, being so prose-like, is much easier for GPT-2 to model—which doesn’t strike me as a compliment.

Some selected samples from the PF-finetuning:

"The New York Movie", by William Collins

for Billie Holiday

Billie Holiday is dead. I am unhappy,

standing there like a gray stone,

standing there in my sweater,

thinking I can't kill you: I would like to be a big, red dog

with snow. I wish you a good night

with your face, your cheek and your legs, your legs.

I want to throw away this falling Sunday

with your face, my ugly body, my ugly body.

Nothing to give my body to,

nothing to give my heart to.

..."To a History Store", by Yehuda Amichai [Living, Death, Time & Brevity, Nature, Fall]

How hard it is for the living to die

holding only memory, and then to study the minutes

how like a trumpet of the future it sounds.

Gentlest of birds, patient as a body, cautious,

clear as the tongues of previous vervain birds.

Perhaps you are close to it, beautiful world.

..."Zagajas", by Robertizing [Religion, Christianity, Faith & Doubt, God & the Divine]

Our Lord who bore a child upon his knee.

We'll ask no more.

As sandpaper for what it wears.

Autumn, a new day.

Remember November, a new day.

Remember November, a new day.

Think of the silver

smiling into the barracud

glass

Already at the edge of day.

Think of the hardson of earth

crumbling into something new

Into every tingling ring

to the full edge. Think of what

New, bright, revolutionary clouds

scattered out of the blue:

the clouds that dissolved them,

soundless, self-edesfilled.

Think of the cycles in and around of this

crescent myriads of ants starting

to collect beneath each other,

their seeds suddenly

burning each to the other, each

moving and flashing.

..."The Bean Eaters", by Rudyard Kipling [Relationships, Home Life, Pets, Nature, Animals, Landscapes & Pastorals, Winter]

The fairies were wonderful.

They trod the snow, chasing

the catkins to the north.

Frosty violin-skins were flying

and they began to sing,

leaving an echo of singing.

Then, as the she-torches rang,

a second spring

flowed up from the fur brush.

It was the strangest sight

all through the wintry night.

It was the woods, falling in long grass,

and I was thinking of you, Little Brother,

in the sweet marsh,

that I might recognize, Little Brother,

as I think of you.

..."In Golden Gate Park", by James Jenny Xie [Living, Coming of Age, Time & Brevity, Activities, Jobs & Working, Philosophy]

In Golden Gate Park's the day is breaking, only

the timeless moments of the night sketch the sky's

high promenade of flying goldenness now

and never a late, dissolving splinter of black glass.

But in Golden Gate Park's the morning breaks. The sidewalks

bask to me like cars at a funeral or the stars

like blind lights waiting on cars long since gone.

There, to the streaming windowpane, the little birds

scarve to get ready to swoop, and the sky's yellow

and gold. It is the end of hunger that slays the bird.

..."To Theodore", by Kenneth Slessor

Death may forgive, but love is better.

He that loves the rose

Whose pale cheek glows

With one hand swift and close,

Whose fingers move

The gold hair of the rose,

Gone to pass.

Where his lips draw breath

The bitter thong

Sigh as if Death had

No part with them,

He hears the song,

Hears the shout,

Saying me,

As I must.

Love is better, they say,

Than the loss they know;

Dreaming is worse, they say,

Love must hate so.

As his torch I carry the air;

He shakes my wings;

He speaks no word;

Saying me,

As I reach,

As he calls me,

Call him, O dear,

Call him, oh dear.

Love has been my constant care.

The contemporary PF samples properly mimic all the metadata and formatting, and are good, for what they are. (If you doubt this, read through a random selection of PF poems.) The ones I liked seemed like they benefited greatly from the PG pretraining. There are still a lot of flaws in the unclean PF data: run-on lines are particularly irritating, and appear to be flaws in the Kaggle scrape dataset rather than the original PF website. I have brought up the problems on Kaggle, but I doubt they’ll be fixed soon.

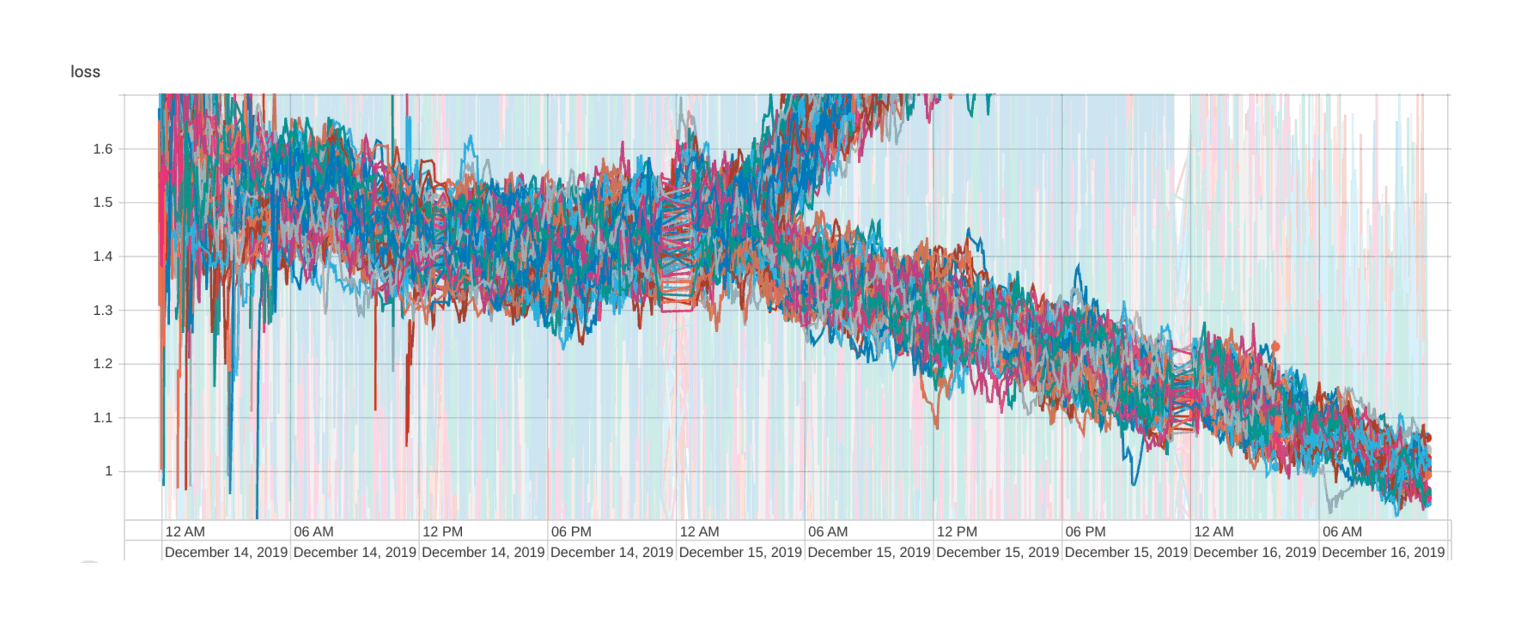

With PF done, I combined it with PG and trained on the combination dataset for another ~20,000 steps, yielding a final loss of 1.61. The combined model appears able to do both datasets well (the weighted average of a dataset with a loss of 0.6 and another dataset 6 times larger and a loss of 1.7 would be ~1.55, close to the model’s ~1.6), and the samples don’t appear to differ much, so I don’t excerpt any. But the combined model should make a great starting point for RL preference-learning training.
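The back-of-the-envelope weighted average above can be checked in a few lines. The sketch assumes that relative dataset size (PG being roughly 6 times the size of PF) is a reasonable proxy for the share of training tokens; the function name is hypothetical.

```python
def weighted_mean_loss(losses, sizes):
    """Size-weighted average of per-dataset losses."""
    total = sum(sizes)
    return sum(loss * size for loss, size in zip(losses, sizes)) / total


# PF at a loss of ~0.6 (relative size 1) and PG at ~1.7 (relative size 6).
expected = weighted_mean_loss([0.6, 1.7], [1, 6])
print(round(expected, 2))
```

This gives about 1.54, in line with the ~1.55 quoted above and close to the combined model's observed ~1.6.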

Random samples & model downloads:

final (combined) model: 117M-clean (431MB)

Training GPT-2-poetry-prefix

The first version of the PG data for GPT-2-poetry just runs all the lines together, erasing the metadata about what book each line comes from. A good model should nevertheless gradually learn about the transitions between poems & whole books, but that is hard and there may not be enough transitions in the data to learn effectively.

Much like the char-RNN experiments on this page, there is no reason one can’t inject that metadata in a structured way to see if the model can learn to exploit the metadata; even if it cannot, the added metadata shouldn’t hurt that much because it is so regular & repetitive. Inserting the metadata also allows for some degree of control in conditional generation; one should be able to put in the book ID for, say, Homer’s Iliad as a prompt and get out a long block of consistent Homeric pastiche.10

Ideally, there would be unique IDs for every author, poem, and book and these would appear at the beginning of every poem and the end of the poem would be delimited with the <|endoftext|> symbol that OA’s GPT-2 models were trained with, but unfortunately only the book ID is available in this particular dataset. (Project Gutenberg ebooks do not include any metadata or formatting which would cleanly split each discrete poem from each other.) Like before with authors, the book ID metadata can be formatted as a prefix on every line with a delimiter like the pipe character.

Rather than start over with GPT-2-117M again, GPT-2-poetry can just be further finetuned on this new prefixed version of the PG corpus to produce what I call “GPT-2-poetry-prefix”:

cat gutenberg-poetry-v001.ndjson | jq .gid | tr -d '"' > id.txt # "

cat gutenberg-poetry-v001.ndjson | jq .s | sed -e 's/^.//' -e 's/.$//' -e 's/\\//g' > poetry.txt

paste --delimiters='|' id.txt poetry.txt > gutenberg-poetry-v001-delimited.txt

shuf gutenberg-poetry-v001-delimited.txt | head

# 14869|Beware of the brand of the fiery Frank!

# 1727|and they have great power among the Argives. I am flying to

# 38550|Shows heaven in page of living book;

# 22421|First, for effusions due unto the dead, I. 26.

# 26275|blossomed beneath their temples, and covered their chins with

# 1745|What happiness, who can enjoy alone,

# 1645|When first he won the fairy clime.

# 4332|And out of these molten flowers,

# 36916|What! Never more go gladly back?

# 2507|Raged for hours the heady fight,

PYTHONPATH=src ./encode.py gutenberg-poetry-v001-delimited.txt gutenberg-poetry-v001-delimited.txt.npz

The loss of GPT-2-poetry-prefix will be much lower than GPT-2-poetry because the prefix is so predictable, but it will hopefully learn interesting things beyond that.
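The jq/sed/paste pipeline above can also be sketched in Python, which avoids the intermediate files. The `gid` and `s` field names come from the NDJSON used above; the function names are hypothetical. One difference to be aware of: `json.loads` decodes escape sequences properly, rather than stripping backslashes as the `sed` expression does.

```python
import io
import json


def prefix_lines(ndjson_lines, delimiter="|"):
    """Yield 'gid|text' for each NDJSON record with 'gid' and 's' fields."""
    for raw in ndjson_lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank lines
        entry = json.loads(raw)
        yield "{}{}{}".format(entry["gid"], delimiter, entry["s"])


def write_prefixed(src, dst):
    """Read NDJSON from file object `src` and write prefixed lines to `dst`."""
    for line in prefix_lines(src):
        dst.write(line + "\n")


# In-memory demonstration; real use would pass open file objects instead.
demo = io.StringIO('{"s": "Raged for hours the heady fight,", "gid": "2507"}\n')
out = io.StringIO()
write_prefixed(demo, out)
print(out.getvalue(), end="")
```

The output file can then be passed to the encoding step in the same way as the shell-generated one.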

In other samples, the generated IDs switch in the first two lines, and while that’s not much to judge from, GPT-2-poetry-prefix seems to ignore keywords from the first line when the IDs change, and doesn’t repeat them in the rest of the sample or attempt to rhyme off them, which is further evidence it is successfully associating & learning to mode-switch.

Like GPT-2-poetry, GPT-2-poetry-prefix converged quickly to a final loss of ~1.6 after 224,474 steps taking 31 GPU-hours, not improving much after the first ~8 GPU-hours despite decreasing the learning rate. (Diminishing returns appear to set in quickly for finetuning GPT-2-117M even if one has a relatively large new corpus.)
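One simple way to look for the ID switches described above is to split each generated line at the pipe delimiter and count runs of consecutive lines sharing an ID. The helper names below are hypothetical, and the sketch assumes sample lines in the `# ID|text` form used for the excerpts on this page.

```python
from itertools import groupby


def split_prefixed(line, delimiter="|"):
    """Split '# ID|text' into (id, text); lines without a delimiter get id None."""
    body = line.lstrip("# ")  # drop the "# " used for sample output here
    if delimiter not in body:
        return None, body
    book_id, text = body.split(delimiter, 1)
    return book_id, text


def id_runs(lines):
    """Return [(book_id, number_of_consecutive_lines), ...] in order."""
    ids = [split_prefixed(l)[0] for l in lines if l.strip("# \n")]
    return [(book_id, len(list(group))) for book_id, group in groupby(ids)]


sample = [
    "# 11|But I have had a day that was gone when I saw you in a dream,",
    "# 1322|A year ago, a year ago.... I'm going back",
    "# 1322|Oh, the years are many and the years begin!",
]
print(id_runs(sample))
```

A sample that stays on one ID after an early switch shows up as one short run followed by one long run, which is the pattern visible in the training samples below.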

GPT-2-poetry-prefix Samples

Training Samples

# ...[33999 | 4308.76] loss=2.45 avg=1.86

# ======== SAMPLE 1 ========

# 11|But I have had a day that was gone when I saw you in a dream,

# 1322|A year ago, a year ago.... I'm going back

# 1322|Oh, the years are many and the years begin!

# 1322|You and I have traveled far and wide,

# 1322|You and I have wandered far and wide

# 1322|Through the land I love so well,

# 1322|Where the beautiful land of long ago

# 1322|Died and ebbed and glowed,

# 1322|In the little green land of long ago,

# 1322|In the little green land of long ago,

# 1322|In the little green land of long ago.

# 1322|You and I have traveled far and wide

# 1322|Through the land of long ago;

# 1322|You and we have traveled far and wide

# 1322|O'er the land we love so well,

# 1322|In the little green land of long ago,

# 1322|In the little green land of long ago.

# 1322|You and I have traveled far and wide

# 1322|Through the land of long ago;

# 1322|And the years have slowly swept us on

# 1322|Till the dust has left the sod,

# 1322|And we all must rue the little day

# 1322|When we all are gone from God....

# 1322|And we all must rue the little day

# 1322|When we all are gone from God....

# 1322|I know a little garden very old,

# 1322|Built in a nook near the western sea,

# 1322|With doors and windows closely locked and barred

# 1322|And steps that made no sound but the world outside,

# 1322|And a wall of white-walled flowers all in a row

# 1322|Of violet bloom that just began to blow,

# 1322|And a dial that looked like a golden globe,

# 1322|Cool, square, and green, with the leaves and the grass,

# 1322|And a dial that looked like a needle of bronze;

# 1322|And I wonder if these petals are lost to-day,

# 1322|These petals will be forgotten to-morrow.

# 1322|"A rose would be a rose,"

# 1322|I wonder much at it;

# 1322|It does not matter what or who,

# 1322|So very, very much it matters:

# 1322|It is so nice to think

# 1322|The world is such a place for petals,

# 1322|And so much like a pink.

# 1322|"A purple fox-hawk lived down by the sea

# 1322|And danced and slept in the white-walled flowers,

# 1322|And I thought about a tree,

# 1322|A rose that dropped as an apple falls

# 1322|Into the water, and fell in showers."

# 1322|And I wondered what people said,

# 1322|And wherefore took the trouble,

# 1322|And wherefore came my little red bird

# 1322|To look at me and wave its wings

# 1322|For joy of self and for its red,

# 1322|And why I cannot tell;

# 1322|For it is so nice to think

# 1322|The world is such a place for petals.

# 1322|And so I sat and played,

# 1322|And the old clock set the chimney-top

# 1322|To ring time to itself and stop

# 1322|The time with a slow, frost-ridden drone,

# 1322|And a rustle as of bees.

# 1322|The house was old, and had grown lean

# 1322|As if it were but a leaf,

# 1322|But I found there was only one room

# 1322|And I slept on my stem at the end of the porch

# 1322|Like a child asleep.

# 1322|The door was old, but very young,

# 1322|And very, very young;

# 1322|And a weary smile on its satin lips

# 1322|Hung like a crown upon the night,

# 1322|And the roses crept into the room

# 1322|By the little red door in the wall,

# 1322|And I woke, and felt as a king is,

# 1322|And thought of all the trouble and pain

# 1322|Of a little life and a little love

# 1322|When only a boy is born.

# 1322|For my heart was old, and restless with the years,

# 1322|And my face with no trace of the things I had

# 1322|And no remembrance when I was twenty-five,

# 1322|And

#

# [34000 | 4318.77] loss=2.15 avg=1.86

# ...

# [47998 | 11504.75] loss=1.91 avg=1.84

# [47999 | 11505.25] loss=2.37 avg=1.85

# ======== SAMPLE 1 ========

# |So when the sun has touched the hill

# 30599|And made the earth a little heave

# 30599|Of gold-dust on the firmament.

# 30599|The sea is a molten pearl

# 30599|Silvering the yellow moon.

# 30599|Gold is the poppies of purple plush,

# 30599|Which make a star within a pool

# 30599|Of purple, blue, and green and blue.

# 30599|The sea is a molten pearl,

# 30599|Whose petals burn as light

# 30599|In the rose-yarn of the sea.

# 30599|The gold star beckons, sparkles, shines.

# 30599|The water gleams and glows.

# 30599|The poppies turn to a golden rim,

# 30599|And a thousand petals, one by one,

# 30599|Come dancing through the purple waves

# 30599|Of purple.

# 30599|My soul goes up to the sun.

# 30599|The sun, all gold, is gone.

# 30599|The sun is gone, the sun

# 30599|Is not more gold than my soul.

# 30599|What is the rainbow, my soul?

# 30599|The rain is falling in the tree

# 30599|In dewdrops falling, that are wet

# 30599|With dewdrops falling.

# 30599|I have heard music in the woods

# 30599|Under a great sky.

# 30599|Their notes, on a hundred harps,

# 30599|Dance by a liquid, falling star.

# 30599|The song of the whole universe

# 30599|Rings like a loon's tune.

# 30599|The rain is falling, my soul,

# 30599|In the tree-tops, the rain is falling,

# 30599|And the rain is not more musical.

# 30599|The trees, like great globed fruit in a garden of heaven,

# 30599|Are as full of little shining blossoms

# 30599|As the face of a child of ten minutes.

# 30599|The wind is playing a soft tune

# 30599|Like the silver notes of a bell.

# 30599|The grass is a dance for a child,

# 30599|And the sun is going down.

# 30599|The rain is playing a soft tune

# 30599|Like the golden notes of a bell.

# 30599|The rain is playing asleep in the meadows

# 30599|Like a sea of dreams,

# 30599|And the wind is playing a soft tune.

# 30599|The leaves are wet with the tears:

# 30599|There is only the wind that comes.

# 30599|The leaves are wet with the tears.

# 30599|The trees have bent to the wind,

# 30599|Like heavy blossoms, and nothing stays.

# 30599|The earth is flooded with tears

# 30599|Like small white stars in the sky.

# 30599|The wind's song is marching by

# 30599|Like a song in a wind from a string.

# 30599|It comes from nothing, it comes not from the years;

# 30599|An atom of dust and a tide,

# 30599|The ceaseless rain falls heavily over the world,

# 30599|The summer flowers are red

# 30599|And one like a gold cup, in a crimson cup,

# 30599|Flows out from nothing, and goes

# 30599|Without speech or motion nor sound.

# 30599|The yellow flowers all slip,

# 30599|All their leaves are wet,

# 30599|And their crimson petals are wet.

# 30599|The rain has driven the sun outside,

# 30599|The wind has driven the rain outside,

# 30599|The moon is going out and in,

# 30599|With the stars on the roof like snow.

# 30599|The rain has driven the rain outside,

# 30599|The moon is driving the clouds in the air

# 30599|Like white, wool flakes in a snow.

# 30599|The trees are wet with the tears,

# 30599|They weep in their night-black tatters,

# 30599|They moan like mortals who lost their way

# 30599|Because they were glad of the rain.

# 30599|The rain is falling, my soul,

# 30599|It has torn away the flowers,

# 30599|They are wet with the tears of the sun.

# 30599|In the black ooze and the snow,

# 30599|The cold winds, shuddering, are blowing,

# 30599|The leaves are blown like blown corn,

# 30599|There is only the wind that comes.

# 30599|Black and long! The night is

#

# [48000 | 11515.52] loss=2.14 avg=1.85

#

# ...

# [166998 | 5752.38] loss=2.17 avg=1.52

# [166999 | 5752.88] loss=2.10 avg=1.53

# ======== SAMPLE 1 ========

# 26|His golden radiance is no more."

# 16452|"I shall not think of men in Argos more

# 16452|Than they are now, who many a bloody deed

# 16452|Wrought on the Greeks, nor yet of Ilium's king

# 16452|In arts and arts like these can speak the rest.

# 16452|But they--their kings--the Trojans and their sons

# 16452|Have fallen. Their deaths, the Grecians and their friends

# 16452|Have fallen in battle, from whom little hope

# 16452|To escape the battle, but the steadfast hearts

# 16452|Of heroes and of Trojans have become

# 16452|Inglorious still. The immemorial strife

# 16452|Shall rise for ever in a glorious day,

# 16452|When wars are waged between us and the Greeks.

# 16452|The battle shall be theirs, the mirth, the song;

# 16452|The mirth which all the Olympian people share,

# 16452|Shall bless the younger warriors with a joy

# 16452|So great, so glorious, and a greater fame,

# 16452|That all the Greeks shall learn, that in the van

# 16452|Ye stand yourselves, and they will praise your deeds.

# 16452|But I beseech you, if indeed by mine

# 16452|Unknown dishonour you be wrested hence,

# 16452|That with your lusts, illustrious and august,

# 16452|All others ye may vanquish. Now, my friend,

# 16452|Behold this prize to crown your father's pride.

# 16452|He said, and shaking both his palms, assent

# 16452|That I should also wish it. Thou art brave;

# 16452|Thou know'st how Menoetiades the swift

# 16452|Was dragged, of Hector and the fierce compeers

# 16452|And Phrygian warriors. So, we will dispatch

# 16452|Your bodies, then, yourselves to burn the ships

# 16452|In sacrifice; with torches and with bells

# 16452|To burn them, and with oxen to replace

# 16452|Your gallant friends for ever. But I wish

# 16452|That no man living has so long endured

# 16452|The onset of his foes, as I have power

# 16452|To burn or storm; for mighty Hector erst

# 16452|Was slain, and now returns his safe return

# 16452|To the Thesprotians, and each other's wives

# 16452|And tender children, and all other babes

# 16452|Assemble round me now, for ye have more

# 16452|To suffer than they know. Go then--the rest

# 16452|Will bear you safely; if ye dare to use

# 16452|The Grecians with your ships, leave not the flames

# 16452|Or fire, or slay yourselves and leave the rest,

# 16452|Ye may, at least, escape, but Hector's hands

# 16452|Will set you free; the vultures on the hills

# 16452|Held forth, and I will pierce those hollow shades

# 16452|Adown the trench, and ye shall find them there

# 16452|Scaped unaware. If, then, the Gods above

# 16452|Should bring thee to the fane of Peleus' son,

# 16452|In his despite, thou wouldst be left alone,

# 16452|Beside the yoke unwearied, there to stand,

# 16452|And from the Trojans scatter all thy dead.

# 16452|So spake the son of Atreus, and retired.

# 16452|Then, leaving Hector's body in the tent

# 16452|Of Periphas, King Agamemnon seized,

# 16452|And in his royal hand his spear adorned

# 16452|With silver, and with gold, studded thick all o'er

# 16452|With glittering tin, the weapon of Mars thus toil'd.

# 16452|Brave as he is, and of unwearied arm,

# 16452|So shall be thy revenge, if thou shalt make

# 16452|Fierce contest for the Danaï. But fly

# 16452|With shout and clamour fill'd throughout the host.

# 16452|So shall ye perish, slain by Hector's hands,

# 16452|Each living soul, and, flying into fire,

# 16452|Sink under Troy's wide gulfs. Be this the work

# 16452|Of your brave sires, and all the parents' hearts

# 16452|Shall prosper, one and all. But ye in haste

# 16452|(For ye are valiant) shall

#

# [167000 | 5762.79] loss=1.32 avg=1.53

# [167001 | 5763.29] loss=1.60 avg=1.53

One training sample is worth remarking on:

# [49999 | 12526.44] loss=1.83 avg=1.77

# ======== SAMPLE 1 ========

# ._

# 19221|The curfew tolls the knell of parting day,

# 19221|The lowing herd winds slowly o'er the lea,

# 19221|The ploughman homeward plods his weary way,

# 19221|And leaves the world to darkness and to me.

# 19221|Now fades the glimmering landscape on the sight,

# 19221|And all the air a solemn stillness holds,

# 19221|Save where the beetle wheels his droning flight,

# 19221|And drowsy tinklings lull the distant folds.

# 19221|Save that from yonder ivy-mantled tower

# 19221|The moping owl does to the moon complain

# 19221|Of such as, wandering near her secret bower,

# 19221|Molest her ancient solitary reign.

# 19221|Methinks I see her in her blissful dreams:

# 19221|--Or, fancy-like, in some mirage she lies,

# 19221|Majestic yet majestic, and of seems

# 19221|The image of the unconquerable skies.

# 19221|Methinks I see her in her blissful dreams:

# 19221|--Or, fancy-like, in some majestic cell,

# 19221|Where lordly seraphs strew their balmy dreams

# 19221|On the still night, or in their golden shell.

# 19221|There, in the calm of some Platonic dream,

# 19221|Sits she, and views the unclouded moon arise

# 19221|Like a fair lady full of realms divine;

# 19221|And, all at once, a stony face and bright

# 19221|Glittering in moonlight, like the noon-tints of a night.

# 19221|Methinks I see her in her blissful dreams:

# 19221|--Or, fancy-like, among the moonlight pale,

# 19221|A heavenly lark twitters with every gleam

# 19221|Of the rapt rapt beauty that she craves to hail.

# 19221|Or in the moonlight gleams she seems to see

# 19221|A virgin glory, and a power divine,

# 19221|And every motion of the looking-glass

# 19221|Is full of love and divine gentleness.

# 19221|Methinks I hear her in the twilight dim:

# 19221|--Or is it fancy-free, and musical,

# 19221|And full of music? Or some holy hymn

# 19221|Over some water-lily's long-lost lute?

# 19221|O no! it is not fancy-free, I see:

# 19221|Nay! fain would I thy spirit-hearts invest,

# 19221|And hear the paean of these dreamy rhymes,

# 19221|Might I but speak of my departed rhymes,

# 19221|And thou wouldst sing of my empoet-swans.

# 19221|O no! it is not fancy-free, I see:

# 19221|Even Poetry murmurs in a pensive dream,

# 19221|And like a breeze sings the blue mists of night.

# 19221|O no! it is not fancy-free, I see:

# 19221|Even Poetry murmurs in a pensive dream.

# 19221|How soft the zephyrs, how the languid hours,

# 19221|As in the noonday shade their limbs recline!

# 19221|How mellow streams the mossy banks assume!

# 19221|How solemn woods, low-chiselled, sumptuous green,

# 19221|Nod to the cadence of their hoarse refrain,

# 19221|As if the deep-toned muses' melodies

# 19221|With half-averted plaints and half-averted plumes

# 19221|In some majestic temple's quietness

# 19221|Had to the silver twilight slowly come.

# 19221|How solemn woods, low-couched, around thee lie:

# 19221|--Or is it fancy-free, and melody

# 19221|That makes the dull night long in worship held?

# 19221|Methinks I hear the harp's harmonious sound

# 19221|In some dim wood, when the deep shadows fall;

# 19221|And the low wind, like one that listens, makes

# 19221|In the still woods the harmony of all.

# 19221|Or in the moon's pale beam, on some hoar rock,

# 19221|Lonely and spectral, mourns her feeble woe;

# 19221|And as the slow waves roll, and, ebbing, break

# 19221|In music

#

# [50000 | 12536.67] loss=1.36 avg=1.76

The rhyming in this sample is so good as to be suspicious. It might also sound familiar, because many of these lines are copied from Thomas Gray’s Elegy Written in a Country Churchyard, which opens:

The curfew tolls the knell of parting day,

The lowing herd wind slowly o’er the lea

The ploughman homeward plods his weary way,

And leaves the world to darkness and to me.

Now fades the glimm’ring landscape on the sight,

And all the air a solemn stillness holds,

Save where the beetle wheels his droning flight,

And drowsy tinklings lull the distant folds;

Save that from yonder ivy-mantled tow’r

The moping owl does to the moon complain

Of such, as wand’ring near her secret bow’r,

Molest her ancient solitary reign.

Some spelling differences aside, these opening 12 lines are copied almost verbatim from the 8 copies of Gray’s poem in the corpus. I did not spot such extensive copying in the GPT-2-poetry samples I looked at, which suggests that the scaffolding of the metadata did indeed help with learning.
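Copying of this kind can also be checked mechanically rather than by ear. The following is a minimal sketch, not the procedure used for this page: it strips the `BOOKID|` prefix from each generated line, normalizes away case and punctuation (so that elisions like "tow’r" vs. "tower" still match), and flags any line whose closest corpus line is above a similarity threshold. The function names, the tiny inline `corpus`, and the 0.9 threshold are all illustrative assumptions; a real check would load the full training corpus.

```python
import difflib
import re


def strip_prefix(line: str) -> str:
    """Drop a leading 'BOOKID|' metadata prefix, if present."""
    return re.sub(r"^\d+\|", "", line)


def normalize(line: str) -> str:
    """Lowercase and keep only letters and single spaces."""
    line = re.sub(r"[^a-z ]", "", line.lower())
    return " ".join(line.split())


def best_similarity(line: str, corpus_lines: list[str]) -> float:
    """Similarity (0..1) between `line` and its closest corpus line."""
    target = normalize(strip_prefix(line))
    return max(
        difflib.SequenceMatcher(None, target, normalize(c)).ratio()
        for c in corpus_lines
    )


def copied_lines(sample_lines, corpus_lines, threshold=0.9):
    """Return the sample lines that closely match some corpus line.

    The threshold is an arbitrary assumption, chosen only for illustration.
    """
    return [
        line for line in sample_lines
        if best_similarity(line, corpus_lines) >= threshold
    ]


# Hypothetical miniature inputs: three generated lines, three corpus lines.
sample = [
    "19221|The curfew tolls the knell of parting day,",
    "19221|Save that from yonder ivy-mantled tower",
    "19221|Methinks I see her in her blissful dreams:",
]
corpus = [
    "The curfew tolls the knell of parting day,",
    "Save that from yonder ivy-mantled tow’r",
    "Molest her ancient solitary reign.",
]

copied = copied_lines(sample, corpus)
print(f"{len(copied)} of {len(sample)} sample lines look copied")
```

Comparing every sample line against every corpus line is quadratic, so on a corpus of this size one would probably first index normalized lines in a set for exact matches and fall back to fuzzy matching only for the remainder.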

Also interestingly, the copying only goes so far. Immediately after the final lines about the owl, Gray continues:

…Beneath those rugged elms, that yew-tree’s shade,

Where heaves the turf in many a mould’ring heap,

Each in his narrow cell for ever laid,

The rude Forefathers of the hamlet sleep

GPT-2-poetry-prefix instead continues:

…Methinks I see her in her blissful dreams:

—Or, fancy-like, in some mirage she lies,

Majestic yet majestic, and of seems

The image of the unconquerable skies.

Methinks I see her in her blissful dreams:

—Or, fancy-like, in some majestic cell,

Where lordly seraphs strew their balmy dreams

On the still night, or in their golden shell.

There, in the calm of some Platonic dream,

Sits she, and views the unclouded moon arise

Like a fair lady full of realms divine;