This Waifu Does Not Exist

I describe how I made the website ThisWaifuDoesNotExist.net (TWDNE) for displaying random anime faces generated by StyleGAN neural networks, and how it went viral.

Generating high-quality anime faces has long been a task neural networks struggled with. The invention of StyleGAN in 2018 has effectively solved this task and I have trained a StyleGAN model which can generate high-quality anime faces at 512px resolution.

To show off the recent progress, I made a website, “This Waifu Does Not Exist”, for displaying random StyleGAN 2 faces. TWDNE displays a different neural-net-generated face & plot summary every 15s. The site was popular and went viral online, especially in China. The model can also be used interactively for exploration & editing in the Artbreeder online service.

TWDNE faces have been used as screensavers, user avatars, character art for game packs or online games, painted watercolors, uploaded to Pixiv, given away in streams, and used in a research paper (2019). TWDNE results also helped inspire Sizigi Studio’s online interactive waifu GAN, Waifu Labs, which generates even better anime faces than my StyleGAN results.

In December 2018, “A Style-Based Generator Architecture for Generative Adversarial Networks”, Karras et al 2018 (source code/demo video) came out, a stunning followup to their 2017 ProGAN (source/video), which improved the generation of high-resolution (1024px) realistic human faces even further. In my long-running dabbling in generating anime with GANs, ProGAN had by far the best results, but on my 2×1080ti GPUs, reasonable results required >3 weeks, and it was proving difficult to get decent results in acceptable time; StyleGAN excited me because it used a radically different architecture which seemed like it might be able to handle non-photographic images like anime.

The source code & trained models were released 2019-02-04. The wait was agonizing, but I immediately applied it to my faces (based on a corpus I made by processing Danbooru2017/Danbooru2018), with astonishing results: after 1 day, the faces were superior to ProGAN after 3 weeks—so StyleGAN turned out to not just work well on anime, but it improved more on ProGAN for anime than it did for photographs!

While I was doing this & sharing results on Twitter, other people began setting up websites to show StyleGAN samples from the pretrained models or StyleGANs they’d trained themselves: the quiz “Which Face is Real”, “This Fursona Does Not Exist”/“This Person Does Not Exist”/“This Rental Does Not Exist”/“These Cats Do Not Exist”/“This Car Does Not Exist”/“This Marketing Blog Does Not Exist” etc.

Since my StyleGAN anime faces were so good, I thought I’d hop on the bandwagon and created, yes, “This Waifu Does Not Exist”—one might say that “waifus” do not exist, but these waifus especially do not exist.

Examples

I feel like that rat in the experiment where it can press a button for instant gratification—I can’t stop refreshing

Anonymous

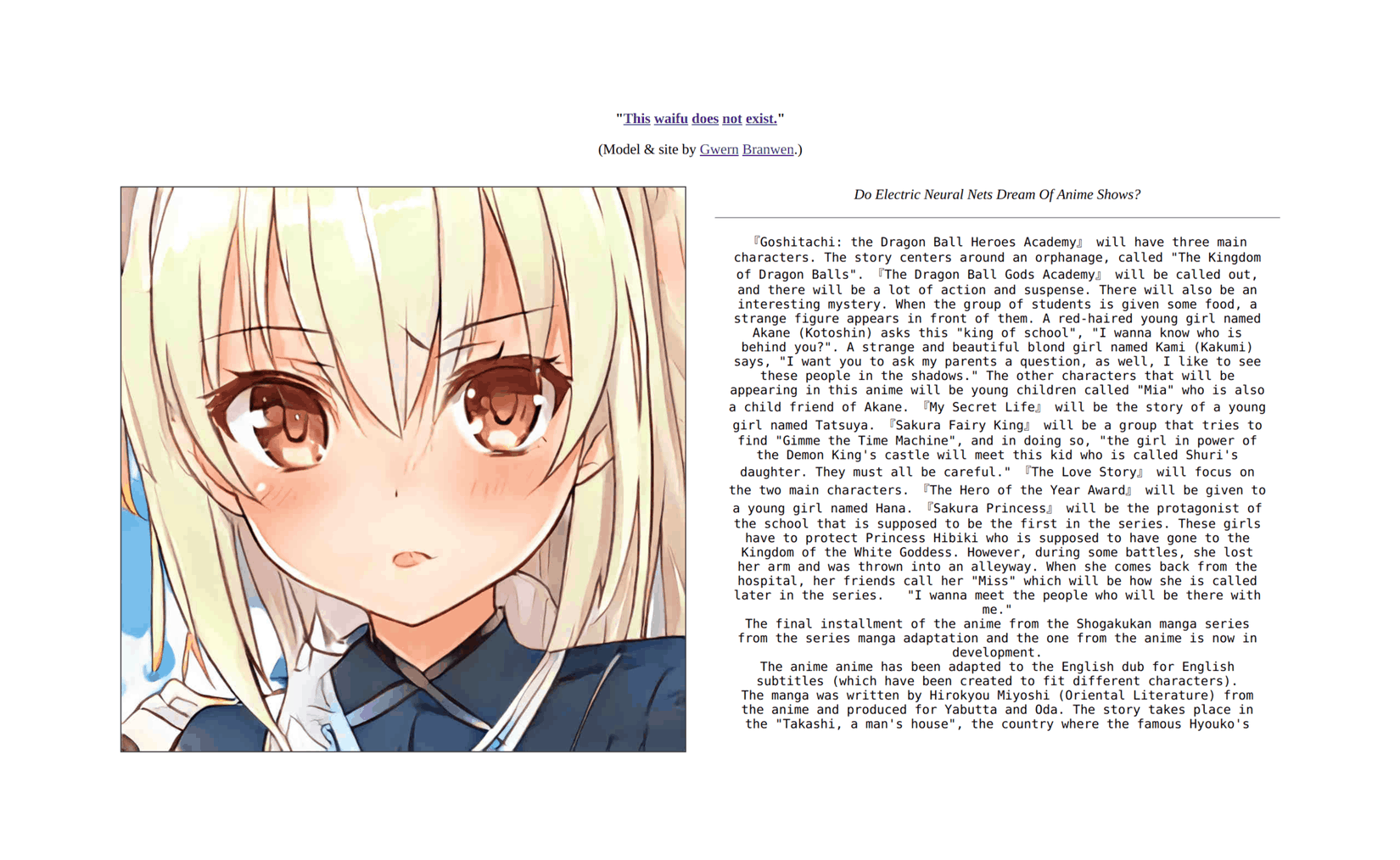

A screenshot of “This Waifu Does Not Exist” (TWDNE) showing a random StyleGAN-generated anime face and a random GPT-2-117M text sample conditioned on anime keywords/phrases.

“It is so sad to say that this manga has never been seen by any anime fans in the real world and this is an issue that must be addressed. Please make anime movies about me. Please make anime about me. Please make anime about your beautiful cat. Please make anime movies about me. Please make anime about your cute cat. I wish you the best of luck in your life.

Please make anime about me. Please make anime about my cute cute kitten.” —TWDNE #283 (second screenshot of TWDNE)

64 TWDNE face samples selected from social media, in an 8×8 grid.

Face samples selected by users of Artbreeder

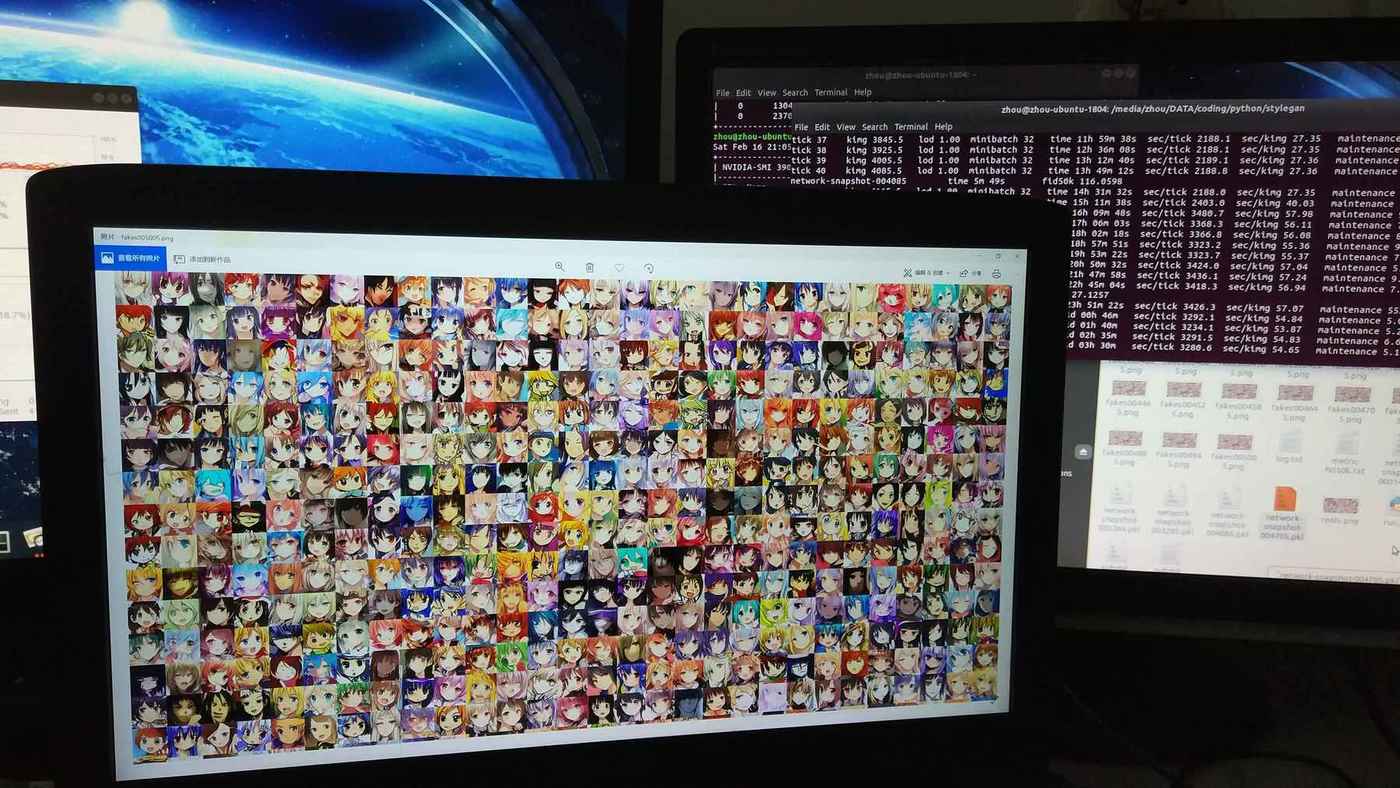

Obormot made a version of TWDNE–dubbed “These Waifus Do Not Exist”–which displays a constantly-updating grid of anime faces, with an alternative version displaying an infinite moving grid. On a large screen, these are particularly striking as one Baidu forum poster demonstrated:

Photograph by 大藏游星 of “These Waifus Do Not Exist” displayed maximized on a large computer monitor.

And the sliding-grid is hypnotic (half-hour-long video version):

Copyright

They are so very strange and beautiful, the beings who come before the end.

TWDNE faces are randomly generated and so, as far as anyone can tell, under current IP laws are not copyrightable and are all public domain; I release any copyright I may have to all generated text snippets & images under the CC-0 (public domain equivalent) license.

Implementation

Once opened I was shown a waifu so lovely and pure that I stared in amazement and awe.

Then the page refreshed automatically.

Now I am doomed to forever refresh to get her back, knowing it shall never be.

TWDNE is a simple static website which has 100,000 random StyleGAN faces and 100,000 random GPT-2-small text snippets; it displays a new image/text pair every 15 seconds.

TWDNE is implemented as a static site serving pre-generated files.

Why static instead of using a GPU server to generate images on the fly? The StyleGAN model is controllable (allowing for image editing), but wasn’t available in time for TWDNE and Artbreeder-style evolutionary exploration hasn’t been implemented at all, so there was no point in renting an expensive (>$100/month in 2019 dollars) GPU server to generate random faces on the fly, and it is better to simply generate a large number of random samples and show those; anyone who wants to look at even more faces can download the model and run it themselves (which would let them control psi or retrain it on new datasets such as faces of a specific character or attribute they are looking for). This is also far easier to implement.

There are 3 groups of random faces generated with different hyperparameter settings to show the full spectrum of the tradeoff between quality & diversity in StyleGAN. The first 70k text-samples are generated using OA’s publicly-released model GPT-2-117M, given a random seed 1–70,000 + a long prompt with many anime-related words & phrases I picked arbitrarily while playing with it; the final 30k were generated using a 2-step process, where GPT-2-117M was retrained on an Anime News Network dataset of short plot synopses to emit plot synopses which are then fed into the original GPT-2-117M as a prompt for it to enlarge on. (Unfortunately, it is not yet possible to make the generated face & text related in any way, but some of the juxtapositions will, by chance, be amusing anyway.) One can see the first set of 40k all displayed in a video by Scavin.

The static site is a single HTML page, ./index.html, plus 100,000 images at the filenames ./example-{0..99999}.jpg and 100,000 text snippets at ./snippet-{0..99999}.txt. The JS selects a random integer 0–99,999, loads the image with that ID in the background, swaps it in, and loads a new text snippet with the same ID; this repeats every 15s. Additional JS adds buttons for forcing an immediate refresh, stopping the refreshing process (perhaps because the user likes a pair & wants to look at it longer or screenshot/excerpt it), and of course loading Google Analytics to keep an eye on traffic.
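The selection step is small enough to sketch. The site itself does this in JavaScript; the Python below is only an illustration of the same logic, and the names `N_SAMPLES` and `pick_pair` are invented here rather than taken from the site's source.

```python
import random

N_SAMPLES = 100_000  # example-0.jpg ... example-99999.jpg, per the file layout above


def pick_pair(rng):
    """Return the image path and snippet path for one randomly chosen ID."""
    sample_id = rng.randrange(N_SAMPLES)  # uniform integer in 0..99,999
    # The face and the text share an ID only so that one random draw fetches both;
    # the two are not otherwise related.
    return f"./example-{sample_id}.jpg", f"./snippet-{sample_id}.txt"


rng = random.Random()
image_path, text_path = pick_pair(rng)
print(image_path, text_path)
```

Because every ID maps to a fixed pair of static files, no server-side state is needed: the page only has to repeat this draw on a 15-second timer.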

There is no rewind button or history-tracking, as that would cheapen the experience, eliminating the mono no aware & the feeling of reading through an anime version of the infinite Library of Babel—if the user is too slow, a face or story will vanish, effectively forever (unless they want to go through them by hand).

A large pile of responsive CSS (written by Obormot) in the HTML page attempts to make TWDNE usable on all devices from a small smartphone screen to a widescreen 4k display, resizing the faces to fit the screen width and putting the image & text side-by-side on sufficiently-wide displays.

As a static site, it can be hosted on Amazon S3 as a bucket of files, and cached by CloudFlare (a feature which turned out to be critical when TWDNE went viral). The total TWDNEv1 website size is ~6GB. (As of TWDNEv3, with all versions & text snippets, it weighs 34GB.)

The main upfront cost was ~$50 (2019 dollars) to prepay 4 years of DNS for thiswaifudoesnotexist.net (infuriatingly, thiswaifudoesnotexist.com turned out to have been squatted just hours before I began working on it, possibly because of my earlier tweet); while CloudFlare is free, it doesn’t cache 100% and the non-CloudFlare-cached S3 bandwidth & hosting cost $98 in February 2019.

“There’s this neural network that generates anime girls…some results look normal and others are terrifying. I painted a collage of my favorite results.” —Dinosarden, 2019-02-22

![“Reality can be whatever he wants.” —Venyes [Thanos meme about “These Waifus Do Not Exist”]](/doc/ai/nn/gan/2019-03-11-venyes-reddit-twdnethanosmeme.png)

“Reality can be whatever he wants.” —Venyes [Thanos meme about “These Waifus Do Not Exist”]

Downloads

The StyleGAN model used for the TWDNEv1 samples (294MB, .pkl)

All TWDNEv1–3 faces & text snippets (34GB) are available for download via a public rsync mirror:

rsync --recursive --verbose rsync://176.9.41.242:873/twdne/ ./twdne/

All 100,000 text samples (50MB, .txt)

Creating

Training StyleGAN

Faces

TWDNEv1

We’re reaching levels of smug never thought possible

Anonymous

I don’t know what happened here.

Generating the faces is straightforward. The StyleGAN repo provides pretrained_example.py, which downloads one of the Nvidia models, loads it, and generates a single face with the fixed random seed 5; to make this more useful, I simply replace the remote URL with a local model file, change the random seed to None so a different seed is used every time, and loop n times to generate n faces:

23,25c23,26
<     url = 'https://drive.google.com/uc?id=1MEGjdvVpUsu1jB4zrXZN7Y4kBBOzizDQ' # karras2019stylegan-ffhq-1024x1024.pkl
<     with dnnlib.util.open_url(url, cache_dir=config.cache_dir) as f:
<         _G, _D, Gs = pickle.load(f)
---
>     _G, _D, Gs = pickle.load(open("results/02046-sgan-faces-2gpu/network-snapshot-011809.pkl", "rb"))
34,35c35,37
<     rnd = np.random.RandomState(5)
<     latents = rnd.randn(1, Gs.input_shape[1])
---
>     for i in range(60000,70000):
>         rnd = np.random.RandomState(None)
...

I ran the pretrained_example.py script on the then-latest face model with psi=0.7 for 60k 512px faces, upscaled each image to 1024px with waifu2x, and used ImageMagick to convert the PNGs to JPGs at quality = 25% to save ~90% space/bandwidth (average image size, 63KB).
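As a rough sanity check on those numbers, the arithmetic can be written out. This is a back-of-the-envelope sketch that assumes "63kb" means kilobytes, uses decimal units, and reads "save ~90%" as JPGs being about a tenth of the PNG size; the variable names are illustrative only.

```python
N_IMAGES = 100_000    # total faces served by the site
AVG_JPG_KB = 63       # average JPG size reported above
JPG_FRACTION = 0.10   # "save ~90%" read as JPGs being ~10% of the PNG size (assumption)

# Total size of the JPGs, converting KB to GB with decimal units.
total_jpg_gb = N_IMAGES * AVG_JPG_KB / 1_000_000

# What the same images would have weighed as PNGs under the 10% assumption.
implied_png_gb = total_jpg_gb / JPG_FRACTION

print(f"JPGs: ~{total_jpg_gb:.1f} GB; PNGs would have been roughly {implied_png_gb:.0f} GB")
```

The JPG total comes out at about 6.3GB, in line with the ~6GB quoted for the whole TWDNEv1 site.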

I’m terrified but also intrigued by this cryptid ghost waifu.

For the next 10,000, because people online were particularly enjoying looking at the weirdest & most bizarre faces (and because the weird samples help rebut the common misconception that StyleGAN merely memorizes), I increased the truncation hyperparameter (line 41) to psi = 1.0. For the final 30k, I took the then-latest model and set psi=0.6.
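For readers unfamiliar with psi: the truncation trick pulls each latent vector toward the average latent, trading diversity for quality. The sketch below shows only the interpolation itself on plain Python lists. It is a simplification: the real StyleGAN code applies this in its intermediate latent space and may restrict it to a subset of layers, and the function name `truncate` is invented here.

```python
def truncate(w, w_avg, psi):
    """Interpolate a latent vector toward the average latent.

    psi = 1.0 leaves the latent unchanged (maximum diversity, more bizarre samples);
    psi = 0.0 collapses every sample onto the average face;
    values in between (e.g. 0.6 or 0.7) trade diversity for quality.
    """
    return [avg + psi * (x - avg) for x, avg in zip(w, w_avg)]


w_avg = [0.0, 0.0, 0.0]   # toy "average latent"
w = [2.0, -1.0, 0.5]      # toy sampled latent

for psi in (0.6, 0.7, 1.0):
    print(psi, truncate(w, w_avg, psi))
```

This is why raising psi to 1.0 yields the weirdest faces: nothing is pulled back toward the average.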

An overview of faces:

100 random sample images from the StyleGAN anime faces on TWDNE, arranged in a 10×10 grid.

TWDNEv2

I can’t stop hitting reload

The first set of 100k faces was generated using all of Danbooru2017’s faces both SFW & NSFW, the default face cropping settings, and 2 additional Holo/Asuka datasets I had. This led to some problems, so to fix them, I created a new improved dataset of faces with expanded margins I call ‘portraits’, to retrain the anime face StyleGAN on. When done training, they were considerably better, so I generated another 100k and replaced the old ones.

TWDNEv3

Recently, he has begun to craft objects of great power. And many admire him more for this. But within his creations, one finds hints of a twisted humour. Look closely at his miracles and victims begin to emerge. Take an older project of his, ThisWaifuDoesNotExist.net. A wonder to behold? An amusement but nothing more?…How many more young men sit, hunched and enslaved to this magic? What strange purpose does this serve?

Ghenlezo, “Beware of the Gwern”

Discussion of TWDNEv3, launched January 2020. TWDNEv3 upgrades TWDNEv2 to use 100k anime portraits from an anime portrait StyleGAN2, which removes the blob artifacts and is generally of somewhat higher visual quality. TWDNEv3 provides images in 3 ranges of diversity, showing off both narrow but high quality samples and more wild samples. It replaces the StyleGAN 1 faces and portrait samples.

In December 2019, Nvidia released StyleGAN 2. S2 diagnosed the frustrating ‘blob’ artifacts that dogged S1 samples, both face & portrait, as stemming from a fundamental flaw in the S1 architecture, and the best way the neural network could figure out to work around the flaw. It removed the flaw, and made a few other more minor changes (for better latent spaces etc.). Aaron Gokaslan trained a S2 model on the portrait dataset, and I used it to generate a fresh batch of 100k faces, with different 𝜓 values (0.6, 0.8, & 1.1) as before:

python3 run_generator.py generate-images --seeds=0-50000 --truncation-psi=0.8 \
    --network=2020-01-11-skylion-stylegan2-animeportraits-networksnapshot-024664.pkl
python3 run_generator.py generate-images --seeds=50001-75000 --truncation-psi=0.6 \
    --network=2020-01-11-skylion-stylegan2-animeportraits-networksnapshot-024664.pkl
python3 run_generator.py generate-images --seeds=75001-100000 --truncation-psi=1.1 \
    --network=2020-01-11-skylion-stylegan2-animeportraits-networksnapshot-024664.pkl
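How the 100k samples divide across the three psi settings can be tallied from the seed ranges. The sketch below copies the ranges from the commands and assumes that `--seeds` ranges include both endpoints; the `BATCHES` table and variable names are illustrative.

```python
# (first_seed, last_seed, truncation_psi), copied from the three commands above.
BATCHES = [
    (0, 50_000, 0.8),
    (50_001, 75_000, 0.6),
    (75_001, 100_000, 1.1),
]

# Number of seeds per psi value, assuming inclusive ranges.
counts = {psi: last - first + 1 for first, last, psi in BATCHES}
total = sum(counts.values())

# Each batch should start right after the previous one ends, with no gaps or overlaps.
contiguous = all(
    BATCHES[i + 1][0] == BATCHES[i][1] + 1 for i in range(len(BATCHES) - 1)
)

for psi, n in counts.items():
    print(f"psi={psi}: {n} seeds")
print("total:", total, "contiguous:", contiguous)
```

Under that assumption, roughly half the images use the middle setting and a quarter each use the narrow and wide settings.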

100 random sample images from the StyleGAN 2 anime portrait faces in TWDNEv3, arranged in a 10×10 grid.

I didn’t delete the v1–2 images, but did move them to a subdirectory (www.thiswaifudoesnotexist.net/v2/$ID), where they are also rsync-able.

Text

the f— did i just read

Anonymous

I thought it would be funny to include NN-generated text about anime, the way “This Rental Does Not Exist” included char-RNN generated text about hotel rooms. But the accompanying GPT-2-117M snippet generation turned out to be a little more tricky than faces.

GPT-2-117M: Prompted Plot Summaries

It’s a GAN AI, and it just sits there, endlessly generating fake anime characters along with gibberish anime story-plots.

The full GPT-2-1.5b model which generates the best text has not been released by OpenAI, but they did release a much smaller model which generates decent but not great text samples, and that would just have to do.

I used the gpt-2-PyTorch CLI package to run the downloaded model without messing with the original OA code myself. The repo explains how to download the GPT-2-117M model and pip install the Python dependencies. Hyperparameter-wise, I roughly approximated the OpenAI settings by using top_k=30 (OA used top_k=40 but I didn’t see much of a quality difference and it slowed down an already slow model); the temperature hyperparameter I did not change but gpt-2-PyTorch seems to use the equivalent of OA’s 0.7 temperature.
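To make the two hyperparameters concrete, here is a minimal, dependency-free sketch of top-k sampling with temperature. It is not the gpt-2-PyTorch implementation, which works on tensors; `sample_top_k` is a name invented for this illustration.

```python
import math
import random


def sample_top_k(logits, k=30, temperature=0.7, rng=random):
    """Sample one token index from the k highest-scoring logits."""
    # Keep only the indices of the k largest logits; everything else gets probability 0.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Temperature below 1 sharpens the distribution; above 1 flattens it.
    scaled = [logits[i] / temperature for i in top]
    # Softmax weights over the survivors (subtracting the max for numerical stability).
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]
    return rng.choices(top, weights=weights, k=1)[0]


toy_logits = [0.1, 3.0, 2.5, -1.0, 0.0]
print(sample_top_k(toy_logits, k=2))  # only index 1 or 2 can ever be returned
```

A smaller k discards more of the unlikely tail at each step, so moving from 40 to 30 narrows the candidate set only slightly.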

I found that feeding in a long prompt with many anime-related phrases & words seemed to improve output quality, and I noticed it would easily generate MAL/Wikipedia-like “plot summaries” if I prompted it the right way, so I went with that. (I threw in a parody light novel title to see if GPT-2-117M would catch on, and as for “Raccoon Girl”, that was a little joke about The Rising of the Shield Hero which I’d seen so many anime memes about in January/February 2019.)

I couldn’t figure out gpt-2-PyTorch’s minibatch functionality, so I settled for running it the simplest way possible, 1 text sample per invocation, and parallelizing it with parallel as usual.

The Bash script I used to generate all samples:

function gpt2 {
    BIT="$(($@ % 2))"; # use ID/seed modulo 2 to split GPT-2-117M instances evenly across my 2 GPUs
    CUDA_VISIBLE_DEVICES="$BIT" python main.py --seed "$@" --top_k 30 --text "Anime ai nani arigatou gomen \
sayonara chigau dame Madoka jigoku kami kanojo mahou magical girl youkai 4koma yonkoma Japan Oreimo baka \
chibi gakuran schoolgirl school uniform club Funimation Gainax Khara Ghibli Hayao Miyazaki Saber Fate \
Stay Night Pop Team Epic Japanese Aria Escaflowne Kanon Clannad comedy itai manga Shonen Jump pocky \
tsundere urusai weeaboo yaoi yuri zettai harem senpai otaku waifu weeb fanfiction doujinshi trope \
Anime News Network Anime Central Touhou kanji kaiju Neon Genesis Evangelion Spice and Wolf Holo Asuka \
kawaii bishonen bishojo visual novel light novel video game story plot Fruits Basket Toradora Taiga \
Aisaka tiger Detective Conan Pokemon Osamu Tezuka cat ears neko romantic comedy little sister character \
plot drama article nekomimi bunny isekai tanuki catgirl moe manga manga manga anime anime anime review \
plot summary. An exciting cute kawaii new anime series based on a light novel shoujo manga called \
\"I Can't Believe My Alien Visitor Is My Little Sister\" or \"MAVISM\", a sequel to \"Raccoon Girl\", \
is the latest hit. In the first episode of this anime, " \
        >> /media/gwern/Data/thiswaifudoesnotexist/snippet-"$@".txt;
    cat /media/gwern/Data/thiswaifudoesnotexist/snippet-"$@".txt; }
export -f gpt2
seq 0 70000 | parallel --jobs 12 --progress gpt2

# Computers / CPU cores / Max jobs to run

# 12:local / 32 / 12

#

# Computer:jobs running/jobs completed/%of started jobs/Average seconds to complete

# local:1/0/100%/0.0s harem anime heroine "Raccoon Girl II" was shown at Ani \

# me Funimation's Anime Festival (and it's a very popular anime) and was nominated as a top 100 anime \

# on the Tokyo Otaku Mode list (I'll tell about that soon, I promise) . The suitable ending for the f \

# irst episode (after a lot of scenes with a lot of bad guys, which is the end of episode three of the \

# series) was shown as an early preview of the game which can also be downloaded fro \

# m the website.

# In my second review, I went by many titles, which you can find all here to download t \

# he episodes as well, but for the sake of brevity, here is an overview of the games I saw (I won't go \

# into all the other titles because I think there are too many titles you can download that might be \

# good, I have some I wouldn't name because I don't want to spoil them) 土安黄满收

# The only one t \

# hat I did not watch the whole series for my liking was "AoT, the End". The first episode \

# is about (Katsuko) x2 and x2 was my first love story, x2 is one whe \

# re it all gets a second time. This anime x2 is not only a really great game and it \

# x2, x3. But for a few moments of enjoyment x3 was the ending and I \

# feel that I am not here to argue that "it wasn't even good but it was awesome and it changed my l \

# ife it was fun it made my eyes roll I felt that it was great, it fixed any that I"

# 100%|████████████████████████████████████████████████████████████████| 512⁄512 [00:14<00:00, 35.54it/s]

# ...

While running the first pass, some GPT-2-117M instances will fail for reasons like running out of GPU VRAM (each instance takes almost 1GB of VRAM and my 1080tis only have ~10GB usable VRAM each). These can be fixed by looking for empty text files, extracting their ID/seed, and trying again:

MISSING_TXT=$(find /media/gwern/Data/thiswaifudoesnotexist/ -type f -name "*.txt" -size 0 \
    | cut -d '-' -f 2 | cut -d '.' -f 1 | sort --numeric-sort)
echo "$MISSING_TXT" | parallel --jobs 5 --progress gpt2

The final generated GPT-2-117M text samples have a major flaw: it will frequently end its generation of anime text and switch to another topic, as denoted by the token <|endoftext|>. These other topics would be things like sports news or news articles about Donald Trump, and would ruin the mood if included on TWDNE. The problem there is that gpt-2-PyTorch does not monitor the model’s output, it just runs the model for an arbitrary number of steps regardless of whether <|endoftext|> has been reached or not. To remove these unwanted topic changes & leave only anime text, I run each text file through sed and it exits/stops processing when the token is reached, thereby deleting the token & everything afterwards:

find /media/gwern/Data/thiswaifudoesnotexist/ -name "snippet-*.txt" -type f \
    -exec sed -i '/<|endoftext|>/{s/<|endoftext|>.*//;q}' {} \;

This leaves 70k clean anime-themed gibberish text samples which can be loaded by the same randomization process as the faces: hours of fun for the whole family.
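The two cleanup steps (finding empty snippet files to retry, and cutting each snippet at the end-of-text token) can also be sketched in Python. This is an illustrative equivalent of the shell commands rather than what was actually run; the function names are invented, and the demonstration uses a throwaway temporary directory instead of the real site directory.

```python
import tempfile
from pathlib import Path

END_TOKEN = "<|endoftext|>"


def truncate_at_end_token(text, token=END_TOKEN):
    """Drop the end-of-text token and everything after it."""
    head, _, _ = text.partition(token)
    return head


def empty_snippet_ids(directory):
    """Return the sorted IDs of snippet-N.txt files that are empty (failed runs)."""
    ids = []
    for path in Path(directory).glob("snippet-*.txt"):
        if path.stat().st_size == 0:
            # "snippet-123.txt" -> stem "snippet-123" -> ID 123
            ids.append(int(path.stem.split("-")[1]))
    return sorted(ids)


# Small demonstration on temporary files.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "snippet-7.txt").write_text("")  # simulates a run that ran out of VRAM
    Path(tmp, "snippet-8.txt").write_text("Anime plot...<|endoftext|>Sports news")
    retry = empty_snippet_ids(tmp)
    cleaned = truncate_at_end_token(Path(tmp, "snippet-8.txt").read_text())

print(retry)    # IDs to feed back into the generator
print(cleaned)  # text with the off-topic tail removed
```

Text without the token passes through unchanged, so the truncation is safe to run over every snippet.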

GPT-2-Anime Plot Synopses for GPT-2-117M

okay my brain literally cannot comprehend the fact that these aren’t just real drawings and were made by a computer. what

I needed to feed GPT-2-117M such a large prompt because it is a general language model, which has learned about anime as merely one of countless topics in its giant corpus. The primary goal of training such language models is to then use them on narrow task-specific corpuses to ‘finetune’ or ‘transfer learn’ or provide ‘informative priors’ for a new model, with the global knowledge serving to model all the generic language (if you have a small corpus of medical clinical reports, you don’t want to waste precious data learning something like capitalization) and getting the model up to speed for the new corpus, and tapping into hidden knowledge of the original.

By the same reasoning, training GPT-2-117M on an anime text corpus might lead to better or at least, funnier, generated text. nshepperd wrote some GPT-2 training code for finetuning and retrained GPT-2-117M for ~3 epochs on Canggih P Wibowo’s 2016 Anime News Network scrape, using the title & plot synopsis fields (TITLE|PLOT SYNOPSIS). I have also used the finetuning code for Project Gutenberg poetry and wrote a GPT-2-117M finetuning-training tutorial using the poetry as a running example.
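The TITLE|PLOT SYNOPSIS layout amounts to one record per line with a pipe separating the two fields. A hypothetical formatter is sketched below. The function name and the whitespace handling are assumptions for illustration, not a description of how the scrape was actually preprocessed.

```python
def format_record(title, synopsis):
    """Render one entry as a single 'TITLE|PLOT SYNOPSIS' line."""
    # Collapse internal newlines and repeated spaces so each record stays on one line
    # (an assumption about the corpus layout, not something the scrape guarantees).
    clean = " ".join(synopsis.split())
    return f"{title.strip()}|{clean}"


# Made-up example record, not taken from the dataset.
print(format_record("Example Title (TV)", "A boy\n  meets a witch."))
```

A model finetuned on many such lines learns the separator as part of the format, which matters for the behavior described further on.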

GPT-2-117M appeared to start overfitting quickly and training was stopped, yielding a final trained checkpoint we call “GPT-2-anime” (441MB). To use it, one can simply unpack it into the GPT-2-117M model directory, overwriting the files, and then run the OA code as usual.

Sample unconditional output:

======================================== SAMPLE 1 ========================================

a boy who works in a magic shop. Her magic power is not despite the power

these animals and the atmosphere grows stronger every night. She can't use

magic at all so she can get her witch powers back.

Shirobako: My Island (TV)|"Abe Eigaku is a rookie high school student who is

about to have a battle dyed by the colors of samurai and a sordid fangirl who

only wished to destroy the hell that befall him. One day, he runs into a

samurai and strikes a deal. The deal: If he can get to, then distract the

soaked Edo sword will be able to change his form. The deal was an attack, and

the young boy is left with an injuries and he is left with bitter injuries in

the hands of the Kumano family. From the experience that inherits the "gods'

ability" in him, he comes into an outdoor fighting ring with the Gices. There

he meets Setsu, a former show comor who has changed his life---like his father,

himself as a ""demon"" of the Gices, and a member of the Gices. At the family's

house, he makes his ultimate weapon, the three-headed demon Gekkou, Kabuto.

Yukino comes to his aid, and their rivalry soon evolves as they head into more

serious and skillful monsters. With his ability, Yukino faces others both

fierce and charming. The series follows their everyday lives, together with

their personal ones and each other, as they go through their daily lives and

try to grow up as close to each other as possible to the one they love.

Berserk: The Golden Age Arc (TV)|Guts is a young man who has been accepted into

a powerful industrial city by a mysterious woman named Null Mother, and is then

summoned to the city of Midland. The first he ever summoned to his world was

the Ghost Ship, a brutal battle that has been held on for thousands of years.

Now he is on the eve of the Bloody War, when the inhabitants of the little

world try to destroy the automated sentry. However, Guts cannot stop them and

blithelyave their fate as the inhabitants of Midland are devourant of the very

world they reside in.

Lupin III: The Legend of the Gold of Babylon (movie)|Deep beneath New York city

are buried tablets that tell the tale of Babylon's gold that was lost during

Babylon's destruction. Lupin is interested in finding this gold, but will have

to deal with two mafia families and Zenigata during his quest in unsolving the

mystery of the tablets. During Lupin's journey he encounters an old woman who

has a connection with this treasure.

Fortune Arterial: Akai Yakusoku (TV)|Kohei Hasekura has lived a live of

transferring schools for boys all his life. He's been the site of a recently

bankrupt well known as Moon River for years and has been looking forward to

getting it back. At his new school, however, he sees the school's most wanted

fight and gets a duel to choose the strongest in the class. The battle is about

to be fought by a monster called Hildegarn either. Hasekura's friend Orca

Buchizouji gets dragged into the fight and loses a majority of key to the duel.

Hasekura fights alone against the bullies and punks from the previous season,

and when the duel is over everyone comes to an end.

Gunslinger Girl: Il Teatrino (TV)|When the Social Welfare Agency investigates

the disappearance of an operative, their inquiry leads them right into the lair

of their rival, the Five Republics. The assassin Triela infiltrates the hostile

organization, but her search is cut short when she finds herself staring down

at the barrel of a gun...

Yuruyuri Nachu Yachumi! (TV)|

Magical Sisters Yoyo & Nene (TV)|Yoyo and Nene are witches living in the

Magical Kingdom who specialize in curse and decusing. They are negotiating with

a woman who wants to find her sister, a witch that disappeared twelve years

ago, when a monstrous tree appears in front of Yoyo and Nene´s house. Embedded

within the tree are unfamiliar buildings which prompt Yoyo to explore these

strange constructions. During her scout, Yoyo unintentionally ends up thrown

into modern day Japan. He is taken away by a doll-cat who falls from a magical

tree on his way back. He is taken back to Japan by Gogyou, a girl who is their

own, who falls from a magical tree. He and his friend Nanami lands on a magical

journey to recover the lost magical powers of the magical stone.

Sakura Taisen: Ecole de Paris (TV)|"Anastasia was supposed to be invaded by

various people. She is so damaged that she can't see anything. But her friendces

There are two immediately visible problems with the GPT-2-anime output.

Too Short: prompted/conditional output from GPT-2-anime looks… much the same.

Apparently what happened was that during the finetuning, GPT-2-117M learned that the format was incorrigible and internalized that to such an extent that it would only generate title+short-synopsis-followed-by-new-pairs. If a prompt is provided, it might influence the first generated pair, but then GPT-2-anime knows that the prompt is guaranteed to be near-irrelevant to the next one (aside from perhaps franchise entries alphabetized together, like sequels) and can be ignored, and each title+plot synopsis is only a few sentences long, so GPT-2-anime will transition quickly to the next one, which will be effectively unconditional.

This is annoying but perhaps not that big of a problem. One can surely fix it by using a dataset in which the title/synopsis is then followed by a much longer plot description (perhaps by merging this ANN scrape with Wikipedia articles). And for generating random text snippets for TWDNE, we don’t need the control since the unconditional samples are all on-topic about anime now (which was part of the point of the finetuning).

Low Quality: some outputs are thoroughly unsatisfactory on their own merits.

The first entry lacks a series title and any introduction of the premise. One entry has a title—but no plot. And while most are shorter than I would like, the last one is simply far too short even for a plot synopsis.

This is a problem. The outputs are moderately interesting (eg. “Shirobako: My Island”) and provide a good plot synopsis which is a nice starting point, but as-is, are unacceptably low-quality for TWDNE purposes, since they are so much shorter & less interesting on average than the long prompted GPT-2-117M examples were.

However, the 2 problems suggest their own solution: if the GPT-2-anime plot synopses are good premises but GPT-2-anime refuses to continue them with plot/dialogue, while GPT-2-117M generates good long plot/dialogue continuations but only if it’s given a good prompt to force it into anime mode with useful keywords, why not combine them? Generate a wacky plot synopsis with GPT-2-anime, and then feed it into GPT-2-117M—to get the best of both worlds!

As before, sed will take care of the <|endoftext|> markers (& if one doesn’t like the synopses’ “Source:” end-markers, they can be removed with sed -e 's/ (Source: .*//'), the titles can be italicized, and we can post-process it further for quality: if either the synopsis or the plot summary is too short, drop that text snippet entirely. This should yield long, consistent, high-quality anime-only text samples of the form title/synopsis/coherent plot-summary or dialogue or article about said title+synopsis.
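The post-processing rules can be sketched as two small functions. This is an illustrative Python rendering, not the pipeline actually used (which is shell). The thresholds of 150 and 250 characters are the ones used in the script for snippets #70,000–100,000, and the function and constant names are invented here.

```python
import re

MIN_PROMPT_CHARS = 150  # title + synopsis must be longer than this
MIN_PLOT_CHARS = 250    # the continuation must be longer than this


def clean_synopsis(line):
    """Turn 'Title|synopsis (Source: X)' into '_Title_: synopsis'."""
    title, sep, synopsis = line.rpartition("|")  # split at the last pipe
    if not sep:
        return None  # not a title|synopsis line
    synopsis = re.sub(r" \(Source: .*", "", synopsis)  # strip the end-marker
    return f"_{title}_: {synopsis}"  # underscores mark the title as italic


def combine(prompt, plot):
    """Join synopsis and continuation, or return None if either is too short."""
    if len(prompt) <= MIN_PROMPT_CHARS or len(plot) <= MIN_PLOT_CHARS:
        return None
    return f"{prompt} In the first episode of this anime {plot}"


print(clean_synopsis("Example Title (TV)|Two witches open a shop. (Source: ANN)"))
```

Dropping short pairs outright, rather than padding or regenerating them, is the simplest way to keep quality up when there are more synopses than needed.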

After generating 12MB of GPT-2-anime plot synopses yielding ~35k synopses (assuming some percentage will be thrown out for being too short), I fed them into this script for GPT-2-117M similar to before, to generate text-snippets #70,000–100,000:

TARGET="/media/gwern/Data/thiswaifudoesnotexist"
I=70001
# keep only title|synopsis lines, and italicize the title as "_Title_: synopsis"
grep -F '|' /home/gwern/src/gpt-2/samples | sed -e 's/^\(.*\)|/_\1_: /' | \
    while IFS= read -r PROMPT; do
        # skip synopses which are too short to be interesting
        if [ ${#PROMPT} -gt 150 ]; then
            # continue the synopsis with the original GPT-2-117M, cutting at <|endoftext|>
            PLOT="$(CUDA_VISIBLE_DEVICES=1 python main.py --seed 5 --top_k 40 \
                --text "Japanese anime manga light novel. $PROMPT. Plot Summary. In the first episode of this anime " | \
                sed -e '/<|endoftext|>/{s/<|endoftext|>.*//;q}')"
            # skip continuations which are too short
            if [ ${#PLOT} -gt 250 ]; then
                echo "$PROMPT In the first episode of this anime $PLOT" \
                    >> "$TARGET/snippet-$I.txt"
                echo "$TARGET/snippet-$I.txt"
                cat "$TARGET/snippet-$I.txt"
                I=$((I+1))
            fi
        fi
    done

Sample combined output:

/media/gwern/Data/thiswaifudoesnotexist/snippet-71240.txt

_Mayonaka wa Junketsu_: His older brother has been hospitalized for about 5

weeks for about 6 weeks. Because of his unaccidental death, Ryouta's parents

send him to live with his uncle, Yuuichirou. To his surprise, Yuuichirou is

actually the son of his father's lover! Will Ryouta get to know Yuuichirou?

(from B-U) In the first episode of this anime 【The Second Episode】, the

story takes place in Koegi-ji town. After Yuuichirou and his family leave to

live with his uncle, they encounter "Ryouta and the three of them". They learn

about their real relationship, but they're not going to tell this kind of story

for fear of being banned from the show. The characters' father, Kazui, is the

first name his family does not use. The story begins with the trio and Kazui

being together, while their parents are fighting for the survival of their

families, Yuuichirou and Ryouta in the middle of a battle. 【In the Next

Chapter】 I also do a series of interviews with people from the anime.

---------------------------The story is about three girls in the house.

-------------------From that moment on, the plot follows them as their story

goes on. They are all in shock about what has happened, but they believe that

Yuuichirou is the real Ryouta. From that moment on, they feel they have a

chance to change their path for the better after the events at the hospital

which led to Yuuichirou's death to come home and they try to gain friends by

helping Yuuichirou. ---------------There are many other characters besides the

three in the story, so what is the real one? The main character is called

Yuuichirou Kazui ( 招仮猿 名子 ) 【First Character】 【Second

Character】 【Third Character】 ---------------This anime tells a story very

similar to the show, just with characters that make you smile. If you are in a

certain situation and see the person that is your favorite, there is no

problem. However, you need to be mindful of the following things to make sure

you know who you are dealing with. The story is very short, but there are many

things that need to be done in order to make you smile. There are many times

that I would write a scene that just needs to end, the most important part, of

the scene, or I would have to make the scene not end at all. As long as it

doesn't end with a big scene that was in a certain moment, you wouldn't be able

to use your eyes to make out that someone is laughing, or a child being played

or something. Just be mindful of how you are dealing with those moments and

keep yourself a little calm even if they are actually happening

/media/gwern/Data/thiswaifudoesnotexist/snippet-71241.txt

_Shijin no Koibito_: Collection of short stories: (1) Shibatte (2) Love Me Tell

Me (3) I Love You (4) I've Fine (5) Meido (6) Take Of The Class (7) Liar (8) Take Of

The Precious Parade In the first episode of this anime iku wa Hana

kawakukushigai wa (Love me tell me a secret), a student named Ritoye has to

learn to read all of the kanji correctly. While they are doing this, they'll

notice something odd, they can't talk and the class will change. They'll also

notice some other things that happened, and Ritoye will get annoyed with the

school and will leave her class. Once they're through with her class, she'll

explain the problems and the solutions. She'll begin by looking back through

their textbooks, searching for the kanji that says 'I'm on the line' and 'I'm

here to talk a little bit about the story'. At some point in the story Ritoye

finds out that the class used to be 'on the line' with 'I'm just being a

teacher', so if someone is on the line they can start a lesson by asking the

name of that person you're teaching and giving a name of the class 'I-Mitsu'

which you have to pick from a few names on the internet. While the topic gets a

little heated, there'll be a brief period of humor where the class becomes even

funnier. One character shows off some random drawings while Ritoye is sitting

by her desk, so maybe it's not a complete mess of drawings, but I was sure it

would have been better if it was. The manga was originally published in

February 2014. I'll update this article if anything changes, then give up.

------------- RITOYE: A school principal

~ ~ ~

My parents will be around a week or two after school. During that time, the

school's president will take care of the school and it will be my job to pick

up students and take care of the textbooks.

We don't even need to call her. Her name is Ritoye.

She has all the classes they need, but she still says she doesn't like 'those

guys...

I love you everyone. I really do.'

I'm here, to get this in front of class, as I want to make sure she knows I'm

serious.

That's what the head teacher says.

I'll just take everything from you to make sure she knows that I'm serious.

"You, you're the best teacher in class, aren't you?"

It doesn't matter how difficult, we'll give you everything. We'll

/media/gwern/Data/thiswaifudoesnotexist/snippet-71242.txt

_Hitodenashi no Hirusagari_: A mysterious disease. A disease. The disease has

drastically differ from the usual suspects of the type of disease, but it is

basically living in a hospital as a guinea pig for a few weeks. A short story

of a person's persistent determination to save a person's life, and the story

of a person's struggles to find it in this "comedy" story. (Source: MU) In the

first episode of this anime 『Hitodenashi no Hirusagari』 (1962) the

protagonist, Hiroki, begins his life. In the next episode he has to learn that

he cannot leave Kyoto. From this he starts to find out more about himself and

the world, as well as a great deal about the life he has created for himself.

He learns a great deal about himself, as well as about the world. It goes

through the story like the story of a kid having a terrible experience...or the

story of young people taking it upon themselves. What Hiroki learns, however,

is quite difficult to grasp because of the many factors that are involved.

This is the first light novel I read that started off as a light novel, in the

spirit of the first book that came out in English.

When I came through the last arc of this short anime, I was very disappointed.

I was expecting much much more of a series of stories and anime but instead, I

found that it felt more about the plot. As the first episode started, in a

similar manner to the last arc, each arc is different from the previous arc.

From what I can gather, many plot points were missed when read through and how

often it was made a point of focus to tell a story instead of the original.

At the end of a story, you get to make your own sense of it based on how you

read it.

One time there was two main things left out as the main plot that needed me to

know a lot more.

First, I couldn't really get that the stories you saw in the first arcs were

actually the people that you could actually talk to.

This left me feeling more like I had lost some of my sense of immersion in a

story and just didn't really find it there. For example, I started to feel that

the main plot was very different from what you would see in the first arcs. The

two main themes we saw in the first arcs were how to live and how to do a life.

I found the story of how the life of a person is different from one that you

might see in the books.

The story of a person's living and living alone is also different from the

story of the world that you might see in a short play.

And to answer the question that many people have asked me over the last six

months, and these comments, but in order to

/media/gwern/Data/thiswaifudoesnotexist/snippet-71243.txt

_The King Of Debt_: Souta is a rich guy, who is unlucky in love, and punishes

by the rich son of a rich Chinese mafia as he is tossed about debt. But is he

really bad at such a guy? (from B-U) In the first episode of this anime

何月处, Souta is forced to marry a rich kid to buy him a piece of his ass. A

guy who doesn't know how to make friends with strangers, only has a friend for

the rich guy's sake. Souta does this by being the only person in the house that

doesn't have a job but a car, and even a friend of hers can't really call her

sister 'fae'. He also has an idol he can sing on stage, and he is forced to

fight for her (a guy named Katsu) against Katsu himself in the episode entitled

Souta Is Fae. In a final episode, he is forced to run the family business for

$100,000. What's the point in doing it? The kids only get to run the business,

but when they pay out the money they want in their inheritance, Souta starts to

run the shop as well. It's very simple for Souta and the guy he's running his

business for. After the episode has been rated H, the new episode has been

rated H...

favorite favorite favorite favorite ( 5 reviews )

Topics: Drama, TV, Romance, Romance

Community Audio 91 91 Seiken Hamamoto's Hacronyms 2 1 of 1 View 164 of 190

Topics: Drama, TV, Fantasy, Fantasy

Community Audio 88 88 The Man From The Outer Heaven 4 3 of 3 View 164 of 189

Topic: Drama, OVA

Community Audio 87 87 Seiken Hamamoto's Hacronyms 9 8 of 8 View 164 of 190

Topic: Drama, OVA

Community Audio 85 85 The Man From The Outer Heaven 3 2 of 2 View 164 of 185

Topic: Drama, OVA

Community Audio 84 84 Seiken Hamamoto's Hacronyms 2 2 of 2 View 164 of 189

Topic: Drama, OVA

Community Audio 83 83 Seiken Hamamoto's Hacronyms 3 3 of 3 View 164 of 190

Topic: OVA, DVD, Blu-ray, DVD-R

Topics: Drama, TV

Community Audio 82 82 Seiken Hamamoto's Hacronyms 3 3 of 3 View 164 of 190

Topic: Drama, OVA

Community Audio 81 81 Seiken Hamamoto's Hacronyms 2 2 of 2 View 164 of 188

Topic: OVA, DVD,

/media/gwern/Data/thiswaifudoesnotexist/snippet-71244.txt

_Yuuhi Zukan_: A story about a student council president and his student

council president, and how they are going to boot on the plan of how to change

the financial system. Unfortunately, this plan results in both failure and

budget failure. (from B-U) In the first episode of this anime 一日本の橙

and other characters form a conspiracy to gain access to his store, after being

cut in half, they make their way to the store to recruit them using the powers

of a demon. The demon has three characteristics: a strong, sharp eye, a strong

hand; a black eye, with white and black pupils with a big black mark on, and a

silver eye, with a black eye mark. The Demon's ability to create spells is

"demonic mana" that can be activated as if it were "guru aura". After a brief

delay, however, the Demon enters a black space that has a black face, and then

the Demon is attacked by a small black hole in his chest. The Demon attacks the

small black hole and when the black hole dies, the Demon's power to create

spells is restored. The demon returns to his house where is an old man called

Tsubayasu who says that he is going to create a dragon with great power. He has

been searching for a good magic and after his work with Tsubayasu and his

students for about half a decade now, he sees the dragon coming on a distant

night. Once this happens, his plans fall apart. When he discovers it was some

magic wizard he has been using, he leaves Tsubayasu thinking that he can not

use the dragon because of the power-up and his plans have come to an end. He

wakes up his parents and tells them that Tsubayasu had found money and a dragon

he created. (from B-U). 一日京初日 歋未夢の橙面者 (from B-U) A

young girl named Fushikata is assigned to work for the student council

President in the store. Fushikata is an ordinary girl who works as a maid, yet

she is also an extremely dangerous person. She lives with her mother and sister

as the main characters of the series. One day Fushikata's mother goes missing.

When they return to the store, a group of students, with a group of girls,

enter. They are given the key to the store, but after they get home Fushikata

is killed by the two of them. The group discovers that the woman they have lost

is Tsubayasu's old teacher, Hidetoshi. At this time Fushikata starts to think

about how she should treat him at a later date but that she is too busy

The corresponding 30k faces are generated & upscaled as before, index.html is updated to randomize over 0–100,000, and so on.

GPT-3

Upgrade of ThisWaifuDoesNotExist.net, using GPT-3 to regenerate text samples. This section describes the prompt used, and provides the shell script for generating GPT-3 anime reviews.

The previous pipeline used a multi-stage workflow: a finetuned (on anime plot thumbnails) small GPT-2 would generate short anime snippets, which were used to prompt the full-sized GPT-2-1.5b along with keywords. GPT-3 is powerful enough to dispense with the need for this—a short simple prompt cuing a review of ‘new’ anime is enough to generate anime reviews & plot summaries of greater quality.

Further, as GPT-3 is hosted behind the OpenAI API, samples can be generated by a short curl script which calls the API with the prompt & hyperparameters, so the user does not (and cannot anyway, in light of GPT-3’s size) need to run GPUs etc. locally.

OpenAI released GPT-3 in June 2020 as a SaaS, with the model available only through a closed beta API. I was given access, and ran extensive experiments in generating poetry/nonfiction using the web interface. After satisfying myself with that, I thought to update TWDNE with GPT-3 anime plot summaries.

Because GPT-3 is so much more powerful than any of the GPT-2 models, the two-phase process and extensive keywords can be omitted entirely in favor of just a single short prompt—GPT-3 will get the idea immediately. After some experimentation (“prompt programming” remains a black art), I found that I could get a novel anime title & plot summary by framing it as a review of an anime from the future (eg. 2022). A simple prompt that worked nicely was

2022 Anime Fall Season Previews and Reviews

Previews of the latest and hottest upcoming Japanese anime this fall season!

Below, a review of the themes and plot of the first episode of the widely-anticipated 2022 fall original new 1-season anime

GPT-3 API

The API itself is straightforward to use, and can be interacted with via their Python package or just curl. You have to provide an API key, but otherwise, one just needs the temperature and top-p (for nucleus sampling) and a prompt, and one gets back the text completion. Since the prompt is so short, we don’t need to worry about issues like our text tokenizing into unexpected BPEs (which was a major issue with literary uses) or hitting the context window (doubled to 2048 BPEs, but still all too painfully narrow). The returned string is JSON like this:

{
  "id": "cmpl-zFdIa6r5oO6AV4iogorDoAXh",
  "object": "text_completion",
  "created": 1593397747,
  "model": "davinci:2020-05-03",
  "choices": [
    {
      "text": "Yutori-chan which is produced by Sunrise:\nThe Kind-Hearted T-back Hurdler\nYutori-chan\nAt\nlong last we welcome the challenging and heartwarming anime Yutori-chan produced by",
      "index": 0,
      "logprobs": null,
      "finish_reason": "length"
    }
  ]
}

GPT-3 Generation

So, to generate new anime plot summaries, one can just loop through with curl, extract the text field with jq, do a little reformatting, and that’s it:

for i in {0..100000};
do
    echo -n "Review of the themes and plot of the first episode of the new anime " > snippet-$i.txt
    ## NOTE: replace XYZ with your own API token (the API expects the form 'Bearer <your API key>').
    ## The request body must be valid JSON, so the prompt stays on one line with no comments inside it.
    curl --silent 'https://api.openai.com/v1/engines/davinci/completions' \
         -H 'Content-Type: application/json' -H 'Authorization: XYZ' \
         -d '{"temperature": 0.95, "top_p": 0.98,
              "prompt": "2022 Anime Fall Season Previews and Reviews\nPreviews of the latest and hottest upcoming Japanese anime this fall season!\nBelow, a review of the themes and plot of the first episode of the widely-anticipated 2022 fall original new 1-season anime \" ",
              "max_tokens": 700 }' |
        ## Select just the text completion:
        jq '.choices[0].text' |
        ## unescape quotes:
        sed -e 's/\\\"/"/g' |
        tee --append snippet-$i.txt
    echo -n "…" >> snippet-$i.txt
    sleep 3s
done

The hyperparameters are vanilla GPT-3 settings:

best-of (BO = 1): using Meena-style best-of ranking like BO = 20 is not worth the expense here as we are not asking tricky questions or assigning tasks with a ‘right answer’; for creative writing like anime reviews, regular sampling is fine

temperature: varying temperatures from 0.80 to 0.95 all work fine, so this is not a task that is temperature-sensitive—apparently there are always reasonable completions even if a lot of low-probability tokens are selected

top-p: I generally use nucleus sampling to cut off the gibberish tail of the bottom 2–5%; here, it didn’t make much difference that I could tell, so I left it at my usual 2% (0.98).
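As a rough illustration of what the top-p cutoff does, here is a toy sketch (not the API's actual implementation). The function name `nucleus_filter` and the token probabilities are invented for the example. It sorts "token probability" pairs by probability and keeps tokens until their cumulative probability reaches `top_p`, dropping the low-probability tail:

```shell
#!/usr/bin/env bash
# Toy nucleus (top-p) filter: hypothetical helper, for illustration only.
# Reads lines of "token probability" on stdin and prints the tokens that
# survive the cutoff, most probable first.
nucleus_filter() {
    local top_p="$1"
    # Sort by the probability column, descending, then accumulate.
    sort -k2,2nr |
        awk -v p="$top_p" '{ print $1; cum += $2 } cum >= p { exit }'
}

# Made-up distribution over 4 tokens; the rare gibberish token is dropped.
printf '%s\n' 'the 0.50' 'a 0.30' 'cat 0.15' 'zxqv 0.05' | nucleus_filter 0.90
```

With a cutoff of 0.90, this keeps `the`, `a`, and `cat` (cumulative probability 0.95) and discards `zxqv`. A looser cutoff like 0.98 would keep all 4 tokens of this tiny distribution, since real vocabularies have a far longer tail than this example.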

The use of future years in the prompt doesn’t always succeed in generating novel anime; here is a failure case, a perfectly sensible, coherent, plausibly-human-written anime review which is, however, too close to reviewing an anime which already exists:

Review of the themes and plot of the first episode of the new anime “DARLING in the FRANKXX”, scheduled to premiere on October 7th, 2017.

Visuals: 4.0/5.0 (Average)

The visuals of this new anime are nothing particularly spectacular, but still pretty good. Character designs are fairly unique, but there’s not a whole lot of colors for this episode. It does set up for a darker story and does everything pretty effectively.

Visuals are rarely an indicator of the anime’s quality, but it gives you a feel for what it looks like overall.

Sound: 3.5/5.0

This opening song was pretty cool, and the seiyuus did a great job in making their characters come to life.

Music wasn’t spectacular, but the voice actors did a great job in bringing their characters to life.

Story: 3.5/5.0

Surprisingly this seems like it’s going to be a pretty intense and gloomy romance story about having kids and falling in love. I’ll be definitely checking this out when it comes out.

The plot summary for “DARLING in the FRANKXX” is basically the setup of this episode.

Character: 3.5/5.0

This character I didn’t really like when she first started showing up, but by the end of the episode I was pretty much warming up to her. The MC doesn’t really do a whole lot in this episode, but he seems pretty nice. A lot of development is probably gonna go on with the other characters, so I’ll be watching out for that.

Closing Thoughts: (note: this was written before this anime came out)

This anime did end up being quite decent, and my complaints about it mostly concerned the time crunch, so it’s not the anime’s fault at all. I had only two other things that would have improved this anime even more: One would be to include more gags involving the flowers, which were actually pretty cute. They were a funny way to tell us about who the characters were and their dynamics with one another. The other would be to not rush things so much. Anyways this was a pretty great anime overall. I hope they do a second season. A quick review about this anime: (written after it was aired) When you first hear “DARLING in the FRANKXX”, you might not think that a 1920’s themed anime with an odd premise could be done, but believe me when I tell you it can, and it was done really well. A review of the first episode can be found on my previous post. They introduced the key elements to the plot well, and had a good plot outline. The beginning setup took a little getting used to, but it became quite cute by the end. Themes of the episode were mostly romance, and they did the themes well. It uses its music and visuals quite effectively. Overall the story was quite unique and well done. Other notable things would include a relatively high amount of diversity in characters, such as having a “henenak” as well as having a relatively equal amount of female to male characters. The art style for this anime was solid, although the art team wasn’t really given enough time to come up with a great budget for it. Therefore, the few colors it did have were used to the best of their abilities in drawing the characters. Overall, it was a pretty fun anime and I hope

But they are still much better than the GPT-2 ones!

So I generated 100,002 final snippets from 2020-07-12–2020-09-05, sometimes tweaking the temperature or prompt for variety’s sake, and resampling any completions below ~550 characters. (Total: 330,800 lines; 55,301,038 words; 328,099,060 bytes.)
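The resampling step can be sketched roughly as follows. This is a minimal sketch, assuming the snippets sit in one directory as `snippet-N.txt` files; the function name `short_snippets` is hypothetical, and only the ~550-character threshold comes from the text above. It lists the files that are too short, which could then be fed back into the generation loop:

```shell
#!/usr/bin/env bash
# List snippet files shorter than a minimum number of characters.
# Hypothetical helper: the directory layout and name are assumptions.
short_snippets() {
    local dir="$1" min="${2:-550}" f n
    for f in "$dir"/snippet-*.txt; do
        [ -e "$f" ] || continue            # glob matched nothing
        n=$(( $(wc -m < "$f") ))           # character count, whitespace-safe
        if [ "$n" -lt "$min" ]; then
            printf '%s\n' "$f"
        fi
    done
}
```

For example, `short_snippets . 550` prints the paths of any snippets in the current directory under 550 characters.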

GPT-3 Download

Snippets can be seen at TWDNE, of course, but are also available as a tarball archive (77MB).

Results

Anon, please tell me the artist of that picture. The way they draw that hair is amazing.

Anonymous

“Someone know who she is? (for a friend)”

“Probably from some weird visual novel.”

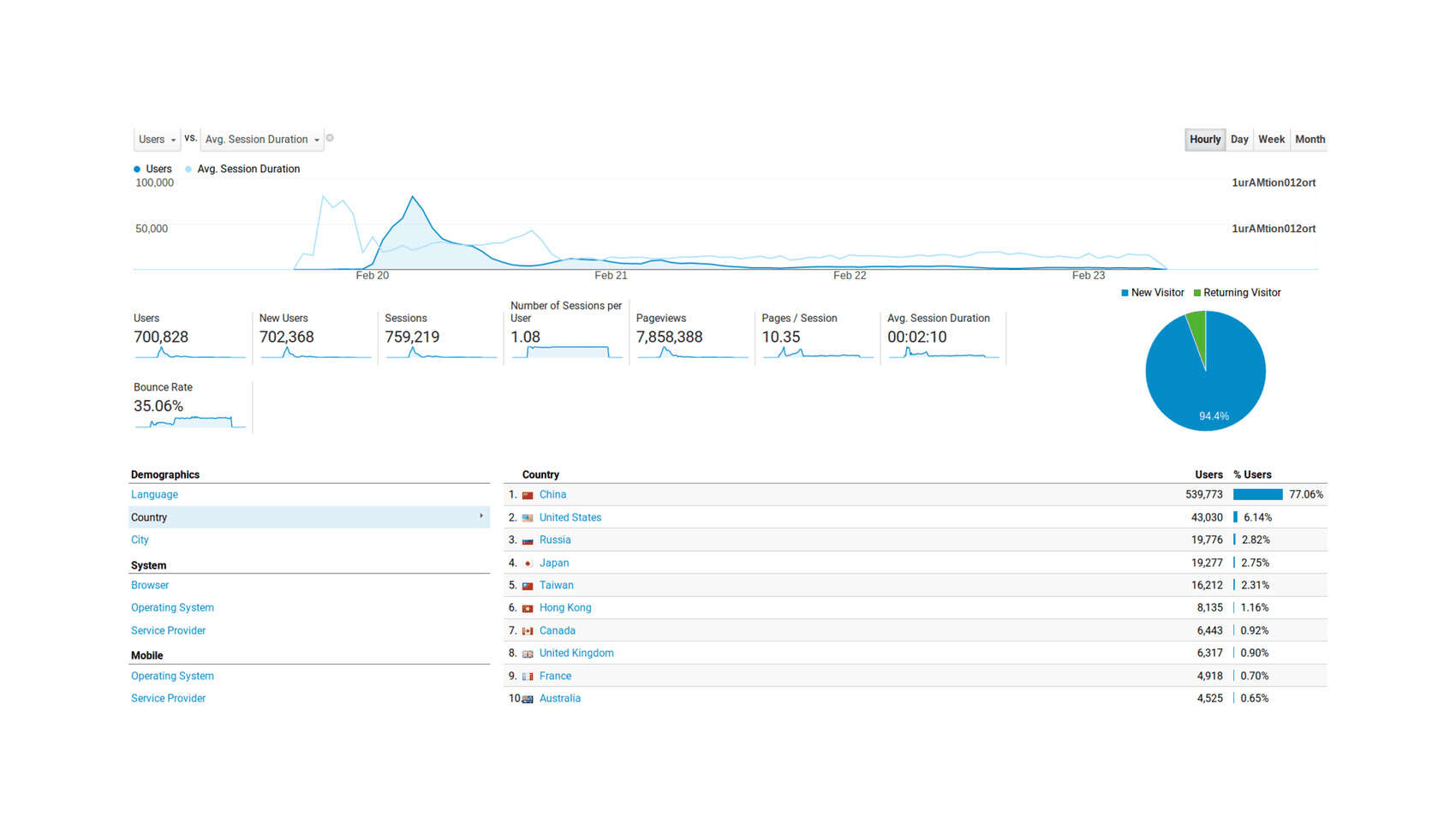

TWDNE traffic results: China virality drove hundreds of thousands of users, and traffic remains at nontrivial levels through to 2020.

I set up the first version of TWDNE with faces Tuesday 2019-02-19; overnight, it went viral after being posted to a Chinese FLOSS/technology website, receiving hundreds of thousands of unique visitors over the next few days (>700,000 unique visitors), with surprisingly long average sessions (I guess looking at all the possible faces is hypnotic, or some people were using it as a screensaver):

Google Analytics traffic statistics for TWDNE, 2019-02-19–2019-02-23

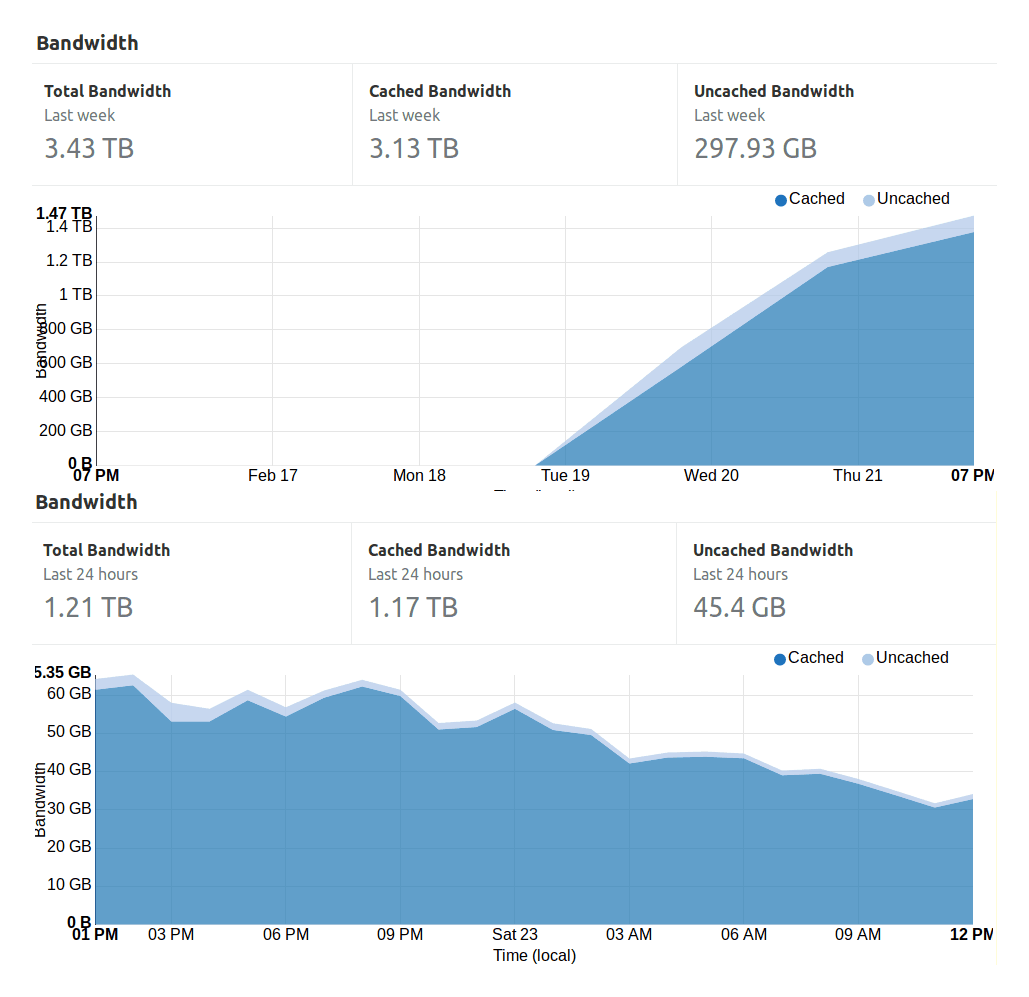

This traffic surge was accompanied by a bandwidth surge (>3.5TB):

CloudFlare cache bandwidth usage for TWDNE, 2019-02-19–2019-02-23, and 2019-02-22–2019-02-23

By 2019-04-03, there were >1 million unique visitors. Traffic remained steady at several thousand visitors a day for the next few months (~900GB/month), producing Amazon S3 bills of ~$90/month in 2019 dollars (~$114.82 inflation-adjusted), so in July 2019 I moved hosting to an nginx Hetzner server. By 2019-07-20, TWDNE traffic hit 1,161,978 unique users in 1,384,602 sessions; because of the JS refresh, ‘pageviews’ (12,102,431) is not particularly meaningful, but we can infer from the remarkable 1m:48s length of the average session that TWDNE users are looking at >7 images per session.
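The images-per-session estimate is just the average session length divided by the refresh interval. A minimal sketch of the arithmetic, assuming the 15s refresh described in the introduction still applied over this period (the function name is made up for illustration):

```shell
#!/usr/bin/env bash
# Estimate images viewed per session: session length / refresh interval.
# Hypothetical helper; both arguments are in seconds.
images_per_session() {
    local session_seconds="$1" refresh_seconds="$2"
    awk -v s="$session_seconds" -v r="$refresh_seconds" \
        'BEGIN { printf "%.1f\n", s / r }'
}

# 1m:48s = 108s average session, one new face every 15s:
images_per_session $((1*60 + 48)) 15
```

This gives 7.2, consistent with the ">7 images per session" figure.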

As jokes go, this was a good one. Once whole-images are solved, perhaps I can make a “This Booru Does Not Exist” website to show off samples from that!

External Links

Media:

“The AI tech behind scary-real celebrity ‘deepfakes’ is being used to create completely fictitious faces, cats, and Airbnb listings”, Business Insider

“ThisPersonDoesNotExist has spawned a host of amazing copycat sites”, Digital Trends

“‘This Person Does Not Exist’ Has Spawned a Host of AI-Powered Copycats”, Inverse

“A website creates an unlimited number of fake faces”, Yahoo! Finance (video)

“From Faces to Kitties to Apartments: GAN Fakes the World”, Synced

“Why websites are churning out fake images of people (and cats)”, CNN

“Deepfakes don’t just imitate celebrities. They can create people (and cats and Airbnbs) that don’t exist”, Deseret News

Social Media:

Foreign Media:

Cybozu blog (JA)

“美少女イラスト風に自動生成された「俺の嫁」画像をズラッと大量に並べて見せてくれる「These Waifus Do Not Exist」”, Gigazine (JA)

“この世に存在しない「美少女キャラ」を自動生成するサイトが爆誕!”, Yurukuyaru.com (JA)

JeuxVideo (FR)

OSChina (ZH)

“Находка: нейросети создают портреты людей, которых не существует”, Mirf.ru (RU)

Nerdcore.de (DE)

Social Impact

Yes.