Making Anime Faces With StyleGAN

A tutorial explaining how to train and generate high-quality anime faces with StyleGAN 1+2 neural networks, and tips/scripts for effective StyleGAN use.

- Examples

- Background

- FAQ

- Training Requirements

- Data Preparation

- Training

- Sampling

- Models

- Transfer Learning

- Reversing StyleGAN To Control & Modify Images

- StyleGAN 2

- Future Work

- BigGAN

- See Also

- External Links

- Appendix

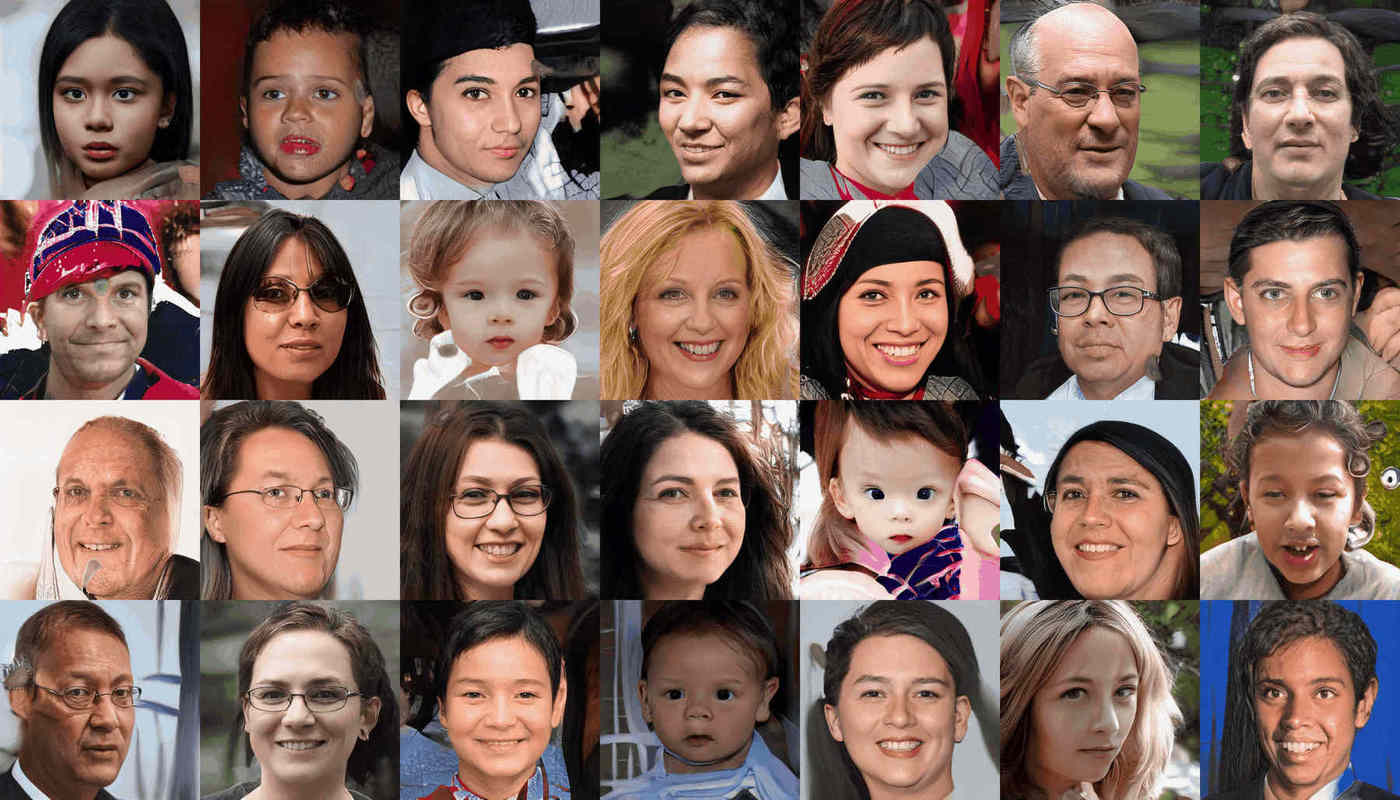

Generative neural networks, such as GANs, have struggled for years to generate decent-quality anime faces, despite their great success with photographic imagery such as real human faces. The task has now been effectively solved, for anime faces as well as many other domains, by the development of a new generative adversarial network, StyleGAN, whose source code was released in February 2019.

I show off my StyleGAN 1+2 CC-0-licensed anime faces & videos, provide downloads for the final models & anime portrait face dataset, provide the ‘missing manual’ & explain how I trained them based on Danbooru2017/2018 with source code for the data preprocessing, document installation & configuration & training tricks.

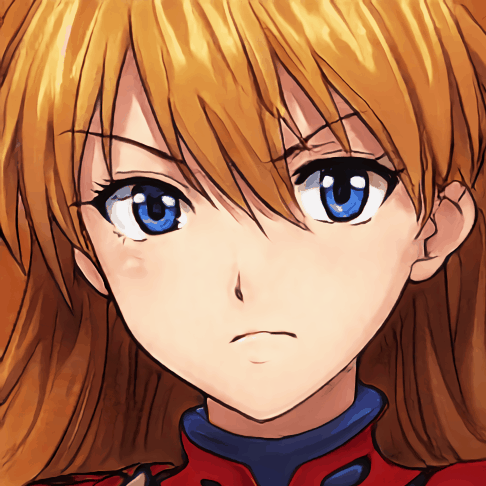

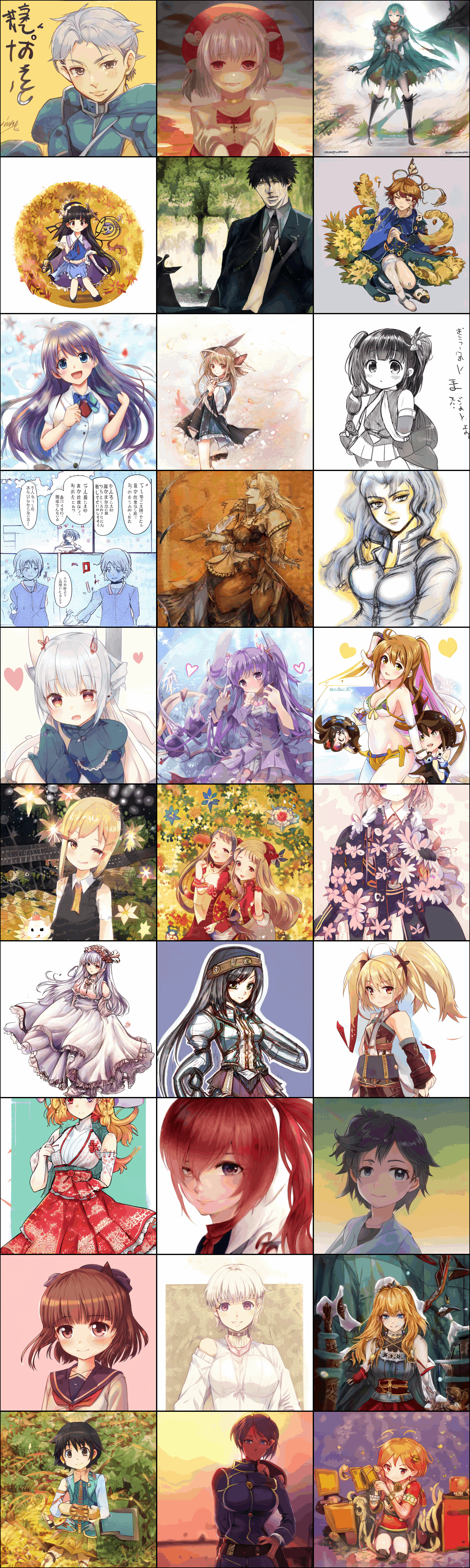

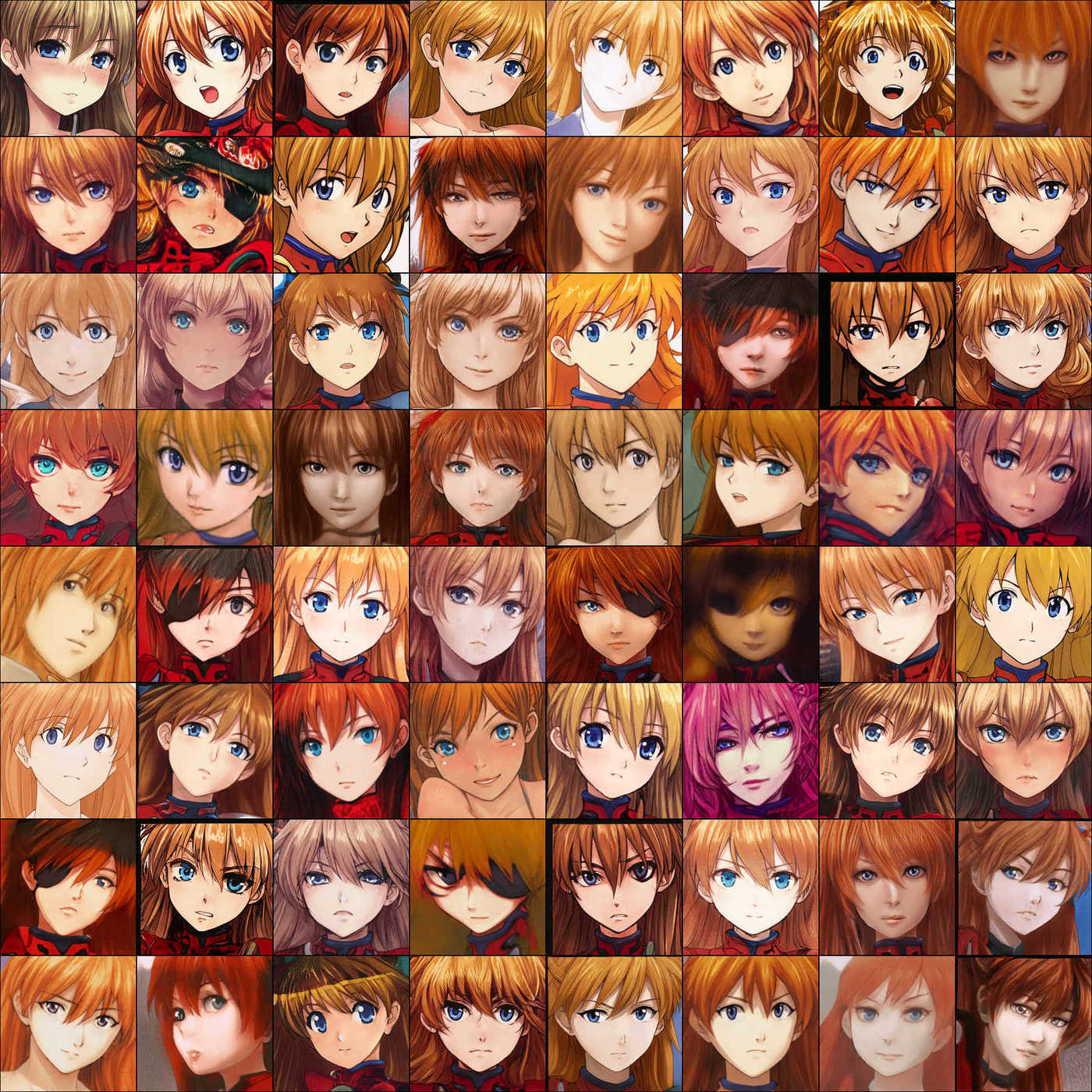

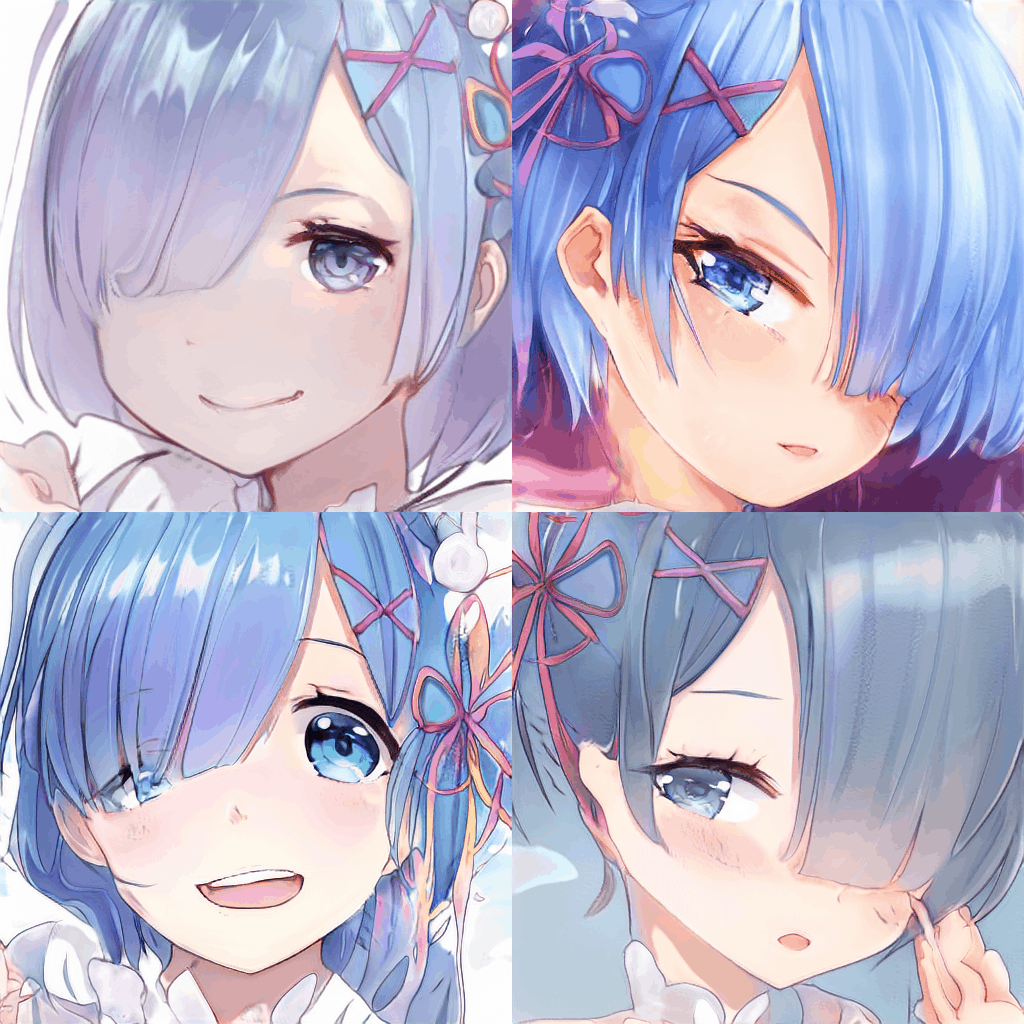

For application, I document various scripts for generating images & videos, briefly describe the website “This Waifu Does Not Exist” I set up as a public demo & its followup This Anime Does Not Exist.ai (TADNE) (see also Artbreeder), discuss how the trained models can be used for transfer learning such as generating high-quality faces of anime characters with small datasets (eg. Holo or Asuka Souryuu Langley), and touch on more advanced StyleGAN applications like encoders & controllable generation.

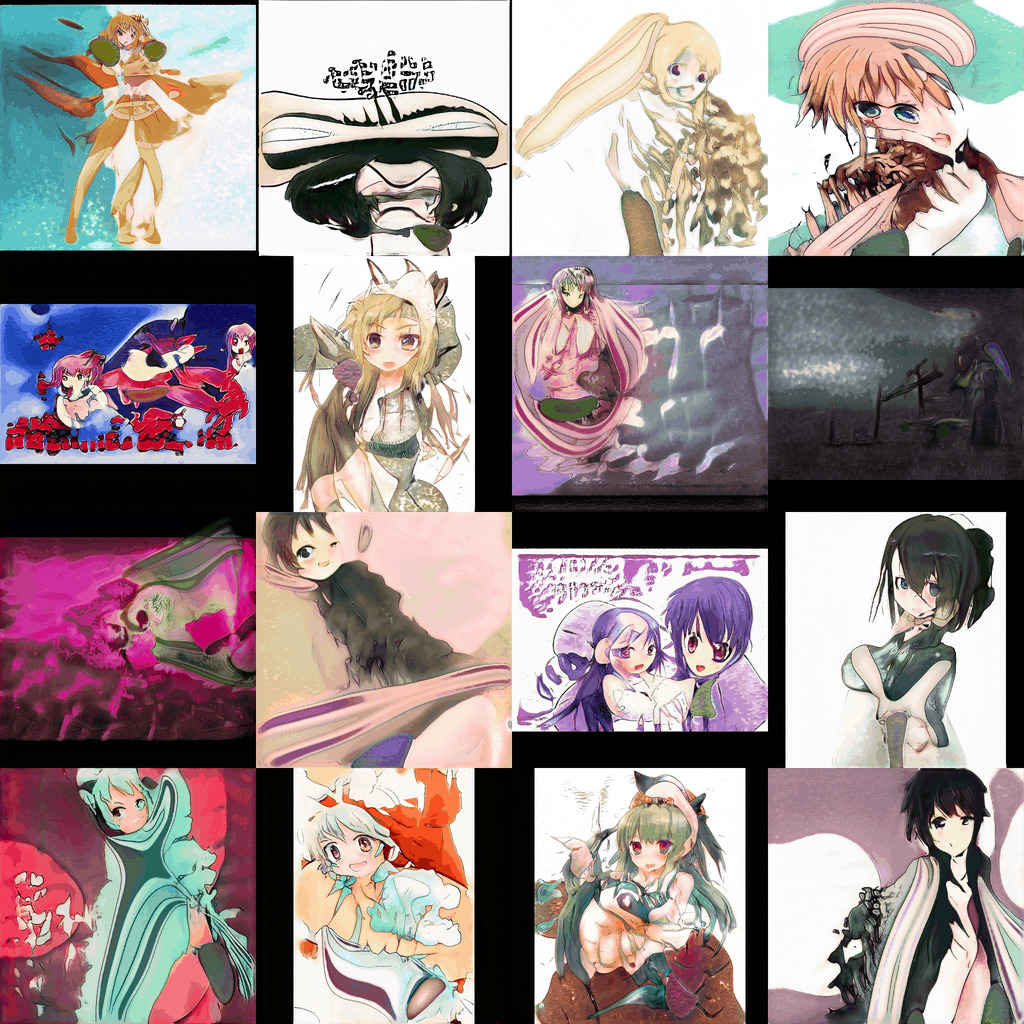

The anime face graveyard gives samples of my failures with earlier GANs for anime face generation, and I provide samples & model from a relatively large-scale BigGAN training run suggesting that BigGAN may be the next step forward to generating full-scale anime images.

A minute of reading could save an hour of debugging!

StyleGAN2 Is Obsolete

As of 2022, StyleGAN2 is obsolete for anime generation. Alternatives include (newest-first): Pony Diffusion v6 XL, niji • journey, Holara, Waifu-Diffusion, NovelAI, Waifu Labs, or Crypko.

When Ian Goodfellow’s first GAN paper came out in 2014, with its blurry 64px grayscale faces, I said to myself, “given the rate at which GPUs & NN architectures improve, in a few years, we’ll probably be able to throw a few GPUs at some anime collection like Danbooru and the results will be hilarious.” There is something intrinsically amusing about trying to make computers draw anime, and it would be much more fun than working with yet more celebrity headshots or ImageNet samples; further, anime/illustrations/drawings are so different from the exclusively-photographic datasets always (over)used in contemporary ML research that I was curious how it would work on anime—better, worse, faster, or different failure modes? Even more amusing—if random images become doable, then text → images would not be far behind.

So when GANs hit 128px color images on ImageNet, and could do somewhat passable CelebA face samples around 2015, along with my char-RNN experiments, I began experimenting with Soumith Chintala’s implementation of DCGAN, restricting myself to faces of single anime characters where I could easily scrape up ~5–10k faces. (I did a lot of Asuka Souryuu Langley from Neon Genesis Evangelion because she has a color-centric design which made it easy to tell if a GAN run was making any progress: blonde-red hair, blue eyes, and red hair ornaments.)

It did not work. Despite many runs on my laptop & a borrowed desktop, DCGAN never got remotely near to the level of the CelebA face samples, typically topping out at reddish blobs before diverging or outright crashing.1 Thinking perhaps the problem was too-small datasets & I needed to train on all the faces, I began creating the Danbooru2017 version of “Danbooru2018: A Large-Scale Crowdsourced & Tagged Anime Illustration Dataset”. Armed with a large dataset, I subsequently began working through particularly promising members of the GAN zoo, emphasizing SOTA & open implementations.

Among others, I have tried StackGAN/StackGAN++ & Pixel*NN* (failed to get running)2, WGAN-GP, Glow, GAN-QP, MSG-GAN, SAGAN, IntroVAE, VGAN, PokeGAN, BigGAN3, ProGAN, & StyleGAN. These architectures vary widely in their design & core algorithms and which of the many stabilization tricks (2019) they use, but they were more similar in their results: dismal.

Glow & BigGAN had promising results reported on CelebA & ImageNet respectively, but unfortunately their training requirements were out of the question.4 (As interesting as SPIRAL and CAN are, no source was released and I couldn’t even attempt them.)

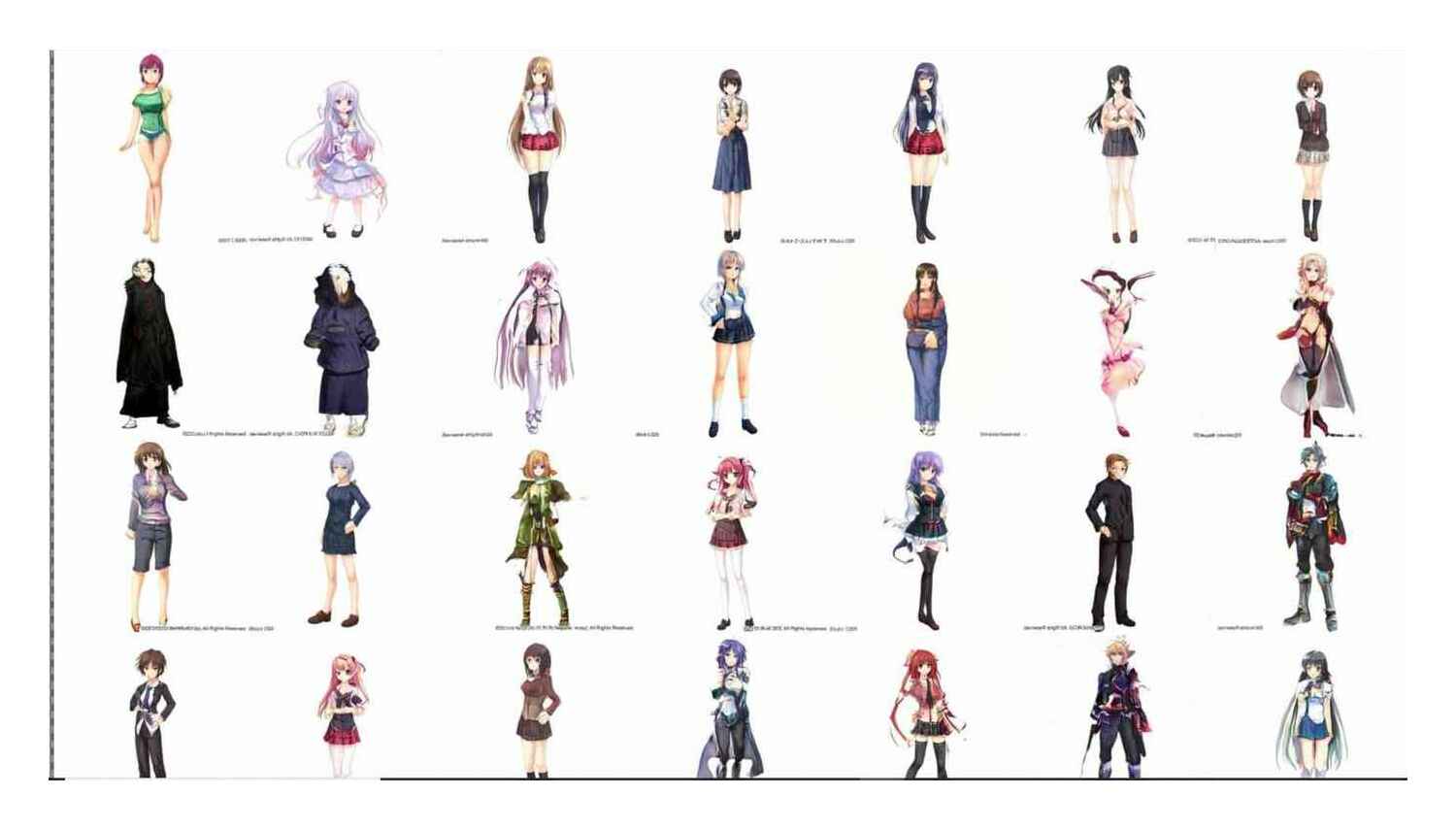

While some remarkable tools like PaintsTransfer/style2paints were created, and there were the occasional semi-successful anime face GANs like IllustrationGAN, the most notable attempt at anime face generation was Make Girls.moe ( et al 2017). MGM could, interestingly, do in-browser 256px anime face generation using tiny GANs, but that is a dead end. MGM accomplished that much by making the problem easier: they added some light supervision in the form of a crude tag embedding5, and then simplified the problem drastically to n = 42k faces cropped from professional video game character artwork, which I regarded as not an acceptable solution: the faces were small & boring, and it was unclear if this data-cleaning approach could scale to anime faces in general, much less anime images in general. They are recognizably anime faces but the resolution is low and the quality is not great:

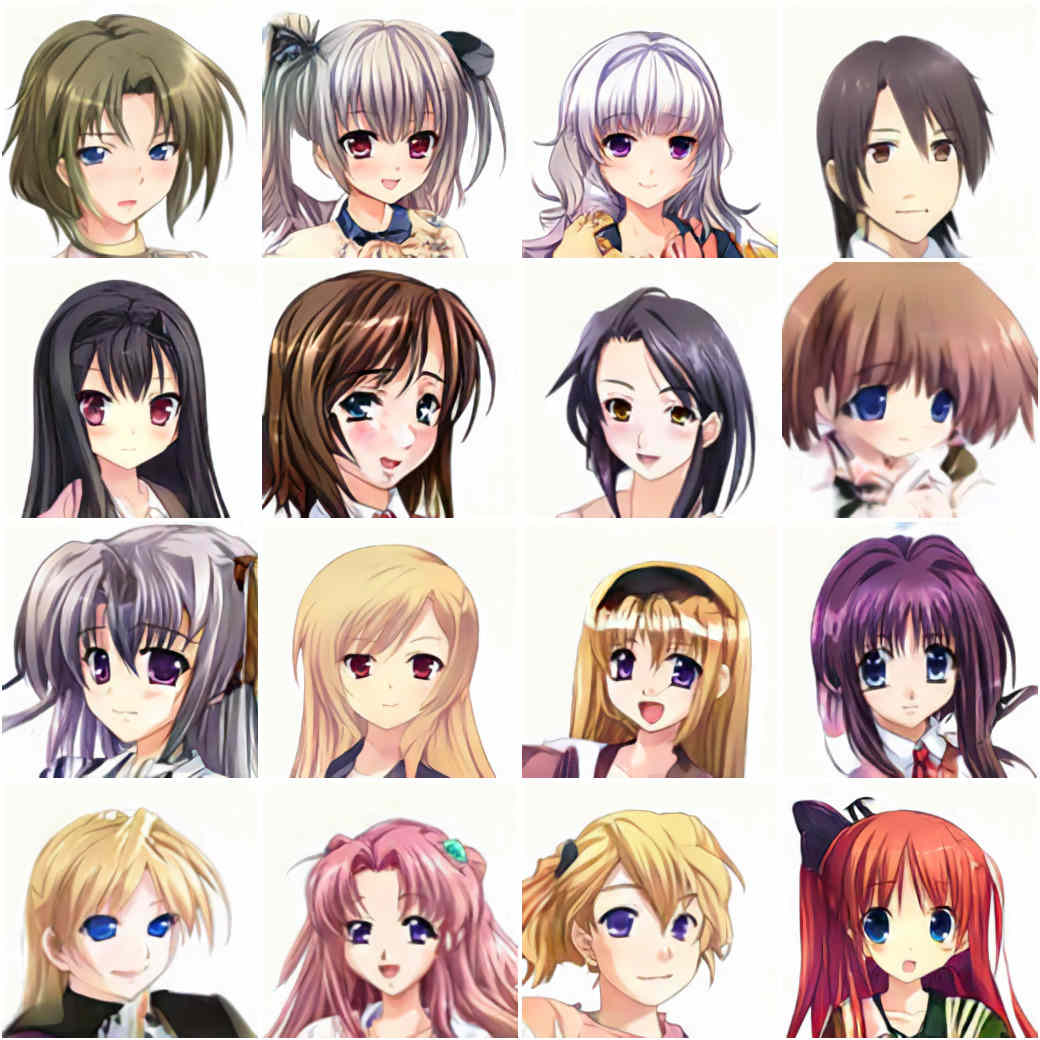

2017 SOTA: 16 random Make Girls.Moe face samples (4×4 grid)

Typically, a GAN would diverge after a day or two of training, or it would collapse to producing a limited range of faces (or a single face), or if it was stable, simply converge to a low level of quality with a lot of fuzziness; perhaps the most typical failure mode was heterochromia (which is common in anime but not that common)—mismatched eye colors (each color individually plausible), from the Generator apparently being unable to coordinate with itself to pick consistently. With more recent architectures like VGAN or SAGAN, which carefully weaken the Discriminator or which add extremely-powerful components like self-attention layers, I could reach fuzzy 128px faces.

Given the miserable failure of all the prior NNs I had tried, I had begun to seriously wonder if there was something about non-photographs which made them intrinsically unable to be easily modeled by convolutional neural networks (the common ingredient to them all). Did convolutions render it unable to generate sharp lines or flat regions of color? Did regular GANs work only because photographs were made almost entirely of blurry textures?

But BigGAN demonstrated that a large cutting-edge GAN architecture could scale, given enough training, to all of ImageNet at even 512px. And ProGAN demonstrated that regular CNNs could learn to generate sharp clear anime images with only somewhat infeasible amounts of training. ProGAN (source; video), while expensive and requiring >6 GPU-weeks6, did work and was even powerful enough to overfit single-character face datasets; I didn’t have enough GPU time to train on unrestricted face datasets, much less anime images in general, but merely getting this far was exciting. Because, a common sequence in DL/DRL (unlike many areas of AI) is that a problem seems intractable for long periods, until someone modifies a scalable architecture slightly, produces somewhat-credible (not necessarily human or even near-human) results, and then throws a ton of compute/data at it and, since the architecture scales, it rapidly exceeds SOTA and approaches human levels (and potentially exceeds human-level). Now I just needed a faster GAN architecture which I could train a much bigger model with on a much bigger dataset.

A history of GAN generation of anime faces: ‘do want’ to ‘oh no’ to ‘awesome’

StyleGAN was the final breakthrough in providing ProGAN-level capabilities but fast: by switching to a radically different architecture, it minimized the need for the slow progressive growing (perhaps eliminating it entirely7), and learned efficiently at multiple levels of resolution, with bonuses in providing much more control of the generated images with its “style transfer” metaphor.

Examples

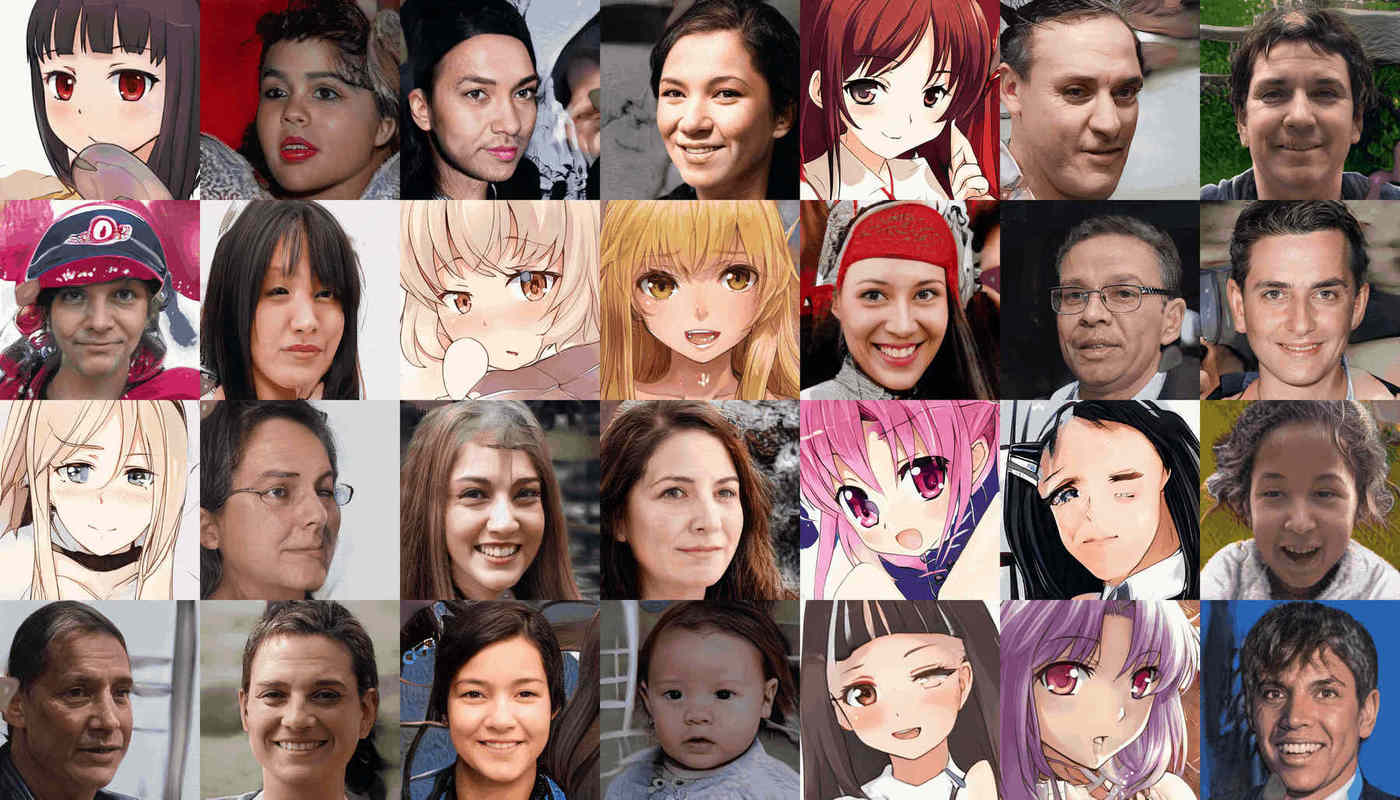

First, some demonstrations of what is possible with StyleGAN on anime faces:

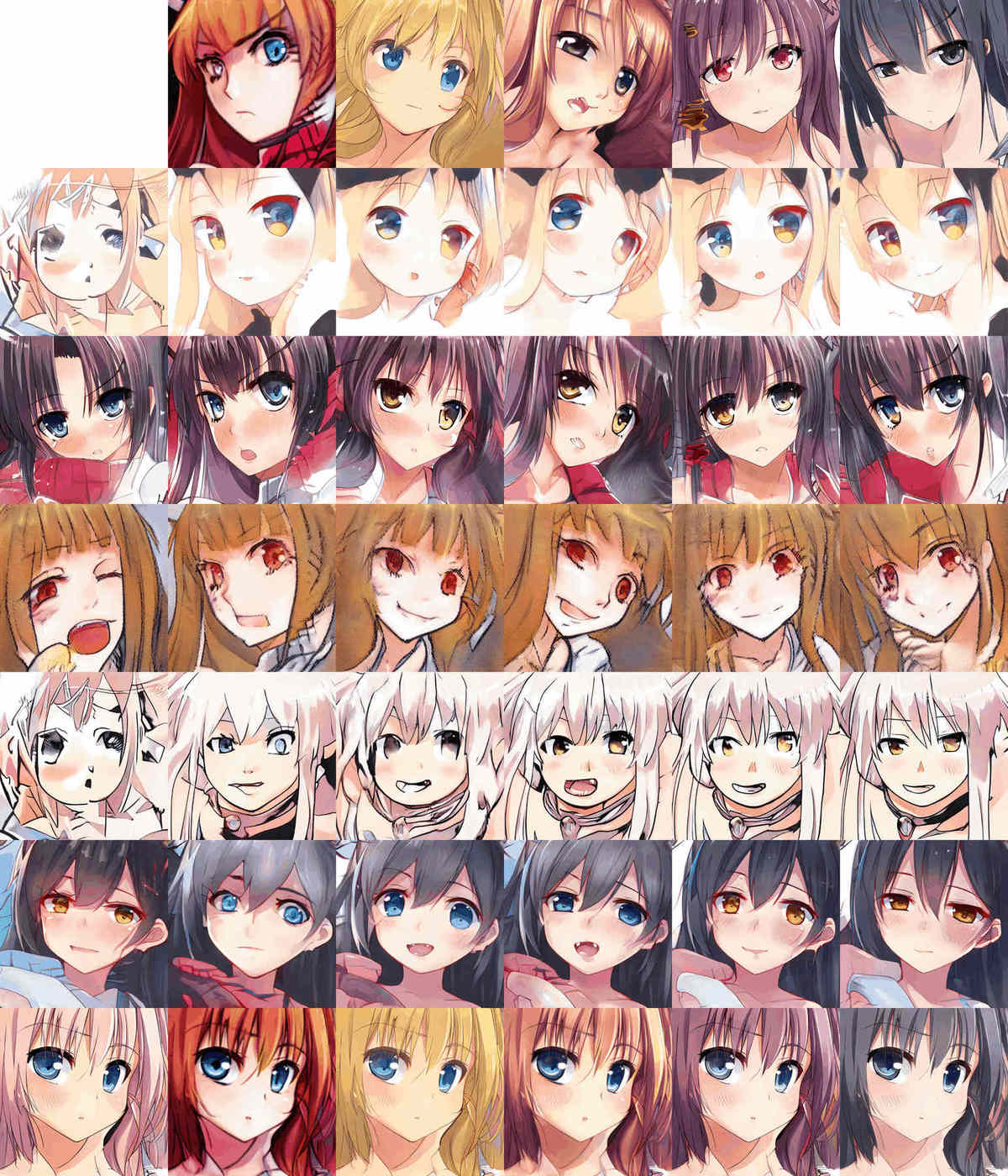

When it works: a hand-selected StyleGAN sample from my Asuka Souryuu Langley-finetuned StyleGAN

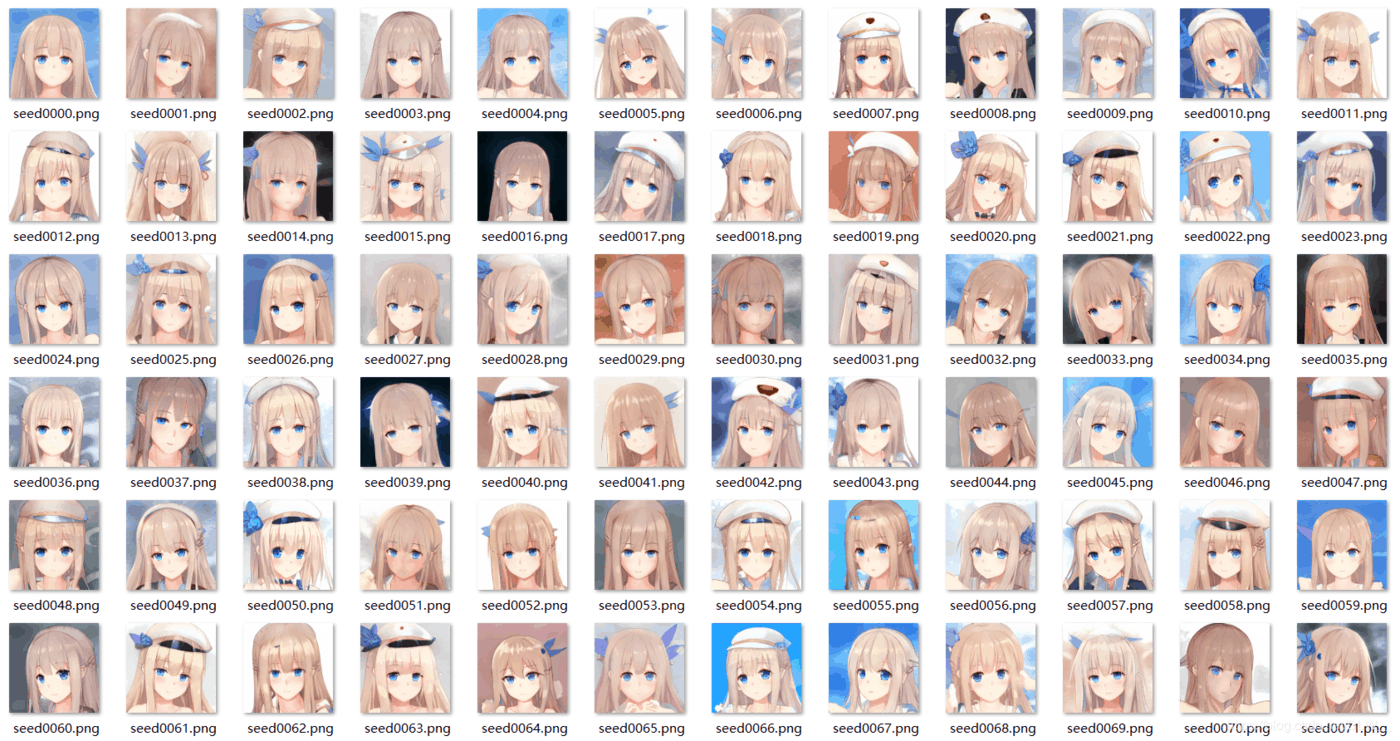

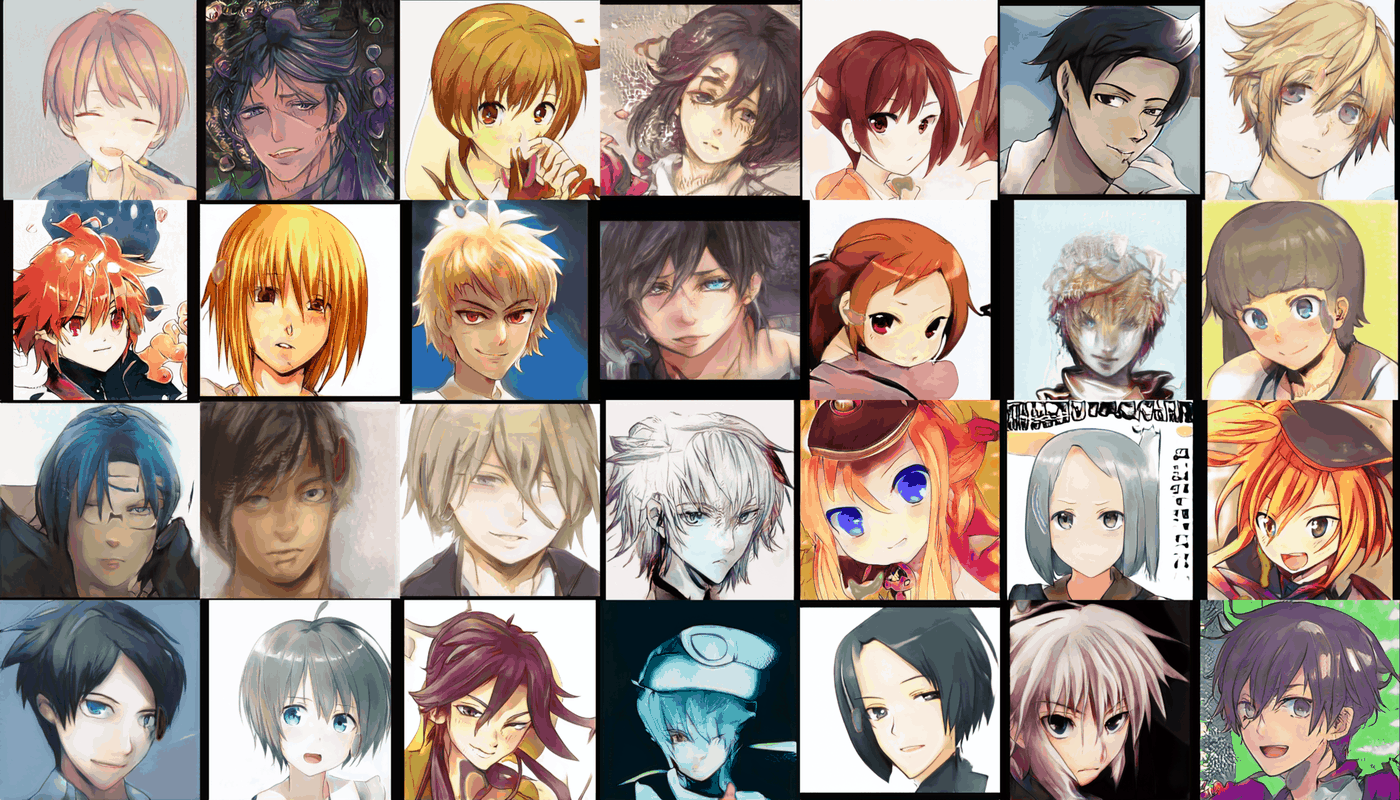

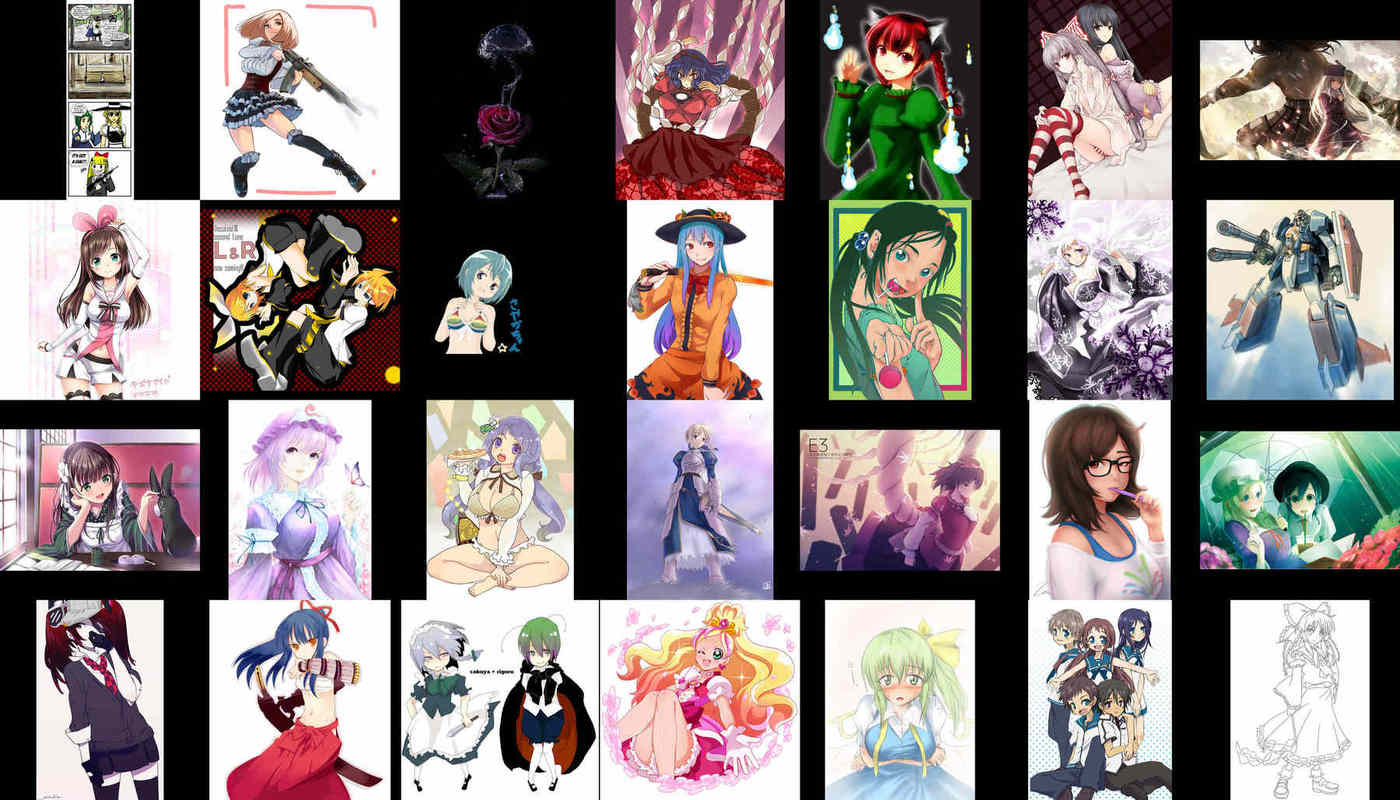

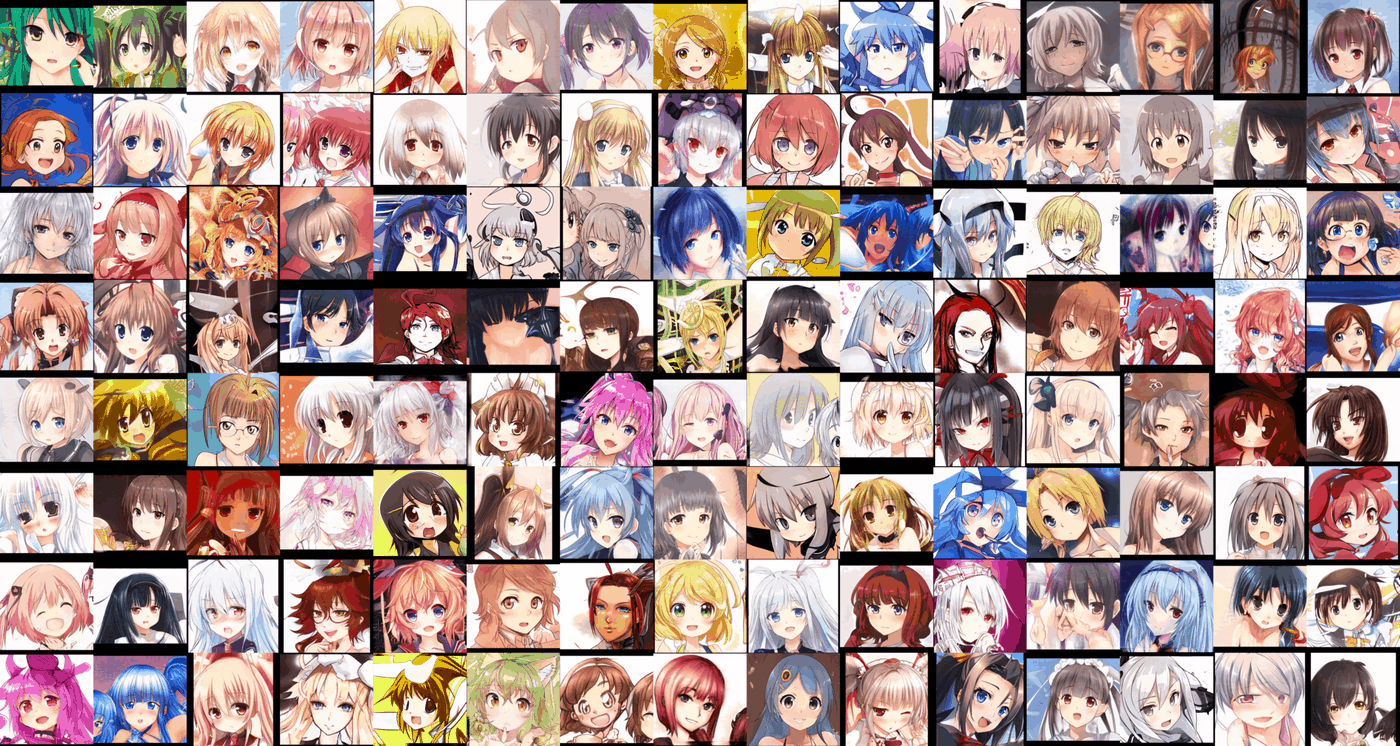

64 of the best TWDNE anime face samples selected from social media (click to zoom).

100 random sample images from the StyleGAN anime faces on TWDNE

Even a quick look at the MGM & StyleGAN samples demonstrates the latter to be superior in resolution, fine details, and overall appearance (although the MGM faces admittedly have fewer global mistakes). It is also superior to my 2018 ProGAN faces. Perhaps the most striking fact about these faces, which should be emphasized for those fortunate enough not to have spent as much time looking at awful GAN samples as I have, is not that the individual faces are good, but rather that the faces are so diverse, particularly when I look through face samples with Ψ ≥ 1—it is not just the hair/eye color or head orientation or fine details that differ, but the overall style ranges from CG to cartoon sketch, and even the ‘media’ differ, I could swear many of these are trying to imitate watercolors, charcoal sketching, or oil painting rather than digital drawings, and some come off as recognizably ’90s-anime-style vs ’00s-anime-style. (I could look through samples all day despite the global errors because so many are interesting, which is not something I could say of the MGM model whose novelty is quickly exhausted, and it appears that users of my TWDNE website feel similarly as the average length of each visit is 1m:55s.)

StyleGAN anime face interpolation videos were Elon Musk™-approved8, in happier times.

Background

Example of the StyleGAN upscaling image pyramid architecture: small → large (visualization by Shawn Presser)

StyleGAN was published in 2018 as “A Style-Based Generator Architecture for Generative Adversarial Networks”, et al 2018 (source code; demo video/algorithmic review video/results & discussions video; Colab notebook9; GenForce PyTorch reimplementation with model zoo/Keras; explainers: Skymind.ai/Lyrn.ai/Two Minute Papers video). StyleGAN takes the standard GAN architecture embodied by ProGAN (whose source code it reuses) and, like the similar GAN architecture et al 2018, draws inspiration from the field of “style transfer” (essentially invented by et al 2014), by changing the Generator (G) which creates the image by repeatedly upscaling its resolution to take, at each level of resolution from 8px → 16px → 32px → 64px → 128px etc. a random input or “style noise”, which is combined with AdaIN and is used to tell the Generator how to ‘style’ the image at that resolution by changing the hair or changing the skin texture and so on. ‘Style noise’ at a low resolution like 32px affects the image relatively globally, perhaps determining the hair length or color, while style noise at a higher level like 256px might affect how frizzy individual strands of hair are. In contrast, ProGAN and almost all other GANs inject noise into the G as well, but only at the beginning, which appears to work not nearly as well (perhaps because it is difficult to propagate that randomness ‘upwards’ along with the upscaled image itself to the later layers to enable them to make consistent choices?). To put it simply, by systematically providing a bit of randomness at each step in the process of generating the image, StyleGAN can ‘choose’ variations effectively.
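To make the per-resolution "styling" step concrete, here is a minimal sketch of adaptive instance normalization (AdaIN) in plain Python. It is an illustration under stated assumptions, not Nvidia's implementation: the function name `adain`, the flat-list representation of a feature map, and the `scale`/`bias` arguments are all hypothetical stand-ins for the per-channel style values that StyleGAN derives from its style input.

```python
import math

def adain(feature_map, scale, bias, eps=1e-8):
    """Adaptive instance normalization for a single image.

    feature_map: list of channels, each a flat list of activations.
    scale, bias: one style value per channel (hypothetical names; in
    StyleGAN these are produced from the style input, here they are
    simply passed in by hand).
    """
    styled = []
    for channel, s, b in zip(feature_map, scale, bias):
        mean = sum(channel) / len(channel)
        var = sum((x - mean) ** 2 for x in channel) / len(channel)
        std = math.sqrt(var + eps)
        # Normalize the channel to zero mean and unit variance, then
        # re-scale and shift it with the style's per-channel values.
        styled.append([s * (x - mean) / std + b for x in channel])
    return styled

# Toy 2-channel "feature map" with 4 activations per channel.
features = [[1.0, 2.0, 3.0, 4.0], [10.0, 10.0, 30.0, 30.0]]
styled = adain(features, scale=[2.0, 0.5], bias=[0.0, 1.0])
```

After the call, each channel's statistics are set by the style rather than by the incoming activations, which is the sense in which the style "tells" the Generator how to render that resolution.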

![Karras et al 2018, Figure 1: StyleGAN vs ProGAN architecture](/doc/ai/nn/gan/stylegan/2018-karras-stylegan-figure1-styleganarchitecture.png)

Karras et al 2018, StyleGAN vs ProGAN architecture: “Figure 1. While a traditional generator [29] feeds the latent code [z] though the input layer only, we first map the input to an intermediate latent space W, which then controls the generator through adaptive instance normalization (AdaIN) at each convolution layer. Gaussian noise is added after each convolution, before evaluating the nonlinearity. Here “A” stands for a learned affine transform, and “B” applies learned per-channel scaling factors to the noise input. The mapping network f consists of 8 layers and the synthesis network g consists of 18 layers—two for each resolution (4²–1024²). The output of the last layer is converted to RGB using a separate 1×1 convolution, similar to Karras et al. [29]. Our generator has a total of 26.2M trainable parameters, compared to 23.1M in the traditional generator.”

StyleGAN makes a number of additional improvements, but they appear to be less important: for example, it introduces a new “FFHQ” face/portrait dataset with 1024px images in order to show that StyleGAN convincingly improves on ProGAN in final image quality; switches to a loss which is more well-behaved than the usual logistic-style losses; and architecture-wise, it makes unusually heavy use of fully-connected (FC) layers to process an initial random input, no less than 8 layers of 512 neurons, where most GANs use 1 or 2 FC layers.10 More striking is that it omits techniques that other GANs have found critical for being able to train at 512px–1024px scale: it does not use newer losses like the relativistic loss, SAGAN-style self-attention layers in either G/D, VGAN-style variational Discriminator bottlenecks, conditioning on a tag or category embedding11, BigGAN-style large minibatches, different noise distributions12, advanced regularization like spectral normalization, etc.13 One possible reason for StyleGAN’s success is the way it combines outputs from the multiple layers into a single final image rather than repeatedly upscaling; when we visualize the output of each layer as an RGB image in anime StyleGANs, there is a striking division of labor between layers—some layers focus on monochrome outlines, while others fill in textured regions of color, and they sum up into an image with sharp lines and good color gradients while maintaining details like eyes.

Aside from the FCs and style noise & normalization, it is a vanilla architecture. (One oddity is the use of only 3×3 convolutions & so few layers in each upscaling block; a more conventional upscaling block than StyleGAN’s 3×3 → 3×3 would be something like BigGAN which does 1×1 → 3×3 → 3×3 → 1×1. It’s not clear if this is a good idea as it limits the spatial influence of each pixel by providing limited receptive fields14.) Thus, if one has some familiarity with training a ProGAN or another GAN, one can immediately work with StyleGAN with no trouble: the training dynamics are similar and the hyperparameters have their usual meaning, and the codebase is much the same as the original ProGAN (with the main exception being that config.py has been renamed train.py (or run_training.py in S2) and the original train.py, which stores the critical configuration parameters, has been moved to training/training_loop.py; there is still no support for command-line options and StyleGAN must be controlled by editing train.py/training_loop.py by hand).
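The receptive-field concern can be checked with simple arithmetic. The sketch below assumes stride-1 convolutions stacked at a single resolution and ignores the upscaling between blocks; `receptive_field` is a hypothetical helper written for this illustration, not part of the StyleGAN codebase.

```python
def receptive_field(kernel_sizes):
    """Receptive field (pixels per side) of stacked stride-1 convolutions.

    Each k×k layer lets an output pixel see k - 1 more input pixels
    per side than the layer before it.
    """
    field = 1
    for k in kernel_sizes:
        field += k - 1
    return field

# StyleGAN's 3×3 → 3×3 block, as described above.
stylegan_block = receptive_field([3, 3])

# Each additional 3×3 layer would widen the field by 2 pixels.
deeper_block = receptive_field([3, 3, 3])
```

Under these assumptions, each output pixel of a two-layer 3×3 block depends on a 5×5 patch of that block's input, so any wider spatial coordination has to come from the lower-resolution layers.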

Applications

Because of its speed and stability, when the source code was released on 2019-02-04 (a date that will long be noted in the ANNals of GANime), the Nvidia models & sample dumps were quickly perused & new StyleGANs trained on a wide variety of image types, yielding, in addition to the original faces/cars/cats of et al 2018:

“This Person Does Not Exist” (random samples from the 1024px FFHQ face StyleGAN)

quizzes: “Which Face is Real”/“Real or Fake?”

voting: “Judge Fake People”

cats: “These Cats Do Not Exist”, “This Cat Does Not Exist” (cat failure modes; interpolation/style-transfer)/corgis

hotel rooms (with char-RNN-generated text descriptions): “This Rental Does Not Exist”

kitchen/dining room/living room/bedroom (using transfer learning)

satellite imagery; Gothic cathedrals; Frank Gehry buildings; cityscapes; floor plans

“This Waifu Does Not Exist” (background/implementation)

-

large upgrade over TWDNE: random generation, exploration, image attribute editing, saving to a gallery, and crossbreeding portraits

-

interactive waifu generator (improved results, inspired by TWDNE & using Danbooru2018 as a dataset)

-

fonts: 1/2; Unicode characters; kanji

eyes (ProGAN)

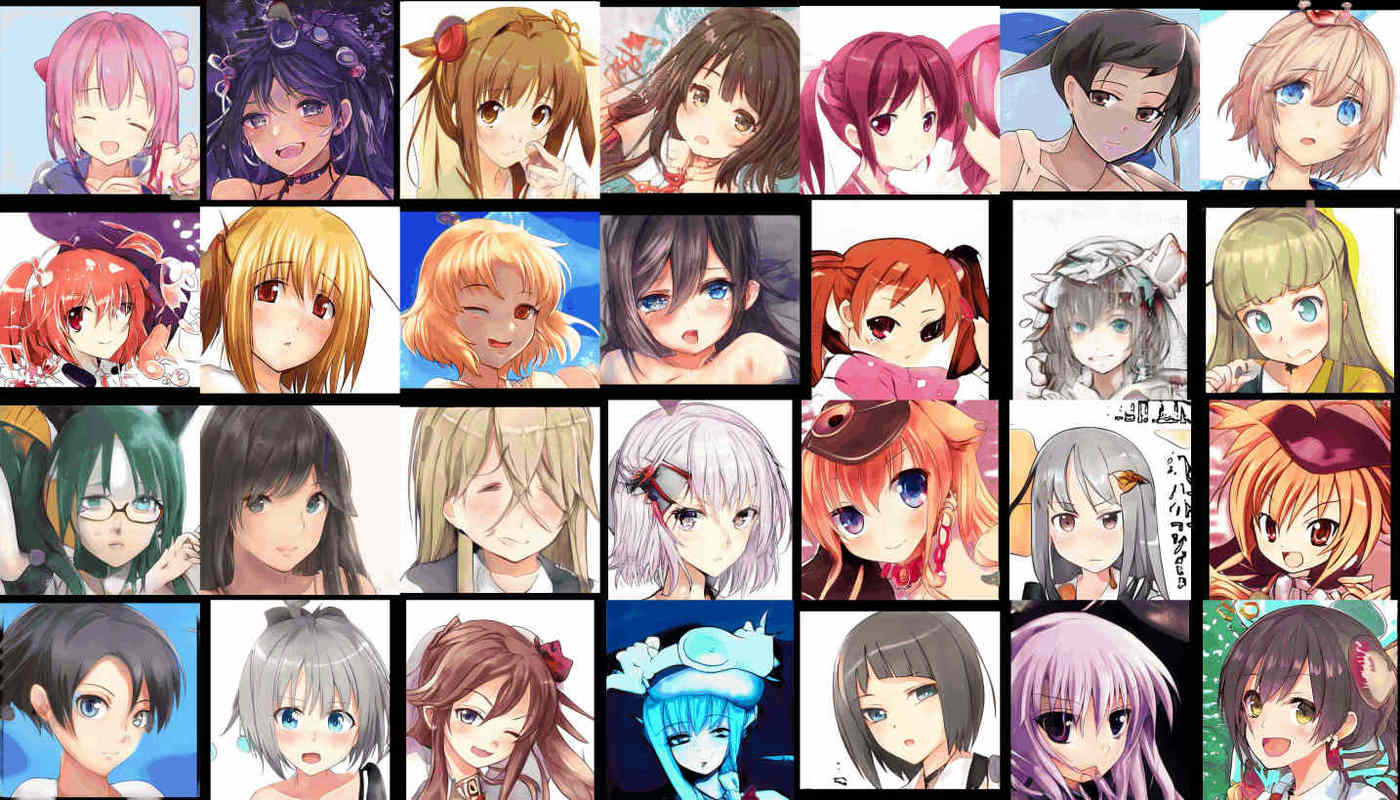

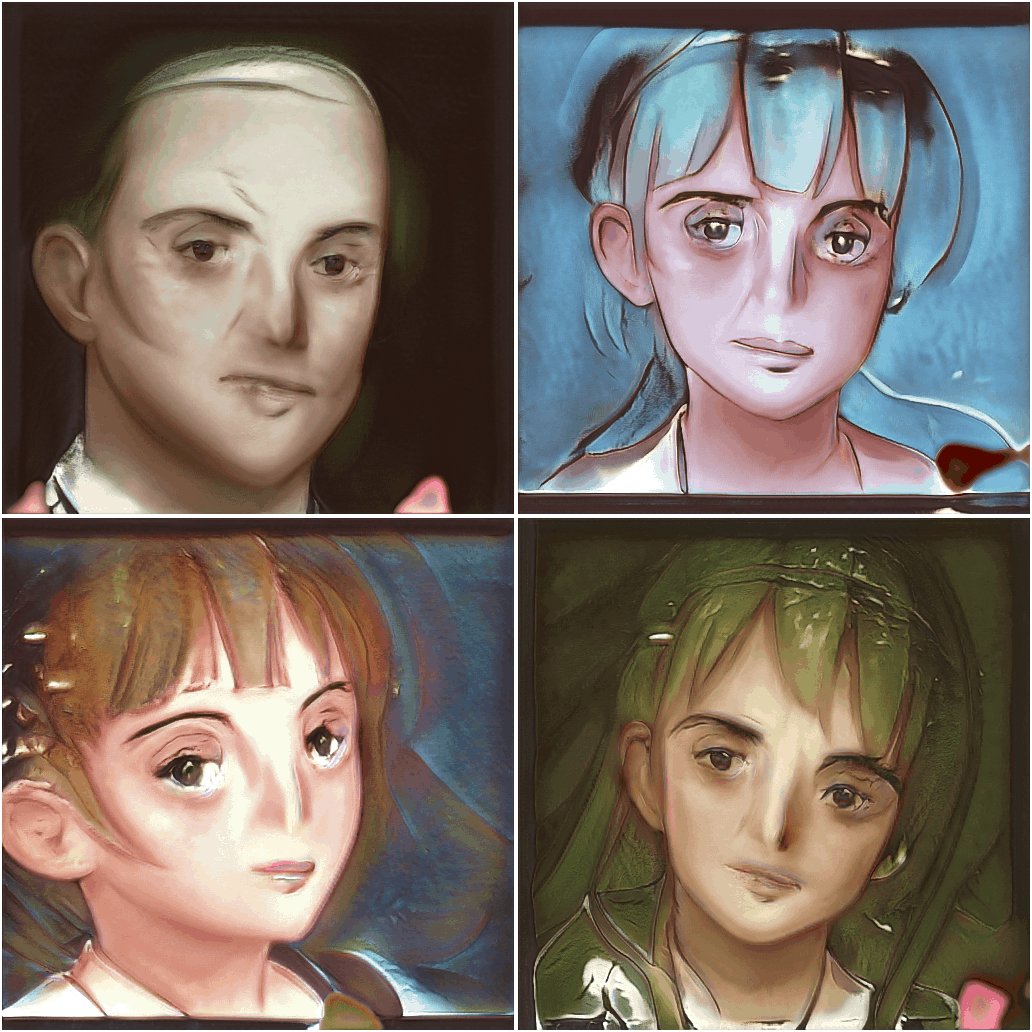

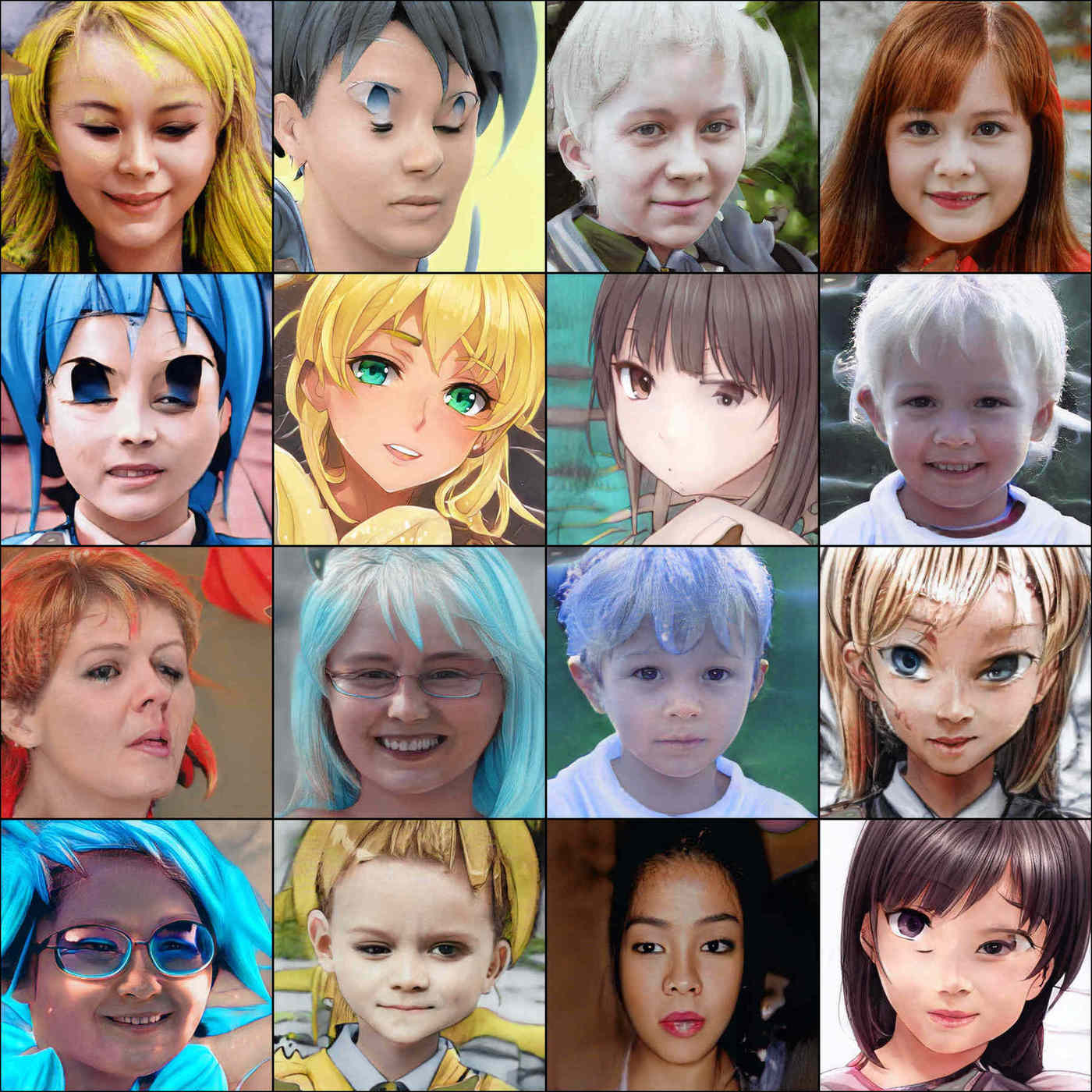

Imagequilt visualization of the wide range of visual subjects StyleGAN has been applied to

Why Don’t GANs Work?

Why does StyleGAN work so well on anime images while other GANs worked not at all or slowly at best?

The lesson I took from “Are GANs Created Equal? A Large-Scale Study”, et al 2017, is that CelebA/CIFAR-10 are too easy, as almost all evaluated GAN architectures were capable of occasionally achieving good FID if one simply did enough iterations & hyperparameter tuning.

Interestingly, I consistently observe in training all GANs on anime that clear lines & sharpness & cel-like smooth gradients appear only toward the end of training, after typically initially blurry textures have coalesced. This suggests an inherent bias of CNNs: color images work because they provide some degree of textures to start with, but lineart/monochrome stuff fails because the GAN optimization dynamics flail around. This is consistent with et al 2018’s findings—which uses style transfer to construct a data-augmented/transformed “Stylized-ImageNet”—showing that ImageNet CNNs are lazy and, because the tasks can be achieved to some degree with texture-only classification (as demonstrated by several of et al 2018’s authors via “BagNets”), focus on textures unless otherwise forced; and by ResNeXt & 2019, who find that although CNNs are perfectly capable of emphasizing shape over texture, lower-performing models tend to rely more heavily on texture and that many kinds of training (including BigBiGAN) will induce a texture focus, suggesting texture tends to be lower-hanging fruit. So while CNNs can learn sharp lines & shapes rather than textures, the typical GAN architecture & training algorithm do not make it easy. Since CIFAR-10/CelebA can be fairly described as being just as heavy on textures as ImageNet (which is not true of anime images), it is not surprising that GANs train easily on them starting with textures and gradually refining into good samples but then struggle on anime.

This raises a question of whether the StyleGAN architecture is necessary and whether many GANs might work, if only one had good style transfer for anime images and could, to defeat the texture bias, generate many versions of each anime image which kept the shape while changing the color palette? (Current style transfer methods like the AdaIN PyTorch implementation used by et al 2018, do not work well on anime images, ironically enough, because they are trained on photographic images, typically using the old VGG model.)

FAQ

…Its social accountability seems sort of like that of designers of military weapons: unculpable right up until they get a little too good at their job.

David Foster Wallace, “E unibus pluram: Television and U.S. Fiction”

To address some common questions people have after seeing generated samples:

Overfitting: “Aren’t StyleGAN (or BigGAN) just overfitting & memorizing data?”

Amusingly, this is not a question anyone really bothered to ask of earlier GAN architectures, which is a sign of progress. Overfitting is a better problem to have than underfitting, because overfitting means you can use a smaller model or more data or more aggressive regularization techniques, while underfitting means your approach just isn’t working.

In any case, while there is currently no way to conclusively prove that cutting-edge GANs are not 100% memorizing (because they should be memorizing to a considerable extent in order to learn image generation, and evaluating generative models is hard in general, and for GANs in particular, because they don’t provide standard metrics like likelihoods which could be used on held-out samples), there are several reasons to think that they are not just memorizing:16

Sample/Dataset Overlap: a standard check for overfitting is to compare generated images to their closest matches using nearest-neighbors lookup (where distance is defined by features like a CNN embedding); examples of this are StackGAN’s Figure 6 & BigGAN’s Figures 10–14 & 2021, where the photorealistic samples are nevertheless completely different from the most similar ImageNet datapoints. (It’s worth noting that Clearview AI’s facial recognition reportedly does not return Flickr matches for random FFHQ StyleGAN faces, suggesting the generated faces genuinely look like new faces rather than any of the original Flickr faces.)

One intriguing observation about GANs made by the BigGAN paper is that the criticisms of Generators memorizing datapoints may be precisely the opposite of reality: GANs may work primarily by the Discriminator (adaptively) overfitting to datapoints, thereby repelling the Generator away from real datapoints and forcing it to learn nearby possible images which collectively span the image distribution. (With enough data, this creates generalization because “neural nets are lazy” and only learn to generalize when easier strategies fail.)

Semantic Understanding: GANs appear to learn meaningful concepts like individual objects, as demonstrated by “latent space addition” or research tools like GANdissection/ et al 2018; regression tasks, depth maps, or image edits like object deletions/additions ( et al 2020) or segmenting objects like dogs from their backgrounds (2020/ et al 2020, et al 2021, et al 2021, et al 2021, et al 2022—as do diffusion models) are difficult to explain without some genuine understanding of images.

In the case of StyleGAN anime faces, there are encoders and controllable face generation now which demonstrate that the latent variables do map onto meaningful factors of variation & the model must have genuinely learned about creating images rather than merely memorizing real images or image patches. Similarly, when we use the “truncation trick”/ψ to sample from relatively extreme unlikely images and we look at the distortions, they show how generated images break down in semantically-relevant ways, which would not be the case if it was just plagiarism. (A particularly extreme example of the power of the learned StyleGAN primitives is et al 2019’s demonstration that Karras et al’s FFHQ faces StyleGAN can be used to generate fairly realistic images of cats/dogs/cars.)

Latent Space Smoothness: in general, interpolation in the latent space (z) shows smooth changes of images and logical transformations or variations of face features; if StyleGAN were merely memorizing individual datapoints, the interpolation would be expected to be low quality, yield many terrible faces, and exhibit ‘jumps’ in between points corresponding to real, memorized, datapoints. The StyleGAN anime face models do not exhibit this. (In contrast, the Holo ProGAN, which overfit badly, does show severe problems in its latent space interpolation videos.)

Which is not to say that GANs do not have issues: “mode dropping” seems to still be an issue for BigGAN despite the expensive large-minibatch training, which is overfitting to some degree, and StyleGAN presumably suffers from it too.

Transfer Learning: GANs have been used for semi-supervised learning (eg. generating plausible ‘labeled’ samples to train a classifier on), imitation learning like GAIL, and retraining on further datasets; if the G is merely memorizing, it is difficult to explain how any of this would work.
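The sample/dataset overlap check from the list above can be sketched in a few lines. This is only an illustration: the 3-dimensional vectors are toy stand-ins for real CNN embeddings, cosine distance is one assumed choice of metric, and the function names are hypothetical. The vectors are assumed to be non-zero.

```python
import math

def cosine_distance(a, b):
    """1 - cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def nearest_neighbors(sample_embedding, dataset_embeddings, k=3):
    """Return (distance, index) pairs for the k closest training embeddings."""
    scored = [(cosine_distance(sample_embedding, e), i)
              for i, e in enumerate(dataset_embeddings)]
    return sorted(scored)[:k]

# Toy "embeddings"; real ones would come from a pretrained CNN
# applied to the training images and to a generated sample.
dataset = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.7, 0.7, 0.0]]
generated = [0.9, 0.1, 0.0]
matches = nearest_neighbors(generated, dataset, k=2)
```

One would then view each generated sample next to its returned training images: near-duplicates suggest memorization, while matches that are merely similar in pose or palette suggest novel samples.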

Compute Requirements: “Doesn’t StyleGAN take too long to train?”

StyleGAN is remarkably fast-training for a GAN. With the anime faces, I got better results after 1–3 days of StyleGAN training than I’d gotten with >3 weeks of ProGAN training. The training times quoted by the StyleGAN repo may sound scary, but they are, in practice, a steep overestimate of what you actually need, for several reasons:

Lower Resolution: the largest figures are for 1024px images but you may not need them to be that large or even have a big dataset of 1024px images. For anime faces, 1024px-sized faces are relatively rare, and training at 512px & upscaling 2× to 1024px with waifu2x17 works fine & is much faster. Since upscaling is relatively simple & easy, another strategy is to change the progressive-growing schedule: instead of proceeding to the final resolution as fast as possible, adjust the schedule to stop at a more feasible resolution & spend the bulk of training time there, and then do just enough training at the final resolution to learn to upscale (eg. spend 10% of training growing to 512px, then 80% of training time at 512px, then 10% at 1024px).

Diminishing Returns: the largest gains in image quality are seen in the first few days or weeks of training, with the remaining training being not that useful as it focuses on improving small details (so just a few days may be more than adequate for your purposes, especially if you’re willing to select a little more aggressively from samples).

Transfer Learning from a related model can save days or weeks of training, as there is no need to train from scratch; with the anime face StyleGAN, one can train a character-specific StyleGAN in a few hours or days at most, and certainly does not need to spend multiple weeks training from scratch! (assuming that wouldn’t just cause overfitting) Similarly, if one wants to train on some 1024px face dataset, why start from scratch, taking ~1000 GPU-hours, when you can start from Nvidia’s FFHQ face model which is already fully trained, and can converge in a fraction of the from-scratch time? For 1024px, you could use a super-resolution GAN to upscale. Alternately, you could change the image progression budget to spend most of your time at 512px and then at the tail end try 1024px.

One-Time Costs: the upfront cost of a few hundred dollars of GPU-time (at inflated AWS prices) may seem steep, but should be kept in perspective. As with almost all NNs, training 1 StyleGAN model can be literally tens of millions of times more expensive than simply running the Generator to produce 1 image; but it also need be paid only once by only one person, and the total price need not even be paid by the same person, given transfer learning, but can be amortized across various datasets. Indeed, given how fast running the Generator is, the trained model doesn’t even need to be run on a GPU. (The rule of thumb is that a GPU is 20–30× faster than the same thing on CPU, with rare instances when overhead dominates of the CPU being as fast or faster, so since generating 1 image takes on the order of ~0.1s on GPU, a CPU can do it in ~3s, which is adequate for many purposes.)
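The two bits of arithmetic in the list above (the 10%/80%/10% resolution schedule and the CPU-vs-GPU sampling estimate) can be sketched as follows. The 100-hour budget is a made-up figure for illustration, and both function names are hypothetical; only the fractions, the ~0.1s GPU time, and the 20–30× rule of thumb come from the text.

```python
def split_training_budget(total_hours, fractions):
    """Split a training budget across named phases (fractions must sum to 1)."""
    if abs(sum(fractions.values()) - 1.0) > 1e-9:
        raise ValueError("phase fractions must sum to 1")
    return {phase: total_hours * share for phase, share in fractions.items()}

schedule = split_training_budget(
    total_hours=100,  # hypothetical budget, not a recommendation
    fractions={
        "grow to 512px": 0.10,
        "train at 512px": 0.80,
        "train at 1024px": 0.10,
    },
)

def cpu_seconds_per_image(gpu_seconds, slowdown):
    """Estimate CPU sampling time from GPU time and a rule-of-thumb slowdown."""
    return gpu_seconds * slowdown

# ~0.1s per image on GPU, with the CPU assumed 20-30x slower.
cpu_low = cpu_seconds_per_image(0.1, 20)
cpu_high = cpu_seconds_per_image(0.1, 30)
```

With these inputs the bulk of the budget lands on the 512px phase, and the CPU estimate comes out at roughly 2 to 3 seconds per image, in line with the ~3s figure quoted above.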

Copyright Infringement: “Who owns StyleGAN images?”

The Nvidia Source Code & Released Models for StyleGAN 1 are under a CC-BY-NC license, and you cannot edit them or produce “derivative works” such as retraining their FFHQ, car, or cat StyleGAN models. (StyleGAN 2 is under a new “Nvidia Source Code License-NC”, which appears to be effectively the same as the CC-BY-NC with the addition of a patent retaliation clause.)

If a model is trained from scratch, then that does not apply as the source code is simply another tool used to create the model and nothing about the CC-BY-NC license forces you to donate the copyright to Nvidia. (It would be odd if such a thing did happen—if your word processor claimed to transfer the copyrights of everything written in it to Microsoft!)

For those concerned by the CC-BY-NC license, a 512px FFHQ config-f StyleGAN 2 has been trained & released into the public domain by Aydao, and is available for download.

Models are generally considered “transformative works” and the copyright owners of whatever data the model was trained on have no copyright on the model. (The fact that the datasets or inputs are copyrighted is irrelevant, as training on them is universally considered fair use and transformative, similar to artists or search engines; see the further reading.) The model is copyrighted to whoever created it. Hence, Nvidia has copyright on the models it created but I have copyright on the models I trained (which I release under CC-0).

Samples are trickier. The widely stated legal interpretation is that, under standard copyright law, only human authors can earn a copyright and that machines, animals, inanimate objects or, most famously, monkeys cannot. The US Copyright Office states clearly that regardless of whether we regard a GAN as a machine or as something more intelligent like an animal, either way, it doesn’t count:

A work of authorship must possess “some minimal degree of creativity” to sustain a copyright claim. Feist, 499 U.S. at 358, 362 (citation omitted). “[T]he requisite level of creativity is extremely low.” Even a “slight amount” of creative expression will suffice. “The vast majority of works make the grade quite easily, as they possess some creative spark, ‘no matter how crude, humble or obvious it might be.’” Id. at 346 (citation omitted).

… To qualify as a work of “authorship” a work must be created by a human being. See Burrow-Giles Lithographic Co., 111 U.S. at 58. Works that do not satisfy this requirement are not copyrightable. The Office will not register works produced by nature, animals, or plants.

Examples:

A photograph taken by a monkey.

A mural painted by an elephant.

…the Office will not register works produced by a machine or mere mechanical process that operates randomly or automatically without any creative input or intervention from a human author.

A dump of random samples such as the Nvidia samples or TWDNE therefore has no copyright & by definition is in the public domain.

A new copyright can be created, however, if a human author is sufficiently ‘in the loop’, so to speak, as to exert a de minimis amount of creative effort, even if that ‘creative effort’ is simply selecting a single image out of a dump of thousands of them or twiddling knobs (eg. on Make Girls.Moe). Crypko, for example, take this position.

Further reading on computer-generated art copyrights:

“Copyrights In Computer-Generated Works: Whom, If Anyone, Do We Reward?”, 2001

“Ex Machina: Copyright Protection For Computer-Generated Works”, 2016

“Computer Generated Works and Copyright: Selfies, Traps, Robots, AI and Machine Learning”, 2017

“Who holds the Copyright in AI Created Art?”, Steve Schlackman (2018)

“The Machine as Author”, 2019

“Why Is AI Art Copyright So Complicated?”, Jason Bailey (2019)

“We’ve been warned about AI and music for over 50 years, but no one’s prepared: ‘This road is literally being paved as we’re walking on it’” (The Verge 2019)

Copyright

Per the copyright point above, all my generated videos and samples and models are released under the CC-0 (public domain equivalent) license. Source code listed may be derivative works of Nvidia’s CC-BY-NC-licensed StyleGAN code, and may be CC-BY-NC.

Training Requirements

Data

The road of excess leads to the palace of wisdom

…If the fool would persist in his folly he would become wise

…You never know what is enough unless you know what is more than enough.

…If others had not been foolish, we should be so.

William Blake, “Proverbs of Hell”, The Marriage of Heaven and Hell

The necessary size for a dataset depends on the complexity of the domain and whether transfer learning is being used. StyleGAN’s default settings yield a 1024px Generator with 26.2M parameters, which is a large model and can soak up potentially millions of images, so there is no such thing as too much.

For learning decent-quality anime faces from scratch, a minimum of 5,000 images appears to be necessary in practice; for learning a specific character when transfer-learning from the anime face StyleGAN, potentially as few as ~500 (especially with data augmentation) can give good results. For domains as complicated as “any cat photo”, such as Karras et al 2018’s cat StyleGAN, which is trained on the LSUN CATS category of ~1.8M cat photos18, that appears either to not be enough or StyleGAN was not trained to convergence; Karras et al 2018 note that “CATS continues to be a difficult dataset due to the high intrinsic variation in poses, zoom levels, and backgrounds.”19

Compute

To fit reasonable minibatch sizes, one will want GPUs with ≥11GB VRAM. At 512px, 11GB will only fit a minibatch of n = 4, and going below that means training will be even slower (and you may have to reduce learning rates to avoid unstable training). So, a 1080ti & up would be good. (Reportedly, AMD/OpenCL works for running StyleGAN models, and there is one report of successful training with “Radeon VII with tensorflow-rocm 1.13.2 and rocm 2.3.14”.)

The StyleGAN repo provides the following estimated training times for 1–8 GPU systems (which I convert to total GPU-hours & provide a worst-case AWS-based cost estimate):

Estimated StyleGAN wallclock training times for various resolutions & GPU-clusters, with total GPU-hours in brackets (source: StyleGAN repo)

| GPUs | 1024² | 512² | 256² | [March 2019 AWS Costs20] |
|------|-------|------|------|--------------------------|
| 1 | 41 days 4 hours [988 GPU-hours] | 24 days 21 hours [597 GPU-hours] | 14 days 22 hours [358 GPU-hours] | [$320, $194, $115 in 2019 dollars; inflation-adjusted: $408.25, $247.50, $146.71] |
| 2 | 21 days 22 hours [1,052] | 13 days 7 hours [638] | 9 days 5 hours [442] | [NA] |
| 4 | 11 days 8 hours [1,088] | 7 days 0 hours [672] | 4 days 21 hours [468] | [NA] |
| 8 | 6 days 14 hours [1,264] | 4 days 10 hours [848] | 3 days 8 hours [640] | [$2,730, $1,831, $1,382 in 2019 dollars; inflation-adjusted: $3,482.85, $2,335.93, $1,763.11] |
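The bracketed GPU-hour figures are simply wallclock time multiplied by the number of GPUs. A minimal sketch of that arithmetic for the 1024px column (the function name `gpu_hours` is illustrative, not part of the StyleGAN repo):

```python
def gpu_hours(days, hours, gpus):
    """Total GPU-hours = wallclock hours x number of GPUs used in parallel."""
    return (days * 24 + hours) * gpus

# (GPUs, days, hours) taken from the 1024px column of the table
runs_1024px = [(1, 41, 4), (2, 21, 22), (4, 11, 8), (8, 6, 14)]

for gpus, days, hours in runs_1024px:
    total = gpu_hours(days, hours, gpus)
    print(f"{gpus} GPU(s): {days}d {hours}h wallclock = {total:,} GPU-hours")
```

More GPUs finish sooner in wallclock terms but consume more total GPU-hours (988 on 1 GPU vs 1,264 on 8), so multi-GPU training trades cost for speed.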

AWS GPU instances are some of the most expensive ways to train a NN and provide an upper bound (compare Vast.ai); 512px is often an acceptable (or necessary) resolution; and in practice, the full quoted training time is not really necessary—with my anime face StyleGAN, the faces themselves were high quality within 48 GPU-hours, and what training it for ~1000 additional GPU-hours accomplished was primarily to improve details like the shoulders & backgrounds. (ProGAN/StyleGAN particularly struggle with backgrounds & edges of images because those are cut off, obscured, and highly-varied compared to the faces, whether anime or FFHQ. I hypothesize that the telltale blurry backgrounds are due to the impoverishment of the backgrounds/edges in cropped face photos, and they could be fixed by transfer-learning or pretraining on a more generic dataset like ImageNet, so it learns what the backgrounds even are in the first place; then in face training, it merely has to remember them & defocus a bit to generate correct blurry backgrounds.)

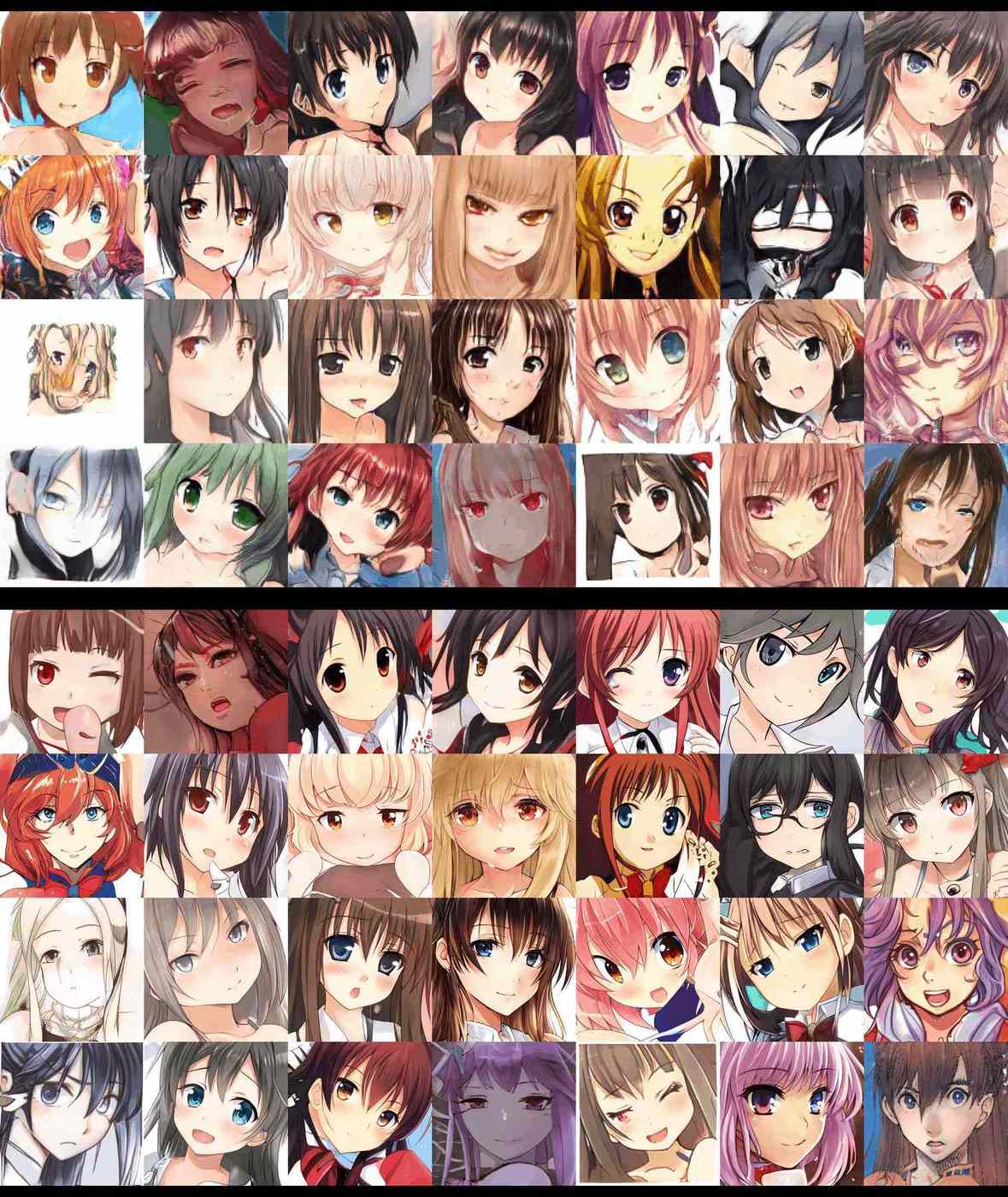

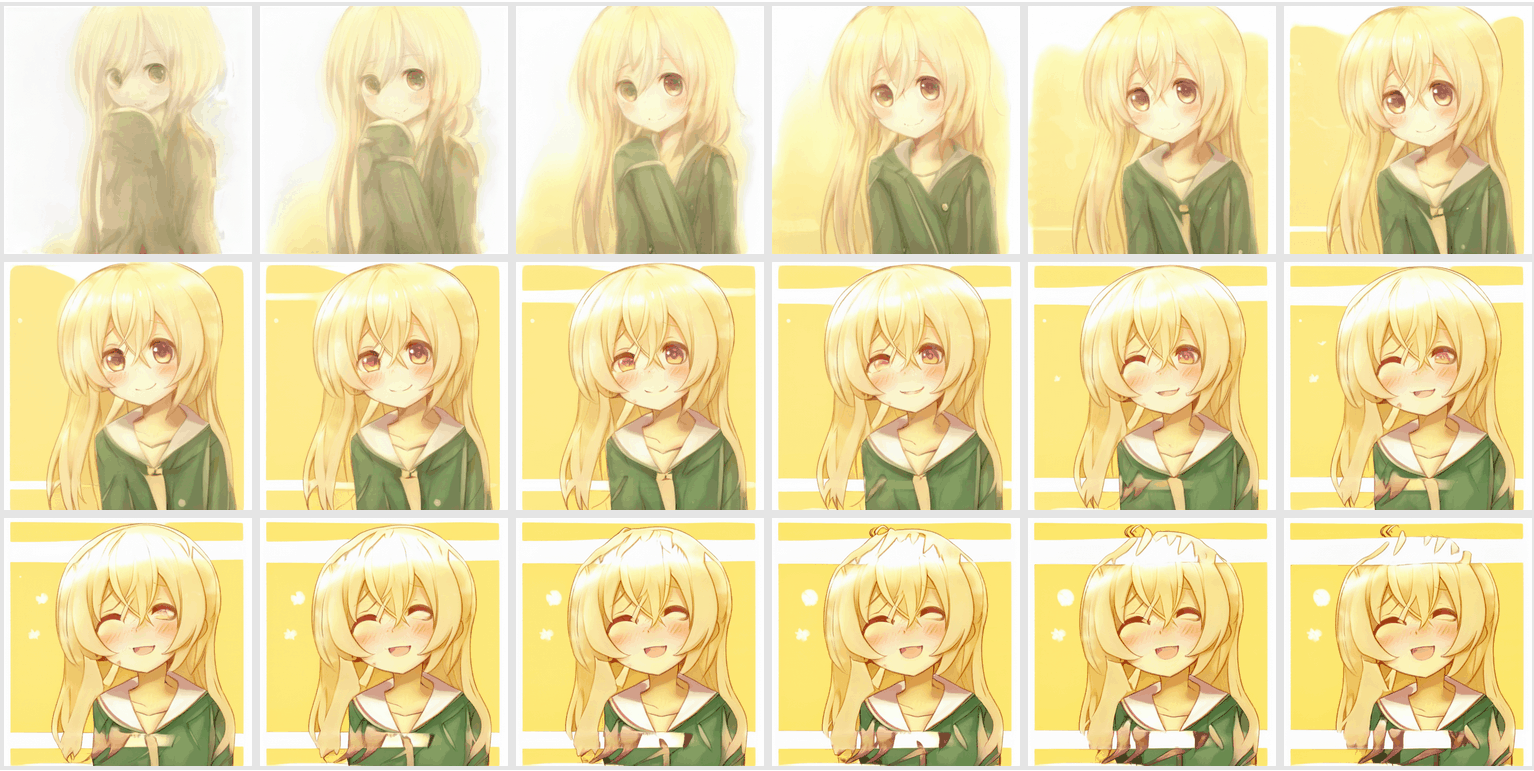

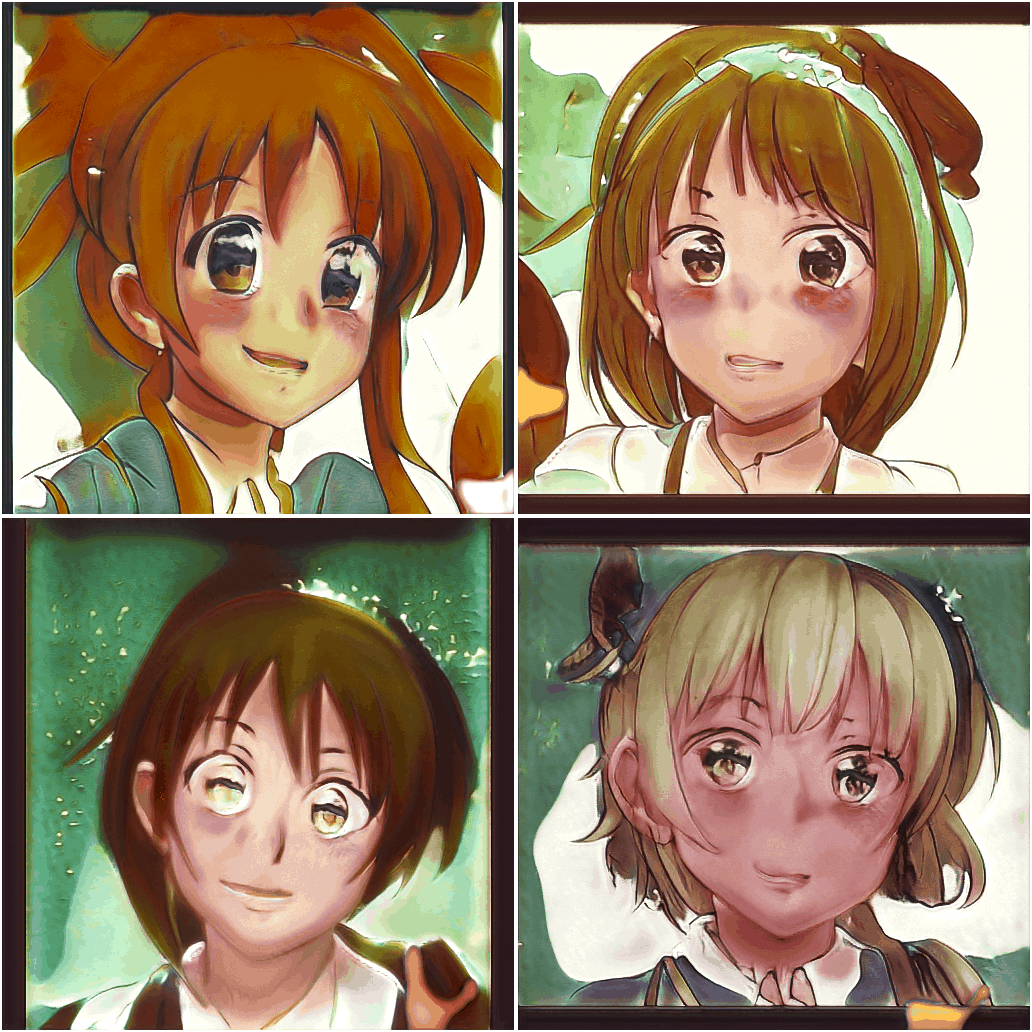

Training improvements: 256px StyleGAN anime faces after ~46 GPU-hours (top) vs 512px anime faces after 382 GPU-hours (bottom); see also the video montage of first 9k iterations

Training on a desktop is a bit painful because GPU use is not controlled by nice/ionice priorities and any training will badly degrade the usability/latency of your GUI, which is a frustration that builds up over month-long runtimes. If you have multiple GPUs, you can offload training to the spare GPUs; if you have only 1 GPU, you can try using lazy: a tool for running processes in idle time—it will cost you some throughput as it pauses training while you are actively using your computer, but it’ll be worth it.

Data Preparation

The most difficult part of running StyleGAN is preparing the dataset properly. StyleGAN does not, unlike most GAN implementations (particularly PyTorch ones), support reading a directory of files as input; it can only read its unique .tfrecord format which stores each image as raw arrays at every relevant resolution.21 Thus, input files must be perfectly uniform, (slowly) converted to the .tfrecord format by the special dataset_tool.py tool, and will take up ~19× more disk space.22
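To get a feel for why the converted dataset is so much larger, here is a rough back-of-the-envelope sketch. It assumes each image is stored as uncompressed 8-bit RGB at every power-of-two resolution from the full size down to 4px, and it ignores any per-record overhead; both the function name and the 4px floor are assumptions for illustration rather than a description of the exact file layout.

```python
def raw_bytes_per_image(resolution, channels=3, min_resolution=4):
    """Sum the uncompressed size of one image stored at every halved resolution."""
    total = 0
    res = resolution
    while res >= min_resolution:
        total += res * res * channels  # one byte per channel per pixel
        res //= 2
    return total

per_image = raw_bytes_per_image(512)
print("bytes per 512px image:", per_image)

# Hypothetical dataset size, purely for illustration:
n_images = 300_000
print("approx. GB for %d images: %.1f" % (n_images, per_image * n_images / 1e9))
```

Under these assumptions a 512px image costs about 1MB, so a compressed JPG of a few tens of kilobytes expands by an order of magnitude or more, which is in line with the multiplier quoted above.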

A StyleGAN dataset must consist of images all formatted exactly the same way.

Images must be precisely 512×512px or 1,024×1,024px etc. (any eg. 512×513px images will kill the entire run), they must all be the same colorspace (you cannot have sRGB and Grayscale JPGs—and I doubt other color spaces work at all), they must not be transparent, the filetype must be the same as the model you intend to (re)train (ie. you cannot retrain a PNG-trained model on a JPG dataset, StyleGAN will crash every time with inscrutable convolution/channel-related errors)23, and there must be no subtle errors like CRC checksum errors which image viewers or libraries like ImageMagick often ignore.
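Because many viewers silently ignore checksum errors, it can be worth checking files explicitly. The sketch below is one possible check for PNG files only, using nothing but the standard library: it walks the PNG chunk structure, verifies every chunk's CRC, and reports the dimensions and color type from the IHDR header. The helper names (`check_png`, `is_clean_rgb_png`) are hypothetical, and it does not try to decode the pixel data itself.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

def check_png(data):
    """Return (width, height, color_type) if all chunk CRCs are valid, else raise ValueError."""
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    pos, header, seen_iend = 8, None, False
    while pos + 8 <= len(data):
        # Each chunk is: 4-byte length, 4-byte type, body, 4-byte CRC over type+body
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        crc_bytes = data[pos + 8 + length:pos + 12 + length]
        if len(body) != length or len(crc_bytes) != 4:
            raise ValueError("truncated chunk %r" % ctype)
        if zlib.crc32(ctype + body) & 0xFFFFFFFF != struct.unpack(">I", crc_bytes)[0]:
            raise ValueError("CRC mismatch in chunk %r" % ctype)
        if ctype == b"IHDR":
            width, height, _depth, color_type = struct.unpack(">IIBB", body[:10])
            header = (width, height, color_type)
        if ctype == b"IEND":
            seen_iend = True
            break
        pos += 12 + length
    if header is None or not seen_iend:
        raise ValueError("missing IHDR or IEND chunk")
    return header

def is_clean_rgb_png(data, size=512):
    """True only for an intact size x size PNG with color type 2 (RGB, no alpha)."""
    try:
        return check_png(data) == (size, size, 2)
    except ValueError:
        return False
```

A file would be checked with something like `is_clean_rgb_png(open(path, "rb").read())`; anything returning False (wrong size, grayscale, alpha channel, or a corrupted chunk) is a candidate for deletion before conversion.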

Faces Preparation

My workflow:

1. Download raw images from Danbooru2021 if necessary
2. Extract from the JSON Danbooru2018 metadata all the IDs of a subset of images if a specific Danbooru tag (such as a single character) is desired, using jq and shell scripting
3. Crop square anime faces from raw images using Nagadomi’s lbpcascade_animeface (regular face-detection methods do not work on anime images)
4. Delete empty files, monochrome or grayscale files, & exact-duplicate files
5. Convert to JPG
6. Upscale below the target resolution (512px) images with waifu2x
7. Convert all images to exactly 512×512 resolution sRGB JPG images
8. If feasible, improve data quality by checking for low-quality images by hand, removing near-duplicates images found by findimagedupes, and filtering with a pretrained GAN’s Discriminator
9. Convert to StyleGAN format using dataset_tool.py

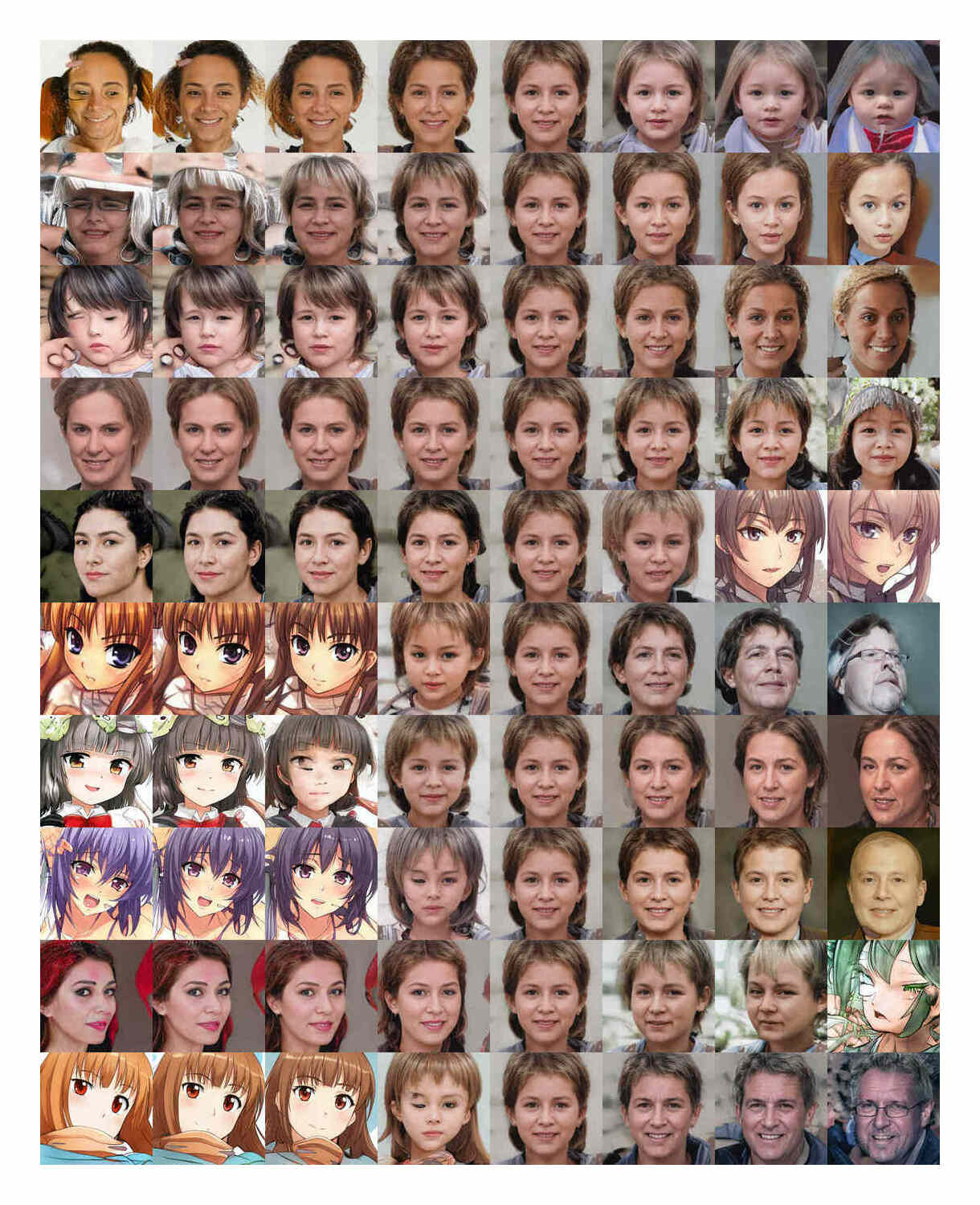

The goal is to turn this:

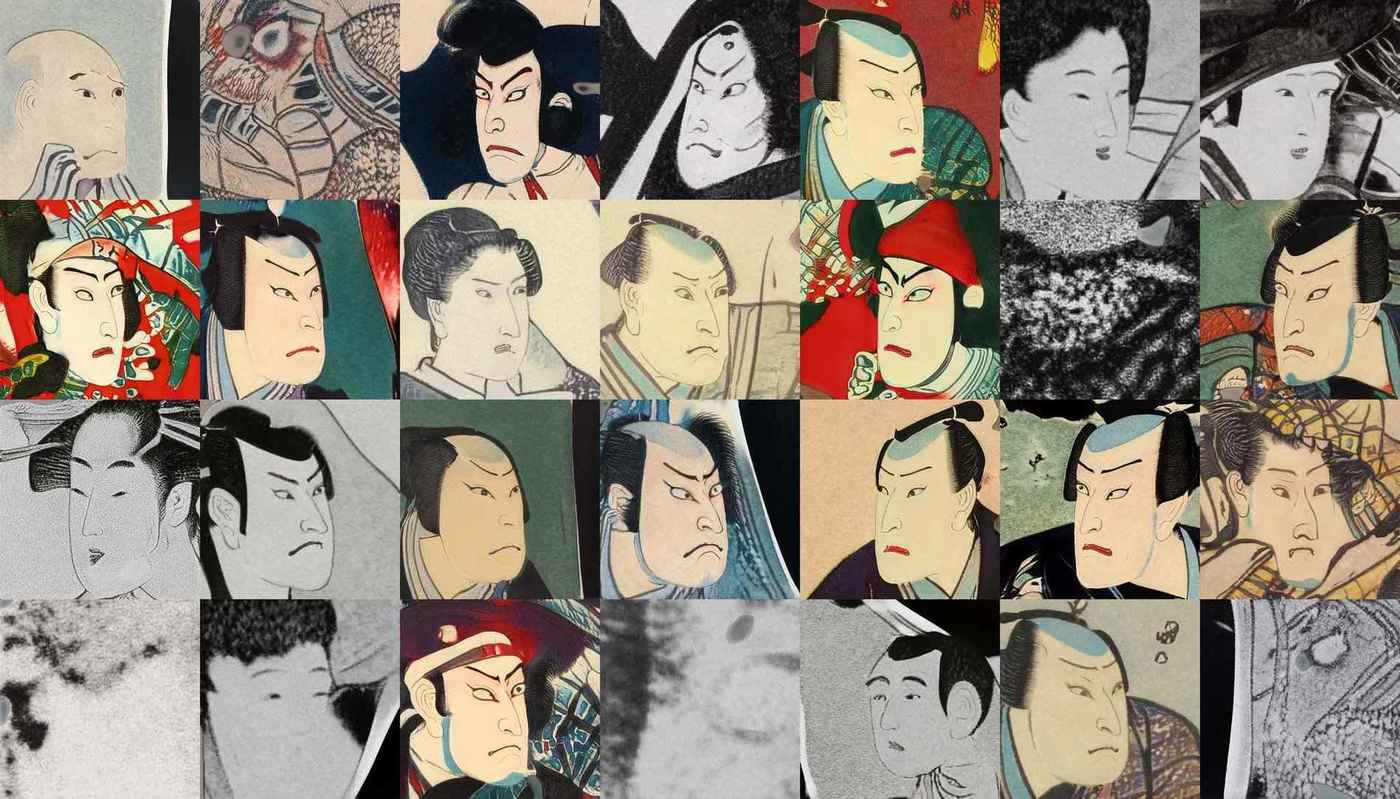

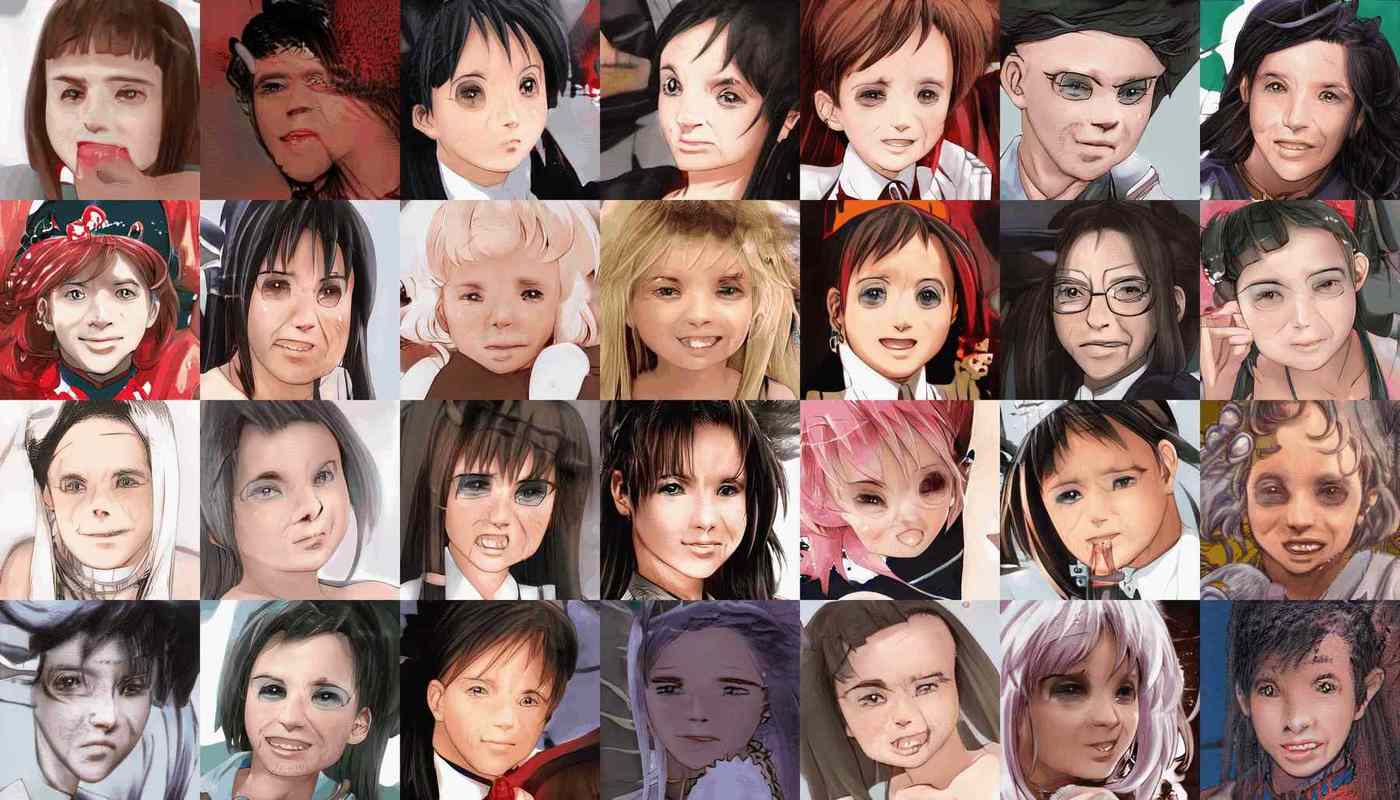

100 random real sample images from the 512px SFW subset of Danbooru in a 10×10 grid.

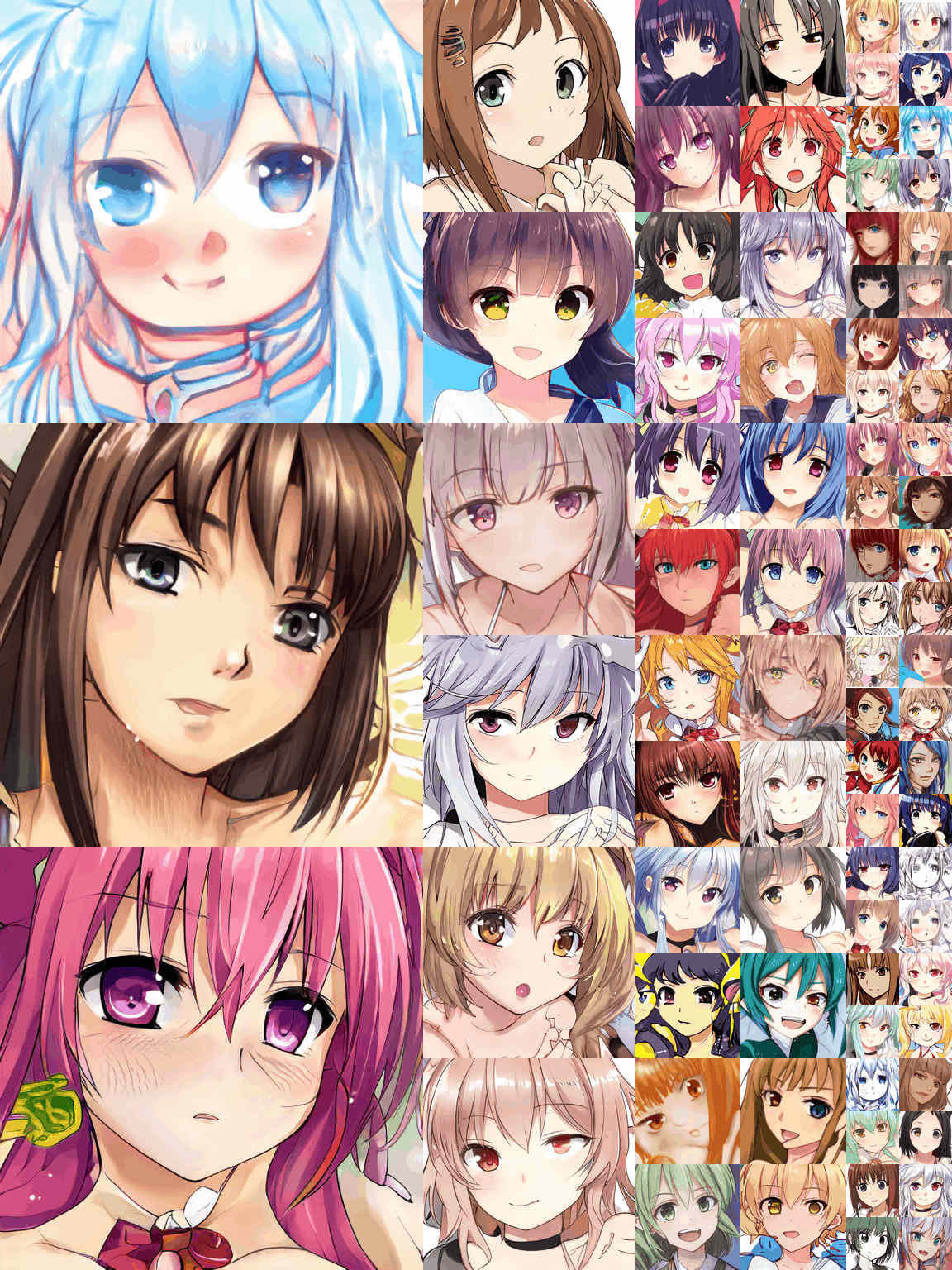

into this:

36 random real sample images from the cropped Danbooru faces in a 6×6 grid.

Below I use shell scripting to prepare the dataset. A possible alternative is danbooru-utility, which aims to help “explore the dataset, filter by tags, rating, and score, detect faces, and resize the images”.

Cropping

The Danbooru download can be done via rsync, which provides a JSON metadata tarball which unpacks into metadata/2* & a folder structure of {original,512px}/{0-999}/$ID.{png,jpg,...}.
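The folder layout can be computed directly from an image ID. A small sketch, assuming (as the example paths later in this section such as original/0196/933196.jpg suggest) that the bucket is the ID modulo 1000, zero-padded to 4 digits; the function names are illustrative only:

```python
def bucket_for(image_id):
    """Bucket directory name: the ID modulo 1000, zero-padded to 4 digits."""
    return "%04d" % (int(image_id) % 1000)

def original_glob(image_id, root="."):
    """Glob pattern for an original image; the extension varies, so it is left as '*'."""
    return "%s/original/%s/%s.*" % (root, bucket_for(image_id), image_id)

for example_id in (933196, 1712669, 3093997):
    print(original_glob(example_id))
```

Returning a glob pattern rather than a concrete path mirrors the shell approach used below, since the file extension is not known from the ID alone.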

For training on SFW whole images, the 512px/ version of Danbooru2018 would work, but it is not a great idea for faces because by scaling images down to 512px, a lot of face detail has been lost, and getting high-quality faces is a challenge. The SFW IDs can be extracted from the filenames in 512px/ directly or from the metadata by extracting the id & rating fields (and saving to a file):

find ./512px/ -type f | sed -e 's/.*\/\([[:digit:]]*\)\.jpg/\1/'
# 967769
# 1853769
# 2729769
# 704769
# 1799769
# ...
tar xf metadata.json.tar.xz
cat metadata/20180000000000* | jq '[.id, .rating]' -c | grep -F '"s"' | cut -d '"' -f 2 # "
# ...

After installing and testing Nagadomi’s lbpcascade_animeface to make sure it & OpenCV work, one can use a simple script which crops the face(s) from a single input image. The accuracy on Danbooru images is fairly good, perhaps 90% excellent faces, 5% low-quality faces (genuine but either awful art or tiny little faces on the order of 64px which are useless), and 5% outright errors: non-faces like armpits or elbows (oddly enough). It can be improved by making the script more restrictive, such as requiring 250×250px regions, which eliminates most of the low-quality faces & mistakes. (There is an alternative, more-difficult-to-run library by Nagadomi which offers a face-cropping script, animeface-2009’s face_collector.rb, which Nagadomi says is better at cropping faces, but I was not impressed when I tried it out.) crop.py:

import cv2
import sys
import os.path

def detect(cascade_file, filename, outputname):
    if not os.path.isfile(cascade_file):
        raise RuntimeError("%s: not found" % cascade_file)

    cascade = cv2.CascadeClassifier(cascade_file)
    image = cv2.imread(filename)
    if image is None:
        # cv2.imread returns None for unreadable/corrupt files; skip instead of crashing
        sys.stderr.write("%s: could not be read as an image\n" % filename)
        return
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    gray = cv2.equalizeHist(gray)

    ## NOTE: Suggested modification: increase minSize to '(250,250)' px,
    ## increasing proportion of high-quality faces & reducing
    ## false positives. Faces which are only 50×50px are useless
    ## and often not faces at all.
    ## For my StyleGANs, I use 250 or 300px boxes
    faces = cascade.detectMultiScale(gray,
                                     # detector options
                                     scaleFactor = 1.1,
                                     minNeighbors = 5,
                                     minSize = (50, 50))
    # write each detected face to its own numbered PNG
    i=0
    for (x, y, w, h) in faces:
        cropped = image[y: y + h, x: x + w]
        cv2.imwrite(outputname+str(i)+".png", cropped)
        i=i+1

if len(sys.argv) != 4:
    sys.stderr.write("usage: crop.py <animeface.xml file> <input> <output prefix>\n")
    sys.exit(-1)

detect(sys.argv[1], sys.argv[2], sys.argv[3])

The IDs can be combined with the provided lbpcascade_animeface script using xargs; however, this will be far too slow, and it would be better to exploit parallelism with xargs --max-args=1 --max-procs=16 or parallel. It’s also worth noting that lbpcascade_animeface seems to use up GPU VRAM even though GPU use offers no apparent speedup (a slowdown if anything, given limited VRAM), so I find it helps to explicitly disable GPU use by setting CUDA_VISIBLE_DEVICES="". (For this step, it’s quite helpful to have a many-core system like a Threadripper.)

Combining everything, parallel face-cropping of an entire Danbooru2018 subset can be done like this:

cropFaces() {
    BUCKET=$(printf "%04d" $(( $@ % 1000 )) )
    ID="$@"
    CUDA_VISIBLE_DEVICES="" nice python ~/src/lbpcascade_animeface/examples/crop.py \
        ~/src/lbpcascade_animeface/lbpcascade_animeface.xml \
        ./original/$BUCKET/$ID.* "./faces/$ID"
}
export -f cropFaces

mkdir ./faces/
cat sfw-ids.txt | parallel --progress cropFaces

# NOTE: because of the possibility of multiple crops from an image, the script appends a N counter;
# remove that to get back the original ID & filepath: eg
#
## original/0196/933196.jpg → portrait/9331961.jpg
## original/0669/1712669.png → portrait/17126690.jpg
## original/0997/3093997.jpg → portrait/30939970.jpg

Nvidia StyleGAN, by default and like most image-related tools, expects square images like 512×512px, but there is nothing inherent to neural nets or convolutions that requires square inputs or outputs, and rectangular convolutions are possible. In the case of faces, they tend to be more rectangular than square, and we’d prefer to use a rectangular convolution if possible to focus the image on the relevant dimension rather than either pay the severe performance penalty of increasing total dimensions to 1,024×1,024px or stick with 512×512px & waste image outputs on emitting black bars/backgrounds. A properly-sized rectangular convolution can offer a nice speedup (eg. Fast.ai’s training ImageNet in 18m for $40 in 2018 dollars, or $52.56 inflation-adjusted, using them among other tricks). Nolan Kent’s StyleGAN re-implementation (released October 2019) does support rectangular convolutions, and as he demonstrates in his blog post, it works nicely.

Cleaning & Upscaling

Miscellaneous cleanups can be done:

## Delete failed/empty files
find faces/ -size 0 -type f -delete

## Delete 'too small' files, which are indicative of low quality:
find faces/ -size -40k -type f -delete

## Delete exact duplicates:
fdupes --delete --omitfirst --noprompt faces/

## Delete monochrome or minimally-colored images:
### the heuristic of <257 unique colors is imperfect but better than anything else I tried
deleteBW() { if [[ `identify -format "%k" "$@"` -lt 257 ]];
             then rm "$@"; fi; }
export -f deleteBW
find faces -type f | parallel --progress deleteBW

I remove black-white or grayscale images from all my GAN experiments because in my earliest experiments, their inclusion appeared to increase instability: mixed datasets were extremely unstable, monochrome datasets failed to learn at all, but color-only runs made some progress. It is likely that StyleGAN is now powerful enough to be able to learn on mixed datasets (and some later experiments by other people suggest that StyleGAN can handle both monochrome & color anime-style faces without a problem), but I have not risked a full month-long run to investigate, and so I continue doing color-only.
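The unique-color heuristic used for deleting monochrome images is easy to state in code. The sketch below assumes the pixels have already been decoded into a sequence of (R, G, B) tuples by some image library (not shown), and the function names are hypothetical. One plausible reading of the 257 threshold is that an 8-bit grayscale image can contain at most 256 distinct colors, so anything below 257 is either grayscale or nearly so.

```python
def count_unique_colors(pixels):
    """Number of distinct (R, G, B) values, analogous to ImageMagick's %k."""
    return len(set(pixels))

def looks_monochrome(pixels, threshold=257):
    """Flag an image as monochrome/minimally-colored if it has fewer than `threshold` colors."""
    return count_unique_colors(pixels) < threshold

# A full 8-bit gray ramp has exactly 256 distinct colors and is flagged:
gray_ramp = [(v, v, v) for v in range(256)]
print(looks_monochrome(gray_ramp))

# A small synthetic color image with 400 distinct colors is kept:
colorful = [(r, g, 0) for r in range(20) for g in range(20)]
print(looks_monochrome(colorful))
```

As the shell comment notes, the heuristic is imperfect: a heavily JPG-compressed grayscale image can pick up slightly tinted pixels and slip past it, while flat-colored art with very few colors may be deleted.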

Discriminator Ranking: Using a Trained Discriminator to Rank and Clean Data

The Discriminator of a GAN is trained to detect outliers or bad datapoints. So it can be used for cleaning the original dataset of aberrant samples. This works reasonably well and I obtained BigGAN/StyleGAN quality improvements by manually deleting the worst samples (typically badly-cropped or low-quality faces), but has peculiar behavior which indicates that the Discriminator is not learning anything equivalent to a “quality” score but may be doing some form of memorization of specific real datapoints. What does this mean for how GANs work?

What is a D doing? I find that the highest ranked images often contain many anomalies or low-quality images which need to be deleted. Why? The BigGAN paper notes a well-trained D which achieves 98% real vs fake classification performance on the ImageNet training dataset falls to 50–55% accuracy when run on the validation dataset, suggesting the D’s role is about memorizing the training data rather than some measure of ‘realism’.

A good trick with GANs is, after training to reasonable levels of quality, reusing the Discriminator to rank the real datapoints; images the trained D assigns the lowest probability/score of being real are often the worst-quality ones and going through the bottom decile (or deleting them entirely) should remove many anomalies and may improve the GAN. The GAN is then trained on the new cleaned dataset, making this a kind of “active learning”.

Since rating images is what the D already does, no new algorithms or training methods are necessary, and almost no code is necessary: run the D on the whole dataset to rank each image (faster than it seems since the G & backpropagation are unnecessary, even a large dataset can be ranked in a wallclock hour or two), then one can review manually the bottom & top X%, or perhaps just delete the bottom X% sight unseen if enough data is available.

Perhaps because the D ranking is not necessarily a ‘quality’ score but simply a sort of confidence rating that an image is from the real dataset; if the real images contain certain easily-detectable images which the G can’t replicate, then the D might memorize or learn them quickly. For example, in face crops, whole figure crops are common mistaken crops, making up a tiny percentage of images; how could a face-only G learn to generate whole realistic bodies without the intermediate steps being instantly detected & defeated as errors by D, while D is easily able to detect realistic bodies as definitely real? This would explain the polarized rankings. And given the close connections between GANs & DRL, I have to wonder if there is more memorization going on than suspected in things like “Deep reinforcement learning from human preferences”? Incidentally, this may also explain the problems with using Discriminators for visualization (image generation via gradient ascent) & semi-supervised representation learning: if the D is memorizing datapoints to force the G to generalize, then its internal representations would be expected to be useless—to memorize, it is doing things like learning datapoint-specific details or learning non-robust features, which would lead to visualizations which just look like high-frequency noise to a human viewer or to ungeneralizable ‘embeddings’. (For embeddings, one would instead want to extract knowledge from the G, perhaps by encoding an image into z and using the z as the representation because it has so many disentangled semantics encoded into it.)

An alternative perspective is offered by a crop of 2020 papers ( et al 2020b; et al 2020; et al 2020; et al 2020c) examining data augmentation for GANs, which find that for it to be useful, it must be done during training and one must augment all images.24 et al 2020c & et al 2020 observe that, with regular GAN training, there is a striking steady decline of D performance on heldout data, and increase on training data, throughout the course of training, confirming the BigGAN observation but also showing it is a dynamic phenomenon, and probably a bad one. Adding in correct data augmentation reduces this overfitting, and markedly improves sample-efficiency & final quality. This suggests that the D does indeed memorize, but that this is not a good thing. Karras et al 2020 describes what happens as

Convergence is now achieved [with ADA/data augmentation] regardless of the training set size and overfitting no longer occurs. Without augmentations, the gradients the generator receives from the discriminator become very simplistic over time—the discriminator starts to pay attention to only a handful of features, and the generator is free to create otherwise nonsensical images. With ADA, the gradient field stays much more detailed which prevents such deterioration.

In other words, just as the G can ‘mode collapse’ by focusing on generating images with only a few features, the D can also ‘feature collapse’ by focusing on a few features which happen to correctly split the training data’s reals from fakes, such as by memorizing them outright. This technically works, but not well. This also explains why BigGAN training stabilized when training on JFT-300M: divergence/collapse usually starts with D winning; if D wins because it memorizes, then a sufficiently large dataset should make memorization infeasible; and JFT-300M turns out to be sufficiently large. (This would predict that if Brock et al had checked the JFT-300M BigGAN D’s classification performance on a held-out JFT-300M, rather than just on their ImageNet BigGAN, then they would have found that it classified reals vs fake well above chance.)

Perhaps the way GANs work is that the G is constantly trying to generate samples which are near real datapoints, but then the D ‘repels’ samples too near a real datapoint, so G orbits the real datapoints; the D successfully memorizing distinguishing features of each real datapoint breaks the dynamics because either it can always detect G’s samples, or G can get arbitrarily close to the real datapoints & simply memorize them.

et al 2018 suggests that Discriminators may need to grow large for GANs to be able to learn many datapoints; to me this suggests that there may be useful ways to increase the capacity of Discriminators which are not simply increasing parameter size. For example, by analogy to minibatch discrimination, one could try what we might call minibatch retrieval: using retrieval methods to boost Discriminator capacity by retrieving the real nearest-neighbors of every generated sample and passing them into the Discriminator to make its job much easier. Adding in such hard negatives from the dataset would help because, at scale, minibatch discrimination would stop being useful: it is ever more unlikely, even with minibatches of thousands of images like BigGAN, that any of the other images in the minibatch are all that similar to a fake or exhibit the same flaws.

This could be seen as parallel to retrieval diffusion models? See also “novelty nets”.

If so, this suggests that for D ranking, it may not be too useful to take the D from the end of a run, if not using data augmentation, because that D would be the version with the greatest degree of memorization!

Here is a simple StyleGAN2 script (ranker.py) to open a StyleGAN .pkl and run it on a list of image filenames to print out the D score, courtesy of Shao Xuning:

import pickle
import numpy as np
import dnnlib.tflib as tflib
import random
import argparse
import PIL.Image
from training.misc import adjust_dynamic_range

def preprocess(file_path):
    # print(file_path)
    img = np.asarray(PIL.Image.open(file_path))

    # Preprocessing from dataset_tool.create_from_images
    img = img.transpose([2, 0, 1]) # HWC => CHW
    # img = np.expand_dims(img, axis=0)
    img = img.reshape((1, 3, 512, 512))

    # Preprocessing from training_loop.process_reals
    img = adjust_dynamic_range(data=img, drange_in=[0, 255], drange_out=[-1.0, 1.0])
    return img

def main(args):
    random.seed(args.random_seed)
    minibatch_size = args.minibatch_size
    input_shape = (minibatch_size, 3, 512, 512)
    # print(args.images)
    images = args.images
    images.sort()

    tflib.init_tf()
    _G, D, _Gs = pickle.load(open(args.model, "rb"))
    # D.print_layers()

    # one (filename, list-of-scores) pair per image
    image_score_all = [(image, []) for image in images]

    # Shuffle the images and process each image in multiple minibatches.
    # Note: networks.stylegan2.minibatch_stddev_layer
    # calculates the standard deviation of a minibatch group as a feature channel,
    # which means that the output of the discriminator actually depends
    # on the companion images in the same minibatch.
    for i_shuffle in range(args.num_shuffles):
        # print('shuffle: {}'.format(i_shuffle))
        random.shuffle(image_score_all)
        for idx_1st_img in range(0, len(image_score_all), minibatch_size):
            idx_img_minibatch = []
            images_minibatch = []
            input_minibatch = np.zeros(input_shape)
            for i in range(minibatch_size):
                # wrap around so that the final minibatch is always full
                idx_img = (idx_1st_img + i) % len(image_score_all)
                idx_img_minibatch.append(idx_img)
                image = image_score_all[idx_img][0]
                images_minibatch.append(image)
                img = preprocess(image)
                input_minibatch[i, :] = img
            output = D.run(input_minibatch, None, resolution=512)
            print('shuffle: {}, indices: {}, images: {}'
                  .format(i_shuffle, idx_img_minibatch, images_minibatch))
            print('Output: {}'.format(output))
            for i in range(minibatch_size):
                idx_img = idx_img_minibatch[i]
                image_score_all[idx_img][1].append(output[i][0])

    # average each image's scores over all shuffles & append to the output file
    with open(args.output, 'a') as fout:
        for image, score_list in image_score_all:
            print('Image: {}, score_list: {}'.format(image, score_list))
            avg_score = sum(score_list)/len(score_list)
            fout.write(image + ' ' + str(avg_score) + '\n')

def parse_arguments():
    parser = argparse.ArgumentParser()
    parser.add_argument('--model', type=str, required=True,
                        help='.pkl model')
    parser.add_argument('--images', nargs='+', required=True)
    parser.add_argument('--output', type=str, default='rank.txt')
    parser.add_argument('--minibatch_size', type=int, default=4)
    parser.add_argument('--num_shuffles', type=int, default=5)
    parser.add_argument('--random_seed', type=int, default=0)
    return parser.parse_args()

if __name__ == '__main__':
    main(parse_arguments())

Depending on how noisy the rankings are in terms of ‘quality’ and available sample size, one can either review the worst-ranked images by hand, or delete the bottom X%. One should check the top-ranked images as well to make sure the ordering is right; there can also be some odd images in the top X% as well which should be removed.
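Picking out the bottom & top X% for review can be sketched as follows. This assumes the output format written by ranker.py (one `filename score` pair per line, separated by a single space); the function names and the 10% default are illustrative choices, not part of the original script.

```python
def load_ranking(lines):
    """Parse 'filename score' lines into a list of (score, filename), lowest score first."""
    ranked = []
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue
        # split on the LAST space so filenames containing spaces survive
        path, score = line.rsplit(" ", 1)
        ranked.append((float(score), path))
    ranked.sort()
    return ranked

def split_tails(ranked, fraction=0.1):
    """Return (bottom, top) filename lists, each holding `fraction` of the ranking."""
    if not ranked:
        return [], []
    k = max(1, int(len(ranked) * fraction))
    bottom = [path for _, path in ranked[:k]]
    top = [path for _, path in ranked[-k:]]
    return bottom, top

# Example with made-up scores:
example = ["a.jpg -3.5\n", "b c.jpg 2.0\n", "d.jpg 0.1\n", "e.jpg -0.2\n"]
bottom, top = split_tails(load_ranking(example), fraction=0.25)
print("review or delete:", bottom)
print("sanity-check:", top)
```

In practice one would pass `open("rank.txt")` to `load_ranking`. Because ranker.py opens its output in append mode, re-running it on the same images adds duplicate lines, so it may be worth deleting the old file first.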

It might be possible to use ranker.py to improve the quality of generated samples as well, as a simple version of discriminator rejection sampling.
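As a rough illustration of the idea, the sketch below applies an idealized rejection rule to already-scored generated samples: each sample is accepted with probability exp(score − max score), which is what rejection sampling reduces to if one assumes the Discriminator's raw output is a logit approximating the log density ratio between real and generated data. That assumption, the function name, and the input format are all simplifications for illustration; published discriminator rejection sampling methods add further corrections which are not shown here.

```python
import math
import random

def rejection_sample(scored_samples, rng=None):
    """Keep each (name, logit) sample with probability exp(logit - max_logit)."""
    rng = rng or random.Random(0)
    max_logit = max(logit for _, logit in scored_samples)
    kept = []
    for name, logit in scored_samples:
        accept_prob = math.exp(logit - max_logit)  # always in (0, 1]
        if rng.random() < accept_prob:
            kept.append(name)
    return kept

# Made-up D scores for four generated images:
samples = [("fake0.png", 1.2), ("fake1.png", -0.4), ("fake2.png", -1000.0), ("fake3.png", 0.9)]
print(rejection_sample(samples))
```

The best-scored sample is always kept, and samples the D considers very unlikely to be real are almost always dropped, at the cost of throwing away a large fraction of the generated images.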

Upscaling

The next major step is upscaling images using waifu2x, which does an excellent job on 2× upscaling of anime images: the results are nigh-indistinguishable from a higher-resolution original and greatly increase the usable corpus. The downside is that it can take 1–10s per image, must run on the GPU (I can reliably fit ~9 instances on my 2×1080ti), and is written in a now-unmaintained DL framework, Torch, with no current plans to port to PyTorch, and is gradually becoming harder to get running (one hopes that by the time CUDA updates break it entirely, there will be another super-resolution GAN I or someone else can train on Danbooru to replace it). If pressed for time, one can just upscale the faces normally with ImageMagick, but I believe there will be some quality loss, so the waifu2x step is worthwhile.

. ~/src/torch/install/bin/torch-activate
upscaleWaifu2x() {
    SIZE1=$(identify -format "%h" "$@")
    SIZE2=$(identify -format "%w" "$@");

    if (( $SIZE1 < 512 && $SIZE2 < 512 )); then
        echo "$@" $SIZE1 $SIZE2
        TMP=$(mktemp "/tmp/XXXXXX.png")
        CUDA_VISIBLE_DEVICES="$((RANDOM % 2 < 1))" nice th ~/src/waifu2x/waifu2x.lua -model_dir \
            ~/src/waifu2x/models/upconv_7/art -tta 1 -m scale -scale 2 \
            -i "$@" -o "$TMP"
        convert "$TMP" "$@"
        rm "$TMP"
    fi; }
export -f upscaleWaifu2x

find faces/ -type f | parallel --progress --jobs 9 upscaleWaifu2x

Quality Checks & Data Augmentation

The single most effective strategy to improve a GAN is to clean the data. StyleGAN cannot handle too-diverse datasets composed of multiple objects or single objects shifted around, and rare or odd images cannot be learned well. Karras et al get such good results with StyleGAN on faces in part because they constructed FFHQ to be an extremely clean consistent dataset of just centered well-lit clear human faces without any obstructions or other variation. Similarly, Arfa’s “This Fursona Does Not Exist” (TFDNE) S2 generates much better furry portraits than my own “This Waifu Does Not Exist” (TWDNE) S2 anime portraits, due partly to training longer to convergence on a TPU pod but mostly due to his investment in data cleaning: aligning the faces and heavy filtering of samples—this left him with only n = 50k but TFDNE nevertheless outperforms TWDNE’s n = 300k. (Data cleaning/augmentation is one of the more powerful ways to improve results; if we imagine deep learning as ‘programming’ or ‘Software 2.0’25 in Andrej Karpathy’s terms, data cleaning/augmentation is one of the easiest ways to finetune the loss function towards what we really want by gardening our data to remove what we don’t want and increase what we do.)

At this point, one can do manual quality checks by viewing a few hundred images, running findimagedupes -t 99% to look for near-identical faces, or dabble in further modifications such as doing “data augmentation”. Working with Danbooru2018, at this point one would have ~600–700,000 faces, which is more than enough to train StyleGAN and one will have difficulty storing the final StyleGAN dataset because of its sheer size (due to the ~18portrait dataset with n = 300k.

However, if that is not enough or one is working with a small dataset like for a single character, data augmentation may be necessary. The mirror/horizontal flip is not necessary as StyleGAN has that built-in as an option26, but there are many other possible data augmentations. One can stretch, shift colors, sharpen, blur, increase/decrease contrast/brightness, crop, and so on. An example, extremely aggressive, set of data augmentations could be done like this:

dataAugment () {
    image="$@"
    target=$(basename "$@")
    suffix="png"
    convert -deskew 50 "$image" "$target".deskew."$suffix"
    convert -resize 110%x100% "$image" "$target".horizstretch."$suffix"
    convert -resize 100%x110% "$image" "$target".vertstretch."$suffix"
    convert -blue-shift 1.1 "$image" "$target".midnight."$suffix"
    convert -fill red -colorize 5% "$image" "$target".red."$suffix"
    convert -fill orange -colorize 5% "$image" "$target".orange."$suffix"
    convert -fill yellow -colorize 5% "$image" "$target".yellow."$suffix"
    convert -fill green -colorize 5% "$image" "$target".green."$suffix"
    convert -fill blue -colorize 5% "$image" "$target".blue."$suffix"
    convert -fill purple -colorize 5% "$image" "$target".purple."$suffix"
    convert -adaptive-blur 3x2 "$image" "$target".blur."$suffix"
    convert -adaptive-sharpen 4x2 "$image" "$target".sharpen."$suffix"
    convert -brightness-contrast 10 "$image" "$target".brighter."$suffix"
    convert -brightness-contrast 10x10 "$image" "$target".brightercontraster."$suffix"
    convert -brightness-contrast -10 "$image" "$target".darker."$suffix"
    convert -brightness-contrast -10x10 "$image" "$target".darkerlesscontrast."$suffix"
    convert +level 5% "$image" "$target".contraster."$suffix"
    convert -level 5%\! "$image" "$target".lesscontrast."$suffix"
}
export -f dataAugment
find faces/ -type f | parallel --progress dataAugment

Upscaling & Conversion

Once any quality fixes or data augmentation are done, it’d be a good idea to save a lot of disk space by converting to JPG & lossily reducing quality (I find 33% saves a ton of space at no visible change):

convertPNGToJPG() { convert -quality 33 "$@" "$@".jpg && rm "$@"; }
export -f convertPNGToJPG
find faces/ -type f -name "*.png" | parallel --progress convertPNGToJPG

Remember that StyleGAN models are only compatible with images of the type they were trained on, so if you are using a StyleGAN pretrained model which was trained on PNGs (like, IIRC, the FFHQ StyleGAN models), you will need to keep using PNGs.

Doing the final scaling to exactly 512px can be done at many points but I generally postpone it to the end in order to work with images in their ‘native’ resolutions & aspect-ratios for as long as possible. At this point we carefully tell ImageMagick to rescale everything to exactly 512×512 pixels27, preserving each image’s aspect ratio (rather than stretching it) by filling in with a black background as necessary on either side:

find faces/ -type f | xargs --max-procs=16 -n 9000 \
    mogrify -resize 512x512\> -extent 512x512\> -gravity center -background black

Any slightly-different image could crash the import process. Therefore, we delete any image which is even slightly different from the 512×512 sRGB JPG they are supposed to be:

# remember the warning: images must be identical, square, and sRGB/grayscale:
find faces/ -type f | xargs --max-procs=16 -n 9000 identify | \
    grep -F -v " JPEG 512x512 512x512+0+0 8-bit sRGB"| cut -d ' ' -f 1 | \
    xargs --max-procs=16 -n 10000 rm

Having done all this, we should have a large consistent high-quality dataset.
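The same filter can be expressed in Python if one prefers to inspect the offenders before deleting them. This sketch assumes the output of ImageMagick's identify has been captured as text, one line per image, and that the expected substring is the same one used in the grep above; the function name and the sample lines are made up for illustration.

```python
EXPECTED = " JPEG 512x512 512x512+0+0 8-bit sRGB"

def nonconforming(identify_lines, expected=EXPECTED):
    """Return filenames whose identify line lacks the expected format/size/colorspace."""
    bad = []
    for line in identify_lines:
        line = line.strip()
        if line and expected not in line:
            # first space-separated field is the filename (same as `cut -d ' ' -f 1`)
            bad.append(line.split(" ", 1)[0])
    return bad

# Illustrative identify-style lines:
sample_lines = [
    "faces/1.jpg JPEG 512x512 512x512+0+0 8-bit sRGB 53.1KB 0.000u 0:00.000",
    "faces/2.jpg JPEG 512x513 512x513+0+0 8-bit sRGB 60KB 0.000u 0:00.000",
    "faces/3.jpg JPEG 512x512 512x512+0+0 8-bit Gray 256c 40KB 0.000u 0:00.000",
]
print(nonconforming(sample_lines))
```

Like the shell version, this takes the first space-separated field as the filename, so it will misbehave on paths containing spaces; printing the list first and deleting afterwards makes such surprises easier to catch.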

Finally, the faces can now be converted to the ProGAN or StyleGAN dataset format using dataset_tool.py. It is worth remembering at this point how fragile that is, and what the requirements are; ImageMagick’s identify command is handy for looking at files in more detail, particularly their resolution & colorspace, which are often the problem.

Because of the extreme fragility of dataset_tool.py, I strongly advise that you edit it to print out the filenames of each file as they are being processed so that when (not if) it crashes, you can investigate the culprit and check the rest. The edit could be as simple as this:

diff --git a/dataset_tool.py b/dataset_tool.py
index 4ddfe44..e64e40b 100755
--- a/dataset_tool.py
+++ b/dataset_tool.py
@@ -519,6 +519,7 @@ def create_from_images(tfrecord_dir, image_dir, shuffle):
     with TFRecordExporter(tfrecord_dir, len(image_filenames)) as tfr:
         order = tfr.choose_shuffled_order() if shuffle else np.arange(len(image_filenames))
         for idx in range(order.size):
+            print(image_filenames[order[idx]])
             img = np.asarray(PIL.Image.open(image_filenames[order[idx]]))
             if channels == 1:
                 img = img[np.newaxis, :, :] # HW => CHW

There should be no issues if all the images were thoroughly checked earlier, but should any images crash it, they can be checked in more detail by identify. (I advise just deleting them and not trying to rescue them.)

Then the conversion is just (assuming StyleGAN prerequisites are installed, see next section):

source activate MY_TENSORFLOW_ENVIRONMENT
python dataset_tool.py create_from_images datasets/faces /media/gwern/Data/danbooru2018/faces/

Congratulations, the hardest part is over. Most of the rest simply requires patience (and a willingness to edit Python files directly in order to configure StyleGAN).

Training

Installation

I assume you have CUDA installed & functioning. If not, good luck. (On my Ubuntu Bionic 18.04.2 LTS OS, I have successfully used the Nvidia driver version #410.104, CUDA 10.1, and TensorFlow 1.13.1.)

A Python ≥3.6 virtual environment can be set up for StyleGAN to keep dependencies tidy, with the TensorFlow & StyleGAN dependencies installed into it:

conda create -n stylegan pip python=3.6
source activate stylegan

## TF:
pip install tensorflow-gpu # StyleGAN 1 targets TensorFlow 1.x (1.13.1 here); pin the version if pip resolves to 2.x
## Test install:
python -c "import tensorflow as tf; tf.enable_eager_execution(); \
    print(tf.reduce_sum(tf.random_normal([1000, 1000])))"
pip install tensorboard

## StyleGAN:
## Install pre-requisites:
pip install pillow numpy moviepy scipy opencv-python lmdb # requests?
## Download:
git clone 'https://github.com/NVlabs/stylegan.git' && cd ./stylegan/
## Test install:
python pretrained_example.py
## ./results/example.png should be a photograph of a middle-aged man

StyleGAN can also be trained on the interactive Google Colab service, which provides free slices of K80 GPUs in 12-GPU-hour chunks, using this Colab notebook. Colab is much slower than training on a local machine & the free instances are not enough to train the best StyleGANs, but this might be a useful option for people who simply want to try it a little or who are doing something quick like extremely low-resolution training or transfer-learning where a few GPU-hours on a slow small GPU might be enough.

Configuration

StyleGAN doesn’t ship with any support for CLI options; instead, one must edit train.py and training/training_loop.py:

training/training_loop.py

The core configuration is done in the default arguments of the function training_loop, beginning at line 112.

The key arguments are G_smoothing_kimg & D_repeats (which affect the learning dynamics), network_snapshot_ticks (how often to save the pickle snapshots: more frequent means less progress lost in crashes, but as each one weighs 300MB+, they can quickly use up gigabytes of space), resume_run_id (set to "latest"), and resume_kimg.

Don’t Erase Your Model

resume_kimg governs where in the overall progressive-growing training schedule StyleGAN starts from. If it is set to 0, training begins at the beginning of the progressive-growing schedule, at the lowest resolution, regardless of how much training has been previously done. It is vitally important when doing transfer learning that it is set to a sufficiently high number (eg. 10000) that training begins at the highest desired resolution like 512px, as it appears that layers are erased when added during progressive-growing. (resume_kimg may also need to be set to a high value to make it skip straight to training at the highest resolution if you are training on small datasets of small images, where there’s risk of it overfitting under the normal training schedule and never reaching the highest resolution.) This trick is unnecessary in StyleGAN 2, which is simpler in not using progressive growing.

More experimentally, I suggest setting minibatch_repeats = 1 instead of minibatch_repeats = 5; in line with the suspiciousness of the gradient-accumulation implementation in ProGAN/StyleGAN, this appears to make training both stabler & faster.

Note that some of these variables, like learning rates, are overridden in train.py. It’s better to set those there or else you may confuse yourself badly (like I did in wondering why ProGAN & StyleGAN seemed extraordinarily robust to large changes in the learning rates…).

train.py (previously config.py in ProGAN; renamed run_training.py in StyleGAN 2)

Here we set the number of GPUs, image resolution, dataset, learning rates, horizontal flipping/mirroring data augmentation, and minibatch sizes. (This file includes settings intended for ProGAN; watch out that you don’t accidentally turn on ProGAN instead of StyleGAN & confuse yourself.) Learning rate & minibatch should generally be left alone (except towards the end of training when one wants to lower the learning rate to promote convergence or rebalance the G/D), but the image resolution/dataset/mirroring do need to be set, like thus:

desc += '-faces'; dataset = EasyDict(tfrecord_dir='faces', resolution=512); train.mirror_augment = True

This sets up the 512px face dataset which was previously created in datasets/faces, turns on mirroring (because while there may be writing in the background, we don’t care about it for face generation), and sets a title for the checkpoints/logs, which will now appear in results/ with the ‘-faces’ string.

Assuming you do not have 8 GPUs (as you probably do not), you must change the -preset to match your number of GPUs; StyleGAN will not automatically choose the correct number of GPUs. If you fail to set it to the appropriate preset, StyleGAN will attempt to use GPUs which do not exist and will crash with an opaque error message (note that CUDA uses zero-indexing so GPU:0 refers to the first GPU, GPU:1 refers to my second GPU, and thus /device:GPU:2 refers to my nonexistent third GPU):

tensorflow.python.framework.errors_impl.InvalidArgumentError: Cannot assign a device for operation \
    G_synthesis_3/lod: {{node G_synthesis_3/lod}}was explicitly assigned to /device:GPU:2 but available \
    devices are [ /job:localhost/replica:0/task:0/device:CPU:0, /job:localhost/replica:0/task:0/device:GPU:0, \
    /job:localhost/replica:0/task:0/device:GPU:1, /job:localhost/replica:0/task:0/device:XLA_CPU:0, \
    /job:localhost/replica:0/task:0/device:XLA_GPU:0, /job:localhost/replica:0/task:0/device:XLA_GPU:1 ]. \
    Make sure the device specification refers to a valid device. [[{{node G_synthesis_3/lod}}]]

For my 2×1080ti I’d set:

desc += '-preset-v2-2gpus'; submit_config.num_gpus = 2; sched.minibatch_base = 8; sched.minibatch_dict = \
    {4: 256, 8: 256, 16: 128, 32: 64, 64: 32, 128: 16, 256: 8}; sched.G_lrate_dict = {512: 0.0015, 1024: 0.002}; \
    sched.D_lrate_dict = EasyDict(sched.G_lrate_dict); train.total_kimg = 99000

So my results get saved to results/00001-sgan-faces-2gpu etc. (the run ID increments, ‘sgan’ because StyleGAN rather than ProGAN, ‘-faces’ as the dataset being trained on, and ‘2gpu’ because it’s multi-GPU).
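To see why resume_kimg matters so much, it may help to sketch how a progressive-growing schedule maps training progress to resolution. The following is a simplified illustration, not StyleGAN's actual code: the function name resolution_at is hypothetical, and the 8px starting resolution and the 600 kimg training + 600 kimg fade-in per level are assumptions that should be checked against the values in your own train.py and training_loop.py.

```python
import math

def resolution_at(kimg, final_resolution=512, initial_resolution=8,
                  training_kimg=600, transition_kimg=600):
    """Return the resolution (px) being trained after `kimg` thousand images.

    Assumed schedule: each level of detail (lod) trains for `training_kimg`,
    then the next resolution is faded in over `transition_kimg`.
    """
    phase_dur = training_kimg + transition_kimg
    phase_idx = int(kimg // phase_dur)
    phase_kimg = kimg - phase_idx * phase_dur
    # lod counts how many doublings remain until the final resolution.
    lod = math.log2(final_resolution) - math.log2(initial_resolution) - phase_idx
    if transition_kimg > 0:
        # During the fade-in, lod decreases linearly towards the next level.
        lod -= max(phase_kimg - training_kimg, 0.0) / transition_kimg
    lod = max(lod, 0.0)
    return 2 ** (int(math.log2(final_resolution)) - math.floor(lod))

for kimg in (0, 1200, 6000, 10000):
    print(kimg, resolution_at(kimg))
# 0 8
# 1200 16
# 6000 256
# 10000 512
```

Under these assumed settings, resuming at 0 restarts training at 8px, while resuming at 10000 lands past the end of the growing schedule, so training stays at the full 512px.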

Running

I typically run StyleGAN in a screen session which can be detached and keeps multiple shells organized: 1 terminal/shell for the StyleGAN run, 1 terminal/shell for TensorBoard, and 1 for Emacs.

With Emacs, I keep the two key Python files open (train.py and training/training_loop.py) for reference & easy editing.

With the “latest” patch, StyleGAN can be thrown into a while-loop to keep running after crashes, like:

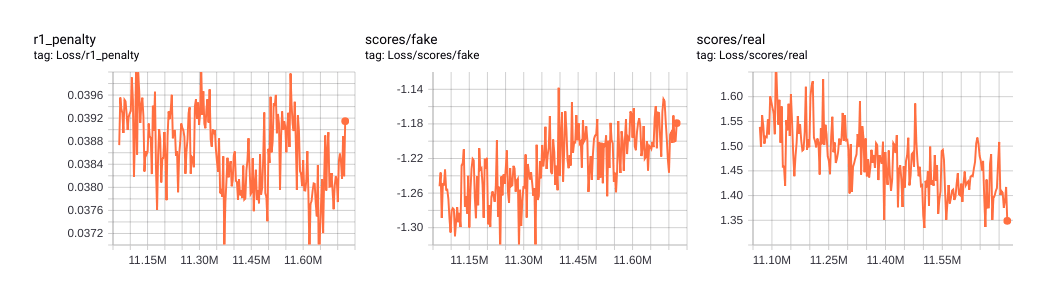

while true; do nice python train.py; date; (xmessage "alert: StyleGAN crashed" &); sleep 10s; done

TensorBoard is a logging utility which displays small time-series plots of recorded variables, viewed in a web browser, eg:

tensorboard --logdir results/02022-sgan-faces-2gpu/
# TensorBoard 1.13.0 at http://127.0.0.1:6006 (Press CTRL+C to quit)

Note that TensorBoard can be backgrounded, but needs to be updated every time a new run is started as the results will then be in a different folder.
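Because each new run writes to a new folder, the --logdir argument has to be looked up again after every restart. A minimal sketch of that lookup follows, assuming run directories are named with a numeric ID followed by a hyphen (as in results/02022-sgan-faces-2gpu); latest_run_dir is a hypothetical helper, not something StyleGAN provides.

```python
import os
import re

def latest_run_dir(results_dir="results"):
    """Return the run directory with the highest numeric ID prefix,
    or None if `results_dir` contains no run directories."""
    runs = []
    for name in os.listdir(results_dir):
        match = re.match(r"(\d+)-", name)
        if match and os.path.isdir(os.path.join(results_dir, name)):
            runs.append((int(match.group(1)), name))
    if not runs:
        return None
    # max() compares the numeric ID first, so the newest run wins.
    return os.path.join(results_dir, max(runs)[1])
```

The returned path can then be passed to tensorboard --logdir when restarting TensorBoard.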

Training StyleGAN is much easier & more reliable than other GANs, but it is still more of an art than a science. (We put up with it because while GANs suck, everything else sucks more.) Notes on training:

Crashproofing:

The initial release of StyleGAN was prone to crashing when I ran it, segfaulting at random. Updating TensorFlow appeared to reduce this but the root cause is still unknown. Segfaulting or crashing is also reportedly common if running on mixed GPUs (eg. a 1080ti + Titan V).

Unfortunately, StyleGAN has no setting for simply resuming from the latest snapshot after crashing/exiting (which is what one usually wants), and one must manually edit the resume_run_id line in training_loop.py to set it to the latest run ID. This is tedious and error-prone: at one point I realized I had wasted 6 GPU-days of training by restarting from a 3-day-old snapshot because I had not updated the resume_run_id after a segfault!

If you are doing any runs longer than a few wallclock hours, I strongly advise use of nshepperd’s patch to automatically restart from the latest snapshot by setting resume_run_id = "latest":

diff --git a/training/misc.py b/training/misc.py
index 50ae51c..d906a2d 100755
--- a/training/misc.py
+++ b/training/misc.py
@@ -119,6 +119,14 @@ def list_network_pkls(run_id_or_run_dir, include_final=True):
         del pkls[0]
     return pkls
 
+def locate_latest_pkl():
+    allpickles = sorted(glob.glob(os.path.join(config.result_dir, '0*', 'network-*.pkl')))
+    latest_pickle = allpickles[-1]
+    resume_run_id = os.path.basename(os.path.dirname(latest_pickle))
+    RE_KIMG = re.compile('network-snapshot-(\d+).pkl')
+    kimg = int(RE_KIMG.match(os.path.basename(latest_pickle)).group(1))
+    return (locate_network_pkl(resume_run_id), float(kimg))
+
 def locate_network_pkl(run_id_or_run_dir_or_network_pkl, snapshot_or_network_pkl=None):
     for candidate in [snapshot_or_network_pkl, run_id_or_run_dir_or_network_pkl]:
         if isinstance(candidate, str):
diff --git a/training/training_loop.py b/training/training_loop.py
index 78d6fe1..20966d9 100755
--- a/training/training_loop.py
+++ b/training/training_loop.py
@@ -148,7 +148,10 @@ def training_loop(
     # Construct networks.
     with tf.device('/gpu:0'):
         if resume_run_id is not None:
-            network_pkl = misc.locate_network_pkl(resume_run_id, resume_snapshot)
+            if resume_run_id == 'latest':
+                network_pkl, resume_kimg = misc.locate_latest_pkl()
+            else:
+                network_pkl = misc.locate_network_pkl(resume_run_id, resume_snapshot)
             print('Loading networks from "%s"...' % network_pkl)
             G, D, Gs = misc.load_pkl(network_pkl)
         else:

(The diff can be edited by hand, or copied into the repo as a file like latest.patch & then applied with git apply latest.patch.)

Tuning Learning Rates

The learning rate (LR) is one of the most critical hyperparameters: too-large updates based on too-small minibatches are devastating to GAN stability & final quality. The LR also seems to interact with the intrinsic difficulty or diversity of an image domain; Karras et al 2019 use 0.003 G/D LRs on their FFHQ dataset (which has been carefully curated and the faces aligned to put landmarks like eyes/mouth in the same locations in every image) when training on 8-GPU machines with minibatches of n = 32, but I find lower to be better on my anime face/portrait datasets where I can only do n = 8. From looking at training videos of whole-Danbooru2018 StyleGAN runs, I suspect that the necessary LRs would be lower still. Learning rates are closely related to minibatch size (a common rule of thumb in supervised learning of CNNs is that the largest usable LR scales with the square root of the minibatch size) and the BigGAN research argues that minibatch size itself strongly influences how bad mode dropping is, which suggests that smaller LRs may be more necessary the more diverse/difficult a dataset is.
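As a worked example of the square-root rule of thumb, the sketch below rescales a reference LR to a different minibatch size. The helper name scale_lr_sqrt is hypothetical, and the rule itself is a heuristic borrowed from supervised learning, so the result is a starting point for experimentation rather than a recommendation.

```python
import math

def scale_lr_sqrt(base_lr, base_minibatch, new_minibatch):
    """Scale a learning rate by the square root of the minibatch-size ratio."""
    return base_lr * math.sqrt(new_minibatch / base_minibatch)

# Reference setting from the text: LR 0.003 at n = 32, rescaled to n = 8.
print(scale_lr_sqrt(0.003, 32, 8))
```

Going from n = 32 to n = 8 halves the LR under this rule, giving roughly 0.0015, which happens to coincide with the 512px learning rate in the 2-GPU configuration shown earlier.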

Balancing G/D:

Screenshot of TensorBoard G/D losses for an anime face StyleGAN making progress towards convergence

Later in training, if the G is not making good progress towards the ultimate goal of a 0.5 loss (and the D’s loss gradually decreasing towards 0.5), and has a loss stubbornly stuck around −1 or something, it may be necessary to change the balance of G/D. This can be done several ways but the easiest is to adjust the LRs in train.py, sched.G_lrate_dict & sched.D_lrate_dict.

One needs to keep an eye on the G/D losses and also the perceptual quality of the faces (since we don’t have any good FID equivalent yet for anime faces, which requires a good open-source Danbooru tagger to create embeddings), and reduce both LRs (or usually just the D’s LR) based on the face quality and whether the G/D losses are exploding or otherwise look imbalanced. What you want, I think, is for the G/D losses to be stable at a certain absolute amount for a long time while the quality visibly improves, reducing D’s LR as necessary to keep it balanced with G; and then once you’ve run out of time/patience or artifacts are showing up, then you can decrease both LRs to converge onto a local optimum.

I find the default of 0.003 can be too high once quality reaches a high level with both faces & portraits, and it helps to reduce it to a third (0.001) or a tenth (0.0003). If there still isn’t convergence, the D may be too strong and it can be turned down separately, to a tenth or a fiftieth even. (Given the stochasticity of training & the relativity of the losses, one should wait several wallclock hours or days after each modification to see if it made a difference.)
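The arithmetic of these reductions can be sketched as a small helper that builds the two per-resolution dictionaries. The function rebalanced_lrates and its parameters are hypothetical, and the resolutions used as keys are only illustrative; the resulting dictionaries would be assigned by hand to sched.G_lrate_dict and sched.D_lrate_dict in train.py.

```python
def rebalanced_lrates(base_lr=0.003, shrink=3.0, d_extra_shrink=1.0,
                      resolutions=(512, 1024)):
    """Build per-resolution G and D learning-rate dicts.

    Both LRs are divided by `shrink`; the D's LR is divided again by
    `d_extra_shrink` to weaken a discriminator that is too strong.
    """
    g_lr = base_lr / shrink
    d_lr = g_lr / d_extra_shrink
    g_dict = {res: g_lr for res in resolutions}
    d_dict = {res: d_lr for res in resolutions}
    return g_dict, d_dict

# Both LRs cut to a tenth, then the D cut to a further tenth of that.
g_lrates, d_lrates = rebalanced_lrates(shrink=10.0, d_extra_shrink=10.0)
print(g_lrates, d_lrates)
```

Keeping the two factors separate makes it easier to record which change was made when, which matters given how long one has to wait to judge each modification.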

Skipping FID metrics:

Some metrics are computed for logging/reporting. The FID metrics are calculated using an old ImageNet CNN; what is realistic on ImageNet may have little to do with your particular domain and while a large FID like 100 is concerning, FIDs like 20 or even increasing are not necessarily a problem or useful guidance compared to just looking at the generated samples or the loss curves. Given that computing FID metrics is not free & potentially irrelevant or misleading on many image domains, I suggest disabling them entirely. (They are not used in the training for anything, and disabling them is safe.)

They can be edited out of the main training loop by commenting out the call to metrics.run like so:

@@ -261,7 +265,7 @@ def training_loop()
             if cur_tick % network_snapshot_ticks == 0 or done or cur_tick == 1:
                 pkl = os.path.join(submit_config.run_dir, 'network-snapshot-%06d.pkl' % (cur_nimg // 1000))
                 misc.save_pkl((G, D, Gs), pkl)
                 # metrics.run(pkl, run_dir=submit_config.run_dir, num_gpus=submit_config.num_gpus, tf_config=tf_config)

‘Blob’ & ‘Crack’ Artifacts: