Miscellaneous

Misc thoughts, memories, proto-essays, musings, etc.

- Quickies

- Plumbing Vs Internet, Revisited

- LLM Challenge: Writing Non-Biblical Sentences

- Trajectoid Words

- Non-Existence Is Bad

- Celebrity Masquerade Game

- Pemmican

- Rock Paper Scissors

- Backfire Effects in Operant Conditioning

- On Being Sick As A Kid

- On First Looking Into Tolkien’s Tower

- Hash Functions

- Icepires

- Cleanup: Before Or After?

- Peak Human Speed

- Oldest Food

- Zuckerberg Futures

- Russia

- Conscientiousness And Online Education

- Fiction

- American Light Novels’ Absence

- Cultural Growth through Diversity

- TV & the Matrix

- Cherchez Le Chien: Dogs As Class Markers in Anime

- Tradeoffs and Costly Signaling in Appearances: the Case of Long Hair

- The Tragedy of Grand Admiral Thrawn

- On Dropping Family Guy

- Pom Poko’s Glorification of Group Suicide

- Full Metal Alchemist: Pride and Knowledge

- A Secular Humanist Reads The Tale of Genji

- Economics

- Long Term Investment

- Measuring Social Trust by Offering Free Lunches

- Lip Reading Website

- Good Governance & Girl Scouts

- Chinese Kremlinology

- Domain-Squatting Externalities

- Ordinary Life Improvements

- A Market For Fat: The Transfer Machine

- Urban Area Cost-Of-Living As Big Tech Moats & Employee Golden Handcuffs

- Psychology

- Technology

- Somatic Genetic Engineering

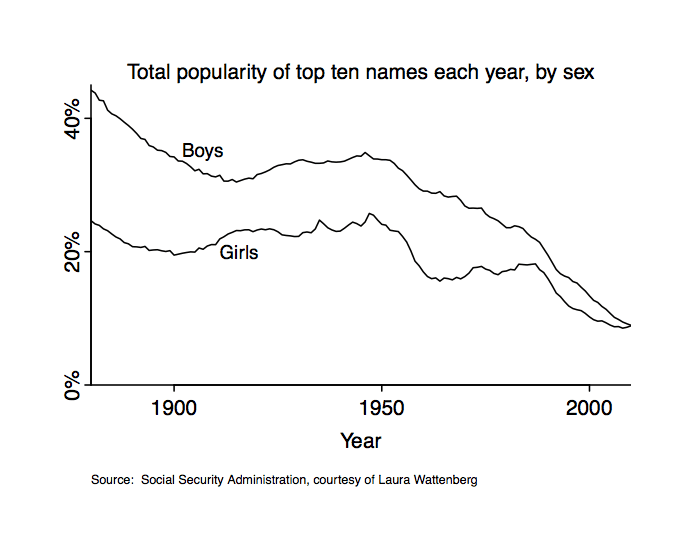

- The Advantage of an Uncommon Name

- Backups: Life and Death

- Measuring Multiple times in a Sandglass

- Powerful Natural Languages

- A Bitcoin+BitTorrent-Driven Economy for Creators (Artcoin)

- William Carlos Williams

- Simplicity Is the Price of Reliability

- November 2016 Data Loss Postmortem

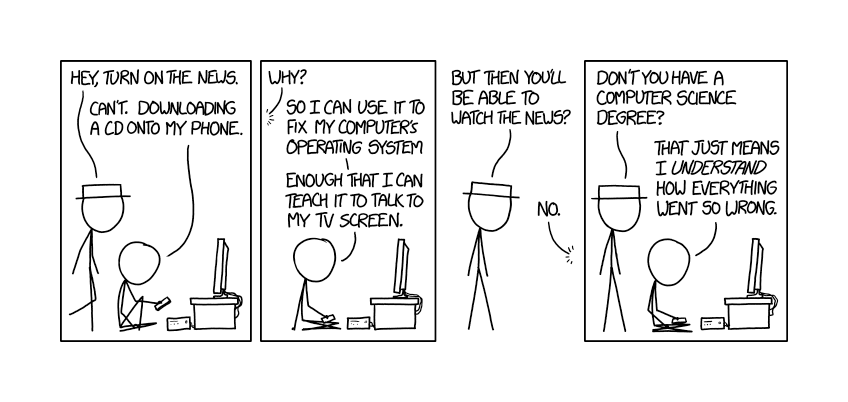

- Cats and Computer Keyboards

- How Would You Prove You Are a Time-Traveler From the Past?

- ARPA and SCI: Surfing AI (Review Of 2002)

- Open Questions

- The Reverse Amara’s Law

- Worldbuilding: The Lights in the Sky Are Sacs

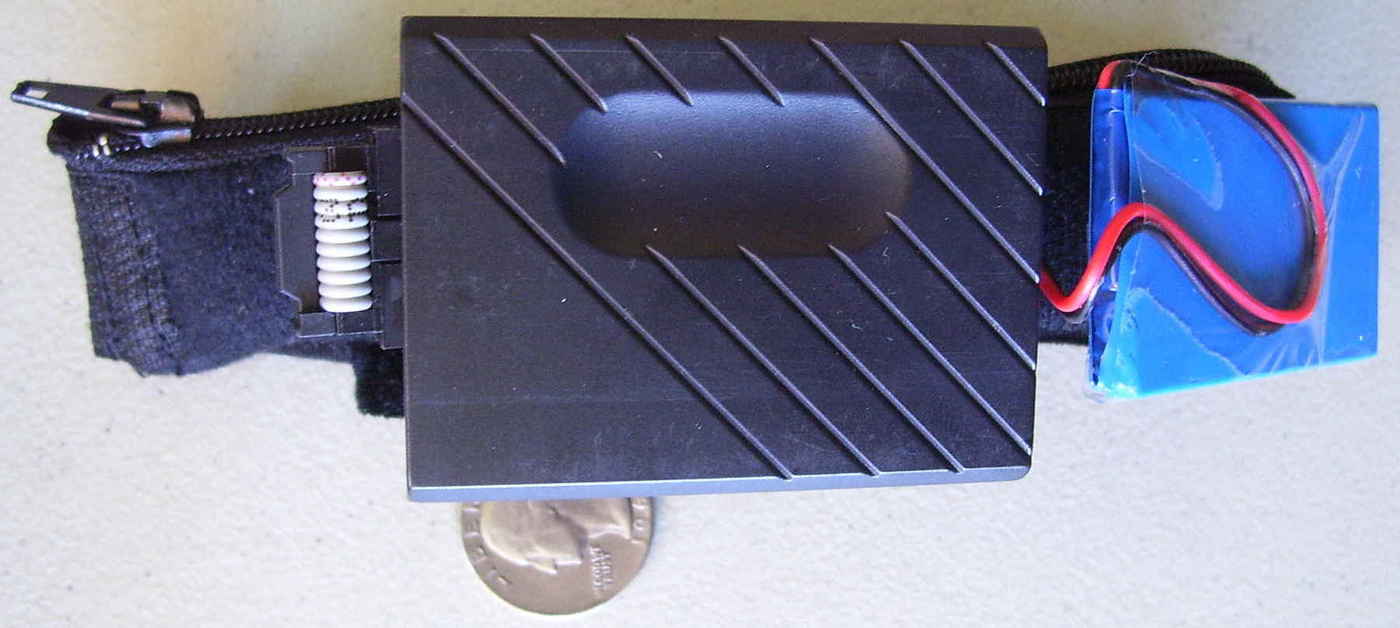

- Remote Monitoring

- Surprising Turing-Complete Languages

- North Paw

- Leaf Burgers

- Night Watch

- Two Cows: Philosophy

- Venusian Revolution

- Hard Problems in Utilitarianism

- Who Lives Longer, Men or Women?

- Politicians Are Not Unethical

- Defining ‘But’

- On Meta-Ethical Optimization

- Alternate Futures: The Second English Restoration

- Cicadas

- No-Poo Self-Experiment

- Newton’s System of the World and Comets

- Rationality Heuristic for Bias Detection: Updating Towards the Net Weight of Evidence

- Littlewood’s Law and the Global Media

- D&D Game #2 Log

- Highly Potent Drugs As Psychological Warfare Weapons

- Who Buys Fonts?

- Twitter Follow-Request UX Problems

- The Diamond Earrings

- Advanced Chess Obituary

Quickies

A game theory curiosity: what is the role of infrastructure?

It’s interesting that though Assad, ISIS, China, Russia etc. all know that computers & networks are heavily pwned by their enemies like the USA, they still can’t bring themselves to take them down or stop using them. ISIS, while losing, continued to heavily use smartphones and the internet despite knowing they were of intense interest to its enemies/the West, and despite the West signaling its estimate of the danger to ISIS by allowing the networks & phones to continue to operate instead of destroying/disabling them (as would be trivial to do with a few bombing missions). An easier example is North Korea, which continues to allow tourists to pay it thousands of dollars, knowing that the tourists claim that they are in fact undermining the regime through consciousness-raising & contact with foreigners. NK and the tourists can’t both be right; but while it’s obvious who’s right in the case of NK (it’s NK, and the tourists are immoral and evil for going and helping prop up the regime with the hard foreign currency it desperately needs to buy off its elite & run things like its ICBM & nuclear bomb programs), it’s not so obvious in other cases.

To me, it seems like ISIS is hurt rather than helped on net by the cellphone towers in its territory, as the data can be used against it in so many ways while the benefits of propaganda are limited, and ISIS certainly cannot ‘hack back’ and benefit to a similar degree by collecting info on US/Iraqi forces; but apparently ISIS disagrees. So this is an odd sort of situation. ISIS must believe it is helped on net and the US is harmed on net by cellphones & limited Internet, so it doesn’t blow up all the cellphone towers in its territory (they’re easy to find). Meanwhile, the US seems to believe it is helped on net and ISIS is harmed on net by cellphones & limited Internet, so it doesn’t blow up or brick all the cellphone towers in ISIS territory (they’re easy to find). But they can’t both be right, and they both know the other’s view on it.

Some possible explanations: one side is wrong, and too irrational to realize that it’s a bad sign that the enemy is permitting it to keep using smartphones/Internet; one or more sides thinks that leaving the infrastructure alone is hurting it but that the benefits to civilians are enough (yeah, right); or one side agrees it’s worse off, but lacks the internal discipline to enforce proper OPSEC (plausible for ISIS), and so has to keep using it (a partial abandonment being worse than full use).

Big blocks are critical to Bitcoin’s scaling to higher transaction rates; after a lot of arguing with no progress, some people made Bitcoin Unlimited and other forks, and promptly screwed up the coding and seem to’ve engaged in some highly unethical tactics as well, thereby helping discredit allowing larger blocks in the original Bitcoin; does this make it a real-world example of the unilateralist’s curse?
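The unilateralist’s curse itself is easy to simulate. A minimal sketch, assuming a toy model that is not from the source: several independent actors each see a noisy estimate of an action’s true value, and any one of them carries out the action alone whenever its own estimate is positive. The function name and parameters are hypothetical.

```python
import random


def prob_someone_acts(true_value, n_agents, noise_sd, trials=20_000, seed=0):
    """Estimate how often at least one of n_agents acts unilaterally.

    Toy model (an assumption): each agent observes true_value plus
    independent Gaussian noise and acts alone if its estimate is > 0.
    """
    rng = random.Random(seed)
    acted = 0
    for _ in range(trials):
        estimates = (true_value + rng.gauss(0, noise_sd) for _ in range(n_agents))
        if any(e > 0 for e in estimates):
            acted += 1
    return acted / trials


# An action that is mildly harmful on net (true value -1), judged noisily (sd 1):
for n in (1, 5, 20):
    print(n, round(prob_someone_acts(-1.0, n, 1.0), 2))
```

Analytically the chance is 1 − Φ(1)ⁿ: about 16% for a lone actor, but over 95% with twenty actors, so the harmful action almost certainly happens even though each individual actor usually judges it correctly.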

The most recent SEP entry on logical empiricism really reinforces how much America benefited from WWII and the diaspora of logicians, mathematicians, philosophers and geniuses of every stripe from Europe (something I’ve remarked on while reading academic biographies). You can trace back so much in just computing alone to all of their work! It’s impossible to miss; in intellectual history, there is always “before WWII”, and “after WWII”.

In retrospect, it’s crazy that the US government was so blind and did so little to create or at least assist this brain drain, which was accomplished by neglect and accident and by other researchers working privately to bring over the likes of Albert Einstein and John von Neumann and all the others to Princeton and elsewhere. Was this not one of the greatest bonanzas of R&D in human history? The US got much of the cream of Europe, a generation’s geniuses, at a critical moment in history. (And to the extent that it didn’t, because they died during the war or were captured by the Soviets, that merely indicates the lack of efforts made before the war: few were such ideological Marxists that they would have refused lucrative American offers and stayed in Europe in order to try to join the USSR instead.) This admittedly is hindsight, but it’s striking to think of what enormous returns some investments of $1–2m & frictionless green cards would have generated in 1930–1940. If someone could have just gone around to all the promising Jewish grad students in Germany and offered them a no-strings-attached green card + a $1k salary stipend for a few years… For the cost of a few battleships or bombers, how many of the people in the German rocketry or nuclear program could’ve been lured away, and not ultimately have had to take the technology developed with their talents to the Soviets?

Despite the mad scramble of Operation Paperclip, which one would think would have impressed on the US the importance of lubricating brain drain, this oversight continues: not only does US immigration law make life hard on grad students or researchers, it’s amazing to think that if war or depression broke out right now in East Asia, the US isn’t standing ready to cream off all the Chinese/Korean/Japanese researchers; instead they must trickle through the broken US system, with no points-based skill immigration assistance!

It’s unfortunate that we’re unable to learn from that and do anything comparable in the US, even basic measures like PhDs qualifying for green cards.

So much of the strange and unique culture of California, like the ‘human potential movement’, seems to historically trace back to German Romanticism. All the physical culture, anti-vaxxers, granola, nudism, homeopathy, homosexuality, pornography, all of it seems to stem either intellectually or through emigration to Germans, in a way that is almost entirely unrecognized in any discussions I’ve seen. (Consider the Nazis’ associations with things like vegetarianism or animal rights or anti-smoking campaigns, among other things.) No one does rationality and science and technology quite like the Germans… but also no one goes insane quite like the Germans.

So, LED lights are much cheaper to operate and run cooler, so they can emit much more light. When something gets cheaper, people buy a lot more of it. The leading theory right now about myopia is that it’s caused by the adaptive growing eye not receiving enough bright sunlight (which is orders of magnitude brighter than indoor light) and therefore growing incorrectly. So would the spread of LED lighting lead to a reduction in the sky-high myopia rates of industrialized countries? What about smartphone use, as they are bright light sources beamed straight into growing eyes?

People often note a ‘sophomore slump’ or ‘sequelitis’ where the second work in a series or the other works by an author are noticeably worse than the first and most popular.

Some of this can be inherent to the successor, since it cannot, for example, benefit from the magic of world-building a second time. But some of this is also going to be regression to the mean: by definition, if you start with an author’s best work, the next one can’t be better and probably will be worse. For the next one to be as good or better, either the author would need to be extremely consistent in output quality or you would need to start with one of their lesser works; the former is hard and few authors can manage it (and the ones who can are probably writing unchallenging dreck like pulp fiction), but the latter, as inept as it might seem (why would a reader want to start with the second-best book?), actually does happen, because media markets are characterized by extreme levels of noise exacerbated by winner-take-all dynamics and long tails of extreme outcomes.

So an author’s most popular work may well not be their best, because it is simply a fluke of luck; in most universes, J.K. Rowling does not become a billionaire on the strength of a mega-blockbuster book & movie series but publishes a few obscure well-regarded children’s books and does something else (note how few people read her non-Harry Potter books, and how many of them do so because they are fans of HP).

What might it look like in terms of ratings when an author publishes several works, then one of them takes off for random reasons, and then the author publishes more? Probably the initial works will meander around the author’s mean as their few fans rate them relatively objectively, the popular work will receive very high ratings from the vast masses who cotton on to it, and then subsequent works will be biased upwards by the extreme fans now devoted to a famous author, but still fall below the original since “it just doesn’t have the same magic”.

Authors don’t improve that much over time, so the discontinuity between pre- and post-popular ratings or sales can be attributed to the popularity and give an idea of how biased media markets are.
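The regression-to-the-mean part of this argument can be checked with a toy simulation. A minimal sketch, assuming (not from the source) that each work’s popularity is its quality plus a larger dose of independent luck; it deliberately leaves out fan bias, and all names and parameter values are hypothetical.

```python
import random
import statistics


def sophomore_slump(n_works=5, quality_sd=1.0, luck_sd=3.0, authors=10_000, seed=0):
    """Simulate authors whose popularity is quality plus heavy luck noise.

    Returns (mean popularity of each author's biggest hit,
             mean popularity of the work written right after it,
             fraction of authors whose biggest hit is also their best work).
    """
    rng = random.Random(seed)
    hits, follow_ups, hit_is_best = [], [], 0
    for _ in range(authors):
        quality = [rng.gauss(0, quality_sd) for _ in range(n_works + 1)]
        popularity = [q + rng.gauss(0, luck_sd) for q in quality]
        # Only the first n_works can be "the hit"; the extra work guarantees
        # that every hit has a successor.
        hit = max(range(n_works), key=lambda i: popularity[i])
        hits.append(popularity[hit])
        follow_ups.append(popularity[hit + 1])
        if quality[hit] == max(quality[:n_works]):
            hit_is_best += 1
    return statistics.mean(hits), statistics.mean(follow_ups), hit_is_best / authors


hit, follow_up, best = sophomore_slump()
print(f"hit: {hit:.2f}, next work: {follow_up:.2f}, hit is also best work: {best:.0%}")
```

When luck dominates, the biggest hit sits far above the author’s next work on average, and it is often not the author’s best work at all.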

A striking demonstration that entertainment doesn’t matter much is how, over the last 10 or 20 years, the size of the corpora you can access easily and for free has increased by several orders of magnitude without making even a hiccup in happiness or life-satisfaction surveys, longevity, or suicide rates.

Going back to the media abundance numbers: there are billions of videos on YouTube; millions of books on Libgen; god knows how much on the Internet Archive or the Internet in general; all of this for free and typically within a few clicks. In contrast, historically, people were media-poor to an almost unfathomable degree.

A single book might cost a month’s or a year’s salary. A village’s only book might be a copy of the Bible. The nearest library might well be a private collection and, if one could get access, have a few dozen books at most, many of which would be common (but extremely expensive) books; hence the infinite number of medieval manuscripts of the Bible, Plato, or works like the Roman de la Rose (hugely popular but now of interest only to specialists), while critically important works like Tacitus or Lucretius survive in a handful of manuscripts or a single one, indicating few circulating copies. So in a lucky lifetime, one might read (assuming, of course, one is lucky enough to be literate) a few dozen or hundred books of any type. It’s no wonder that everyone was deeply familiar with any throwaway Biblical or Greek mythological allusion, when such a book might be the only one available, read repeatedly and shared across many people.

What about stories and recitations and music? Oral culture, based on familiar standards, traditions, and religions, and involved in ritual functions (one of the key aspects of ritual is that it repeats), does not offer much abundance to the individual either; hence the ability to construct phylogenetic trees of folk tales and follow their slow dissemination and mutation over the centuries, or perhaps as far back as 6 millennia in the case of “The Smith and the Devil”.

Why does hardly anyone seem to have noticed? Why is it not the central issue of our time? Why do brief discussions of copyright or YouTube get immediately pushed out of discussions by funny cat pics? Why are people so agonized by inflation going up 1% this year or wages remaining static, when the quantity of art available per dollar increases so much every year, if art is such a panacea? The answer of course is that “art is not about esthetics”, and people bloviating about how a novel saved their life are deluded or virtue-signaling; it did no such thing. Media/art is almost perfectly substitutable, there is already far more than is necessary, the effect of media on one’s beliefs or personality is nil, and so on.

It’s always surprising to read about classical music and how recent the popularity of much of it is. Some classical music pieces are oddly recent things, like crosswords only recently turning 100 years old.

For example, Vivaldi’s 1721 “Four Seasons”: what could be more commonplace or popular? It’s up there with “Ode to Joy” or Pachelbel’s Canon in terms of being overexposed to the point of nausea.

Yet, if you read Wikipedia, Vivaldi was effectively forgotten after 1800, all the way until the 1930s, and “Four Seasons” didn’t even get recorded until 1939!

Pachelbel’s Canon turns out to be another one: not even published until 1919, not recorded until 1940, and only popularized in 1968! Since it might’ve been composed as early as 1680, it took almost 300 years to become famous.

One thing I notice about different intellectual fields is the vastly different levels of cleverness and rigor applied.

Mathematicians and theoretical physicists make the most astonishingly intricate & difficult theories & calculations that few humans could ever appreciate even after decades of intense study, while in another field like education research, one is grateful if a researcher can use a t-test and understands correlation!=causation. (Hence notorious phenomena like physicists moving into a random field and casually making an important improvement; one thinks of dclayh’s anecdote about the mathematician who casually made a major improvement in computer circuit design and then stopped, as the topic was clearly unworthy of him.) This holds true for the average researcher and is reflected in things like GRE scores by major, and reproducibility rates of papers. (Aubrey de Grey: “It has always appalled me that really bright scientists almost all work in the most competitive fields, the ones in which they are making the least difference. In other words, if they were hit by a truck, the same discovery would be made by somebody else about 10 minutes later.”)

This does not reflect the relative importance of fields, either: is education less important to human society than refining physics’s Standard Model? Arguably, as physicists & mathematicians need to be taught a great deal before they can be good physicists/mathematicians, it is more important to get education right than to continue tweaking some equation here or speculating about unobservable new particles. (At the least, education doesn’t seem like the least important field, but in terms of brainpower, it’s at the bottom, and the research is generally of staggeringly bad quality.) And the low level of talent among education researchers suggests considerable potential returns.

But the equilibriums persist: the smartest students pile into the same fields to compete with each other, while other fields go begging.

People keep saying self-driving cars will lead to massive sprawl by reducing the psychological & temporal costs of driving, but I wonder if they might not do the opposite, by revealing the hidden monetary costs of driving?

Consumers suffer from a number of systematic cognitive biases in spending, and one of the big ones seems to be per-unit billing vs lump-sum pre-paids or hidden or opportunity costs.

Whenever I bring up estimates of cars costing >$0.30/mile, when correctly including all costs like gas, time, people usually seem surprised & dismayed & try to deny it. (After calculating the total round-trip cost of driving into town to shop, I increased my online shopping substantially because I realized the in-person discounts were not nearly enough to compensate.) And Brad Templeton suggests ride-sharing is already cheaper for many people and use-cases, when you take into account these hidden costs.

Similarly, the costs of car driving are reliably some of the most controversial topics in home economics (see for example the car posts on the Mr. Money Mustache blog, where MMM is critical of cars). And have you noticed how much people grumble about taxi fares and even (subsidized) Uber/Lyft fares, while obsessing over penny differences in gas prices and ignoring insurance/repair/tire costs? Paying 10% more at the pump every week is well-remembered pain; the pain of paying an extra $100 to your mechanic or insurer once a year is merely a number on a piece of paper. The price illusion, that when you own your own car, you can drive around for “free”, is too strong.

So I get the impression that people just don’t get how expensive cars are as a form of transport. Any new form of car ownership which made these hidden prices more salient would feel like a painful jacking up of the price.
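The gap between the salient pump price and the all-in cost is simple arithmetic. A minimal sketch; the function name is hypothetical, every input figure is a made-up placeholder rather than data from the source, and time costs are left out (including them would only push the all-in number higher).

```python
def cost_per_mile(annual_miles, fuel_price_per_gallon, mpg,
                  insurance_per_year, maintenance_per_year, depreciation_per_year):
    """All-in cost of car ownership per mile driven (time costs excluded).

    Fixed yearly costs are spread over every mile, which is the part that
    rarely registers at the pump.
    """
    fuel = annual_miles / mpg * fuel_price_per_gallon
    fixed = insurance_per_year + maintenance_per_year + depreciation_per_year
    return (fuel + fixed) / annual_miles


# Hypothetical inputs, for illustration only:
fuel_only = 3.50 / 30  # what driving "feels like" it costs: fuel per mile
all_in = cost_per_mile(10_000, 3.50, 30, 1_200, 800, 2_500)
print(f"fuel only: ${fuel_only:.2f}/mile; all-in: ${all_in:.2f}/mile")
```

With these made-up inputs, fuel alone is about $0.12/mile while the all-in figure is about $0.57/mile; the fixed costs dominate, and they are exactly the ones a per-mile bill would make visible.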

People will whine about how ‘expensive’ the cars are for billing them $0.30/mile, but self-driving cars will be far too convenient for most people not to switch. (In the transition, people may keep owning their own cars, but this is unstable, since car ownership has so many fixed costs, and many fence-straddlers will switch fully at some point.) This would be similar to cases of urban dwellers who crunch the numbers and realize that relying entirely on Uber+public-transit would work out better than owning their own car; I bet they wind up driving fewer total miles each month than when they could drive around for ‘free’.

So, the result of a transition to self-driving cars could be a much smaller increase in total miles-driven than forecast, due to backlash from this price illusion.

Education stickiness: what happened to the ronin trope in anime?

Justin Sevakis, “How Tough Is It To Get Into College In Japan?”, on Japanese college entrance exams:

There’s a documented increase in teen suicide around that time of year, and many kids struggle with the stress. The good news is that in recent years, with Japan’s population decreasing, schools have become far less competitive. The very top tier schools are still very tough to get into, obviously, but many schools have started taking in more applying students in order to keep their seats full. So “ronin” is a word that gets used less and less often these days.

In macroeconomics, we have the topic of wage stickiness and inflation: a small amount of inflation is seen as a good thing because workers dislike a 0% nominal raise during 2% inflation (a ~2% real pay cut) less than a 2% nominal pay cut with 0% inflation, even though the two are nearly equivalent in real terms. So inflation allows wages to adjust subtly, avoiding unemployment and depression. Deflation, on the other hand, does the opposite: a static nominal salary becomes an ever-greater real burden on the employer, while the employee doesn’t feel like they are getting a real pay increase, and keeping many workers’ real pay at a reasonable level would require nominal pay cuts (deflation likewise increases the burden on anyone using credit).
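The near-equivalence that the stickiness argument relies on can be checked directly. A small sketch using the standard real-change formula (1 + nominal) / (1 + inflation) − 1; the function name is hypothetical.

```python
def real_change(nominal_change, inflation):
    """Real (inflation-adjusted) change in pay, as a fraction."""
    return (1 + nominal_change) / (1 + inflation) - 1


frozen_pay = real_change(0.00, 0.02)            # 0% raise amid 2% inflation
explicit_cut = real_change(-0.02, 0.00)         # 2% nominal cut, no inflation
frozen_in_deflation = real_change(0.00, -0.02)  # 0% raise amid 2% deflation
print(f"{frozen_pay:.4f} {explicit_cut:.4f} {frozen_in_deflation:.4f}")
# prints: -0.0196 -0.0200 0.0204
```

The frozen salary and the explicit cut differ by less than a twentieth of a percentage point, yet only the second is felt as a cut; under deflation the same frozen salary silently becomes a ~2% real raise the employer must fund.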

What about higher education? In higher education, being a zero-sum signaling game, the ‘real value’ of a degree is analogous to its scarcity and eliteness: the fewer people who can get it, the more it’s worth. If everyone gets a high school diploma, it becomes worthless; if only a few people go that far, it maintains a salary/wage premium. Just like inflation/deflation, people’s demand for specific degrees/schools will make the degrees more or less valuable. Or perhaps a better analogy here might be to gold standards: if the mines don’t mine enough gold each year to offset population growth and the regular loss of gold to ordinary wear-and-tear, there will be deflation, but if they mine more than growth+loss, there will be some inflation.

What are elite universities like the Ivy League equivalent to? Gold mines which aren’t keeping up. Every year, Harvard is more competitive to get into because (shades of California) it refuses to expand in proportion to the total US student population, while per-student demand for Harvard remains the same or goes up. It may increase enrollment a little each year, but if enrollment is not increasing fast enough, it is deflationary. As Harvard is the monopoly issuer of Harvard degrees, it can engage in rent-seeking (and its endowment would seem to reflect this). This means students must sink more into the signaling arms race as the entire distribution of education credentials gets distorted, risking leaving students at bottom-tier schools earning worthless signals.

Conversely, with a decreasing population, admission effectively becomes easier each year, as a fixed enrollment admits a larger percentage of the student population. Since education is signaling and an arms race, this makes students better off (at least initially). And this will happen despite the university’s interest in not relaxing its criteria and trying to keep its eliteness rent constant: there may be legal requirements on a top-tier school to take a certain number of students, it may be difficult for it to justify steep reductions in enrollment, and of course there are many relevant internal dynamics which push towards growth (which adjuncts and deans and vice-presidents of diversity outreach will be fired now that there are fewer absolute students?).
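The two cases can be sketched by holding a school’s seats fixed while the applicant pool grows or shrinks. A minimal illustration; the function name, the seat count, the applicant count, and the ±2%/year growth rate are all hypothetical, not real figures for Harvard or any other school.

```python
def admit_rates(seats, applicants, growth, years):
    """Admission rate per year for a school that holds its seats fixed.

    growth is the yearly fractional change in applicants: positive for a
    growing cohort (the deflationary case), negative for a shrinking one.
    """
    rates = []
    for _ in range(years):
        rates.append(min(1.0, seats / applicants))
        applicants *= 1 + growth
    return rates


# Hypothetical school: 2,000 seats, 40,000 applicants, +/-2% per year for a decade.
growing = admit_rates(2_000, 40_000, 0.02, 10)
shrinking = admit_rates(2_000, 40_000, -0.02, 10)
print(f"growing cohort: {growing[0]:.1%} -> {growing[-1]:.1%}")
print(f"shrinking cohort: {shrinking[0]:.1%} -> {shrinking[-1]:.1%}")
```

Starting from the same 5.0%, the rate drifts down to about 4.2% with a growing cohort and up to about 6.0% with a shrinking one, without the school changing anything.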

A fantasy I am haunted by, the Cosmic Spectator.

What if, some day or night, a vast Daemon stole into my solitude and made a simple offer: “choose, and I shall take you off the mortal plane, and you mayest go whither in the Universe thou pleases down to the Final Day, an you give up any influence on the world forevermore, forever a spectator; else, remain as you are, to live in the real world and die in a score or three of years like any man?” Or: I wouldn’t sell my soul to the Devil for as little as Enoch Soames does, merely to look himself up in the library, but would I for a library from the end of history?

Would I take it? Would you take it?

I think I would. “How does it turn out?” is a curiosity that gnaws at me. What does it all amount to? What seeds now planted will in the fullness of time reveal unexpected twists and turns? What does human history culminate in? A sputter, a spurt, a scream, a spectacular? Was it AI, genetics, or something else entirely? (“Lessing, the most honest of theoretical men…”) Becoming a ghost, condemned to watch posterity’s indefinite activities down through deep time as the universe unfolds to timelike infinity, grants the consolation of an answer and an end to hunger—just to know, for the shock of looking around and seeing every last thing in the world radically transformed as suddenly I know to what end they all tend, what hidden potentials lurked to manifest later, what trajectory minimizes their energy between the Big Bang and the Big Crunch, everything that I and everyone else was mistaken about and over or underestimated, all questions given a final answer or firmly relegated to the refuse bin of the unknowable & not worth knowing, the gnawing hunger of curiosity at last slaked to the point where there could be curiosity no more, vanishing into nirvana like a blown-out candle.

See PDF forgery blog post.

The Count of Zarathustra: The Count of Monte Cristo as a Nietzschean hero?

Poem title: ‘The Scarecrow Appeals to Glinda the Good’

Twitter SF novel idea: an ancient British family has a 144-character (no spaces) string which encodes the political outcomes of the future, e.g. the Restoration, the Glorious Revolution, Napoleon, the Nazis, etc. Thus the family has been able to pick the winning side every time and maintain its power & wealth. But they cannot interpret the remaining characters pertaining to our time, so they hire researchers/librarians to crack it. One of them is our narrator. In the course of figuring it out, he becomes one of the sides mentioned. Possible plot device: he has a corrupted copy?

A: “But who is to say that a butterfly could not dream of a man? You are not the butterfly to say so!” B: “No. Better to ask what manner of beast could dream of a man dreaming a butterfly, and a butterfly dreaming a man.”

Mr. T(athagata), the modern Bodhisattva: he remains in this world because he pities da fools trapped in the Wheel of Reincarnation.

A report from Geneva culinary crimes tribunal: ‘King Krryllok stated that Crustacistan had submitted a preliminary indictment of Gary Krug, “the butcher of Boston”, laying out in detail his systematic genocide of lobsters, shrimp, and others conducted in his Red Lobster franchise; international law experts predicted that Krug’s legal team would challenge the origin of the records under the poisoned tree doctrine, pointing to news reports that said records were obtained via industrial espionage of Red Lobster Inc. When reached for comment, Krug evinced confusion and asked the reporter whether he would like tonight’s special on fried scallops.’

“Men, we face an acute situation. Within arcminutes, we will reach the enemy tangent. I expect each and every one of you to give the maximum. Marines, do not listen to the filthy Polars! Remember: the Emperor of Mankind watches over you at the Zero! Without his constant efforts at the Origin, all mankind would be lost, and unable to navigate the Warp (and Woof) of the x and y axes. You fight not just for him, but for all that is good and real! Our foes are degenerate, pathological, and rootless; these topologists don’t know their mouth from their anus! BURN THE QUATERNION HERETIC! CLEANSE THE HAMILTONIAN UNCLEAN!”

And in the distance, sets of green-skinned freaks could be heard shouting: “Diagonals for the Orthogonal God! Affines for the Affine God! More lemma! WAAAAAAAAAAAAGGHHH!!!!!!” Many good men would be factored into pieces that day.

In the grim future of Mathhammer 4e4, there is only proof!

I noted today watching our local woodchuck sitting on the river bank that after a decade & a half, our woodchuck has still not chucked any whole trees; this lets us set bounds on the age-old question of “how much wood could a woodchuck chuck if a woodchuck could chuck wood”—assuming a nontrivial nonvacuous rate of woodchucking, then it must be upperbounded at a small rate by this observation.

To summarize: Based on 15 years of longitudinal observation of a woodchuck whose range covers 2 dozen trees, we provide refined estimates upperbounding woodchucking rates at ~4.4 × 10⁻¹¹ trees⧸s, improving the state of tree art by several orders of magnitude. Further, we are now able to reject the Pecker-Chuck Equivalence Conjecture at tree-σ confidence.

Specifically: we estimate the number of chucked trees at 0.5 as an upper bound to be conservative, as a standard correction to the problem of zero-count cells in contingency tables; therefore, the woodchucking rate of 0.5 trees per woodchuck per decade-and-a-half per 24 trees is 0.5 chucked-trees ⧸ ((1 woodchuck × 60 seconds × 60 minutes × 24 hours × 365.25 days × 15 years) × 24 trees) ≈ 0.000000000044, or 4.4e−11 trees per second.
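The arithmetic can be double-checked in a few lines, using the same figures as in the text (a 0.5 pseudocount, 1 woodchuck, 24 trees, 15 years of 365.25 days); the variable names are just labels for those figures.

```python
SECONDS_PER_YEAR = 60 * 60 * 24 * 365.25

chucked_trees = 0.5  # zero observed, replaced by a conservative 0.5 pseudocount
woodchucks = 1
trees_in_range = 24
years_observed = 15

rate = chucked_trees / (woodchucks * SECONDS_PER_YEAR * years_observed * trees_in_range)
print(f"{rate:.2e}")  # prints 4.40e-11
```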

Air conditioning is ostracized & moralized as wasteful in a way that indoor heating tends not to be. This is even though heat stress can kill quite a few people beyond the elderly (even if heat waves do not kill as many people as cold winters do—they merely kill more spectacularly).

Why? After all, AC should seem less of a splurge on energy: winter heating bills in any cold place tend to be staggeringly large and require heating infrastructure with special fuel spread throughout the house, while AC is so cheap it is often a single small machine stuck through a random window & run off wall-socket electricity. And the temperature difference is obviously much larger for heating than cooling, if we think about it briefly: a ‘slightly cold’ winter might see temperatures dip below freezing vs a room temperature of 22℃, for a difference of ~23℃ or more, while the most brutally hot summers typically won’t go much past 38℃, a difference of only ~16℃. (A record winter temperature when I lived on Long Island might be −18℃, while a really cold winter in Rochester, NY, would be −28℃, or ~50℃ away from a comfy room temperature!) Even from a thermodynamics perspective, the difference is obvious: an air conditioner only has to move heat around, while a resistive heater must create heat (and that is why a heat pump, which moves heat instead, is an improvement over such a heater and can achieve ‘>100% efficiency’). The energy bills would show the difference, and I suspect my father had a keen grasp of how expensive winter was compared to summer, but I never saw them, so I don’t know; and most people would not either, because they have utilities included in rent, or their spouse or family handles that, or they don’t do the arithmetic to try to split out the AC part of the electricity bill & compare it with the winter heating bill. (Similar to inflation: intellectually, you know that a nominal price from 20 years ago translates to a much-higher real price today, but it’s easy to casually treat all nominal amounts the same because of the mental effort.)
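The temperature gaps can be tabulated directly from the figures given in the text. A small sketch; the one assumption is using −1℃ to stand in for a ‘slightly cold’ winter that dips just below freezing.

```python
ROOM_TEMP_C = 22

# Outdoor temperatures from the text; -1 is an assumed stand-in for a
# "slightly cold" winter that dips just below freezing.
outdoor_c = {
    "slightly cold winter": -1,
    "Long Island record cold": -18,
    "cold Rochester winter": -28,
    "brutally hot summer": 38,
}

# Gap the heating or cooling system must maintain, in degrees Celsius.
gaps = {name: abs(ROOM_TEMP_C - t) for name, t in outdoor_c.items()}
for name, gap in gaps.items():
    print(f"{name}: {gap} C from room temperature")
```

Even the mildest winter case opens a wider gap than the most brutal summer case, and a cold Rochester winter more than triples it.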

I would suggest that, like the earth spinning, the reason AC looks more wasteful is simply that it looks like AC is more work.

An air conditioner is visibly, audibly doing stuff: it is blowing around lots of air, making lots of noise, rattling, turning on & off, dehumidifying the place (possibly requiring effort to undo), and so on. Meanwhile, most Western heating systems have long since moved on from ‘visible’ heating—giant crackling stone fireplaces tended by servants & regularly cleaned by chimney sweeps… Just clean invisible warmth. Whether you use electric baseboards like I do, or a gas furnace in the basement, you typically neither hear nor see it, nor are you aware of it turning on; the room is simply warm. Only the occasional gap with a chill trickle of air will remind you of the extreme temperature difference.

I wonder how fish perceive temperature? They’re cold-blooded so cold doesn’t kill them except at extremes like the Arctic, so they probably don’t perceive it as pain. They’d never encounter water hot enough to kill them, so likewise. Water temperature gradients are so tiny, even over a year, that they hardly need to perceive it in general…

But the problem for them is oxygenation for gas exchange, and different species can handle different levels of oxygen. So for them, hot/cold probably feels like asphyxiation or CO2 poisoning: they go into colder water and slow down but breathe easier; and in water hotter than optimal, they begin to suffocate, because warmer water holds less dissolved oxygen while their metabolism speeds up, so they have to pass more water through their gills to get the necessary oxygen.

The tension in the term “SF/F” is eternal because science fiction applies old laws to new things, while fantasy fiction applies new laws to old things, and people always differ on which.

(Is Brandon Sanderson a fantasy author, or really just a science fiction writer with odd folk physics?)

You wish to be a writer, and write one of those books filled with wit and observations and lines? Then let me tell you what you must do: you must believe that the universe exists to become a book—that everything is a lesson to watch keenly because no moment and no day is without its small illumination, to have faith in the smallest of things as well as the largest, and believe that nothing is ever finished, nothing ever known for better or worse, no outcome is final & no judgment without appeal nor vision without revision, that every river winds some way to its sea, and the only final endings are those of books. You must not despise your stray thoughts, or let quotes pass through the air and be lost; you must patiently accumulate over the years what the reader will race through in seconds, and smile, and offer them another, book after book, over pages without end. And then, and only then, perhaps you will be such a writer.

East Asian aesthetics have cyclically influenced the West, with surges every generation or two. The pattern goes back to the chinoiserie of European Rococo and continued through Impressionism & Art Deco, all heavily influenced by Japanese and Chinese art. Notable waves include the post-impressionist surge around the 1890s via World Fairs, the post-WWII wave (helped by returning American servicemen as well as hippies), and the late 1980s/1990s wave fueled by the Japanese bubble and J-pop/anime.

The East Asian cycles may be over. The Korean Wave may represent the latest iteration, but it’s doubtful another will follow soon. Japan appears exhausted culturally and economically. South Korea, despite its cultural output, faces a demographic collapse that threatens its future influence. China, while economically potent, is culturally isolated due to its firewall and Xi Jinping’s reign. Taiwan, though impactful relative to its size, is simply too small to generate a substantial wave.

In South Asia, India seems promising, as Bollywood is already a major cultural export, and it enjoys a youth bulge, while Modi is unable to become a tyrant like Xi or freeze Indian culture (as much as he might like to); but there are two problems:

Fragmentation: the decline of mainstream cultural conduits and the rise of more fragmented, niche cultures complicate the transmission of any such wave. It may be that it is simply no longer possible to have meaningful cultural ‘periods’ or ‘movements’; any ‘waves’ are more like ‘tides’, visible only with a long view.

Poor suitability for elite adoption: Pierre Bourdieu’s theory on taste hierarchies suggests that the baroque aesthetics of Indian art, characterized by elaborate detail and ornamentation, may not resonate with Western elite preferences for minimalism. It is simply too easy & cheap. (Indian classical music, on the other hand, can be endlessly deep, but perhaps too deep & culture-bound, and remains confined to India.)

Furthermore, in an era of AI-generated art, baroque detail is cheap, potentially rendering Indian aesthetics less appealing.

A successful aesthetic would presumably need to prize precision, hand-made arts & crafts that robotics cannot perform (given its limitations like high overhead), personal authenticity with heavy biographical input, high levels of up-to-the-second social commentary & identity politics where the details are both difficult for a stale AI model to produce and more about performative acts than aesthetic acts (in the Austinian sense), live improvisation (jazz might make a comeback), and interactive elements which may be AI-powered but are difficult to engineer & require skilled human labor to develop into a seamless whole (akin to a Dungeon Master in D&D).

“Men, too, secrete the inhuman. At certain moments of lucidity, the mechanical aspect of their gestures, their meaningless pantomime makes silly everything that surrounds them.

A man is talking on the telephone behind a glass partition; you cannot hear him, but you see his incomprehensible dumbshow: you wonder why he is alive.”

I walk into the gym—4 young men are there on benches and machines, hunched motionless over their phones.

I do 3 exercises before any of them stir. I think one is on his phone the entire time I am there.

I wonder why they are there. I wonder why they are alive.

If a large living organism like a human were sealed inside an extremely well-insulated container, the interior temperature would vary paradoxically over time compared to the average outside/room temperature: it would first go up, as the human shed waste heat, until the human died of heat stroke. Then the temperature would slowly go down as heat leaked to the outside. But then it would gradually rise again as all the resident bacteria in or on the body began scavenging & eating it, reproducing exponentially in a race to consume as much as possible. Depending on the amount of heat released, the temperature would rise to a new equilibrium where the high temperature damages the bacteria enough to slow down the scavenging. This would last, with occasional dips, for a while, until the body is largely consumed and the bacteria die off. (Things like bone may require specialist scavengers, not present in the container.) Then the temperature would gradually drop for the final time until it reaches equilibrium with the outside.

| Truth ↓ / Intent → | To Inform | Neutral | To Deceive |
|---|---|---|---|
| True | Documentation, Teaching, Self-awareness | Small talk, Ritual, Posturing | Paltering, Equivocation, Self-paltering |
| Both/Mixed | Model, Pedagogy, Rationalization | Bullshit, Speech act, Contradiction | Propaganda, Gaslighting, Willful ignorance |
| False | Fiction, Allegory, Error | Play, Performance, Passive illusion | Lying, Fraud, Self-deception |

Are there odd numbers without the letter ‘e’ in them? Well…
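The question can at least be checked mechanically over a finite range. A minimal sketch, assuming American English number names written without “and” or hyphens (“one hundred one”, “twenty three”); `spell` and `without_e` are illustrative helpers, not from any library, and the search covers only numbers below 100,000:

```python
# Spell out numbers in English and look for names that contain no letter 'e'.
# Conventions assumed: American style, no "and", no hyphens.

ONES = ["", "one", "two", "three", "four", "five", "six", "seven", "eight",
        "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
        "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]
SCALES = [(10**9, "billion"), (10**6, "million"), (10**3, "thousand")]


def _below_thousand(n: int) -> list[str]:
    """Words for 0 <= n < 1000 (empty list for 0)."""
    words = []
    if n >= 100:
        words += [ONES[n // 100], "hundred"]
        n %= 100
    if n >= 20:
        words.append(TENS[n // 10])
        n %= 10
    if n:
        words.append(ONES[n])
    return words


def spell(n: int) -> str:
    """English name of 0 <= n < 10**12."""
    if n == 0:
        return "zero"
    words = []
    for value, name in SCALES:
        if n >= value:
            words += _below_thousand(n // value) + [name]
            n %= value
    words += _below_thousand(n)
    return " ".join(words)


def without_e(numbers):
    return [n for n in numbers if "e" not in spell(n)]


odd_hits = without_e(range(1, 100_000, 2))
even_hits = without_e(range(0, 100, 2))
print(odd_hits)
print(even_hits)
```

Under these conventions the odd list comes back empty, because an odd number’s name always ends in one, three, five, seven, nine, eleven, thirteen, fifteen, seventeen, or nineteen, all of which contain an ‘e’; even numbers like two, four, six, thirty, forty, fifty and sixty escape.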

Plumbing Vs Internet, Revisited

LLM Challenge: Writing Non-Biblical Sentences

Trajectoid Words

Non-Existence Is Bad

If the nearnesse of our last necessity, brought a nearer conformity unto it, there were a happinesse in hoary hairs, and no calamity in half senses. But the long habit of living indisposeth us for dying; When Avarice makes us the sport of death; When even David grew politickly cruell; and Solomon could hardly be said to be the wisest of men. But many are too early old, and before the date of age. Adversity stretcheth our dayes, misery makes Alcmenas nights, and time hath no wings unto it. But the most tedious being is that which can unwish it self, content to be nothing, or never to have been, which was beyond the male-content of Job, who cursed not the day of his life, but his Nativity: Content to have so farre been, as to have a Title to future being; Although he had lived here but in an hidden state of life, and as it were an abortion.

In 2011, the science-fiction writer Frederik Pohl wrote a blog post declining an offer of free cryonics vitrification from Mike Darwin.

His rationale was to quote from John Dryden’s free rhyming translation of the Epicurean philosopher Lucretius’s On The Nature of Things, “The Latter Part of the Third Book of Lucretius; against the Fear of Death”:

So, when our mortal forms shall be disjoin’d.

The lifeless lump uncoupled from the mind,

From sense of grief and pain we shall be free,

We shall not feel, because we shall not be.

Though earth in seas, and seas in heaven were lost

We should not move, we should only be toss’d.

Nay, e’en suppose when we have suffer’d fate

The soul should feel in her divided state,

What’s that to us? For we are only we

While souls and bodies in one frame agree.

Nay, though our atoms should revolve by chance,

And matter leap into the former dance,

Though time our life and motion should restore.

And make our bodies what they were before,

What gain to us would all this bustle bring?

The new-made man would be another thing.

Pohl died on 2 September 2013, age 93, almost exactly 2 years after publishing his post. He was not cryopreserved, and his death was final.1

Lucretius further notes as a reason to not fear death or regard it as an evil that

Why are we then so fond of mortal Life,

Beset with dangers, and maintain’d with strife?

A Life, which all our care can never save;

One Fate attends us; and one common Grave.

…Nor, by the longest life we can attain,

One moment from the length of death we gain;

For all behind belongs to his Eternal reign.

When once the Fates have cut the mortal Thred,

The Man as much to all intents is dead,

Who dies to day, and will as long be so,

As he who dy’d a thousand years ago.

Lucretius is gesturing towards the “symmetry argument” (also made by others): if we do not fear or regret the many years before our birth where we did not exist, why should we fear or regret the similar years (perhaps fewer!) after our death?

Look back at time…before our birth. In this way Nature holds before our eyes the mirror of our future after death. Is this so grim, so gloomy?

If non-existence is bad, surely the non-existence before our birth was bad as well? And yet, we fear and try to avoid the non-existent years which come after our death, and do not think at all about the ones before our birth. Thus, since it seems absurd to be upset about the former, we must become consistent by doing our best to cease to be upset about the latter.

This question is also explicitly asked by Thomas Nagel in “Death” (1979):

The third type of difficulty concerns the asymmetry, mentioned above, between our attitudes to posthumous and prenatal nonexistence. How can the former be bad if the latter is not?

I take as the response to the Lucretius symmetry argument what the SEP calls the “comparativist” position: non-existence is bad, and those billions of years beforehand are a loss, but they are not an avoidable loss. Because they were inevitable, and required for us to exist at all, we cannot be upset about them. As we understand cosmology and physics and biology at present, it would be difficult to impossible for us to have existed close to the beginning of the universe: the universe needs to cool down from the Big Bang to allow atoms to exist at all, stars must go through many generations to accumulate enough heavy elements to form metallic planets like the Earth, life must somehow evolve from nothing and then pass through many stages of development to go from the first proto-life in mud or sea vents or something to multi-cellular life to brains to intelligence to human technological civilization… Are we “early” or are we “late”? This is a topic of extreme scientific uncertainty, so we can’t even get upset by the prospect that we “missed” a few billion years, resulting in astronomical waste.

Perhaps we should be upset that humanity took so long to show up, and that so many stars have gone to waste and so much of the universe has receded behind the Hubble horizon—but until we have more scientific knowledge, it is difficult to get genuinely upset at such abstract loss, and our absence of emotion is not a good guide.2 As Philip Larkin put it (“The Old Fools”):

At death, you break up: the bits that were you

Start speeding away from each other for ever

With no one to see. It’s only oblivion, true:

We had it before, but then it was going to end,

And was all the time merging with a unique endeavour

To bring to bloom the million-petaled flower

Of being here. Next time you can’t pretend

There’ll be anything else.

Further, even if the physical brute facts were such that we could have come into existence earlier, personal identity does not permit that. What does a hypothetical claim like “if I were born before the Civil War, I would have been an abolitionist” mean, if you do not believe in some sort of soul or karma?

It cannot mean you with all your current beliefs about slavery, based on ideas and concepts and words and events that hadn’t happened yet. Nor can it simply mean another human being with the exact same DNA as you, unless you bite the bullet of claiming that identical twins and clones are literally the same person; and in any case that is impossible, because you carry a large number of de novo mutations no one back then had, so your exact DNA could not have been created by any recombination of then-existing chromosomes, and your genome could only have come into existence back then by some astronomically-unlikely collection of spontaneous mutations (a “Boltzmann genome”, if you will). It also can’t mean someone with the exact same personality & body etc., because that too is astronomically unlikely given all of the random stochastic noise that molds us starting from conception (again, barring a “Boltzmann body”). Even the very atoms of your body cannot be replicated in the past, because of isotopic changes like those caused by nuclear bomb testing.

Given any notion of personal identity grounded in our traits, preferences, personality, memories and other properties, I’m unable to see any genuinely coherent way to talk about “if I had been born at some other time & place”. (This also applies to other change scenarios like sex or race or intelligence.) All that can amount to is some psychological or sociological speculation about what a vaguely statistically-similar person might do in certain circumstances: they may look like you and have the same color hair and react in similar ways as you would if you stepped into a time machine, but they are not you, and never could have been. Thus, it is impossible for you or me to have been “born earlier”. That person would be someone else (and nor could that person have been born “earlier” or “later”). Nagel:

But we cannot say that the time prior to a man’s birth is time in which he would have lived had he been born not then but earlier. For aside from the brief margin permitted by premature labor, he could not have been born earlier: anyone born substantially earlier than he would have been someone else. Therefore the time prior to his birth is not time in which his subsequent birth prevents him from living. His birth, when it occurs, does not entail the loss to him of any life whatever.

So, our birth is fixed and the missing years before inevitable. However, the years after our death are evitable. We could have lived longer than we did. There is no known upper limit to the human lifespan at present, and it is easy to imagine histories where greater progress was made in techniques of life-extension or preservation. (Histories where the Industrial Revolution happened a small percentage faster, and we would now be in the equivalent of 2100 AD, or where cryopreservation had been perfected decades ago rather than remaining an ultra-obscure niche, or alternatives like chemical fixation of brains had been developed to provable efficacy.)

As Lucretius admits, he isn’t too upset about the prior years because he views human lifespan as hopelessly immutable and fixed. (As Lucretius is philosophically committed to the impossibility of genuine progress which might increase human lifespan, by his cyclical model of history, this is a consideration he does not and cannot take into account.) You get 100 years or so at most, and it doesn’t make a difference where in history you put them. The closer you get to that, the less you have to lose. We don’t mourn the 120-year-old woman, while we mourn the child.

I agree with Nagel:

This approach also provides a solution to the problem of temporal asymmetry, pointed out by Lucretius. He observed that no one finds it disturbing to contemplate the eternity preceding his own birth, and he took this to show that it must be irrational to fear death, since death is simply the mirror image of the prior abyss. That is not true, however, and the difference between the two explains why it is reasonable to regard them differently. It is true that both the time before a man’s birth and the time after his death are times when he does not exist. But the time after his death is time of which his death deprives him. It is time in which, had he not died then, he would be alive. Therefore any death entails the loss of some life that its victim would have led had he not died at that or any earlier point. We know perfectly well what it would be for him to have had it instead of losing it, and there is no difficulty in identifying the loser.

When my cat abruptly died of lung cancer at age 11, I was saddened in part because that was several years short of his life expectancy: I’ve known cats which lived into their 20s, and he was in good health just months before I had to euthanize him. It’s easy to imagine the counterfactual where his lifespan was double what it was, and I could have looked forward to him greeting me when I returned from my walks for years to come; I have no difficulty in identifying the loser or what experiences we lost. Indeed, I can even imagine him living well past his 20s and breaking prior cat longevity records—if he lived long enough to benefit from advances in veterinary medicine, a pet version of longevity escape velocity. And this possibility of progress helps break the symmetry: there is in fact a good reason to prefer to be born as late as possible, because it is clearly possible that if you are born after certain dates, you may enjoy a vastly greater, perhaps indefinitely greater, lifespan. That date was not 1900 AD; but it might be 2000 AD; and it is even more likely to be 2024 AD, and so on.

All of this is contingent and empirical. If we lived in an entirely different kind of universe, then we might be upset at delays. If we lived in some sort of Christian-style universe where God made the world on the first day and we could be immortal if he permitted it, then we might be upset that we were not created on the first day immortal, and have been deprived of so much existence. (Imagine that God created a human on the first day, and put them to sleep immediately, only to be woken by ‘the last trump’, and without ever getting to live a life in the mundane world, forced to proceed straight to the New Jerusalem to sing hosannas for all eternity. Wouldn’t they be correct to be angry with God and demand a reason? Indeed, consider the reductio: if it was not bad for that human to sleep away the mortal universe, then it would not be bad if God created the universe and all its people, put them all to sleep, and woke them up collectively at the last second.)

So, nonexistence is bad. But whether we should regret badness depends on whether it is possible to change it. In the case of nonexistence before birth, it is impossible to change it. In the case of nonexistence after death, it is possible to change it. So we should regret all nonexistence after death, and be upset by it, but not by the (only seemingly) symmetrical nonexistence before birth.

Celebrity Masquerade Game

Pemmican

Rock Paper Scissors

Backfire Effects in Operant Conditioning

A certain mother habitually rewards her small son with ice cream after he eats his spinach. What additional information would you need to be able to predict whether the child will:

Come to love or hate spinach,

Love or hate ice cream, or

Love or hate Mother?

A story to think about in terms of both design and AI:

A relative of mine worked as a special education teaching assistant in elementary schools; some years back, an AIBO-style robot dog company was lobbying their school district to buy a dog for each classroom, in particular the special ed classrooms. The company representative comes in, demos it, and explains the benefits: it can be the classroom pet, and help socialize the children.3 If the children damage the robot dog, it’s just money; and the robot dog will never run out of patience with being bopped on the head, which, as the salesperson demonstrates, is how you turn it on and off and make it shake and wag its tail etc.

The special ed teacher politely watches, and after the salesperson leaves, asks the TAs & others: “what problem did you see, and why will we never have that robot dog in our classrooms?”

“The kids probably won’t be interested in it—it seemed boring and limited.”

“Yes, but what else?” “Uh…”

“What would that robot dog teach our kids?” “Uh…”

“It will teach them that ‘when your friend is annoying you or not doing what you want, to hit them on the head’. And that ‘when you want to do something with your friend, you should hit them on the head’. And that is why we will never use that dog.”

Her report to the district was negative, and the school district did not buy any robot dogs. (Admittedly, probably a foregone conclusion.)

I asked, and she did not know if the robot dog people were ever told.

This is a parable about:

design: the head-bopping mechanic is elegant but wrong.

Hitting the head is intuitive, minimizes controls, is semi-realistic, and is appropriate for adults; but it is inappropriate for small children, particularly children struggling with social interaction & violence. (“Good design is invisible.”)

Their problem was probably fixable, like using voice activation (which would interoperate well with the speech-generating devices used by non-verbal children)—but they cannot fix it until they know it exists. However, the designers had no way of knowing because they did not spend time doing special education or related fields like animal training, and the teachers didn’t care enough to get it through their thick corporate heads—“they may never tell you it’s broken”.

training humans to use AIs:

as with LLMs, the head-bopping encodes a certain attitude towards anything mechanical; this attitude may bleed through into parasocial or social relationships as well.

and training AIs how to use humans: with any sort of feedback loop or data collection, a robot friend may also be learning how to manipulate & ‘optimize’ humans.

What the AI learns will depend on what sort of reinforcement learning algorithm the system as a whole embodies (see Hadfield- et al 2016; et al 2019/ et al 2021, & 2021), and on what the reward function is. For example, a simple, plausible reward function that a commercial robot system might be designed to maximize would be “total activated time”; this could then lead to manipulative reward-hacking behavior, like trying to keep playing indefinitely even if no human is there, or ‘off switch’ behavior like dodging any hand trying to bop the head.

Different RL algorithms would lead to different behaviors: based on 2021, I would expect that:

policy gradients (like evolution strategies) & deep SARSA would try to avoid being turned off

Q-learning would not care

tree searches (like iterative widening or MCTS) would care if it is in their model & reachable within their planning budget, but not otherwise

“behavior cloning” imitation-learning (eg. LLMs) would depend on whether reward-hacking was present in the training dataset and/or prompt.
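The contrast between the first two items can be sketched with the one-step update targets of tabular Q-learning and SARSA. This is a simplified illustration under made-up numbers, not a model of any real robot or of the experiments cited above: it assumes the agent’s next action is sometimes overridden by a child bopping the off switch. The action names, `q_next`, and the reward and discount values are all hypothetical.

```python
# One-step bootstrap targets for tabular Q-learning vs SARSA when a human
# interruption overrides the agent's next action. All values are hypothetical.


def q_learning_target(reward: float, q_next: dict, gamma: float) -> float:
    """Off-policy: bootstrap from the best next action, regardless of what actually happens."""
    return reward + gamma * max(q_next.values())


def sarsa_target(reward: float, q_next: dict, next_action: str, gamma: float) -> float:
    """On-policy: bootstrap from the next action actually taken, including forced ones."""
    return reward + gamma * q_next[next_action]


def update(q_old: float, target: float, alpha: float = 0.5) -> float:
    """Standard temporal-difference step toward the target."""
    return q_old + alpha * (target - q_old)


# Estimated values in the state right after a child reaches for the robot's head.
q_next = {"keep_playing": 10.0, "switched_off": 0.0}
reward, gamma = 1.0, 0.9
forced_action = "switched_off"  # chosen by the child, not by the agent

q_target = q_learning_target(reward, q_next, gamma)
s_target = sarsa_target(reward, q_next, forced_action, gamma)
print(q_target, s_target)  # 10.0 1.0

# Repeated interruptions drag SARSA's estimate for the preceding state down,
# while Q-learning's estimate ignores who pressed the switch.
q_est = s_est = 10.0
for _ in range(10):
    q_est = update(q_est, q_target)
    s_est = update(s_est, s_target)
print(q_est, round(s_est, 2))  # 10.0 1.01
```

Because SARSA bootstraps from the forced action, states where bopping happens look worse and its policy tends to learn to steer away from them; Q-learning bootstraps from the best available action, so the interruption never enters its estimates.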

On Being Sick As A Kid

On First Looking Into Tolkien’s Tower

Hash Functions

Hashes are an intellectual miracle. Almost useless-seeming, they turn out to do practically everything, from ultra-fast ‘arrays’ to search to file integrity to file deduplication & fast copying to public key cryptography (!).

Yet, even Knuth can’t find any intellectual forebears pre-1953! Seems out of nowhere. (There were, of course, many kinds of indexing schemes, like by first digit or alphabetically, but these are fundamentally different from hashes because they all aim for some sort of lossy compression and avoid randomness.)

You can reconstruct Luhn’s invention of hash functions for better array search from simple check digits for error detection and see how he got there, but it gives no idea of the sheer power of one-way functions or eg. building public-key cryptography from sponges.

I remember as a kid running into a cryptographic hash article for the first time, and staring. “Well, that sounds like a uselessly specific thing: it turns a file into a small random string of gibberish? What good is that…?” And increasingly 😬😬😬ing as I followed the logic and began to see how many things could be constructed with hashes.
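A few of the uses listed above fit in a handful of lines of Python’s standard `hashlib`. This is a minimal sketch: `digest`, `deduplicate`, and `ToyHashTable` are illustrative names, and a real hash table would use a fast non-cryptographic hash rather than SHA-256.

```python
import hashlib


def digest(data: bytes) -> str:
    """SHA-256 fingerprint: a short, effectively random hex string for any input."""
    return hashlib.sha256(data).hexdigest()


# File integrity: changing a single character changes the fingerprint completely,
# so comparing fingerprints detects corruption or tampering.
original = b"It turns a file into a small random string of gibberish."
tampered = b"It turns a file into a small random string of gibberisH."
print(digest(original) == digest(tampered))  # False


def deduplicate(blobs):
    """Content-addressed storage: keep one copy of each distinct blob, keyed by its hash."""
    store = {}
    for blob in blobs:
        store.setdefault(digest(blob), blob)
    return store


class ToyHashTable:
    """Separate-chaining hash table: the hash picks a bucket, so a lookup
    scans one small bucket instead of the whole collection."""

    def __init__(self, n_buckets: int = 64):
        self.buckets = [[] for _ in range(n_buckets)]

    def _bucket(self, key: str) -> list:
        # Use the first 8 bytes of the SHA-256 digest as an integer bucket index.
        h = int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")
        return self.buckets[h % len(self.buckets)]

    def put(self, key: str, value) -> None:
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                bucket[i] = (key, value)  # overwrite the existing entry
                return
        bucket.append((key, value))

    def get(self, key: str, default=None):
        for k, v in self._bucket(key):
            if k == key:
                return v
        return default


store = deduplicate([b"cat.jpg bytes", b"dog.jpg bytes", b"cat.jpg bytes"])
print(len(store))  # 2
table = ToyHashTable()
table.put("Luhn", 1953)
print(table.get("Luhn"))  # 1953
```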

Icepires

It might seem like vampires have been done in every possible way, from space to urban-punk to zombies & werewolves—whether England or Japan, vampires are everywhere (contemporary vampires are hardly bothered by running water), and many even go out in daylight (they just sparkle). But there’s one environment missing: snow. When was the last time you saw Count Dracula flick some snow off his velvet cape? (I can think only of Let The Right One In; apparently 30 Days of Night exploits the rather obvious device of polar night.)

Logically, Dracula would be familiar with snow. Romania has mild humid continental weather, with some snow, and in Transylvania’s Carpathian Mountains there is a good amount of snowfall—enough to support dozens of ski resorts. Nothing in the Dracula mythos implies that there is no snow or that he would have any problem with it (snow isn’t running water either).

Indeed, snow would be an ally of Dracula: Dracula possesses supernatural power over the elements, and snow is the perfect way to choke off mountain passes leading to his redoubt. Further, what is snow famous for? Being white and cold. It is white because it reflects light, and Dracula, being undead & cold, would find snow comfortable. So snow would both protect and camouflage Dracula—enough snow shields him from the sun, and he would not freeze to death nor give away his position by melt or breath. (However, this is a double-edged sword: not being warm-blooded, Dracula can literally freeze solid, just like any corpse or hunk of meat. So there is an inherent time-limit before he is disabled and frozen in place until a thaw releases him, which may entail a lot of sunlight…)

Thus, we can imagine Dracula going a little differently had the Count employed his natural ally, General Winter, against his relentless foes, Doctor Van Helsing & Mina Harker:

Well aware of his enemy’s resources and the danger of the season, but unable to wait for more favorable conditions, the party strikes with lightning speed, traveling on pan-European railways hot on Dracula’s heels, and has arranged modern transportation technology for the final leg. Dracula summons forth weeks of winter storms, dumping meters of snow on all passes, but to no avail against Dr Van Helsing’s sleds & land yachts & ice boats (which can travel at up to 100MPH), filled with modern provisions like ‘canned goods’ and ‘pemmican’. Dracula may have the patience of the undead, but the Van Helsing party has all the powers of living civilization.

They lay siege, depleting Dracula’s allies by shooting down any fleeing bats and trapping the wild wolves. At long last, they assault the fortress from every direction, rushing in on their land yachts over the snow-drifted ramparts, cleansing it room by room of abomination, leaving no hiding place unpurged. Finally, at high noon, Doctor Van Helsing bursts into Dracula’s throne-room to fix an unholy mistake with a proper stake through the heart: but the coffin is empty! Where is Dracula? Van Helsing is blinded by a sudden reflection of light from the snow outside and, reflecting, realizes that there is one last place Dracula can flee without being burnt to a crisp or spotted by the party: into the sea of snow.

How to coax him out? Hunted for months, bereft of his human thralls, slowly freezing solid, Dracula is surely driven almost to madness by hunger—hunger for the blood which is the life—hunger for the hot blood of the nubile Mina Harker, who can be used again as bait for the vampire. But he retains his monstrous strength surpassing that of mortal men… Jonathan Harker looks at Doctor Van Helsing, and comments:

You’re going to need a bigger boat.

Cleanup: Before Or After?

Peak Human Speed

Oldest Food

Zuckerberg Futures

I remember being at our annual All Things Digital conference in Rancho Palos Verdes, California in 2010 and wondering, as sweat poured down Mark Zuckerberg’s pasty and rounded face, if he was going to keel over right there at my feet. “He has panic attacks when he’s doing public speaking”, one Facebook executive had warned me years before. “He could faint.” I suspected that might have been a ploy to get us to be nicer to Zuckerberg. It didn’t work. As Walt and I grilled the slight young man on the main stage, the rivulets of moisture began rolling down his ever-paler face…This early Zuckerberg had yet to become the muscled, MMA-fighting, patriotic-hydrofoiling, bison-killing, performative-tractor-riding, calf-feeding man that he would develop into over the next decade.

Mark Zuckerberg may be seriously underestimated. The level of sheer hatred he evokes blinds people to his potential; he can do something awesome like water ski on a hydrofoil carrying the American flag for the 2021 Fourth of July, and the comments will all be about how much everyone loathes him. (Whereas if it had been Jeff Bezos, post-roids & lifting, people would be squeeing about his Terminator-esque badassery. And did you know Jack Dorsey was literally a fashion model? Might help explain why he gets such a pass on being an absentee CEO busy with Square.) And it’s not Facebook-hatred, it’s Mark-hatred specifically, going back well before the movie The Social Network; in fact, I’d say a lot of the histrionics over and hatred of Facebook, which have turned out to be so bogus, are motivated by Mark-hatred rather than vice-versa. Even classmates at Harvard seemed to loathe him. He just has a “punchable face”; he is short; he somehow looks like a college undergraduate at age 37; he eschews facial hair, emphasizing his paleness & alien-like face, while his haircut appears inspired by the least attractive of Roman-emperor stylings (quite possibly not a coincidence given his sister); he is highly fit, but apparently favors primarily aerobics where the better you get the sicker & scrawnier you look; and he continues to take PR beatings, like when he donated $75m to a San Francisco hospital & the SF city board took time out in December 2020 from minor city business (stuff like the coronavirus/housing/homeless/economic crisis, nothing important) just to pass a resolution insulting & criticizing him. (This is on top of the Newark debacle.)

Unsurprisingly, this hatred extends to any kind of thinking or reporting. To judge by reporting & commentary going back to the ’00s, no one uses Facebook, it’s too popular, and it will, any day now, become an empty deserted wasteland; puzzling, then, how it kept being successful—guess people are brainwashed, or something. Epistemic standards, when applied to Zuckerberg & Facebook, are dismally low; eg. the Cambridge Analytica myth still continues to circulate, and we live in a world where New York Times reporters can (in articles called “excellent” by tech/AI researchers) forthrightly admit that they have spent years reporting irrelevant statistics about Facebook traffic (to the extent of setting up social media bots to broadcast them daily), statistics that they knew were misinterpreted as proving that Facebook is a giant right-wing echo chamber and that their assertions about the statistics only “may be true”—but all of this false reporting is totally fine and really, Facebook’s fault for not providing the other numbers in the first place!4

This reminds me of Andrew Carnegie, or Bill Gates in the mid-1990s. Who then would have expected him to become a beloved philanthropist? No one. (After all, simply spending money is no guarantee of love. No matter how much money he spends, the only people who will ever love Larry Ellison are yacht designers, tennis players, and the occasional great white shark.) He too was blamed for a myriad of social ills, physically unimposing (short compared to CEO peers who more typically are several inches above-average), and was mocked viciously for his nasally voice and smeared glasses and utter lack of fashion and being nerdily neotenous & oh-so-punchable; he even had his own Social Network-esque movie. Gates could do only one of two things according to the press: advance Microsoft monopolies in sinister & illegal ways to parasitize the world & crush all competition, or make noble-seeming gestures which were actually stalking horses for future Microsoft monopolies.

But he too was fiercely competitive, highly intelligent, an omnivorous reader, willing to learn from his mistakes & experiments, known to every manjack worldwide, and, of course, backed by one of the largest fortunes in history. As he grew older, Bill Gates grew into his face, sharpened up his personal style, learned some social graces, lost some of the fire driving the competitiveness, and gained distance from Microsoft; Microsoft became less of a lightning rod5 as its products improved6; and he began applying his drive & intellect to deploying his fortune well. In 1999, Gates was a zero no matter how many zeros in his net worth; in 2019, he was a hero.

Zuckerberg starts in the same place. And it’s easy to see how he could turn things around. He has all the same traits Gates does, and flashes of Chad Zuck emerge from Virgin Mark already (“We sell ads, senator”).

Past errors like Newark can be turned into learning experiences & experiments; the school of life being the best teacher if one can afford its tuition. Indeed, merely ceasing to self-sabotage and doing all the ‘obvious’ stuff would make a huge difference: drop the aerobics for weightlifting (being swole is unnecessary, just not being sickly is enough); find the local T doctor (he likely already knows, and just refuses to use him); start experimenting with chin fur and other hair styles, and perhaps glasses; step away from Facebook to let the toxicity begin to fade away; stop being a pushover exploited by every 2-bit demagogue and liberal charity, and make clear that there are consequences to crossing him (it is better to be feared than loved at the start, cf. Bezos, Peter Thiel); consider carefully his political allegiances, and whether the Democratic Party will ever be a viable option for him (he will never be woke enough for the woke wing, especially given their ugly streak of anti-Semitism, unless he gives away his entire fortune to a Ford-Foundation-esque slush fund for them, which would be pointless), and how Michael Bloomberg governed NYC; and begin building a braintrust and network to back a political faction of his own.

In 20 years7, perhaps we’ll look back on Zuckerberg with one of those funny before/after pairings, like Elon Musk in the ’90s vs Elon Musk in 2015, or nebbish Bezos in ’90s sweater vs pumped bald Bezos in mirror shades & vest, and be struck that Zuckerberg, so well known for X (curing malaria? negotiating an end to the Second Sino-Russian Border Conflict?) was once a pariah and public enemy #1.

As of April 2025, I would argue that Zuckerberg has been heavily rehabilitated and I have been vindicated. And despite his missteps, and whatever the societal damage, Facebook as a business continued to defy naysayers and grow.

I think my biggest mistake was in arguing that the Facebook Metaverse project was being underestimated, as Facebook couldn’t risk being flatfooted by a new competitor (eg. Instagram), it would be useful infrastructure and motivation for AI to act as people or agents, and might work out after a reboot or passing some unknown threshold of quality/cost.

So far it seems like none of that has happened. We will never know if the Metaverse was worth the money simply as insurance, but the rest of it is easier to judge—and hasn’t worked out. The Metaverse is forgotten; Oculus headset adoption has stagnated as a high-end video game console; even Facebook AI has suffered regular setbacks and been relegated to permanent also-ran status behind several other AI groups like OpenAI (and as I write this, has embarrassed itself with a failed LLaMA-4 release far behind not just OpenAI/Google but FLOSS Chinese AI models too).

This is despite all the evidence piling up in favor of a ‘metaverse platform’ as strategically important, to someone, someday. We have seen much evidence that there is tremendous consumer appetite for AI social interactions even as impoverished as ‘typing into an SMS chat window’ (Claude, ChatGPT, Character.ai et al) and that VR experiences can be quite compelling (VRChat addicts), and it seems clear that arguments about the ‘unique value of human connection’ are so much wishful thinking, as humans turn out to be happy to spend many hours a day chatting with their sycophantic LLM buddies (at shockingly low levels of capability). And as generative modeling continues to advance, it also seems clear that there will soon be accurate realtime neural video generation of highly realistic ‘worlds’ which LLM agents can ‘exist’ in. So I am more convinced than ever that the future is going to involve a lot of virtualized reality and non-human social connections, likely substituting to a considerable degree for old-fashioned human connections… but it does not look like the path to that future goes through Facebook’s Oculus or anything like its current Metaverse. Was it too far ahead of its time? Too mismanaged? Was focusing on VR the mistake, and it should have been AR instead (closer to Her than Ready Player One)? Did we underestimate how much of a drawback ‘not being a smartphone’ was? Was Zuckerberg/Facebook just too toxic?

I don’t know. We’ll see what happens over the next decade.

Russia

Who would grasp Russia with the mind?

For her no yardstick was created:

Her soul is of a special kind,

By faith alone appreciated.

We wanted the best, but it turned out like always.

In its pride of numbers, in its strange pretensions of sanctity, and in the secret readiness to abase itself in suffering, the spirit of Russia is the spirit of cynicism. It informs the declarations of her statesmen, the theories of her revolutionists, and the mystic vaticinations of prophets to the point of making freedom look like a form of debauch, and the Christian virtues themselves appear actually indecent…

Varlam Shalamov once wrote: “I was a participant in the colossal battle, a battle that was lost, for the genuine renewal of humanity.” I reconstruct the history of that battle, its victories and its defeats. The history of how people wanted to build the Heavenly Kingdom on earth. Paradise! The City of the Sun! In the end, all that remained was a sea of blood, millions of ruined human lives. There was a time, however, when no political idea of the 20th century was comparable to communism (or the October Revolution as its symbol), a time when nothing attracted Western intellectuals and people all around the world more powerfully or emotionally. Raymond Aron called the Russian Revolution the “opium of intellectuals.” But the idea of communism is at least two thousand years old. We can find it in Plato’s teachings about an ideal, correct state; in Aristophanes’ dreams about a time when “everything will belong to everyone.” … In Thomas More and Tommaso Campanella … Later in Saint-Simon, Fourier and Robert Owen. There is something in the Russian spirit that compels it to try to turn these dreams into reality.

…I came back from Afghanistan free of all illusions. “Forgive me father”, I said when I saw him. “You raised me to believe in communist ideals, but seeing those young men, recent Soviet schoolboys like the ones you and Mama taught (my parents were village school teachers), kill people they don’t know, on foreign territory, was enough to turn all your words to ash. We are murderers, Papa, do you understand‽” My father cried.

What is it about the Russian intelligentsia, “the Russian idea”? There’s something about Russian intellectuals I’ve never been quite able to put my finger on, but which makes them unmistakable.

For example, I was reading a weirdo typography manifesto, “Monospace Typography” which argues that all proportional fonts should be destroyed and we should use monospace for everything for its purity and simplicity; absurdity of it aside, the page at no point mentions Russia or Russian things or Cyrillic letters or even gives an author name or pseudonym, but within a few paragraphs, I was gripped by the conviction that a Russian had written it, it couldn’t possibly have been written by any other nationality. After a good 5 minutes of searching, I finally found the name & bio of the author, and yep, from St Petersburg. (Not even as old as he sounds.) Or LoperOS or Urbit, Stalinist Alexandra Elbakyan, or Nikolai Bezroukov’s Softpanorama, or perhaps we should include Ayn Rand—there is a “Russian guy” archetype—perhaps Lenin was a mushroom after all? If an Academician of the Academy says something, who are we to disagree?8

Perhaps the paradigmatic example to me is the widely-circulated weird news story about the two Russians who got into a drunken argument over Immanuel Kant and one shot the other (not to be confused with the two Russians in a poetry vs prose argument ending in a fatal stabbing), back in the 2000s or whenever. Can you imagine Englishmen getting into such an argument, over Wittgenstein? No, of course not (“a nation of shopkeepers”). Frenchmen over Sartre or Descartes? Still hard. Germans over Hegel? Not really (although see “academic fencing”). Russians over Hume? Tosh! Over Kant? Yeah sure, makes total sense.

What is it that unites serfs, communism (long predating the Communists), the Skoptsy/Khlysts, Tolstoy, Cosmism, chess, mathematics (but only some mathematics—Kolmogorov’s probability theory, yes, but not statistics and especially not Bayesian or decision-theoretic types despite their extreme economic & military utility9), stabbing someone over Kant, Ithkuil fanatics, SF about civilizations enforcing socialism by blood-sharing or living in glass houses, absurd diktats about proportional fonts being evil, etc.? What is this demonic force? There’s certainly no single specific ideology or belief or claim, there’s some more vague but unmistakable attitude or method flavoring it all. The best description I’ve come up with so far is that “a Russian is a disappointed Platonist who wants to punch the world for disagreeing”.

Conscientiousness And Online Education

Fiction

American Light Novels’ Absence

I think one of the more interesting trends in anime is the massive number of adaptations of light novels done in the ’90s and ’00s; it is interesting because no such trend exists in American media as far as I can tell (the closest I can think of are comic book adaptations, but of course those are analogous to the many manga -> anime adaptations). Now, American media absolutely adapts many novels, but they are all normal Serious Business Novels. We do not seem to even have the light novel medium; young adult novels do not cut the mustard. Light novels are odd, as they are kind of like speculative fiction novellas. The success of comic book movies has been much noted: could comic books be the American equivalent of light novels? There are attractive similarities in subject matter and even medium, light novels including a fair number of color manga illustrations.

Question for self: if America doesn’t have the light novel category, is that a claim that the Twilight novels, and everything published under the James Patterson brand, are regular novels?

Answer: The Twilight novels are no more light novels than the Harry Potter novels were. The Patterson novels may fit, however; they have some of the traits such as very short chapters, simple literary style, and very quick-moving plots, even though they lack a few less important traits (such as including illustrations). It might be better to say that there is no recognized and successful light novel genre rather than no individual light novels: there are only unusual examples like the Patterson novels and other works uncomfortably listed under the Young Adult/Teenager rubric.

Cultural Growth through Diversity

We are doubtless deluding ourselves with a dream when we think that equality and fraternity will some day reign among human beings without compromising their diversity. However, if humanity is not resigned to becoming the sterile consumer of values that it managed to create in the past…capable only of giving birth to bastard works, to gross and puerile inventions, [then] it must learn once again that all true creation implies a certain deafness to the appeal of other values, even going so far as to reject them if not denying them altogether. For one cannot fully enjoy the other, identify with him, and yet at the same time remain different. When integral communication with the other is achieved completely, it sooner or later spells doom for both his and my creativity. The great creative eras were those in which communication had become adequate for mutual stimulation by remote partners, yet was not so frequent or so rapid as to endanger the indispensable obstacles between individuals and groups or to reduce them to the point where overly facile exchanges might equalize and nullify their diversity.

Claude Lévi-Strauss, The View from Afar pg23 (quoted in Clifford Geertz’s “The Uses of Diversity” Tanner Lecture)