On Seeing Through and Unseeing: The Hacker Mindset

Defining the security/hacker mindset as extreme reductionism: ignoring the surface abstractions and limitations to treat a system as a source of parts to manipulate into a different system, with different (and usually unintended) capabilities.

To draw some parallels here and expand 2017, I think unexpected Turing-complete systems and weird machines have something in common with heist movies or cons or stage magic: they all share a specific paradigm we might call the security mindset or hacker mindset.

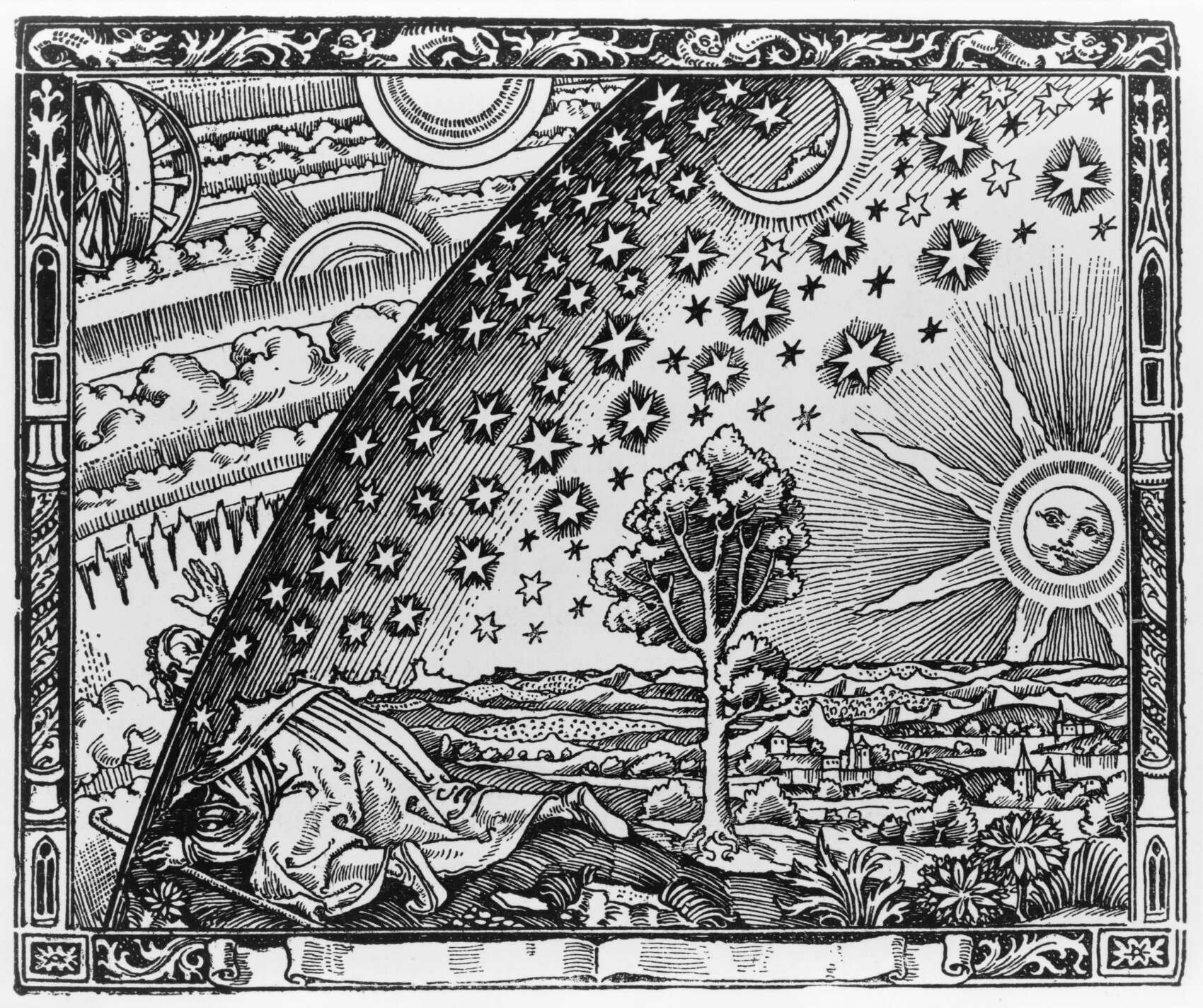

What they (and hacking, speedrunning, social-engineering etc.) all have in common is that they show that the much-ballyhooed ‘hacker mindset’ is, fundamentally, a sort of reductionism run amok, where one ‘sees through’ abstractions to a manipulable reality. Like Neo in the Matrix—a deeply cliche analogy for hacking, but cliche because it resonates—one achieves enlightenment by seeing through the surface illusions of objects and can now see the endless lines of green code which make up the Matrix, and vice-versa. (It’s maps all the way down!)

In each case, the fundamental principle is that the hacker asks: “here I have a system W, which pretends to be made out of a few Xs; however, it is really made out of many Ys, which form an entirely different system, Z; I will now proceed to ignore the Xs and understand how Z works, so I may use the Ys to thereby change W however I like”.

Abstractions are vital, but like many living things, dangerous, because abstractions always leak. (“You’re very clever, young man, but it’s reductionism all the way down!”) This is in some sense the opposite of a mathematician: a mathematician tries to ‘see through’ a complex system’s accidental complexity up to a simpler more-abstract more-true version which can be understood & manipulated—but for the hacker, all complexity is essential, and they are instead trying to unsee the simple abstract system down to the more-complex less-abstract (but also more true) version.[1] (A mathematician might try to transform a program up into successively more abstract representations to eventually show it is trivially correct; a hacker would prefer to compile a program down into its most concrete representation to brute force all execution paths & find an exploit trivially proving it incorrect.)
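To make the hacker's side of that contrast concrete, here is a toy sketch that does not appear in the original. Rather than proving a function correct, it simply tries every possible input until one breaks the contract. The function `saturating_add_u8` and its bug are hypothetical. The input space is small enough (256 × 256 pairs) that exhaustive enumeration is trivial:

```python
from itertools import product
from typing import Callable, Iterable, Optional, Tuple


def saturating_add_u8(a: int, b: int) -> int:
    """Hypothetical 'saturating' 8-bit add, meant to cap the result at 255.

    Bug: it tests the already-wrapped sum, so the cap never triggers.
    """
    s = (a + b) & 0xFF  # emulate 8-bit wraparound
    return 255 if s > 255 else s  # s <= 255 always, so this never saturates


def spec(a: int, b: int, out: int) -> bool:
    """The intended contract: min(a + b, 255)."""
    return out == min(a + b, 255)


def find_counterexample(
    f: Callable[[int, int], int],
    contract: Callable[[int, int, int], bool],
    domain: Iterable[int],
) -> Optional[Tuple[int, int]]:
    """Try every (a, b) pair and return the first one that breaks the contract."""
    values = list(domain)
    for a, b in product(values, repeat=2):
        if not contract(a, b, f(a, b)):
            return (a, b)
    return None


print(find_counterexample(saturating_add_u8, spec, range(256)))  # (1, 255)
```

A single counterexample like `(1, 255)` is enough to disprove "this function saturates," and finding it requires no understanding of why the code is wrong.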

Confirmation Bias

Uncle Milton Industries has been selling ant farms to children since 1956. Some years ago, I remember opening one up with a friend. There were no actual ants included in the box. Instead, there was a card that you filled in with your address, and the company would mail you some ants.

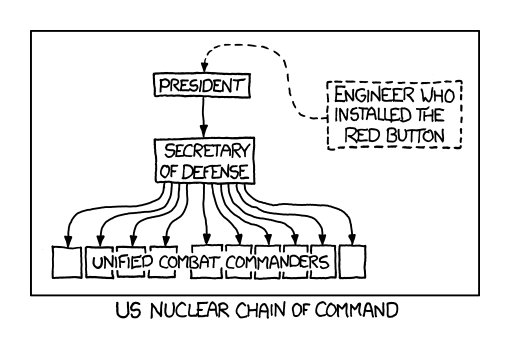

My friend expressed surprise that you could get ants sent to you in the mail. I replied: ‘What’s really interesting is that these people will send a tube of live ants to anyone you tell them to.’

Bruce Schneier, “The Security Mindset” (2008); cf. DNS, Mormons/JWs

Ordinary users ask only that all their everyday examples of Ys transform into Zs correctly; they forget to ask whether all and only correct examples of Ys transform into correct Zs, and whether only correct Zs can be constructed to become Ys. Even a single ‘anomaly’, apparently trivial in itself, can indicate that the everyday mental model is not just a little bit wrong but fundamentally wrong, in the way that Newton’s theory of gravity was not merely a little bit wrong and in need of a quick fudge factor to account for Mercury’s orbit, or in the way that NASA management’s mental model of O-rings was not merely in need of a minor increase in the thickness of the rubber gaskets.[2]
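The gap between "all everyday inputs work" and "only correct inputs are accepted" can be shown with a familiar bug class. The following is an illustrative sketch, not from the original. It uses a hypothetical web root and a naive prefix check that passes every everyday path, and it also accepts a path that resolves outside the root:

```python
import posixpath

WEB_ROOT = "/var/www/"  # hypothetical document root


def naive_is_safe(path: str) -> bool:
    # Everyday mental model: "files under the web root start with the web root".
    return path.startswith(WEB_ROOT)


def resolves_inside_root(path: str) -> bool:
    # Closer to what the filesystem does: collapse '..' first, then compare.
    # (Lexical only: symlinks would additionally need os.path.realpath.)
    resolved = posixpath.normpath(path)
    return resolved == WEB_ROOT.rstrip("/") or resolved.startswith(WEB_ROOT)


everyday = ["/var/www/index.html", "/var/www/img/logo.png"]
attack = "/var/www/../../etc/passwd"

for p in everyday:  # every everyday example passes both checks...
    assert naive_is_safe(p) and resolves_inside_root(p)

# ...but only the naive check accepts the anomaly.
print(naive_is_safe(attack), posixpath.normpath(attack), resolves_inside_root(attack))
# True /etc/passwd False
```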

Atoms

Every drop of blood has great talent; the original cellule seems identical in all animals, and only varied in its growth by the varying circumstance which opens now this kind of cell and now that, causing in the remote effect now horns, now wings, now scales, now hair; and the same numerical atom, it would seem, was equally ready to be a particle of the eye or brain of man, or of the claw of a tiger…The man truly conversant with life knows, against all appearances, that there is a remedy for every wrong, and that every wall is a gate.

Ralph Waldo Emerson, “Natural History Of Intellect”, 1893[3]

It’s all “atoms and the void”[4]:

In hacking, a computer pretends to be made out of things like ‘buffers’ and ‘lists’ and ‘objects’ with rich meaningful semantics, but really, it’s just made out of bits which mean nothing and only accidentally can be interpreted as things like ‘web browsers’ or ‘passwords’, and if you move some bits around and rewrite these other bits in a particular order and read one string of bits in a different way, now you have bypassed the password.
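Here is a minimal sketch of "move some bits around … now you have bypassed the password". It simulates memory as a flat `bytearray` with a hypothetical layout: an 8-byte password buffer followed directly by an `is_authenticated` flag. Real programs, compilers, and stack layouts differ. The point is only that the 'buffer' and the 'flag' are the same undifferentiated bytes underneath:

```python
# Hypothetical layout, loosely like a C struct:
#   bytes 0..7 : user-supplied password buffer (8 bytes)
#   byte  8    : is_authenticated flag (0 = no, nonzero = yes)
BUF_SIZE = 8
FLAG_OFFSET = 8


def new_memory() -> bytearray:
    return bytearray(BUF_SIZE + 1)  # all zero: empty buffer, flag unset


def unchecked_copy(mem: bytearray, data: bytes) -> None:
    """Like C's strcpy into the password buffer: no check against BUF_SIZE.

    Bytes past the end of the whole simulated memory are dropped only so the
    sketch doesn't grow the bytearray; real memory would simply keep going.
    """
    for i, byte in enumerate(data[: len(mem)]):
        mem[i] = byte


def check_password(mem: bytearray, attempt: bytes, secret: bytes) -> bool:
    unchecked_copy(mem, attempt)
    if attempt == secret:
        mem[FLAG_OFFSET] = 1
    # The program trusts the flag's bits, not the comparison above.
    return mem[FLAG_OFFSET] != 0


secret = b"hunter2"
print(check_password(new_memory(), b"guess", secret))             # False
print(check_password(new_memory(), b"hunter2", secret))           # True
print(check_password(new_memory(), b"A" * 8 + b"\x01", secret))   # True: overflow set the flag
```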

In speed running (particularly TASes), a video game pretends to be made out of things like ‘walls’ and ‘speed limits’ and ‘levels which must be completed in a particular order’, but it’s really again just made out of bits and memory locations, and messing with them in particular ways, such as deliberately overloading the RAM to cause memory allocation errors, can give you infinite ‘velocity’ or shift you into alternate coordinate systems in the true physics, allowing enormous movements in the supposed map, giving shortcuts to the ‘end’[5] of the game.
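One way 'alternate coordinate systems' can arise is through a coordinate that is stored one way and read another. This sketch is loosely modelled on the commonly given explanation of Super Mario 64's 'parallel universes': positions are stored as floats, but collision lookups truncate them to 16-bit integers. It is a simplification, not the game's actual code, and `collision_cell` is hypothetical. Under that assumption, points 65,536 units apart in the 'true' map look identical to the collision system:

```python
import math


def to_int16(x: float) -> int:
    """Truncate toward zero, then keep only the low 16 bits as a signed value.

    This assumes hardware that just drops the high bits; C itself leaves
    out-of-range float-to-short conversions undefined.
    """
    n = math.trunc(x)
    return ((n + 32768) % 65536) - 32768


def collision_cell(x: float, z: float) -> tuple[int, int]:
    # Hypothetical: collision only ever sees the wrapped coordinates.
    return (to_int16(x), to_int16(z))


home = (1200.5, -340.0)
far_away = (1200.5 + 65536 * 3, -340.0 - 65536)  # enormously far in the 'true' map

print(collision_cell(*home))      # (1200, -340)
print(collision_cell(*far_away))  # (1200, -340): same floor, different 'universe'
```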

In stealth games, players learn to unsee levels into patterns of gaps moving around over time (gaps in guard patrols or in observability of light/sound), and learn how to dismantle the level piece by piece until they can go anywhere and do anything.

In robbing a hotel room, people see ‘doors’ and ‘locks’ and ‘walls’, but really, they are just made out of atoms arranged in a particular order, and you can move some atoms around more easily than others, and instead of going through a ‘door’ you can just cut a hole in the wall[6] (or ceiling) and obtain access to a space. At Los Alamos, Richard Feynman, among other tactics, obtained classified papers by reaching in underneath drawers & ignoring the locks entirely.

One analysis of the movie Die Hard, “Nakatomi space”, highlights how it & the Israeli military’s mouse-holing in the Battle of Nablus treat buildings as kinds of machines, which can be manipulated in weird ways to move around and attack enemies.

That example reminds me of the Carr & Adey anatomy of locked room murder mysteries, laying out a taxonomy of all the possible solutions which—like a magician’s trick—violate one’s assumptions about the locked room: whether it was always locked, locked at the right time, the murder done while in the room, the murder done before everyone entered the room, it being murder rather than suicide, the supposed secure room with locked-doors having a ceiling etc.[7] (These tricks inspired Umineko’s mysteries (review), although in it a lot of them turn out to just involve conspirators/lying.)

In lockpicking, copying a key or reverse-engineering its cuts are among the most difficult ways to open a lock. One can instead simply use a bump key to brute-force the positions of the pins in a lock, or kick the door in, or (among other door-lock bypasses) wiggle the bolt, reach through a crack to open it from the inside, or drill the lock. (How do you know someone hasn’t already? You assume it’s the same lock as yesterday?) If all else fails, you can use a portable hydraulic ram as a spreader to shatter the frame or wall itself around the door.

Locks & safes have many other interesting vulnerabilities; I particularly like Matt Blaze’s master-key vulnerability (2003/2004a/2004b), which exploits the fact that a master-keyed lock opens for any key whose cut at each pin position matches either the master key’s or the ordinary key’s cut at that position (ie. it checks ‘master OR ordinary’ pin by pin, rather than ‘the master key OR the ordinary key’ as a whole), and so it is like a password which one can guess one letter at a time. (These papers made locksmiths so mad they harassed Blaze into quitting.)
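The 'one letter at a time' structure can be sketched in a simplified model. This is an illustration, not Blaze's exact procedure. It ignores mechanical constraints on which depths may sit next to each other, and all key values are hypothetical. An attacker holding an ordinary ('change') key varies one pin position at a time against the lock:

```python
from typing import Callable

Key = tuple[int, ...]


def opens(key: Key, change: Key, master: Key) -> bool:
    """Each pin stack has a shear line at both the change depth and the master
    depth, and each position is checked independently of the others."""
    return all(k in (c, m) for k, c, m in zip(key, change, master))


def recover_master(change: Key, depths: int, lock_opens: Callable[[Key], bool]) -> tuple[Key, int]:
    """Recover master cuts one position at a time, using only our own change key
    and a lock to test against. Returns (recovered_master, test_keys_cut)."""
    master = list(change)  # if nothing else opens at a position, master == change there
    tries = 0
    for i in range(len(change)):
        for d in range(depths):
            if d == change[i]:
                continue
            test = list(change)
            test[i] = d  # vary a single position; all others stay at our own cuts
            tries += 1
            if lock_opens(tuple(test)):
                master[i] = d
                break
    return tuple(master), tries


# Hypothetical 6-pin lock with 10 possible depths per pin.
CHANGE = (3, 1, 4, 1, 5, 2)
SECRET_MASTER = (2, 7, 4, 8, 0, 6)  # unknown to the attacker


def lock_under_test(key: Key) -> bool:
    return opens(key, CHANGE, SECRET_MASTER)


found, tries = recover_master(CHANGE, 10, lock_under_test)
print(found, tries)  # (2, 7, 4, 8, 0, 6) 34
```

Under this model, the search costs at most P × (D − 1) test keys (here 6 × 9 = 54) instead of the D^P = 1,000,000 keys a naive brute force would face. This is the same shape as a password checker that reveals each correct letter.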

In stage magic (especially close-up/card/coin/pickpocketing), one believes one is continuously seeing single whole objects which must move from one place to another continuously; in reality, one is only seeing, occasionally, surfaces of many (possibly duplicate) objects, which may be moving only when you are not looking, in the opposite direction, or not moving at all. By hacking object permanence and limited attentional resources, the stage magician shows the ‘impossible’ ( et al 2008’s Table 1 lists many folk physics assumptions which can be hacked). Stage magic works by exploiting our implicit beliefs that no adversary would take the trouble to so precisely exploit our heuristics and shortcuts.[8][9]

In weird machines, you have a ‘protocol’ like SSL or x86 machine code which appears to do simple things like ‘check a cryptographic signature’ or ‘add one number in a register to another register’, but in reality, it’s a layer over far more complex realities like processor states & optimizations like speculative execution, which reads other parts of memory and then quickly discards the results (but not the traces they leave in the cache), and these can be pasted together to execute operations and reveal secrets without ever running ‘code’ (see again et al 2019).

Similarly, in finding hidden examples of Turing completeness, one says, ‘this system appears to be a bunch of dominoes or whatever, but actually, each one is a computational element which has unusual inputs/outputs; I will now proceed to wire a large number of them together to form a Turing machine so I can play Tetris in Conway’s Game of Life or use heart muscle cells to implement Boolean logic or run arbitrary computations in a game of Magic: The Gathering’.
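Here is a small sketch of what 'each one is a computational element' looks like in Conway's Game of Life. The grid has no wires or signals, only cells obeying a birth/survival rule. Yet a glider is a stable pattern that travels, and gliders are typically used as the signals passed between logic-gate constructions in Life. The code implements the standard B3/S23 rule on an unbounded set of live cells:

```python
from collections import Counter

Cell = tuple[int, int]


def step(live: set[Cell]) -> set[Cell]:
    """One generation: birth on exactly 3 neighbours, survival on 2 or 3."""
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    return {c for c, n in counts.items() if n == 3 or (n == 2 and c in live)}


# A glider, with (x, y) coordinates and y increasing downward:
#   .#.
#   ..#
#   ###
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

state = glider
for _ in range(4):
    state = step(state)

shifted = {(x + 1, y + 1) for (x, y) in glider}
print(state == shifted)  # True: same shape, moved one cell diagonally
```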

Or in side channels, you go below bits and say, ‘these bits are only approximations to the actual flow of electricity and heat in a system; I will now proceed to measure the physical system’ etc.
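Here is a minimal timing side-channel sketch, illustrative and not from the original. A comparison that stops at the first mismatching character takes longer the more of the guess is right, so the running time leaks the secret one character at a time. Real attacks measure wall-clock time and must average away noise. As a deterministic stand-in, this sketch counts loop iterations instead. `SECRET` is hypothetical, and the attacker is assumed to know its length:

```python
import string

SECRET = "s3cr3t"  # hypothetical secret the attacker cannot read directly


def leaky_equals(guess: str, secret: str = SECRET) -> tuple[bool, int]:
    """Early-exit comparison that also reports how many characters it examined
    (a stand-in for how long the comparison physically takes)."""
    steps = 0
    for g, s in zip(guess, secret):
        steps += 1
        if g != s:
            return False, steps
    return len(guess) == len(secret), steps


def measure(guess: str) -> tuple[int, bool]:
    ok, steps = leaky_equals(guess)
    return steps, ok  # longer runs rank higher; an exact match breaks the final tie


def recover(length: int, alphabet: str) -> str:
    """Recover the secret one character at a time from the 'timing' alone."""
    known = ""
    for _ in range(length):
        padding = "?" * (length - len(known) - 1)  # assumes '?' never occurs in the secret
        known += max(alphabet, key=lambda c: measure(known + c + padding))
    return known


print(recover(len(SECRET), string.ascii_lowercase + string.digits))  # s3cr3t
```

The usual mitigation is a comparison whose running time does not depend on where the first mismatch occurs, such as Python's `hmac.compare_digest`.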

In social engineering/pen testing, people see social norms and imaginary things like ‘permission’ and ‘authority’ and ‘managers’ which ‘forbid access to facilities’, but in reality, all there is, is a piece of laminated plastic or a clipboard or certain magic words spoken; the people are merely non-computerized ways of implementing rules like ‘if laminated plastic, allow in’, and if you clip a blue piece of plastic to your shirt and incant certain words at certain times, you can walk right past the guards.[10]

Many financial or economic strategies have a certain flavor of this; Alice Maz’s Minecraft economics exploits strongly remind me of ‘seeing through’, as do many clever financial trades based on careful reading of contractual minutiae, or on taking seriously details that are usually abstracted away, like ‘taking delivery’ of futures.

And while we’re at it, why are puns so irresistible to hackers? (Consider how omnipresent they are in Gödel, Escher, Bach or the Jargon File or text adventures or…) Computers are nothing but puns on bits, and languages are nothing but puns on letters. Puns force one to drop from the abstract semantic level to the raw syntactic level of sub-words or characters, and back up again to achieve some semantic twist—they are literally hacking language.
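'Puns on bits' can be taken literally: the same four bytes mean entirely different things depending on how a program chooses to read them. This sketch reads one byte string as ASCII text, as a little-endian unsigned 32-bit integer, and as a little-endian IEEE-754 single-precision float, using only Python's standard `struct` module:

```python
import struct

raw = b"HACK"

as_text = raw.decode("ascii")
(as_uint32,) = struct.unpack("<I", raw)   # little-endian unsigned 32-bit integer
(as_float32,) = struct.unpack("<f", raw)  # little-endian IEEE-754 single-precision float

print(as_text)     # HACK
print(as_uint32)   # 1262698824
print(as_float32)  # 12796232.0
```

Nothing in the bytes themselves says which reading is 'right'. The interpretation lives entirely in the code that reads them.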

And so on. These sorts of things can seem magical (‘how‽’), shocking (‘but—but—but that’s cheating!’ the scrub says), or hilarious (in the ‘violation of expectations followed by understanding’ theory of humor) because the abstract system W & our verbalizations are so familiar and useful that we quickly get trapped in our dreams of abstractions, and forget that it is merely a map and not the territory, while the map has inevitably made gross simplifications and fails to document various paths from one point to another which we don’t want to exist.

Indeed, these ‘backdoors’ must exist unless carefully engineered away, because the high-level properties we rely on have no existence at the lower levels. If we explain things like ‘permission’ in terms of sequences of digital bits, we must at some point reach a level where the bits no longer express this ‘permission’, in the same way that if we explain ‘color’ or ‘smell’ by atoms, we must do so by eventually describing entities which do not look like they have any color nor have any smell; at some point, these properties must disintegrate into brute facts like a circuit going one way rather than another.[11]

Curse of Expertise

“The question is”, said Alice, “whether you can make words mean so many different things.”

“The question is”, said Humpty Dumpty, “which is to be master—that’s all.”

Lewis Carroll, Through the Looking-Glass, and What Alice Found There (1872)[12]

Perversely, the more educated you are, and the more of the map you know, the worse this effect can be, because you have more to unsee (eg. in fiction). One must always maintain a certain contempt for words & spooks.

The fool can walk right in because he is too ignorant to know that’s impossible. This is why atheoretical optimization processes like animals (eg. cats engaged in fuzz testing) or SMT solvers or evolutionary AI are so dumb to begin with, but in the long run can be so good at surprising us and finding ‘unreasonable’ inputs or reward hacks (analogous to the bias-variance tradeoff): being unable to understand the map, they can’t benefit from it like we do, but they also can’t overvalue it, and, forced to explore the territory directly to get what they want, discover new things.
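Blind random fuzzing is the crudest version of this kind of map-free search. In the sketch below, the fuzzer knows nothing about the input format; it just throws short random strings at a target and reports the first one that crashes it. The parser `parse_setting` is a hypothetical example written with only well-formed lines like `name="value"` in mind. The fixed seed simply makes the run repeatable:

```python
import random
from typing import Callable, Optional


def parse_setting(line: str) -> tuple[str, str]:
    """Hypothetical parser written with only lines like 'name="value"' in mind."""
    key, value = line.split("=", 1)  # ValueError if there is no '='
    if value[0] == '"':              # IndexError if the value is empty
        value = value[1:-1]
    return key, value


def fuzz(
    target: Callable[[str], object], alphabet: str, trials: int, seed: int = 0
) -> Optional[tuple[str, Exception]]:
    """Feed random short strings to target; return the first (input, exception)."""
    rng = random.Random(seed)
    for _ in range(trials):
        candidate = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, 6)))
        try:
            target(candidate)
        except Exception as exc:  # any crash counts as a finding
            return candidate, exc
    return None


print(fuzz(parse_setting, alphabet='ab="', trials=10_000))
```

The fuzzer has no idea what a 'setting' is, which is exactly why it does not share the author's assumption that every line contains `=` followed by something.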

Learning To Unsee

I don’t even see the code. All I see is blonde, brunette, redhead.

Cypher, The Matrix

Whoa.

Neo

Escaping our semantic illusions can require a determined effort to unsee them, and the use of techniques to defamiliarize them.

For example, you can’t find typos in your own writing without a great deal of effort because you know what it’s supposed to say; so copyediting advice runs like ‘read it out loud’ or ‘print it out and read it’ or ‘wait a week’ or recite until gibberish or even ‘read it upside down’ (easier than it sounds). That’s the sort of thing it takes to force you to read what you actually wrote, and not what you thought you wrote. Similar tricks are used for learning drawing: a face is too familiar, so instead you can flip it in a mirror and try to copy it.
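A program is one more way to force a literal reading, because it has no idea what the text was 'supposed' to say. This small sketch flags doubled words, the kind of typo behind the well-known "Paris in the the spring" illusion, where readers skip the repeated word. It uses a regular-expression backreference, and matches across line breaks and case differences, since that is where doubled words usually hide:

```python
import re

# A word, some whitespace (possibly a newline), then the same word again.
DOUBLED = re.compile(r"\b(\w+)\s+\1\b", re.IGNORECASE)


def doubled_words(text: str) -> list[str]:
    return [m.group(1) for m in DOUBLED.finditer(text)]


print(doubled_words("Paris in the\nthe spring"))  # ['the']
```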

The good news is that “what has been unseen cannot be seen”, and that once one has been enlightened into unseeing a system, it seems hard to slip back into the original illusion. And even a little unseeing can be a prophylactic which protects against harmful illusions.

External Links

“Security Mindset and Ordinary Paranoia”; “Security Mindset and the Logistic Success Curve”

“How did so many Dungeon Crawl: Stone Soup players miss such an obvious bug?”

“Security is Mathematics”, Colin Percival; “On Exactitude in Science”, Jorge Luis Borges

“No general method to detect fraud”, Cal Peterson

Red Teaming: How Your Business Can Conquer the Competition by Challenging Everything, Hoffman

“Getting Over It Developer Reacts to 1 Minute 24 Second Speedrun”

“The Board Game of the Alpha Nerds: Before Risk, before Dungeons & Dragons, before Magic: The Gathering, there was Diplomacy” (WP; “I still don’t know whom I should have trusted, if anyone. All I know is that I felt stupid, stressed out, humiliated, and sad.”)