Computer Optimization: Your Computer Is Faster Than You Think

You can have a second computer once you’ve shown you know how to use the first one.

“Welcome to the Jungle” (CPU design and Moore’s law; cf. “How Many Computers In Your Computer?”)

“There’s plenty of room at the Top: What will drive computer performance after Moore’s law?”, et al2020 (gains from End-To-End Principle-based systems design1)

“Scalability! But at what COST?”, et al2015 (when laptops > clusters: the importance of good baselines; “Big Data” doesn’t fit in a laptop); “Command-line Tools can be 235× Faster than your Hadoop Cluster”

“What it takes to run Stack Overflow”, Nick Craver; “We stand to save $7m over 5 years from our cloud exit”, David Heinemeier Hansson; “Production Twitter on One Machine? 100Gbps NICs and NVMe are fast”

“Anatomy of a hack: How crackers ransack passwords like

qeadzcwrsfxv1331; For Ars, 3 crackers have at 16,000+ hashed passcodes—with 90% success”; “Computing Adler32 Checksums at 41 GB/s” 59 GB/

s FizzBuzz ; “Modern storage is plenty fast. It is the APIs that are bad.”; “10M IOPS, one physical core.#io_uring #linux”; “Achieving 11M IOPS & 66 GB/s IO on a Single ThreadRipper Workstation” “The C10K problem”, 2001; “Serving Netflix Video at 50 gigabyte/

s on FreeBSD” , 2021/“Serving Netflix Video Traffic at 100 gigabyte/ s and Beyond” , 2022“Multicast and the Markets” (network optimization & mechanical sympathy in high-frequency trading)

Ultra-low bit-rate audio for tiny podcasts with Codec2 and WaveNet decoders (1 hour = 1MB)

“The computers are fast, but you don’t know it” (replacing slow pandas with 6,000× faster parallelized C++)

“Scaling SQLite to 4M QPS on a Single Server (EC2 vs Bare Metal)”, Expensify; “50% faster than 3.7.17” (“… We have achieved this by incorporating hundreds of micro-optimizations. Each micro-optimization might improve the performance by as little as 0.05%.”)

“Optimization story—quantum mechanics simulation speedup”; “14,000× Speedup a.k.a. Computer Science For the Win”

“Cutting The Pipe: Achieving Sub-Second Iteration Times”, 2012 (optimizing game development)

“The Billion Row Challenge (1BRC): Step-by-step in Java from 71s to 1.7s” (and 4s in Go & 0.5s in C)

“GCC Compiler vs Human—119× Faster Assembly 💻🆚🧑💻”; “Python, C, Assembly—2,500× Faster Cosine Similarity 📐”; “Summing ASCII encoded integers on Haswell at almost the speed of memcpy” (“320× faster than the following naive C++ solution”)

“The Prospero Challenge”(rendering a vector graphic toy language; 15,000ms simple implementation → 3,750ms → 6.3ms → 3.9ms)

“8088 Domination”: “how I created 8088 Domination, which is a program that displays fairly decent full-motion video on a 198144ya IBM PC”/

part 2 “A Mind Is Born” (256 bytes; for comparison, this line of Markdown linking the demo is 194 bytes long, and the video several million bytes)

Casey Muratori: refterm v2, Witness pathing (summary)

“A Time Leap Challenge for SAT Solving”, et al2020 (hardware overhang: ~2.5× performance increase in SAT solving since 200025ya, about equally due to software & hardware gains, although slightly more software—new software on old hardware beats old on new.)

“Measuring hardware overhang”, hippke (“with today’s algorithms, computers would have beat the world chess champion already in 199431ya on a contemporary desk computer”)

Memory Locality:

“You’re Doing It Wrong: Think you’ve mastered the art of server performance? Think again.”, Poul-Henning Kamp 201015ya (on using cache-oblivious B-heaps to optimize Varnish performance 10×)

“The LMAX Architecture”, 2011 (TigerBeetle)

“In-place Superscalar Samplesort (IPS4o): Engineering In-place (Shared-memory) Sorting Algorithms”, et al2020; “Vectorized and performance-portable Quicksort”, et al2022

“Fast approximate string matching with large edit distances in Big Data (2015)”

“ParPaRaw: Massively Parallel Parsing of [CSV] Delimiter-Separated Raw Data”, 2019

“Scaling our Spreadsheet Engine from Thousands to Billions of Cells: From Maps To Arrays”, Lukas 2022

“Persistent RNNs: Stashing Recurrent Weights On-Chip”, et al2016; “FlashAttention: Fast and Memory-Efficient Exact Attention with IO-Awareness”, et al2022

DL/

Deep Reinforcement Learning :Training A3C to solve Atari Pong in <4 minutes on the ARCHER supercomputer through brute parallelism

“

megastephelps you build 1-million FPS reinforcement learning environments on a single GPU”, Jones“The Mathematics of 2048: Optimal Play with Markov Decision Processes”

Bruteforcing Breakout (optimal play by depth-first search of an approximate MDP in an optimized C++ simulator for 6 CPU-years; crazy gameplay—like chess endgame tables, a glimpse of superintelligence)

“Sample Factory: Egocentric 3D Control from Pixels at 100,000 FPS with Asynchronous Reinforcement Learning”, et al2020; “Megaverse: Simulating Embodied Agents at One Million Experiences per Second”, et al2021; “Brax: A Differentiable Physics Engine for Large Scale Rigid Body Simulation”, et al2021; “Isaac Gym: High Performance GPU-Based Physics Simulation For Robot Learning”, et al2021 (et al2021); WarpDrive

“Large Batch Simulation for Deep Reinforcement Learning”, et al2021

“Mastering Real-Time Strategy Games with Deep Reinforcement Learning: Mere Mortal Edition”, Winter

“Podracer architectures for scalable Reinforcement Learning”, et al2021 (highly-efficient TPU pod use: eg. solving Pong in <1min at 43 million FPS on a TPU-2048); “Q-DAX: Accelerated Quality-Diversity for Robotics through Massive Parallelism”, et al2022; “EvoJAX: Hardware-Accelerated Neuroevolution”, et al2022; “JaxMARL: Multi-Agent RL Environments in JAX”, et al2023

“This Snake environment simulates 14M snakes and trains 1M snake steps per second.”

“TextWorldExpress: Simulating Text Games at One Million Steps Per Second”, Jansen & 2022

Latency/

UI :Organizations:

WhatsApp (201312ya: “50 staff members” for 200m users; 2015: 50 “engineers”, 900m users)

Craigslist (2017: ~50 employees)

Instagram (201213ya FB acquisition: 13 employees; architecture)

YouTube (200619ya Google acquisition: ~10 employees)

Reddit (201114ya: 4 engineers; 2021: ~700 total staff, >52m users)

Wikimedia Foundation (“Internet hosting” in 2021: $2.98$2.402021m)

Twitter: Musk acquisition cut up to 90% of employees+contractors; widespread confident assertions by ex-Twitter staff that the site would suffer crashes (possibly terminal crashes which could not be recovered from), within a month or two, turned out to be false.

See Also: “Some examples of people quickly accomplishing ambitious things together.”

A novice was trying to fix a broken Lisp machine by turning the power off and on.

Knight, seeing what the student was doing, spoke sternly: “You cannot fix a machine by just power-cycling it with no understanding of what is going wrong.”

Knight turned the machine off and on.

The machine worked.

“Tom Knight and the Lisp Machine”, AI Koans

-

AI scaling can continue even if semiconductors do not, particularly with self-optimizing stacks; John Carmack notes, apropos of Seymour Cray, that “Hyperscale data centers and even national supercomputers are loosely coupled things today, but if challenges demanded it, there is a world with a zetta[flops] scale, tightly integrated, low latency matrix dissipating a gigawatt in a swimming pool of circulating fluorinert.”

-

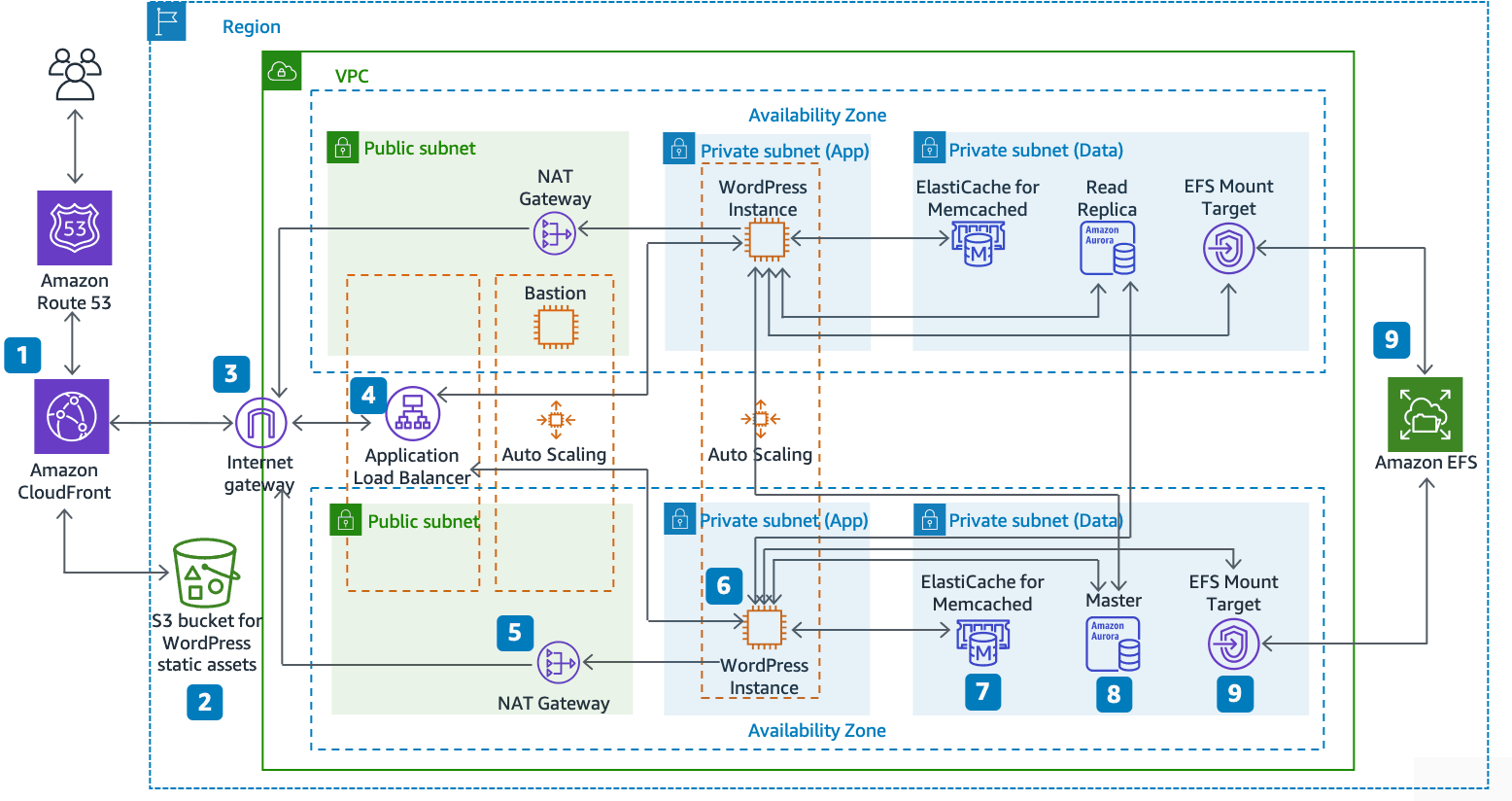

A relevant example is Amazon’s “best practices” for hosting a WordPress blog:

“Reference architecture for hosting WordPress on AWS: The Hosting WordPress on AWS reference architecture available on GitHub outlines best practices for deploying WordPress on AWS and includes a set of AWS CloudFormation templates to get you up and running quickly. The following architecture is based on that reference architecture. The rest of this section will review the reasons behind the architectural choices.”

Backlinks

CQK Is The First Unused TLA (full context):

Problem 14 Dynamic Programming Solutions (full context):

‘NN sparsity’ directory (full context):

They Might Never Tell You It’s Broken:

‘AI scaling’ directory (full context):

Aria’s Past, Present, and Future (full context):

Fast response times signal social connection in conversation:

Absolute Unit NNs: Regression-Based MLPs for Everything (full context):

Research Ideas (full context):