Efficient Attention: Breaking The Quadratic Transformer Bottleneck

Discussion of removing a major architectural limitation in Transformer neural networks: the length of the input it can look at. Beyond a few thousand inputs, the resource requirements explode quadratically, rendering it infeasible to encode raw text at the character level, much less use entire books, images, or many other kinds of data which could be useful. Even for text, this inability also forces limitations like the use of BPE text encoding (responsible for sabotaging GPT-3’s rhyming, among other things), forgetfulness, limits to prompt programming, and inability to write coherent long texts.

A bibliography of possibilities for fixing this are organized hierarchically below:

adding state, through recurrence (a memory) or creating a compressed history/state as an explicit summary

tinkering with matrix algebra to remove the quadratic explosion while still keeping more or less the same self-attention mechanism

approximating self-attention: using attention on only a small subset of tokens at any time (dodging the quadratic limit), or using a mix of local and global attention (local attentions to do most of the work, and global attention on top of the local attentions, each one avoiding the quadratic by considering only a few inputs at a time)

miscellaneous tricks: removing parts, using only randomized untrainable components (with no need to compute gradients over) etc

One of the most frustrating limitations of GPT-3 (as awesome as it is) is the context window: 2048 text tokens (BPEs) is adequate for many text-related tasks, and even GPT-3’s performance on that window is far from perfect, indicating it has a long way to go in truly understanding text. But 2048 BPEs runs out fast when you start prompt programming something hard, hacks like BPEs have nasty & subtle side-effects, and (as iGPT/ViT indicate in their own ways) is totally inadequate for other modalities like images—a single small 256px image is already equivalent to a sequence of l = 65,536, never mind video or raw audio!

How do we get future Transformers with reasonable context windows and/or memory, which we can use for research papers, books, structured text, images, video, audio, point clouds, genomics, and so on, where we need to handle sequences with lengths in the millions? (Such improvements would permit not just doing things GPT-3 struggles to do, like write coherent novels, but many better architectures, like multimodal Transformers which can learn jointly from images & text, accessing image-based datasets like PDFs, and learning far more accurate human-like representations & tacit knowledge with less data & smaller models, providing large models useful for almost all conceivable tasks—especially robotics.)

Below I compile & categorize research on breaking the dense attention quadratic bottleneck (overviews: Lilian Weng, Madison May; review: et al 2020; benchmark suite: Long Range Arena1):

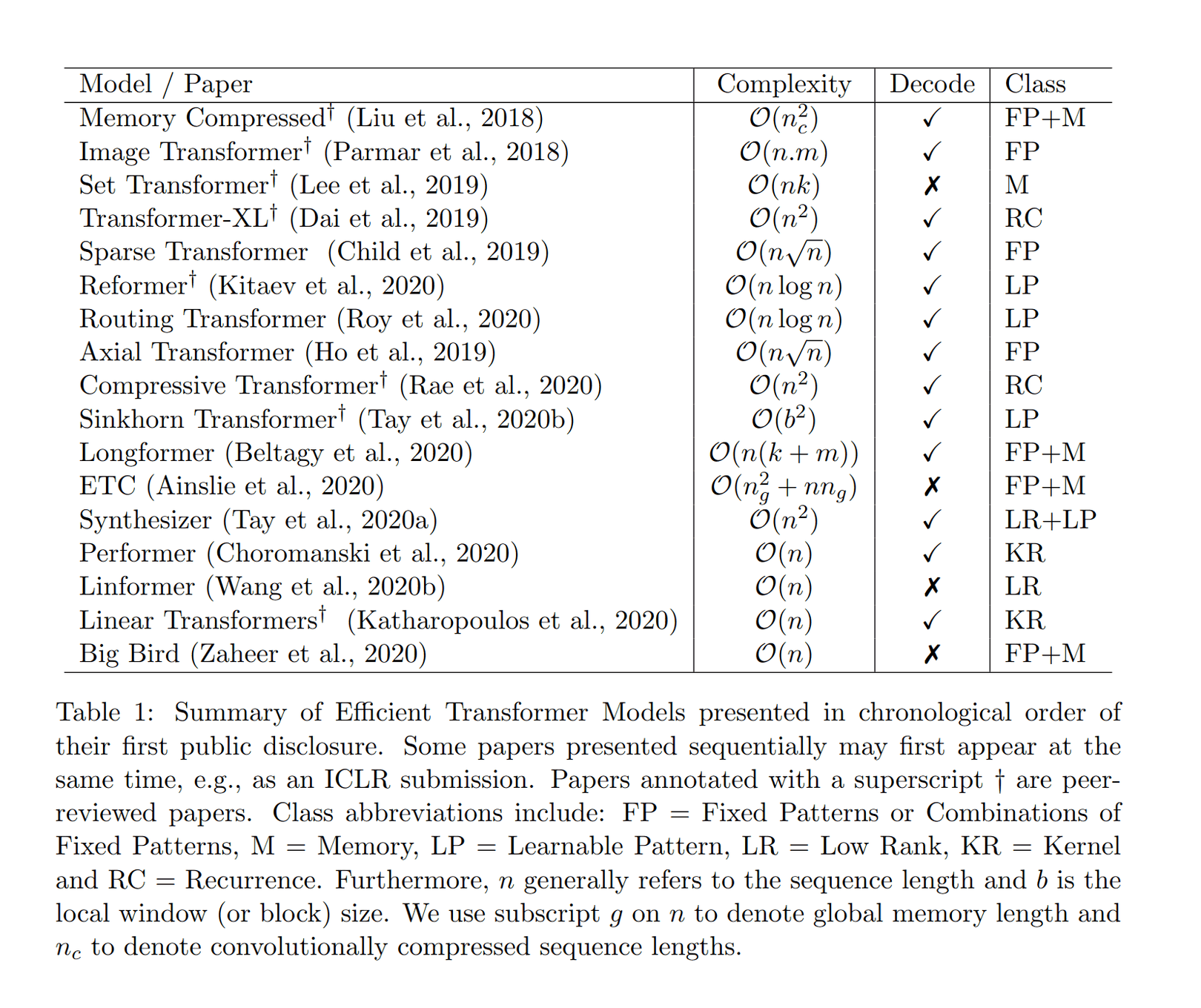

Table 1: Summary of Efficient Transformer Models presented in chronological order of their first public disclosure ( et al 2020)

The summary as of mid-2023: dense Transformers remain surprisingly competitive, and the many proposed variants all have their own drawbacks; none have superseded standard GPT or T5-style Transformers in more than a few niches. To paraphase Chekhov: “If many remedies are prescribed for an illness you can be sure it has no cure.”

Efficient Attention

State

Recurrency

“Universal Transformers”, et al 2018 (?); “Deep Equilibrium Models”, et al 2019

“Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context”, et al 2019 (blog)

“XLNet: Generalized Autoregressive Pretraining for Language Understanding”, et al 20192

“Untangling tradeoffs between recurrence and self-attention in neural networks”, et al 2020

“Feedback Transformer: Addressing Some Limitations of Transformers with Feedback Memory”, et al 2020

“Shortformer: Better Language Modeling using Shorter Inputs”, et al 2020

“SRU++: When Attention Meets Fast Recurrence: Training Language Models with Reduced Compute”, 2021

“SwishRNN: Simple Recurrence Improves Masked Language Models”, et al 2022

“Block-Recurrent Transformers”, et al 2022

RNNs:

Transformer ↔︎ RNN relationship: see Transformer-XL, XLNet, et al 2020, et al 2020, AFT, 2021, et al 2021, 2021, Perceiver, SwishRNN, RWKV

Compressed History/State

“Compressive Transformers for Long-Range Sequence Modeling”, et al 2019; “Expire-Span: Not All Memories are Created Equal: Learning to Forget by Expiring”, et al 2021

“Memory Transformer”, 2020

“Set Transformer: A Framework for Attention-based Permutation-Invariant Neural Networks”, et al 2018; “Perceiver: General Perception with Iterative Attention”, et al 2021a/“Perceiver IO: A General Architecture for Structured Inputs & Outputs”, et al 2021b

“Mem2Mem: Learning to Summarize Long Texts with Memory Compression and Transfer”, et al 2020

“∞-former: Infinite Memory Transformer”, et al 2021

“Memorizing Transformers”, et al 2021

“ABC: Attention with Bounded-memory Control”, et al 2021

“Recursively Summarizing Books with Human Feedback”, et al 2021

“MeMViT: Memory-Augmented Multiscale Vision Transformer for Efficient Long-Term Video Recognition”, et al 2022

“Token Turing Machines”, et al 2022

Matrix Algebra Optimizations

Tricks like rewriting the softmax/dot-product to be linear:

“Efficient Attention: Attention with Linear Complexities”, et al 2018 (blog)

“Linformer: Self-Attention with Linear Complexity”, et al 2020; “Luna: Linear Unified Nested Attention”, et al 2021 (hierarchical?); “Beyond Self-attention: External Attention using Two Linear Layers for Visual Tasks”, et al 2021

“Transformers are RNNs (Linear Transformers): Fast Autoregressive Transformers with Linear Attention”, et al 2020

“AFT: An Attention Free Transformer”, et al 2021

“LambdaNetworks: Modeling long-range Interactions without Attention”, 2020

“cosFormer: Rethinking Softmax in Attention”, et al 2022

Approximations

Sparsity

“Image Transformer”, et al 2018

Sparse Transformer: “Generating Long Sequences with Sparse Transformers”, et al 2019 (blog)

“Adaptive Attention Span in Transformers”, et al 2019

“Reformer: The Efficient Transformer”, et al 2019 (blog: 1, 2); “SMYRF: Efficient Attention using Asymmetric Clustering”, et al 2020; “Cluster-Former: Clustering-based Sparse Transformer for Long-Range Dependency Encoding”, et al 2020; “You Only Sample (Almost) Once: Linear Cost Self-Attention Via Bernoulli Sampling”, et al 2021

“Star-Transformer”, et al 2019

“Efficient Content-Based Sparse Attention with Routing Transformers”, et al 2020

“Sparse Sinkhorn Attention”, et al 2020 (blog)

“BigBird: Transformers for Longer Sequences”, et al 2020 (blog; see also ETC)

Axial attention: “Axial Attention in Multidimensional Transformers”, et al 2019; et al 2018; et al 2020b; et al 20203

“Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting”, et al 2020

“OmniNet: Omnidirectional Representations from Transformers”, et al 2021

“Combiner: Full Attention Transformer with Sparse Computation Cost”, et al 2021

“Scatterbrain: Unifying Sparse and Low-rank Attention Approximation”, et al 2021

“Sparse Is Enough in Scaling Transformers”, et al 2021

Note: Several implementations are available in DeepSpeed

Global ↔︎ Local Attention

“LSRA: Lite Transformer with Long-Short Range Attention”, et al 2020a

“BlockBERT: Blockwise self-attention for long document understanding”, et al 2019

“BP-Transformer: Modeling Long-Range Context via Binary Partitioning”, et al 2019

“Longformer: The Long-Document Transformer”, et al 2020; “CD-LM: Cross-Document Language Modeling”, et al 2021; “Simple Local Attentions Remain Competitive for Long-Context Tasks”, et al 2021

“ETC: Encoding Long and Structured Data in Transformers”, et al 2020; “LongT5: Efficient Text-To-Text Transformer for Long Sequences”, et al 20214

“Conformer: Convolution-augmented Transformer for Speech Recognition”, et al 2020 ( et al 2020)

“SMITH: Beyond 512 Tokens: Siamese Multi-depth Transformer-based Hierarchical Encoder for Document Matching”, et al 2020

“Multi-scale Transformer Language Models”, et al 2020

“Hierarchical Transformers for Multi-Document Summarization”, 2019; “Hi-Transformer: Hierarchical Interactive Transformer for Efficient and Effective Long Document Modeling”, et al 2021

“Transformer-QL: A Step Towards Making Transformer Network Quadratically Large”, 2020

“Coordination Among Neural Modules Through a Shared Global Workspace”, et al 2021

“Swin Transformer: Hierarchical Vision Transformer using Shifted Windows”, et al 2021a; “Swin Transformer V2: Scaling Up Capacity and Resolution”, et al 2021b

“Hierarchical Transformers Are More Efficient Language Models”, et al 2021

“Long-Short Transformer (Transformer-LS): Efficient Transformers for Language and Vision”, et al 2021

“AdaMRA: Adaptive Multi-Resolution Attention with Linear Complexity”, et al 2021

“Fastformer: Additive Attention is All You Need”, et al 2021

“FLASH: Transformer Quality in Linear Time”, et al 2022 (see also MLP-Mixer)

“NAT: Neighborhood Attention Transformer”, et al 2022; “DiNAT: Dilated Neighborhood Attention Transformer”, 2022

Miscellaneous

Dropping components, non-trainable/randomized parts, etc.:

“Generating Wikipedia by Summarizing Long Sequences”, et al 2018 (memory compressed)

“Pay Less Attention with Lightweight and Dynamic Convolutions”, et al 2019b

“Music Transformer”, et al 2020

“Synthesizer: Rethinking Self-Attention in Transformer Models”, et al 2020

“Performer (FAVOR): Masked Language Modeling for Proteins via Linearly Scalable Long-Context Transformers”, et al 2020a (on turning Transformers into RNNs); “FAVOR+: Rethinking Attention with Performers”, et al 2020b (blog; DRL use; can be trained in constant memory); “RFA: Random Feature Attention”, et al 2020; “DPFP: Linear Transformers Are Secretly Fast Weight Memory Systems”, et al 2021; “DAFT: A Dot Product Attention Free Transformer”, et al 2021

“Nyströmformer: A Nyström-Based Algorithm for Approximating Self-Attention”, et al 2021; “Skyformer: Remodel Self-Attention with Gaussian Kernel and Nyström Method”, et al 2021

“Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing”, et al 2020

“LazyFormer: Self Attention with Lazy Update”, et al 2021

“RASP: Thinking Like Transformers”, et al 2021 (examining limitations of efficient Transformers: in terms of algorithms, what does going from n2 to n cost? What “programs” do Transformers encode?)

“Stable, Fast and Accurate: Kernelized Attention with Relative Positional Encoding”, et al 2021

“On Learning the Transformer Kernel”, et al 2021

Structured State Models (SSMs): “Combining Recurrent, Convolutional, and Continuous-time Models with Linear State-Space Layers”, et al 2021a; “S4: Efficiently Modeling Long Sequences with Structured State Spaces”, et al 2021b; “HiPPO: Recurrent Memory with Optimal Polynomial Projections”, et al 2021c

“Self-attention Does Not Need 𝒪(n2) Memory”, 2021 (does still cost 𝒪(n2) compute)

MLPs (for removing attention entirely)

Retrieval

“REALM: Retrieval-Augmented Language Model Pre-Training”, et al 2020

“MARGE: Pre-training via Paraphrasing”, et al 2020a

“RAG: Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks”, et al 2020b

“Current Limitations of Language Models: What You Need is Retrieval”, 2020

“Memorizing Transformers”, et al 2022