Dynamic Evaluation

Old neural net technique for runtime (online) learning which boosts performance.

Dynamic evaluation, or test-time finetuning, is a performance-enhancing online machine learning technique where the ML model is trained further at runtime on ‘new’ data: eg. an RNN/Transformer is benchmarked on predicting text, but in addition to making its prediction at each timestep, it takes an additional gradient descent step on the newly-observed text. (It is analogous to short-term memory neural plasticity.) Dynamic evaluation was introduced for RNNs by Mikolov et al 2010, where the continual learning reduced perplexity in predicting English, and was used in many RNNs afterwards for the best performance (cf. neural cache).
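The predict-then-update loop is easy to see on a toy model. What follows is a minimal sketch, not any paper's implementation: the bigram softmax ‘model’, the `evaluate` helper, the all-zero `pretrained` weights, and the learning rate of 0.5 are all assumptions chosen for illustration. The one detail that matters is the ordering: each token is scored with the current weights *before* the model takes a gradient step on it, so the model never sees a token before predicting it.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def evaluate(weights, tokens, lr=0.0):
    """Average next-token loss (nats) of a toy bigram softmax model.

    weights[prev][nxt] is the logit for token `nxt` following token `prev`.
    lr == 0: weights stay frozen (ordinary static evaluation).
    lr  > 0: after scoring each token, take one SGD step on it
             (dynamic evaluation).
    """
    weights = [row[:] for row in weights]  # copy: leave the caller's model untouched
    total_loss = 0.0
    for prev, nxt in zip(tokens, tokens[1:]):
        probs = softmax(weights[prev])
        total_loss += -math.log(probs[nxt])  # score first, with the current weights
        if lr > 0.0:
            # Cross-entropy gradient w.r.t. the logits is (probs - one_hot(nxt)).
            for k in range(len(probs)):
                grad = probs[k] - (1.0 if k == nxt else 0.0)
                weights[prev][k] -= lr * grad
    return total_loss / (len(tokens) - 1)

vocab_size = 3
# Hypothetical stand-in for a trained model: uniform predictions everywhere.
pretrained = [[0.0] * vocab_size for _ in range(vocab_size)]
# 'New' data containing a regularity the frozen model knows nothing about.
test_stream = [0, 1, 2] * 20

static_loss = evaluate(pretrained, test_stream, lr=0.0)
dynamic_loss = evaluate(pretrained, test_stream, lr=0.5)
print(f"static:  {static_loss:.3f} nats/token")
print(f"dynamic: {dynamic_loss:.3f} nats/token")
```

The frozen model pays the same loss on every token, while the dynamically-evaluated one gets cheaper as the stream repeats itself. In a real RNN/Transformer the update would be a backprop step through the full network on the most recent segment of text, but the control flow is the same.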

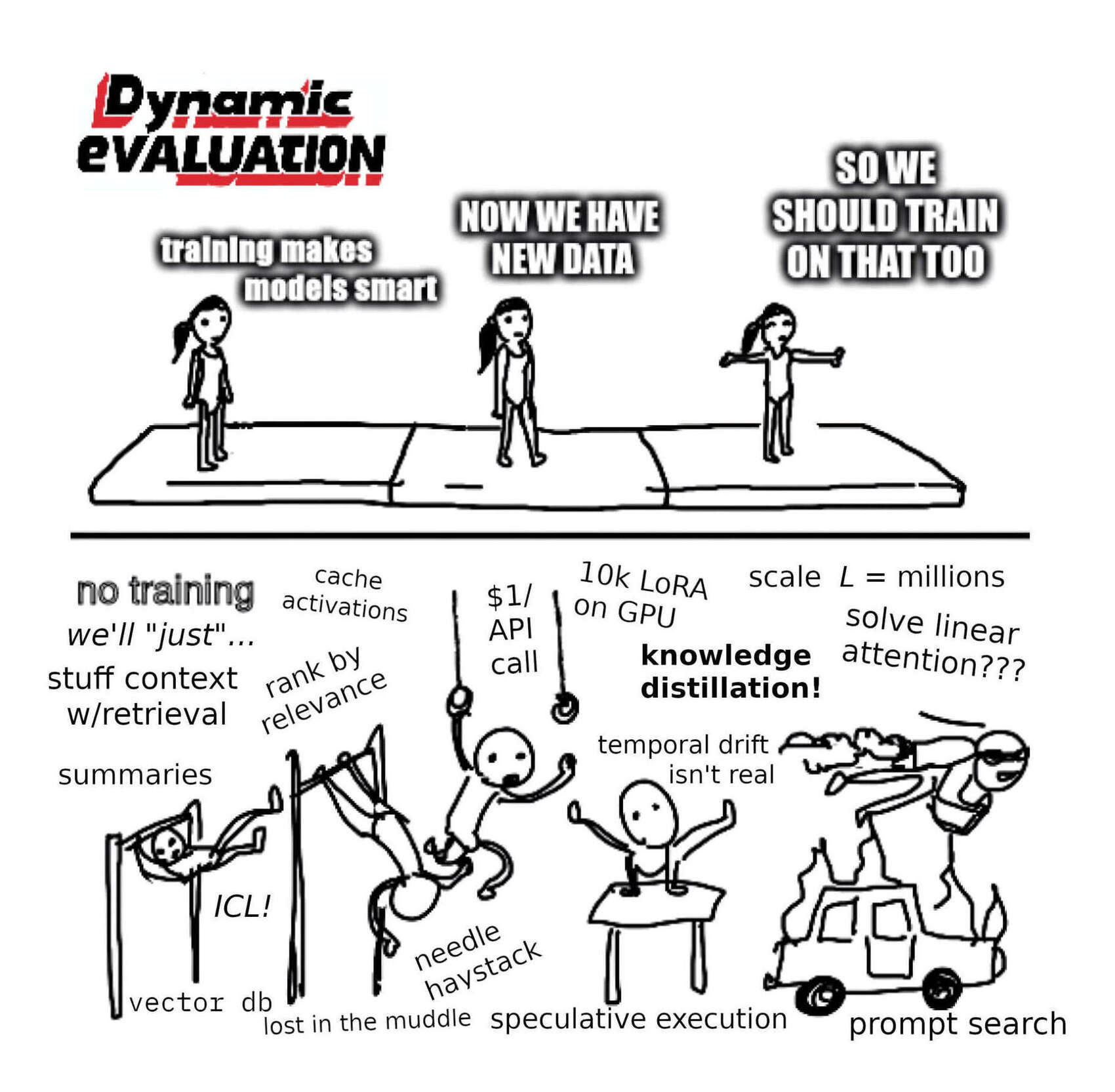

Gradient descent works well. Have you considered using it more?

Dynamic evaluation is attractive because it requires no modifications to the architecture or training: it simply does more ‘training’, which leads to greater consistency than leaving the weights frozen and relying on the hidden state (or self-attention) to do all the learning. It is especially useful when dealing with rare entities, domain shift, or personalization, and for serial tasks where the best performance is needed. It can also be augmented with retrieval methods or by adding in similar datapoints, which can teach the NN more.

Dynamic evaluation has fallen out of fashion due to an emphasis on simple-to-deploy models, proprietary cloud services, and throughput over quality; but it may be revived by local NN models, or by tasks requiring cognitive flexibility not handled by pure self-attention (ARC?).