Dual n-Back FAQ

A compendium of DNB, WM, IQ information up to 2015.

- The Argument

- Training

- Terminology

- Notes from the Author

- N-Back Training

- What’s Some Relevant Research?

- Support

- Criticism

- Meta-Analysis

- Does It Really Work?

- Non-IQ or Non-DNB Gains

- Saccading

- Sleep

- Lucid Dreaming

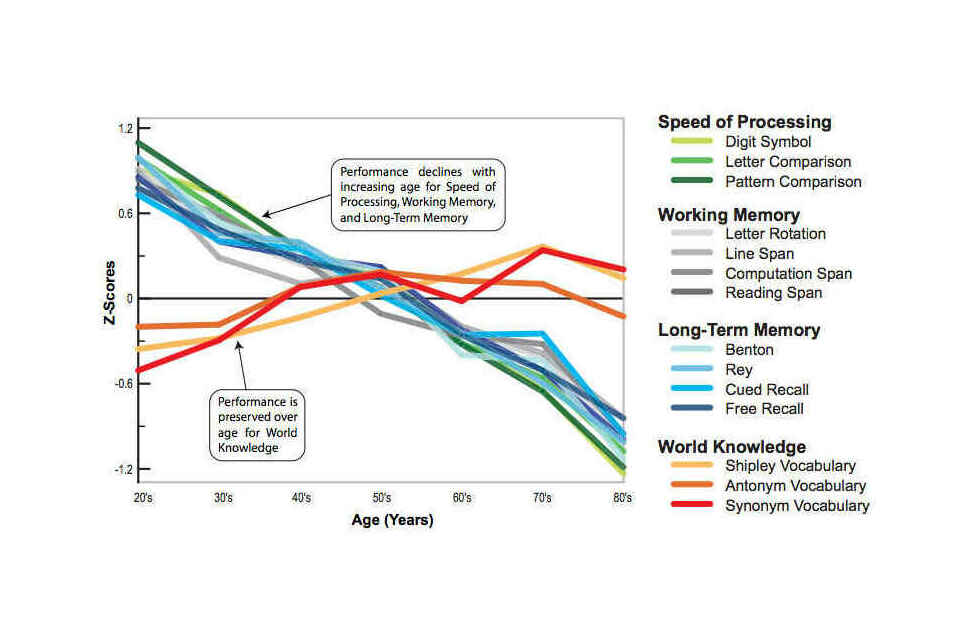

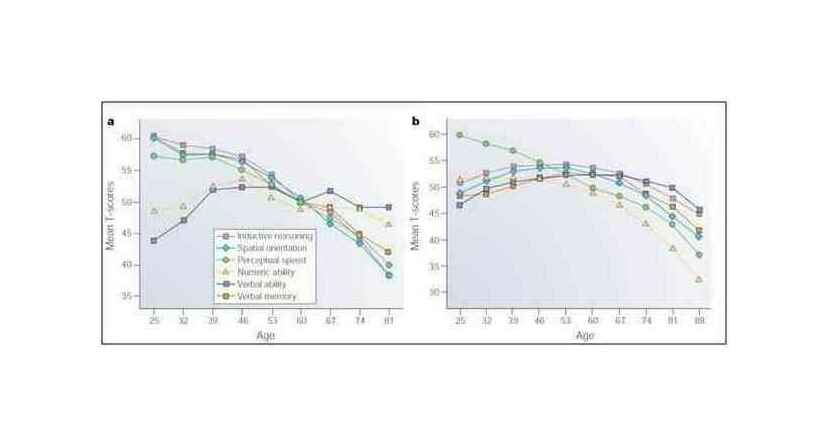

- Aging

- TODO

- Software

- What Else Can I Do?

- See Also

- Appendix

- Footnotes

- Backlinks

- Similar Links

- Bibliography

Dual n-Back is a kind of cognitive training intended to expand your working memory (WM), and hopefully your intelligence (IQ[1]).

The theory originally went that novel[2] cognitive processes tend to overlap and seem to go through one central bottleneck. As it happens, WM predicts and correlates with IQ[3] and may use the same neural networks[4], suggesting that WM might be IQ[5]. WM is known to be trainable, and so improving WM would hopefully improve IQ. And N-back is a family of tasks which stress attention and WM.

Later research found that performance and improvement on N-back seem to correlate better with IQ than with classic measures of WM like reciting lists of numbers, raising the question of whether N-back works via increasing WM or by improving self-control or improving manipulation of WM contents (rather than WM’s size) or somehow training IQ directly.[6] Performance on DNB has complicated correlations with performance on other tests of working memory or IQ, so it’s not clear what it is tapping into. (And the link between WM and performance on IQ tests has been disputed; high WM as measured by OSPAN does not correlate well with performance on hard Raven’s questions[7] and the validity of single tests of WM training has been questioned[8].)

Brain Workshop offers many modes, some far more elaborate than simple Dual N-back; no research has been done on them, so little can be said about what they are good for or what they train or what improvements they may offer; 2010 seemed to find Single N-back better than Dual N-back. Some of the more elaborate modes seem to focus heavily on shifting the correct response among various modalities - not just sound, but left/

The Argument

Working memory is important stuff for learning and also just general intelligence.[10] It’s not too hard to see why working memory could be so important. Working memory boils down to ‘how much stuff you can think about at the same time’.

Imagine a poor programmer who has suffered brain damage and has only enough working memory for 1 definition at a time. How could he write anything? To write a correct program, he needs to know simultaneously 2 things - what a variable, say, contains, and what is valid input for a program. But unfortunately, our programmer can know that the variable foo contains a string with the input, or he can know that the function processInput uses a string, but he can’t remember these 2 things simultaneously! He will deadlock forever, unsure either what to do with this foo, or unsure what exactly processInput was supposed to work on.

More seriously, working memory can be useful since it allows one to grasp more of the structure of something at any one time. Commentators on programming often write that one of the great challenges of programming (besides the challenge of accepting & dealing with the reality that a computer really is just a mindless rule-following machine), is that programming requires one to keep in mind dozens of things and circumstances - any one of which could completely bollix things up. Focus is absolutely essential. One of the characteristics of great programmers is their apparent omniscience. Obsession grants them this ability to know what they are actually doing:

“With programmers, it’s especially hard. Productivity depends on being able to juggle a lot of little details in short term memory all at once. Any kind of interruption can cause these details to come crashing down. When you resume work, you can’t remember any of the details (like local variable names you were using, or where you were up to in implementing that search algorithm) and you have to keep looking these things up, which slows you down a lot until you get back up to speed.” –Joel Spolsky, “Where do These People Get Their (Unoriginal) Ideas?”

“Several friends mentioned hackers’ ability to concentrate - their ability, as one put it, to ‘tune out everything outside their own heads.’ I’ve certainly noticed this. And I’ve heard several hackers say that after drinking even half a beer they can’t program at all. So maybe hacking does require some special ability to focus. Perhaps great hackers can load a large amount of context into their head, so that when they look at a line of code, they see not just that line but the whole program around it. John McPhee wrote that Bill Bradley’s success as a basketball player was due partly to his extraordinary peripheral vision. ‘Perfect’ eyesight means about 47° of vertical peripheral vision. Bill Bradley had 70; he could see the basket when he was looking at the floor. Maybe great hackers have some similar inborn ability. (I cheat by using a very dense language, which shrinks the court.) This could explain the disconnect over cubicles. Maybe the people in charge of facilities, not having any concentration to shatter, have no idea that working in a cubicle feels to a hacker like having one’s brain in a blender.” –Paul Graham, “Great Hackers”

It’s surprising, but bugs have a close relationship to number of lines of code - no matter whether the language is as low-level as assembler or high-level as Haskell (humorously, Norris’ number); is this because each line takes up a similar amount of working and short-term memory and there’s only so much memory to go around?[11]

The Silver Bullet

It’s not all that obvious, but just about every productivity innovation in computing is about either cutting down on how much a programmer needs to know (eg. garbage collection), or making it easier for him to shuffle things in and out of his ‘short term memory’. Why are some commentators like Jeff Atwood so focused[12] on having multiple monitors? For that matter, why are there real studies showing surprisingly large productivity boosts by simply adding a second monitor?[13] It’s not like the person is any different afterwards. And arguably multiple or larger monitors come with damaging overheads[14].

Or, why does Steve Yegge think touch-typing is one of the few skills programmers must know (along with reading)?[15] Why is Unix guru Ken Thompson’s one regret not learning typing?[16] Typing hardly seems very important - it’s what you say, not how you say it. The compiler doesn’t care if you typed the source code in at 30WPM or 120WPM, after all.

I love being able to type that without looking! It’s empowering, being able to type almost as fast as you can think. Why would you want it any other way?

The thing is, multiple monitors, touch-typing, speed-reading[17] - they’re all about making the external world part of your mind. What’s the real difference between having a type signature in your short-term memory or prominently displayed in your second monitor? What’s the real difference between writing a comment in your mind or touch-typing it as fast as you create it?

WM problems

Just some speed. Just some time. And the more visible that type signature is, the faster you can type out that comment, the larger your ‘memory’ gets. And the larger your memory is, the more intelligent/

But as great as things like garbage collection & touch-typing & multiple monitors are (I am a fan & user of the foregoing), they are still imperfect substitutes. Wouldn’t it be better if one could just improve one’s short-term/

Training

Unfortunately, in general, IQ/

…General Dreedle wants his [pilots] to spend as much time on the skeet-shooting range as the facilities and their flight schedule would allow. Shooting skeet eight hours a month was excellent training for them. It trained them to shoot skeet.

Indeed, the general history of attempts to increase IQ in any children or adults remains essentially what it was when Arthur Jensen wrote his 1969 paper “How Much Can We Boost IQ and Scholastic Achievement?”: a history of failure. The exceptions prove the rule by either applying to narrow groups with specific deficits or working only before birth, like iodization. (See also Algernon’s Law: if there were an easy fitness-increasing way to make us smarter, evolution would have already used it.)

But hope springs eternal, and there are possible exceptions. The one this FAQ focuses on is Dual N-back, and it’s a variant on an old working-memory test.

One of the nice things about N-back is that while it may or may not improve your IQ, it may help you in other ways. WM training helps alcoholics reduce their consumption[31] and increases patience in recovering stimulant addicts (cocaine & methamphetamine)[32]. The self-discipline or willpower of students correlates better with grades than even IQ[33], WM correlates with grades and lower behavioral problems[34] & WM out-predicts grades 6 years later in 5-year olds & 2 years later in older children[35]. WM training has been shown to help children with ADHD[36] and also preschoolers without ADHD[37]; 2008 found behavior improvements at a summer camp. Another intervention using a miscellany of ‘reasoning’ games with young (7-9 years old) poor children found a Forwards Digit Span (but not Backwards) and IQ gains, with no gain to the subjects playing games requiring “rapid visual detection and rapid motor responses”[38], but it’s worth remembering that IQ scores are unreliable in childhood[39] or perhaps, as an adolescent brain imaging study indicates[40], they simply are much more malleable at that point. (WM training in teenagers doesn’t seem much studied but given their issues, may help; see “Beautiful Brains” or “The Trouble With Teens”.)

There are many kinds of WM training. One review worth reading is “Does working memory training work? The promise and challenges of enhancing cognition by training working memory”, 2011; “Is Working Memory Training Effective?”, et al 2012 discusses the multiple methodological difficulties of designing WM training experiments (at least, they are difficult if you want to show genuine improvements which transfer to non-WM skills).

N-Back

The original N-back test simply asked that you remember a single stream of letters, and signal if any letters were precisely, say, 2 positions apart. ‘A S S R’ wouldn’t merit a signal, but ‘A S A R’ would since there are ‘A’ characters exactly 2 positions away from each other. The program would give you another letter, you would signal or not, and so on. This is simple enough once you understand it, but is a little hard to explain. It may be best to read the Brain Workshop tutorial, or watch a video.
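The rule described above can be written down in a few lines. This is only an illustrative sketch in Python, not code from Brain Workshop; the function name `nback_matches` is made up for the example.

```python
def nback_matches(stream, n):
    """For each position, report whether the item equals the one n positions earlier.

    The first n items can never be matches, since nothing lies n positions before them.
    """
    return [i >= n and stream[i] == stream[i - n] for i in range(len(stream))]


# The two examples from the text, with n = 2:
print(nback_matches("ASSR", 2))  # no signal anywhere
print(nback_matches("ASAR", 2))  # signal on the third letter (the second 'A')
```

A player is effectively asked to compute this list one element at a time, in their head, at the pace the program sets.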

Dual N-Back

In 2003, Susan Jaeggi and her team began fMRI studies using a variant of N-back which tried to increase the burden on each turn - remembering multiple things instead of just 1. The abstract describes the reason why:

With reference to single tasks, activation in the prefrontal cortex (PFC) commonly increases with incremental memory load, whereas for dual tasks it has been hypothesized previously that activity in the PFC decreases in the face of excessive processing demands, ie. if the capacity of the working memory’s central executive system is exceeded. However, our results show that during both single and dual tasks, prefrontal activation increases continuously as a function of memory load. An increase of prefrontal activation was observed in the dual tasks even though processing demands were excessive in the case of the most difficult condition, as indicated by behavioral accuracy measures. The hypothesis concerning the decrease in prefrontal activation could not be supported and was discussed in terms of motivation factors.[41]

In this version, called “dual N-back” (to distinguish it from the classic single N-back), one is still playing a turn-based game. In the Brain Workshop version, you are presented with a 3x3 grid in which every turn, a block appears in 1 of the 9 spaces and a letter is spoken aloud. (There are any number of variants: the NATO phonetic alphabet, piano keys, etc. And Brain Workshop has any number of modes, like ‘Arithmetic N-back’ or ‘Quintuple N-back’.)

1-Back

In 1-back, the task is to correctly answer whether the letter is the same as the previous round, and whether the position is the same as the previous round. It can be both, making 4 possible responses (position, sound, position+sound, & neither).

This stresses working memory since you need to keep in mind 4 things simultaneously: the position and letter of the previous turn, and the position and letter of the current turn (so you can compare the current letter with the old letter and the current position with the old position). Then on the next turn you need to immediately forget the old position & letter (which are now useless) and remember the new position and letter. So you are constantly remembering and forgetting and comparing.
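To make the four possible responses concrete, here is a small sketch of how the correct answer for one turn could be computed. It is an illustration under assumptions, not Brain Workshop's implementation: the `Trial` type, the 0-8 numbering of grid cells, and the function name `correct_response` are all invented for this example. The parameter `n` defaults to 1 but the same comparison works for deeper levels.

```python
from typing import NamedTuple


class Trial(NamedTuple):
    position: int  # assumed encoding: 0-8, one cell of the 3x3 grid
    letter: str    # the spoken letter


def correct_response(trials, i, n=1):
    """Return the set of modalities on turn i that match the turn n rounds earlier.

    The result is one of: set(), {"position"}, {"sound"}, {"position", "sound"}.
    """
    if i < n:
        return set()  # nothing to compare against yet
    current, earlier = trials[i], trials[i - n]
    response = set()
    if current.position == earlier.position:
        response.add("position")
    if current.letter == earlier.letter:
        response.add("sound")
    return response


trials = [Trial(0, "C"), Trial(0, "H"), Trial(4, "H"), Trial(4, "H"), Trial(7, "K")]
for i in range(len(trials)):
    print(i, sorted(correct_response(trials, i)))
```

In this made-up sequence the second turn is a position match, the third a sound match, the fourth both, and the last neither.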

2-Back

But 1-back is pretty easy. The turns come fast enough that you could easily keep the letters in your phonological loop and lighten the load on your working memory. Indeed, after 10 rounds or so of 1-back, I mastered it - I now get 100%, unless I forget for a second that it’s 1-back and not 2-back (or I simply lose my concentration completely). Most people find 1-back very easy to learn, although a bit challenging at first since the pressure is constant (games and tests usually have some slack or rest periods).

The next step up is a doozy: 2-back. In 2-back, you do the same thing as 1-back but as the name suggests, you are instead matching against 2 turns ago. So before you would be looking for repeated letters - ‘AA’ - but now you need to look for separated letters - ‘ABA’. And of course, you can’t forget so quickly, since you still need to match against something like ‘ABABA’.

2-back stresses your working memory even more, as now you are remembering 6 things, not 4: 2 turns ago, the previous turn, and the current turn - all of which have 2 salient features. At 6 items, we’re also in the mid-range of estimates for normal working memory capacity:

Working memory is generally considered to have limited capacity. The earliest quantification of the capacity limit associated with short-term memory was the “magical number seven” introduced by Miller (1956). He noticed that the memory span of young adults was around seven elements, called chunks, regardless whether the elements were digits, letters, words, or other units. Later research revealed that span does depend on the category of chunks used (eg. span is around seven for digits, around six for letters, and around five for words), and even on features of the chunks within a category….Several other factors also affect a person’s measured span, and therefore it is difficult to pin down the capacity of short-term or working memory to a number of chunks. Nonetheless, Cowan (2001) has proposed that working memory has a capacity of about four chunks in young adults (and fewer in children and old adults).

And even if there are only a few things to remember, the number of responses you have to choose between goes up exponentially with how many ‘modes’ there are, so Triple N-back has not ⅓ more possible responses than Dual N-back, but more than twice as many: if m is the number of modes, then the number of possible responses is 2^m - 1 (the -1 is there because one can do nothing in every mode, but that’s boring and requires no choice or thought), so DNB has 3 possible responses[42], while TNB has 7[43], Quadruple N-back 15[44], and Quintuple N-back 31[45]!
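The count of 2^m - 1 can be checked by brute force: a response is any non-empty subset of the modes. The sketch below enumerates them in Python; the mode names are labels chosen for the illustration, and `possible_responses` is a hypothetical helper.

```python
from itertools import combinations


def possible_responses(modes):
    """List every non-empty combination of modes a player could signal on one turn."""
    return [
        combo
        for size in range(1, len(modes) + 1)
        for combo in combinations(modes, size)
    ]


all_modes = ["position", "sound", "color", "shape", "second sound"]
for m in range(2, 6):
    responses = possible_responses(all_modes[:m])
    # The enumeration agrees with the closed form 2^m - 1.
    print(m, len(responses), 2**m - 1)

print(possible_responses(["position", "sound"]))
```

For two modes the enumeration gives exactly the three non-trivial responses: position only, sound only, and both.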

Worse, the temporal gap between elements is deeply confusing. It’s particularly bad when there’s repetition involved - if the same square is selected twice with the same letter, you might wind up forgetting both!

So 2-back is where the challenge first really manifests. After about 20 games I started to get the hang of it. (It helped to play a few games focusing only on one of the stimuli, like the letters; this helps you get used to the ‘reaching back’ of 2-back.)

Personal Reflection on Results

Have I seen any benefits yet? Not really. Thus far it’s like meditation: I haven’t seen any specific improvements, but it’s been interesting just to explore concentration - I’ve learned that my ability to focus is much less than I thought it was! It is very sobering to get 30% scores on something as trivial as 1-back and strain to reach D2B, and even more sobering to score 60% and minutes later score 20%. Besides the intrinsic interest of changing one’s brain through a simple exercise - meditation is equally interesting for how one’s mind refuses to cooperate with the simple work of meditating, and I understand that there are even vivid hallucinations at the higher levels - N-back might function as a kind of mental calisthenics. Few people exercise and stretch because they find the activities intrinsically valuable, but they serve to further some other goal; some people jog because they just enjoy running, but many more jog so they can play soccer better or live longer. I am young, and it’s good to explore these sorts of calisthenics while one has a long life ahead of one; then one can reap the most benefits.

Terminology

N-back training is sometimes referred to simply as ‘N-backing’, and participants in such training are called ‘N-backers’. Almost everyone uses the Free, featureful & portable program Brain Workshop, abbreviated “BW” (but see the software section for alternatives).

There are many variants of N-back training. A 3-letter acronym ending in ‘B’ specifies one of the possibilities. For example, ‘D2B’ and ‘D6B’ both refer to a dual N-back task, but in the former the depth of recall is 2 turns, while in the latter one must remember back 6 rounds; the ‘D’, for ‘Dual’, indicates that each round presents 2 stimuli (usually the position of the square, and a spoken letter).

But one can add further stimuli: spoken letter, position of square, and color of square. That would be ‘Triple N-back’, and so one might speak of how one is doing on ‘T4B’.

One can go further. Spoken letter, position, color, and geometric shape. This would be ‘Quad N-back’, so one might discuss one’s performance on ‘Q3B’. (It’s unclear how to compare the various modes, but it seems to be much harder to go from D2B to T3B than to go from D2B to D3B.)

Past QNB, there is Pentuple N-back (PNB) which was added in Brain Workshop 4.7 (video demonstration). The 5th modality is added by a second audio channel - that is, now sounds are in stereo.
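The acronym scheme is regular enough to parse mechanically, which can be handy when tabulating scores from forum posts. The following is a minimal sketch; `parse_nback_acronym` and the `MODE_COUNTS` table are names invented here, and the scheme covers only the D/T/Q/P prefixes mentioned in this section.

```python
import re

# Prefix letter -> number of simultaneous stimuli, as described in this section.
MODE_COUNTS = {"D": 2, "T": 3, "Q": 4, "P": 5}


def parse_nback_acronym(acronym):
    """Split an acronym such as 'D2B' into (number of modes, depth of recall)."""
    match = re.fullmatch(r"([DTQP])(\d+)B", acronym)
    if match is None:
        raise ValueError(f"not an N-back acronym: {acronym!r}")
    prefix, depth = match.groups()
    return MODE_COUNTS[prefix], int(depth)


for name in ["D2B", "D6B", "T4B", "Q3B"]:
    print(name, parse_nback_acronym(name))
```

So 'D6B' parses as 2 stimuli per round matched 6 rounds back, and 'Q3B' as 4 stimuli matched 3 rounds back.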

Other abbreviations are in common use: ‘WM’ for ‘working memory’, ‘gf’ for ‘fluid intelligence’, and ‘g’ for the general intelligence factor measured by IQ tests.

N-Back Training

Should I Do Multiple Daily Sessions, or Just One?

Most users seem to go for one long N-back session, pointing out that it exercises one’s focus. Others do one session in the morning and one in the evening so they can focus better on each one. There is some scientific support for the idea that evening sessions are better than morning sessions, though; see 2008 on how practice before bedtime was more effective than after waking up.

If you break up sessions into more than 2, you’re probably wasting time due to overhead, and may not be getting enough exercise in each session to really strain yourself like you need to.

Strategies

The simplest mental strategy, and perhaps the most common, is to mentally think of a list, and forget the last one each round, remembering the newest in its place. This begins to break down on higher levels - if one is repeating the list mentally, the repetition can just take too long.
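The list strategy is essentially a fixed-length queue. As a rough analogy only (the mind is not a `deque`, and the function name here is made up), it could be sketched in Python like this:

```python
from collections import deque


def play_with_list_strategy(stream, n):
    """Simulate the 'mental list' strategy for single N-back.

    Keep only the last n items; on each turn compare the new item against the
    oldest remembered one, then forget that oldest item and remember the new one.
    """
    remembered = deque(maxlen=n)  # the mental list, at most n items long
    signals = []
    for item in stream:
        is_match = len(remembered) == n and remembered[0] == item
        signals.append(is_match)
        remembered.append(item)  # a full deque silently drops its oldest entry
    return signals


print(play_with_list_strategy("ABABA", 2))
```

The analogy also hints at why the strategy strains at higher levels: the list to be rehearsed between turns grows with n, while the time between turns does not.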

Surcer writes up a list of strategies for different levels in his “My System, let’s share strategies” thread.

Are Strategies Good or Bad?

People frequently ask and discuss whether they should use some sort of strategy, and if so, what.

A number of N-backers adopt an ‘intuition’ strategy. Rather than explicitly rehearsing sequences of letters (‘f-up, h-middle; f-up, h-middle; g-down, f-up…’), they simply think very hard and wait for a feeling that they should press ‘a’ (audio match), or ‘l’ (location match). Some, like SwedishChef can be quite vociferous about it:

The challenges are in helping people understand that dual-n-back is NOT about remembering n number of visual and auditory stimuli. It’s about developing a new mental process that intuitively recognizes when it has seen or heard a stimuli n times ago.

Initially, most students of dual n-back want to remember n items as fast as they can so they can conquer the dual-n-back hill. They use their own already developed techniques to help them remember. They may try to hold the images in their head mentally and review them every time a new image is added and say the sounds out loud and review the sounds every time a new sound is added. This is NOT what we want. We want the brain to learn a new process that intuitively recognizes if an item and sound was shown 3 back or 4 back. It’s sort of like playing a new type of musical instrument.

I’ve helped some students on the site try to understand this. It’s not about how much you can remember, it’s about learning a new process. In theory, this new process translates into a better working memory, which helps you make connections better and faster.

Other N-backers think that intuition can’t work, or at least doesn’t very well:

I don’t believe that much in the “intuitive” method. I mean, sure, you can intuitively remember you heard the same letter or saw the square at the same position a few times ago, but I fail to see how you can “feel” it was exactly 6 or 7 times ago without some kind of “active” remembering. –Gaël DEEST

I totally agree with Gaël about the intuitive method not holding much water…For me a lot of times the intuitive method can be totally unreliable. You’ll be doing 5-back one game and a few games later your failing miserably at 3-back..your score all over the place. Plus, intuitive-wise, it’s best to play the same n-back level over and over because then you train your intuition…and that doesn’t seem right. –MikeM (same thread)

Few N-backers have systematically tracked intuitive versus strategic playing; DarkAlrx reports on his blog the results of his experiment, and while he considers them positive, others find them inconclusive, or like Pheonexia, even unfavorable for the intuitive approach:

Looking at your graphs and the overall drop in your performance, I think it’s clear that intuitive doesn’t work. On your score sheet, the first picture, using the intuitive method over 38 days of TNB training in 44 days your average n-back increased by less than .25. You were performing much better before. With your neurogenesis experiment, your average n-back actually decreased.

Jaeggi herself was more moderate in ~2008:

I would NOT recommend you [train the visual and auditory task separately] if you want to train the dual-task (the one we used in our study). The reason is that the combination of both modalities is an entirely different task than doing both separately! If you do the task separately, I assume you use some “rehearsal strategies”, eg. you repeat the letters or positions for yourself. In the dual-task version however, these strategies might be more difficult to apply (since you have to do 2 things simultaneously…), and that is exactly what we want… We don’t want to train strategies, we want to train processes. Processes that then might help you in the performance of other, non-trained tasks (and that is our ultimate goal). So, it is not important to reach a 7- or 8-back… It is important to fully focus your attention on the task as well as possible.

I can assure you, it is a very tough training regimen…. You can’t divert your attention even 1 second (I’m sure you have noticed…). But eventually, you will see that you get better at it and maybe you notice that you are better able to concentrate on certain things, to remember things more easily, etc. (hopefully).

(Unfortunately, doubt has been cast on this advice by the apparent effectiveness of single n-back in 2010. If single (visual/

this is a question i am being asked a lot and unfortunately, i don’t really know whether i can help with that. i can only tell you what we tell (or rather not tell) our participants and what they tell us. so, first of all, we don’t tell people at all what strategy to use - it is up to them. thing is, there are some people that tell us what you describe above, i.e. some of them tell us that it works best if they don’t use a strategy at all and just “let the squares/letters flow by”. but of course, many participants also use more conscious strategies like rehearsing or grouping items together. but again - we let people chose their strategies themselves! ref

But it may make no difference. Even if you are engaged in a complex mnemonic-based strategy, you’re still working your memory. Strategies may not work; quoting from the 2008 paper:

By this account, one reason for having obtained transfer between working memory and measures of gf is that our training procedure may have facilitated the ability to control attention. This ability would come about because the constant updating of memory representations with the presentation of each new stimulus requires the engagement of mechanisms to shift attention. Also, our training task discourages the development of simple task-specific strategies that can proceed in the absence of controlled allocation of attention.

Even if they do, they may not be a good idea; quoting from 2010:

We also proposed that it is important that participants only minimally learn task-specific strategies in order to prevent specific skill acquisition. We think that besides the transfer to matrix reasoning, the improvement in the near transfer measure provides additional evidence that the participants trained on task-underlying processes rather than relying on material-specific strategies.

Hopefully even if a trick lets you jump from 3-back to 5-back, Brain Workshop will just keep escalating the difficulty until you are challenged again. It’s not the level you reach, but the work you do.
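The escalation idea can be pictured as a simple rule applied after every session. The sketch below is an assumption-laden illustration, not Brain Workshop's actual logic: the function `next_level` and the 80% / 50% thresholds are hypothetical values picked for the example.

```python
def next_level(n, score, advance_at=0.8, fallback_at=0.5):
    """Pick the N-back level for the next session from the last session's score.

    advance_at and fallback_at are assumed thresholds (fractions correct),
    chosen only to illustrate the idea of staying near one's limit.
    """
    if score >= advance_at:
        return n + 1              # too easy: escalate
    if score < fallback_at and n > 1:
        return n - 1              # too hard: step back, but never below 1-back
    return n                      # challenging but manageable: stay put


level = 3
for score in [0.85, 0.90, 0.40, 0.65]:
    level = next_level(level, score)
    print(level)
```

Under any rule of this shape, a trick that inflates scores simply moves the player up until the scores fall back into the middle band, which is the point made above.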

And the Flashing Right/wrong Feedback?

A matter of preference, although those in favor of disabling the visual feedback (SHOW_FEEDBACK = False) seem to be slightly more vocal or numerous. Brain Twister apparently doesn’t give feedback. Jaeggi says:

the gaming literature also disagrees on this issue - there are different ways to think about this: whereas feedback after each trial gives you immediate feedback whether you did right or wrong, it can also be distracting as you are constantly monitoring (and evaluating) your performance. we decided that we wanted people to fully and maximally concentrate on the task itself and thus chose the approach to only give feedback at the end of the run. however, we have newer versions of the task for kids in which we give some sort of feedback (points) for each trial. thus - i can’t tell you what the optimal way is - i guess there are interindividual differences and preferences as well.

Jonathan Toomim writes:

When I was doing visual psychophysics research, I heard from my labmates that this question has been investigated empirically (at least in the context of visual psychophysics), and that the consensus in the field is that using feedback reduces immediate performance but improves learning rates. I haven’t looked up the research to confirm their opinion, but it sounds plausible to me. I would also expect it to apply to Brain Workshop. The idea, as I see it, is that feedback reduces performance because, when you get an answer wrong and you know it, your brain goes into an introspective mode to analyze the reason for the error and (hopefully) correct it, but while in this mode your brain will be distracted from the task at hand and will be more likely to miss subsequent trials.

How Can I Do Better on N-Back?

Focus harder. Play more. Sleep well, and eat healthily. Use natural lighting[57]. Space out practice. The less stressed you are, the better you can do.

Spacing

This study compared a high intensity working memory training (45 minutes, 4 times per week for 4 weeks) with a distributed training (45 minutes, 2 times per week for 8 weeks) in middle-aged, healthy adults…Our results indicate that the distributed training led to increased performance in all cognitive domains when compared to the high intensity training and the control group without training. The most significant differences revealed by interaction contrasts were found for verbal and visual working memory, verbal short-term memory and mental speed.

This is reminiscent of sleep’s involvement in other forms of memory and cognitive change, and 2008.
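It is worth spelling out that the two schedules in the quoted study involve the same total amount of training, so the comparison isolates spacing rather than dose. A quick check of the arithmetic, using only the figures from the quote (the helper name `total_minutes` is made up):

```python
def total_minutes(minutes_per_session, sessions_per_week, weeks):
    """Total training time for a fixed weekly schedule."""
    return minutes_per_session * sessions_per_week * weeks


high_intensity = total_minutes(45, sessions_per_week=4, weeks=4)
distributed = total_minutes(45, sessions_per_week=2, weeks=8)

print(high_intensity, distributed)            # both schedules, in minutes
print(high_intensity / 60, distributed / 60)  # the same totals, in hours
```

Both come to 720 minutes (12 hours); only the spread over calendar time differs.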

Hardcore

Curtis Warren has noticed that when he underwent a 4-day routine of practicing more than 4 hours a day, he jumped an entire level on even quad N-back[58]:

For example, over the past week I have been trying a new training routine. My goal was to increase my intelligence as quickly as possible. To that end, over the past 4 days I’ve done a total of roughly 360 sessions @ 2 seconds per trial (≈360 minutes of training). I had to rest on Wednesday, and I’m resting again today (I only plan on doing about 40 trials today). But I intend to finish off the week by doing 100 sessions on Saturday and another 100 on Sunday. Or more, if I can manage it.

But he cautions us that besides being a considerable time investment, it may only work for him:

The point is, while I can say without a doubt that this schedule has been effective for me, it might not be effective for you. Are the benefits worth the amount of work needed? Will you even notice an improvement? Is this healthy? These are all factors which depend entirely upon the individual actually doing the training.

Raman started DNB training, and in his first 30 days, he “took breaks every 5 days or so, and was doing about 20-30 session each day and n-back wise I made good gains (2 → 7 touching 9 on the way).”; he kept a journal on the mailing list about the experience with daily updates.

Alas, neither Raman nor Warren took an IQ or digit-span test before starting, so they can only report DNB level increases & subjective assessments.

The research does suggest that diminishing returns do not set in with training regimes of 10 or 15 minutes a day; for example, 2011 trained 4-year-olds in WM exercises, gf (NVR) exercises, or both:

…These analyses took into account that the groups differed in the amount of training received, full dose for NVR or WM groups or half dose for the CB group (Table 3). Even though the pattern is not consistent across all tests (see Figure 2), this is interpreted as confirmation of the linear dose effect that was expected to be seen. Our results suggest that the amount of transfer to non-trained tasks within the trained construct was roughly proportionate to the amount of training on that construct. A similar finding, with transfer proportional to amount of training, was reported by et al 2008. This has possible implications for the design of future cognitive training paradigms and suggests that the training should be intensive enough to lead to significant transfer and that training more than one construct does not entail any advantages in itself. The training effect presumably reaches asymptote, but where this occurs is for future studies to determine. It is probably important to ensure that participants spend enough time on each task in order to see clinically-significant transfer, which may be difficult when increasing the number of tasks being trained. This may be one of the explanations for the lack of transfer seen in the Owen et al. study (2010) (training six tasks in 10 minutes).

Plateauing, Or, Am I Wasting Time If I Can’t Get past 4-Back?

Some people start n-backing with great vigor and rapidly ascend levels until suddenly they stop improving and panic, wondering if something is wrong with them. Not at all! Reaching a high level is a good thing, and if one does so in just a few weeks, all the more impressive since most members take much longer than, say, 2 weeks to reach good scores on D4B. In fact, if you look at the reports in the Group survey, most reports are of plateauing at D4B or D5B months in.

The crucial thing about N-back is just that you are stressing your working memory, that’s all. The actual level doesn’t matter very much, just whether you can barely manage it; it is somewhat like lifting weights, in that regard. From 2008:

The finding that the transfer to gf remained even after taking the specific training effect into account seems to be counterintuitive, especially because the specific training effect is also related to training time. The reason for this capacity might be that participants with a very high level of n at the end of the training period may have developed very task specific strategies, which obviously boosts n-back performance, but may prevent transfer because these strategies remain too task-specific (5, 20). The averaged n-back level in the last session is therefore not critical to predicting a gain in gf; rather, it seems that working at the capacity limit promotes transfer to gf.

Mailing list members report benefits even if they have plateaued at 3 or 4-back; see the benefits section.

One commonly reported tactic to break a plateau is to deliberately advance a level (or increase modalities), and practice hard on that extra difficult task, the idea being that this will spur adaptation and make one capable of the lower level.

Do Breaks Undo My Work?

Some people have wondered if not n-backing for a day/

Multiple group members have pointed to long gaps in their training, sometimes multiple months up to a year, which did not change their scores significantly (immediately after the break, scores may dip a level or a few percentage points in accuracy, but quickly rise to the old level). Some members have ceased n-backing for 2 or 3 years, and found their scores dropped by only 2-4 levels - far from 1 or 2-back. (Pontus Granström, on the other hand, took a break for several months and fell for a long period from D8B-D9B to D6B-D7B; he speculates it might reflect a lack of motivation.) huhwhat/

I’ve been training with n-back on and off, mostly off, for the past few years. I started about 3 years ago and was able to get up to 9-n back, but on average I would be doing around 6 or 7 n back. Then I took a break for a few years. Now after coming back, even though I have had my fair share of partying, boxing, light drugs, even polyphasic sleep, on my first few tries I was able to get back up to 5-6, and a week into it I am back at getting up to 9 n back.

This anecdotal evidence is supported by at least one WM-training letter, 2010:

Figure 1b illustrates the degree to which training transferred to an ostensibly different (and untrained) measure of verbal working memory compared to a no-contact control group. Not only did training significantly increase verbal working memory, but these gains persisted 3 months following the cessation of training!

Similarly, 2008 found WM training gains which were durable over more than a year:

The authors investigated immediate training gains, transfer effects, and 18-month maintenance after 5 weeks of computer-based training in updating of information in working memory in young and older subjects. Trained young and older adults improved significantly more than controls on the criterion task (letter memory), and these gains were maintained 18 months later. Transfer effects were in general limited and restricted to the young participants, who showed transfer to an untrained task that required updating (3-back)…

I Heard 12-Back Is Possible

Some users have reported being able to go all the way up to 12-back; Ashirgo regularly plays at D13B, but the highest at other modes seems to be T9B and Q6B.

Ashirgo offers up her 8-point scheme as to how to accomplish such feats:

1. ’Be focused at all cost. The fluid intelligence itself is sometimes called “the strength of focus”.

2. You had better not rehearse the last position/sound. It will eventually decrease your performance! I mean the rehearsal “step by step”: it will slow you down and distract. The only rehearsal allowed should be nearly unconscious and “effortless” (you will soon realize its meaning :)

3. Both points 1 & 2 thus imply that you must be focused on the most current stimulus as strongly as you can. Nevertheless, you cannot forget about the previous stimuli. How to do that? You should hold the image of them (image, picture, drawing, whatever you like) in your mind. Notice that you still do not rehearse anything that way.

4. Consider dividing the stream of data (n) on smaller parts. 6-back will be then two 3-back, for instance.

5. Follow square with your eyes as it changes its position.

6. Just turn on the Jaeggi mode with all the options to ensure your task is closest to the original version.

7. Consider doing more than 20 trials. I am on my way to do no less than 30 today. It may also help.

8. You may lower the difficulty by reducing the fall-back and advance levels from >75 and =<90 to 70 and 85 respectively (for instance).’
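The final tip above, about lowering the fall-back and advance thresholds, can be illustrated with a minimal sketch of an adaptive level rule. This is not Brain Workshop's actual code: the function name `next_level`, its parameters, and the simple one-session rule (advance at or above the advance threshold, fall back below the fall-back threshold) are assumptions made for illustration, and the real program may apply additional conditions.

```python
def next_level(level, score_pct, advance_at=90, fall_back_below=75, min_level=1):
    """Return the n-back level for the next session (hypothetical rule).

    level           -- current n-back level (e.g. 4 for D4B)
    score_pct       -- percent correct in the session just finished
    advance_at      -- assumed threshold: advance when score_pct >= this
    fall_back_below -- assumed threshold: fall back when score_pct < this
    """
    if score_pct >= advance_at:
        return level + 1
    if score_pct < fall_back_below:
        return max(min_level, level - 1)
    return level


# With the stricter thresholds, a score of 87% keeps the player at 4-back;
# with the eased thresholds (85/70) the same score advances to 5-back.
strict = next_level(4, 87)
eased = next_level(4, 87, advance_at=85, fall_back_below=70)
print(strict, eased)
```

Under these assumptions, easing the thresholds both promotes the player sooner and makes demotion rarer (a 72% session drops a level under 90/75 but not under 85/70), which is why it makes the task feel easier at a given level.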

What’s Some Relevant Research?

Training WM tasks has yielded a literature of mixed results - for every positive, there’s a negative, it seems. The following sections of positive and null results illustrate that, as do the papers themselves; from 2011:

However, there are some studies using several WM tasks to train that have also shown transfer effects to reasoning tasks (Klingberg, Fernell, Olesen, Johnson, Gustafsson, Dahlström, Gillberg, Forssberg & Westerberg, 2005; Klingberg, Forssberg & Westerberg, 2002), while other WM training studies have failed to show such transfer (Dahlin, Neely, Larsson, Backman & Nyberg, 2008; Holmes, Gathercole, Place, Dunning, Hilton & Elliott, 2009; Thorell, Lindqvist, Bergman Nutley, Bohlin & Klingberg, 2009). Thus, it is still unclear under which conditions effects of WM training transfer to gf.

Other intervention studies have included training of attention or executive functions. Rueda and colleagues trained attention in a sample of 4- and 6-year-olds and found significant gains in intelligence (as measured with the Kaufman Brief Intelligence Test) in the 4-year-olds but only a tendency in the group of 6-year-olds (Rueda, Rothbart, McCandliss, Saccomanno & Posner, 2005). A large training study with 11,430 participants revealed practically no transfer after a 6-week intervention (10 min/day, 3 days a week) of a broader range of tasks including reasoning and planning or memory, visuo-spatial skills, mathematics and attention (Owen, Hampshire, Grahn, Stenton, Dajani, Burns, Howard & Ballard, 2010). However, this study lacked control in sample selection and compliance. In summary, it is still an open question to what extent gf can be improved by targeted training.

Working memory training including variants on dual n-back has been shown to physically change/

Physical changes have been linked to WM training and n-backing. For example, Olesen PJ, Westerberg H, Klingberg T (2004) Increased prefrontal and parietal activity after training of working memory. Nat Neuroscience 7:75-79; about this study, Kuriyama writes:

“ et al 2004 presented progressive evidence obtained by functional magnetic resonance imaging that repetitive training improves spatial WM performance [both accuracy and response time (RT)] associated with increased cortical activity in the middle frontal gyrus and the superior and inferior parietal cortices. Such a finding suggests that training-induced improvement in WM performance could be based on neural plasticity, similar to that for other skill-learning characteristics.”

2007, “Changes in cortical activity after training of working memory—a single-subject analysis”:

“…Practice on the WM tasks gradually improved performance and this effect lasted several months. The effect of practice also generalized to improve performance on a non-trained WM task and a reasoning task. After training, WM-related brain activity was significantly increased in the middle and inferior frontal gyrus. The changes in activity were not due to activations of any additional area that was not activated before training. Instead, the changes could best be described by small increases in the extent of the area of activated cortex. The effect of training of WM is thus in several respects similar to the changes in the functional map observed in primate studies of skill learning, although the physiological effect in WM training is located in the prefrontal association cortex.”

Executive functions, including working memory and inhibition, are of central importance to much of human behavior. Interventions intended to improve executive functions might therefore serve an important purpose. Previous studies show that working memory can be improved by training, but it is unknown if this also holds for inhibition, and whether it is possible to train executive functions in preschoolers. In the present study, preschool children received computerized training of either visuo-spatial working memory or inhibition for 5 weeks. An active control group played commercially available computer games, and a passive control group took part in only pre- and posttesting. Children trained on working memory improved significantly on trained tasks; they showed training effects on non-trained tests of spatial and verbal working memory, as well as transfer effects to attention. Children trained on inhibition showed a significant improvement over time on two out of three trained task paradigms, but no significant improvements relative to the control groups on tasks measuring working memory or attention. In neither of the two interventions were there effects on non-trained inhibitory tasks. The results suggest that working memory training can have significant effects also among preschool children. The finding that inhibition could not be improved by either one of the two training programs might be due to the particular training program used in the present study or possibly indicate that executive functions differ in how easily they can be improved by training, which in turn might relate to differences in their underlying psychological and neural processes.

“Training, maturation, and genetic influences on the development of executive attention”, et al 2005:

A neural network underlying attentional control involves the anterior cingulate in addition to lateral prefrontal areas. An important development of this network occurs between 3 and 7 years of age. We have examined the efficiency of attentional networks across age and after 5 days of attention training (experimental group) compared with different types of no training (control groups) in 4-year-old and 6-year-old children. Strong improvement in executive attention and intelligence was found from ages 4 to 6 years. Both 4- and 6-year-olds showed more mature performance after the training than did the control groups. This finding applies to behavioral scores of the executive attention network as measured by the attention network test, event-related potentials recorded from the scalp during attention network test performance, and intelligence test scores. We also documented the role of the temperamental factor of effortful control and the DAT1 gene in individual differences in attention. Overall, our data suggest that the executive attention network appears to develop under strong genetic control, but that it is subject to educational interventions during development.

Behavioral findings indicate that the core executive functions of inhibition and working memory are closely linked, and neuroimaging studies indicate overlap between their neural correlates. There has not, however, been a comprehensive study, including several inhibition tasks and several working memory tasks, performed by the same subjects. In the present study, 11 healthy adult subjects completed separate blocks of 3 inhibition tasks (a stop task, a go/no-go task and a flanker task), and 2 working memory tasks (one spatial and one verbal). Activation common to all 5 tasks was identified in the right inferior frontal gyrus, and, at a lower threshold, also the right middle frontal gyrus and right parietal regions (BA 40 and BA 7). Left inferior frontal regions of interest (ROIs) showed a significant conjunction between all tasks except the flanker task. The present study could not pinpoint the specific function of each common region, but the parietal region identified here has previously been consistently related to working memory storage and the right inferior frontal gyrus has been associated with inhibition in both lesion and imaging studies. These results support the notion that inhibitory and working memory tasks involve common neural components, which may provide a neural basis for the interrelationship between the two systems.

Recent functional neuroimaging evidence suggests a bottleneck between learning new information and remembering old information. In two behavioral experiments and one functional MRI (fMRI) experiment, we tested the hypothesis that learning and remembering compete when both processes happen within a brief period of time. In the first behavioral experiment, participants intentionally remembered old words displayed in the foreground, while incidentally learning new scenes displayed in the background. In line with a memory competition, we found that remembering old information was associated with impaired learning of new information. We replicated this finding in a subsequent fMRI experiment, which showed that this behavioral effect was coupled with a suppression of learning-related activity in visual and medial temporal areas. Moreover, the fMRI experiment provided evidence that left mid-ventrolateral prefrontal cortex is involved in resolving the memory competition, possibly by facilitating rapid switching between learning and remembering. Critically, a follow-up behavioral experiment in which the background scenes were replaced with a visual target detection task provided indications that the competition between learning and remembering was not merely due to attention. This study not only provides novel insight into our capacity to learn and remember, but also clarifies the neural mechanisms underlying flexible behavior.

(There’s also a worthwhile blog article on this one: “Training The Mind: Transfer Across Tasks Requiring Interference Resolution”.)

“How distractible are you? The answer may lie in your working memory capacity”

Jennifer C. McVay, Michael J. Kane (2009). “Conducting the train of thought: Working memory capacity, goal neglect, and mind wandering in an executive-control task”. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35 (1), 196-204 DOI: 10.1037/a0014104:

On the basis of the executive-attention theory of working memory capacity (WMC; eg. M. J. Kane, A. R. A. Conway, D. Z. Hambrick, & R. W. Engle, 2007), the authors tested the relations among WMC, mind wandering, and goal neglect in a sustained attention to response task (SART; a go/no-go task). In 3 SART versions, making conceptual versus perceptual processing demands, subjects periodically indicated their thought content when probed following rare no-go targets. SART processing demands did not affect mind-wandering rates, but mind-wandering rates varied with WMC and predicted goal-neglect errors in the task; furthermore, mind-wandering rates partially mediated the WMC-SART relation, indicating that WMC-related differences in goal neglect were due, in part, to variation in the control of conscious thought.

“Working memory capacity and its relation to general intelligence”; Andrew R.A. Conway et al; TRENDS in Cognitive Sciences Vol.7 No.12, December 2003

Several recent latent variable analyses suggest that (working memory capacity) accounts for at least one-third and perhaps as much as one-half of the variance in (intelligence). What seems to be important about WM span tasks is that they require the active maintenance of information in the face of concurrent processing and interference and therefore recruit an executive attention-control mechanism to combat interference. Furthermore, this ability seems to be mediated by portions of the prefrontal cortex.
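To put the "one-third to one-half of the variance" figure in more familiar terms, a share of variance explained can be converted into a correlation coefficient by taking its square root. The short sketch below does that arithmetic; it assumes a single predictor (so that variance explained equals r squared), and the function name is hypothetical rather than anything taken from the paper.

```python
import math


def variance_share_to_correlation(r_squared):
    """Convert a share of variance explained (r^2) into a correlation r.

    Assumes one predictor, so that variance explained equals r squared.
    """
    return math.sqrt(r_squared)


low = variance_share_to_correlation(1 / 3)   # one-third of the variance
high = variance_share_to_correlation(1 / 2)  # one-half of the variance
print(f"{low:.2f} to {high:.2f}")  # prints "0.58 to 0.71"
```

So, under that assumption, the quoted range corresponds to a latent correlation between working memory capacity and intelligence of roughly 0.58 to 0.71.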

Support

2005

“Capacity Limitations in Human Cognition: Behavioral and Biological Contributions”; Jaeggi thesis:

…Experiment 6 and 7 finally tackle the issue, whether capacity limitations are trait-like, ie. fixed, or whether it is be possible to extend these limitations with training and whether generalized effects on other domains can be observed. In the last section, all the findings are integrated and discussed, and further issues remaining to be investigated are pointed out.

…In this experiment [6], the effects of a 10-day training of an adaptive version of an n-back dual task were studied. The adaptive version should be very directly depending on the actual performance of the participant: Not being too easy, but also not too difficult; always providing a sense of achievement in the participant in order to keep the motivation high. Comparing pre and post measures, effects on the task itself were evaluated, but also effects on other WM measures, and on a measure of fluid intelligence.

…As stated before, this study [7] was conducted in order to replicate and extend the findings of Experiment 6: I was primarily interested to see whether an asymptotic curve regarding performance would be reached after nearly twice of the training sessions used in Experiment 6, and further, whether generalized and differential effects on various cognitive tasks could be obtained with this training. Therefore, more tasks were included compared to Experiment 6, covering many aspects of WM (ie. verbal tasks, visuospatial tasks), executive functions, as well as control tasks not used in Experiment 6 in order to investigate whether the WM training has a selective effect on tasks which are related to the concept of WM and executive functions with no effect on these control tasks. With respect to fluid intelligence, a more appropriate task than the APM, ie. the ‘Bochumer Matrizentest’ (BOMAT; Hossiep, Turck, & Hasella, 1999) was used, which has the advantage that full parallel-versions are available and that the task was explicitly developed in order not to yield ceiling effects in student samples. The experiment was carried out together with Martin Buschkuehl and Daniela Blaser; the latter writing her Master thesis on the topic.

2008

“Improving fluid intelligence with training on working memory”, et al 2008 (supplement; all the data in 2005 was used in this as well); this article was widely covered (eg. Science Daily’s “Brain-Training To Improve Memory Boosts Fluid Intelligence” or Wired’s “Forget Brain Age: Researchers Develop Software That Makes You Smarter”) and sparked most people’s interest in the topic. The abstract:

Fluid intelligence (gf) refers to the ability to reason and to solve new problems independently of previously acquired knowledge. gf is critical for a wide variety of cognitive tasks, and it is considered one of the most important factors in learning. Moreover, gf is closely related to professional and educational success, especially in complex and demanding environments. Although performance on tests of gf can be improved through direct practice on the tests themselves, there is no evidence that training on any other regimen yields increased gf in adults. Furthermore, there is a long history of research into cognitive training showing that, although performance on trained tasks can increase dramatically, transfer of this learning to other tasks remains poor. Here, we present evidence for transfer from training on a demanding working memory task to measures of gf. This transfer results even though the trained task is entirely different from the intelligence test itself. Furthermore, we demonstrate that the extent of gain in intelligence critically depends on the amount of training: the more training, the more improvement in gf. That is, the training effect is dosage-dependent. Thus, in contrast to many previous studies, we conclude that it is possible to improve gf without practicing the testing tasks themselves, opening a wide range of applications.

Brain Workshop includes a special ‘Jaeggi mode’ which replicates almost exactly the settings described for the “Brain Twister” software used in the study.

No study is definitive, of course, but 2008 is still one of the major studies that must be cited in any DNB discussion. There are some issues - not as many subjects as one would like, and the researchers (quoted in the Wired article) obviously don’t know if the WM or gf gains are durable; more technical issues like the administered gf IQ tests being speeded and thus possibly reduced in validity have been raised by Moody and others.

2009

“Study on Improving Fluid Intelligence through Cognitive Training System Based on Gabor Stimulus”, 2009 First International Conference on Information Science and Engineering, abstract:

General fluid intelligence (gf) is a human ability to reason and solve new problems independently of previously acquired knowledge and experience. It is considered one of the most important factors in learning. One of the issues which academic people concentrates on is whether gf of adults can be improved. According to the Dual N-back working memory theory and the characteristics of visual perceptual learning, this paper put forward cognitive training pattern based on Gabor stimuli. A total of 20 undergraduate students at 24 years old participated in the experiment, with ten training sessions for ten days. Through using Raven’s Standard Progressive Matrices as the evaluation method to get and analyze the experimental results, it was proved that training pattern can improve fluid intelligence of adults. This will promote a wide range of applications in the field of adult intellectual education.

Discussion and criticism of this Chinese6061 paper took place in 2 threads; the SPM was administered in 25 minutes, which while not as fast as 2008, is still not the normal length. An additional anomaly is that according to the final graph, the control group’s IQ dropped massively in the post-test (driving much of the improvement). As part of my meta-analysis, I tried to contact the 4 authors in May, June, July & September 2012; they eventually replied with data.

Polar (June 2009)

A group member, polar, conducted a small experiment at his university where he was a student; his results seemed to show an improvement. As polar would be the first to admit, the attrition in subjects (few to begin with), relatively short time of training and whatnot make the power of his study weak.

2010

“The relationship between n-back performance and matrix reasoning - implications for training and transfer”, Jaeggi et al (coded as Jaeggi2 in meta-analysis); abstract:

…In the first study, we demonstrated that dual and single n-back task performances are approximately equally correlated with performance on two different tasks measuring gf, whereas the correlation with a task assessing working memory capacity was smaller. Based on these results, the second study was aimed on testing the hypothesis that training on a single n-back task yields the same improvement in gf as training on a dual n-back task, but that there should be less transfer to working memory capacity. We trained two groups of students for four weeks with either a single or a dual n-back intervention. We investigated transfer effects on working memory capacity and gf comparing the two training groups’ performance to controls who received no training of any kind. Our results showed that both training groups improved more on gf than controls, thereby replicating and extending our prior results.

The 2 studies measured gf using Raven’s APM and the BOMAT. In both studies, the tests were administered speeded to 10 or 15 minutes as in 2008. The experimental groups saw average gains of 1 or 2 additional correct answers on the BOMAT and APM. It’s worth noting that the Single N-Back was done with a visual modality (and the DNB with the standard visual & audio).

Followup work:

et al 2012 trained audio WM and found no transfer to visual WM tasks; unfortunately, they did not measure any far transfer tasks like RAPM/BOMAT.

2012 reports n = 47, experimentals trained on single n-back & controls on “combined verbal tasks Definetime and Who wants to be a millionaire (Millionaire)”; no improvements on “STM span and attention, short term auditory memory span and divided attention, and WM as operationalised through the Woodcock-Johnson III: Tests of cognitive abilities (WJ-III)”.

Studer-2012

The second study’s data was reused for a Big Five personality factor analysis in Studer-Luethi, Jaeggi, et al 2012, “Influence of neuroticism and conscientiousness on working memory training outcome”.62

The lack of n-back score correlation with WM score seems in line with an earlier study; “Working Memory, Attention Control, and the N-Back Task: A Question of Construct Validity”:

…Participants also completed a verbal WM span task (operation span task) and a marker test of general fluid intelligence (Gf; Ravens Advanced Progressive Matrices Test; J. C. Raven, J. E. Raven, & J. H. Court, 1998). N-back and WM span correlated weakly, suggesting they do not reflect primarily a single construct; moreover, both accounted for independent variance in gf. N-back has face validity as a WM task, but it does not demonstrate convergent validity with at least 1 established WM measure.

2010

“Does training to increase working memory capacity improve fluid intelligence?”:

The current study was successful in replicating Jaeggi et al.’s (2008) results. However, the current study also observed improvements in scores on the Raven’s Advanced Progressive Matrices for participants who completed a variation of the dual n-back task or a short-term memory task training program. Participants’ scores improved significantly for only two of the four tests of gf, which raises the issue of whether the tests measure the construct gf exclusively, as defined by Cattell (1963), or whether they may be sensitive to other factors. The concern is whether the training is actually improving gf or if the training is improving attentional control and/or visuospatial skills, which improves performance on specific tests of gf. The findings are discussed in terms of implications for conceptualizing and assessing gf.

The 136 participants were split among the experimental groups and the control group, with 25-28 subjects in each. Visual n-back improved more than audio n-back; the control group was a passive control group (they did nothing but served as controls for test-retest effects).

2013

“Improved matrix reasoning is limited to training on tasks with a visuospatial component”, 2013:

Recent studies (eg. et al 2008, 2010) have provided evidence that scores on tests of fluid intelligence can be improved by having participants complete a four week training program using the dual n-back task. The dual n-back task is a working memory task that presents auditory and visual stimuli simultaneously. The primary goal of our study was to determine whether a visuospatial component is required in the training program for participants to experience gains in tests of fluid intelligence. We had participants complete variations of the dual n-back task or a short-term memory task as training. Participants were assessed with four tests of fluid intelligence and four cognitive tests. We were successful in corroborating Jaeggi et al.’s results, however, improvements in scores were observed on only two out of four tests of fluid intelligence for participants who completed the dual n-back task, the visual n-back task, or a short-term memory task training program. Our results raise the issue of whether the tests measure the construct of fluid intelligence exclusively, or whether they may be sensitive to other factors. The findings are discussed in terms of implications for conceptualizing and assessing fluid intelligence…The data in the current paper was part of Clayton Stephenson’s doctoral dissertation.

2011

Jaeggi, Buschkuehl, 2011 “Short-term & long-term benefits of cognitive training” (coded as Jaeggi3 in the meta-analysis); the abstract:

We trained elementary and middle school children by means of a videogame-like working memory task. We found that only children who considerably improved on the training task showed a performance increase on untrained fluid intelligence tasks. This improvement was larger than the improvement of a control group who trained on a knowledge-based task that did not engage working memory; further, this differential pattern remained intact even after a 3-mo hiatus from training. We conclude that cognitive training can be effective and long-lasting, but that there are limiting factors that must be considered to evaluate the effects of this training, one of which is individual differences in training performance. We propose that future research should not investigate whether cognitive training works, but rather should determine what training regimens and what training conditions result in the best transfer effects, investigate the underlying neural and cognitive mechanisms, and finally, investigate for whom cognitive training is most useful.

(This paper is not to be confused with the 2011 poster, “Working Memory Training and Transfer to gf. Evidence for Domain Specificity?”, et al 2011.)

It is worth noting that the study used Single N-back (visual). Unlike 2008, “despite the experimental group’s clear training effect, we observed no significant group × test session interaction on transfer to the measures of gf” (so perhaps the training was long enough for subjects to hit their ceilings). The group which did n-back could be split, based on final IQ & n-back scores, into 2 groups; interestingly, “Inspection of n-back training performance revealed that there were no group differences in the first 3 wk of training; thus, it seems that group differences emerge more clearly over time [first 3 wk: t(30) < 1; P = ns; last week: t(16) = 3.00; P < 0.01] (Fig. 3).” 3 weeks is ~21 days, or >19 days (the longest period in 2008). It’s also worth noting that 2011 seems to avoid Moody’s most cogent criticism, the speeding of the IQ tests; from the paper’s “Material and Methods” section:

We assessed matrix reasoning with two different tasks, the Test of Nonverbal Intelligence (TONI) (23) and Raven’s Standard Progressive Matrices (SPM) (24). Parallel versions were used for the pre, post-, and follow-up test sessions in counterbalanced order. For the TONI, we used the standard procedure (45 items, five practice items; untimed), whereas for the SPM, we used a shortened version (split into odd and even items; 29 items per version; two practice items; timed to 10 min after completion of the practice items. Note that virtually all of the children completed this task within the given timeframe).

The IQ results were, specifically, the control group averaged 15.33/

UoM produced a video with Jonides; 2011 has also been discussed in mainstream media. From the Wall Street Journal’s “Boot Camp for Boosting IQ”:

…when several dozen elementary- and middle-school kids from the Detroit area used this exercise for 15 minutes a day, many showed significant gains on a widely used intelligence test. Most impressive, perhaps, is that these gains persisted for three months, even though the children had stopped training…these schoolchildren showed gains in fluid intelligence roughly equal to five IQ points after one month of training…There are two important caveats to this research. The first is that not every kid showed such dramatic improvements after training. Initial evidence suggests that children who failed to increase their fluid intelligence found the exercise too difficult or boring and thus didn’t fully engage with the training.

From Discover’s blogs, “Can intelligence be boosted by a simple task? For some…”, come additional details:

She [Jaeggi] recruited 62 children, aged between seven and ten. While half of them simply learned some basic general knowledge questions, the other half trained with a cheerful computerised n-back task. They saw a stream of images where a target object appeared in one of six locations - say, a frog in a lily pond. They had to press a button if the frog was in the same place as it was two images ago, forcing them to store a continuously updated stream of images in their minds. If the children got better at the task, this gap increased so they had to keep more images in their heads. If they struggled, the gap was shortened.

Before and after the training sessions, all the children did two reasoning tests designed to measure their fluid intelligence. At first, the results looked disappointing. On average, the n-back children didn’t become any better at these tests than their peers who studied the knowledge questions. But according to Jaeggi, that’s because some of them didn’t take to the training. When she divided the children according to how much they improved at the n-back task, she saw that those who showed the most progress also improved in fluid intelligence. The others did not. Best of all, these benefits lasted for 3 months after the training. That’s a first for this type of study, although Jaeggi herself says that the effect is “not robust.” Over this time period, all the children showed improvements in their fluid intelligence, “probably [as] a result of the natural course of development”.

…Philip Ackerman, who studies learning and brain training at the University of Illinois, says, “I am concerned about the small sample, especially after splitting the groups on the basis of their performance improvements.” He has a point - the group that showed big improvements in the n-back training only included 18 children….Why did some of the children benefit from the training while others did not? Perhaps they were simply uninterested in the task, no matter how colorfully it was dressed up with storks and vampires. In Jaeggi’s earlier study with adults, every volunteer signed up themselves and were “intrinsically motivated to participate and train.” By contrast, the kids in this latest study were signed up by their parents and teachers, and some might only have continued because they were told to do so.

It’s also possible that the changing difficulty of the game was frustrating for some of the children. Jaeggi says, “The children who did not benefit from the training found the working memory intervention too effortful and difficult, were easily frustrated, and became disengaged. This makes sense when you think of physical training - if you don’t try and really run and just walk instead, you won’t improve your cardiovascular fitness.” Indeed, a recent study on IQ testing which found that they reflect motivation as well as intelligence.
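The adaptive task described in the Discover excerpt (respond when the current location matches the one n images back, with the gap growing or shrinking with performance) can be sketched roughly as follows. This is only an illustration: the function names and the 90%/70% thresholds are hypothetical, since the excerpt does not say what rule the study used to raise or lower the level.

```python
def score_block(stimuli, responses, n):
    """Return the fraction of trials answered correctly in one block.

    stimuli   -- list of locations (e.g. 0-5 for six positions)
    responses -- list of booleans: did the subject press the button?
    n         -- the current gap ("n images ago")
    """
    correct = 0
    for i, pressed in enumerate(responses):
        # A trial is a target only if there is a stimulus n steps back
        # and it appeared in the same location.
        is_target = i >= n and stimuli[i] == stimuli[i - n]
        if pressed == is_target:
            correct += 1
    return correct / len(stimuli)


def next_level(n, accuracy, raise_at=0.9, lower_below=0.7):
    """Adapt the gap: harder after a good block, easier after a poor one.

    The thresholds are assumptions made for illustration only.
    """
    if accuracy >= raise_at:
        return n + 1
    if accuracy < lower_below:
        return max(1, n - 1)
    return n


if __name__ == "__main__":
    stimuli = [2, 5, 2, 5, 1, 5]
    perfect = [False, False, True, True, False, True]
    accuracy = score_block(stimuli, perfect, n=2)
    print(accuracy, next_level(2, accuracy))
```

A child who never presses the button still gets the non-target trials right, so in practice a scoring rule would probably weight hits and false alarms separately; the sketch ignores that.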

Et Al 2011

This study investigated whether brain-training (working memory [WM] training) improves cognitive functions beyond the training task (transfer effects), especially regarding the control of emotional material since it constitutes much of the information we process daily. Forty-five participants received WM training using either emotional or neutral material, or an undemanding control task. WM training, regardless of training material, led to transfer gains on another WM task and in fluid intelligence. However, only brain-training with emotional material yielded transferable gains to improved control over affective information on an emotional Stroop task. The data support the reality of transferable benefits of demanding WM training and suggest that transferable gains across to affective contexts require training with material congruent to those contexts. These findings constitute preliminary evidence that intensive cognitively demanding brain-training can improve not only our abstract problem-solving capacity, but also ameliorate cognitive control processes (eg. decision-making) in our daily emotive environments.

Notes:

There seems to be an IQ increase of around one question on the RPM (but there’s an oddity with the control group which they think they correct for64)

The RPM does not seem to have been administered speeded65

The emotional aspect seems to be just replacing the ‘neutral’ existing stimuli like colors or letters or piano keys with more loaded ones66, nor does this tweak seem to change the DNB/WM/IQ scores of that group67

Their later study “Training the Emotional Brain: Improving Affective Control through Emotional Working Memory Training” did not use any measure of fluid intelligence.

Et Al 2011

“Relating individual differences in short-term memory-derived EEG to cognitive training effects” (coded as Kundu1 in the meta-analysis); 3 controls (Tetris), 3 experimentals (Brain Workshop) for 1000 minutes. RAPM showed a slight increase. The sample is extremely small, and may form part of the data for et al 2012.

2011

“The Effect Of Training Working Memory And Attention On Pupils’ Fluid Intelligence” (abstract), 2011; original encrypted file (8M), screenshots of all pages in thesis (20M); discussion

Appears to have found IQ gains, but no dose-response effect, using a no-contact control group. Difficult to understand: translation assistance from Chinese speakers would be appreciated.

2012

“Working memory training: Improving intelligence - Changing brain activity”, 2012:

The main objectives of the study were: to investigate whether training on working memory (WM) could improve fluid intelligence, and to investigate the effects WM training had on neuroelectric (electroencephalography - EEG) and hemodynamic (near-infrared spectroscopy - NIRS) patterns of brain activity. In a parallel group experimental design, respondents of the working memory group after 30 h of training significantly increased performance on all tests of fluid intelligence. By contrast, respondents of the active control group (participating in a 30-h communication training course) showed no improvements in performance. The influence of WM training on patterns of neuroelectric brain activity was most pronounced in the theta and alpha bands. Theta and lower-1 alpha band synchronization was accompanied by increased lower-2 and upper alpha desynchronization. The hemodynamic patterns of brain activity after the training changed from higher right hemispheric activation to a balanced activity of both frontal areas. The neuroelectric as well as hemodynamic patterns of brain activity suggest that the training influenced WM maintenance functions as well as processes directed by the central executive. The changes in upper alpha band desynchronization could further indicate that processes related to long term memory were also influenced.

14 experimental & 15 controls; the testing was a little unusual:

Respondents solved four test-batteries, for which the procedure was the same during pre- and post-testing. The same test-batteries were used on pre- and post-testing. The digit span subtest (WAIS-R) was administered separately, according to the directions in the test manual (Wechsler, 1981). The other three tests (RAPM, verbal analogies and spatial rotation) were administered while the respondents’ EEG and NIRS measures were recorded.

The RAPM was based on a modified version of Raven’s progressive matrices (Raven, 1990), a widely used and well established test of fluid intelligence (Sternberg, Ferrari, Clinkenbeard, & Grigorenko, 1996). The correlation between this modified version of RAPM and WAIS-R was r = .56, (p < .05, n = 97). Similar correlations of the order of 0.40-0.75, were also reported for the standard version of RAPM (Court & Raven, 1995). Therefore it can be concluded that the modified application of the RAPM did not significantly alter its metric characteristics. Used were 50 test items - 25 easy (Advanced Progressive Matrices Set I - 12 items and the B Set of the Colored Progressive Matrices), and 25 difficult items (Advanced Progressive Matrices Set II, items 12-36). Participants saw a figural matrix with the lower right entry missing. They had to determine which of the four options fitted into the missing space. The tasks were presented on a computer screen (positioned about 80-100 cm in front of the respondent), at fixed 10 or 14 s interstimulus intervals. They were exposed for 6 s (easy) or 10 s (difficult) following a 2-s interval, when a cross was presented. During this time the participants were instructed to press a button on a response pad (1-4) which indicated their answer.
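The presentation schedule quoted above puts a hard ceiling on testing time, which is easy to tally. A minimal sketch of the arithmetic, assuming (this pairing is inferred from the 6s/10s exposure times, not stated outright) that easy items ran on the 10s interval and difficult items on the 14s interval:

```python
# Item counts and intervals taken from the quoted methods section;
# pairing easy=10s and difficult=14s is an assumption.
EASY_ITEMS = 25
HARD_ITEMS = 25
EASY_INTERVAL_S = 10
HARD_INTERVAL_S = 14


def max_minutes(items, interval_s):
    """Upper bound on testing time, in minutes, for a fixed-interval block."""
    return items * interval_s / 60


hard_minutes = max_minutes(HARD_ITEMS, HARD_INTERVAL_S)
easy_minutes = max_minutes(EASY_ITEMS, EASY_INTERVAL_S)
total_minutes = hard_minutes + easy_minutes

print(f"difficult items: {hard_minutes:.2f} min")
print(f"easy items:      {easy_minutes:.2f} min")
print(f"all 50 items:    {total_minutes:.2f} min")
```

Under that assumption the 25 difficult items take at most 350s, and all 50 items at most 600s.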

At 25 hard questions and at most 14s a question, that implies the hard RAPM items were administered in at most ~5.8 minutes (25 × 14s = 350s). They comment:

To further investigate possible influences of task difficulty on the observed performance gains on the RAPM a GLM for repeated measures test/retest × easy/difficult-items × group (WM, AC) was conducted. The analysis showed only a significant interaction effect for the test/retest condition and type of training used in the two groups (F(1, 27) = 4.47; p < .05; partial eta² = .15). A GLM conducted for the WM group showed only a significant test/retest effect (F(1, 13) = 30.11; p < .05; partial eta² = .70), but no interaction between the test/retest conditions and the difficulty level (F(1, 13) = 1.79; p = .17 not-significant; partial eta² = .12). As can be seen in Fig. 4 after WM training an about equal increase in respondents’ performance for the easy and difficult test items was observed. On the other hand, no increases in performance, neither for the easy nor for the difficult test items, in respondents of the active control group were observed (F(1, 14) = .47; p = .50 not-significant; partial eta² = .03).

(Even on the “easy” questions, no group performed better than 76% accuracy.)
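The effect sizes in the quoted analysis can be sanity-checked from the F statistics alone, since for a single F test partial eta-squared equals F·df_effect / (F·df_effect + df_error). A small sketch (the function name is mine; the F values and degrees of freedom are the ones quoted above):

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta-squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (description, F, df_effect, df_error, value reported in the paper)
quoted = [
    ("test/retest x group interaction", 4.47, 1, 27, 0.15),
    ("WM group test/retest effect", 30.11, 1, 13, 0.70),
    ("WM group test/retest x difficulty", 1.79, 1, 13, 0.12),
    ("active control test/retest effect", 0.47, 1, 14, 0.03),
]

for label, f_value, df1, df2, reported in quoted:
    recomputed = partial_eta_squared(f_value, df1, df2)
    print(f"{label}: recomputed {recomputed:.2f}, reported {reported:.2f}")
```

Three of the four values reproduce to two decimals; the interaction term comes out at about .14 rather than the reported .15, which is plausibly just rounding in the published F.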

2013

A number of recent studies have provided evidence that training working memory can lead to improvements in fluid intelligence, working memory span, and performance on other untrained tasks. However, in addition to a number of mixed results, many of these studies suffer from design limitations. The aim of the present study was to experimentally investigate the effects of a dual n-back working memory training task on a variety of measures of fluid intelligence, reasoning, working memory span, and attentional control. The present study compared a training group with an active control group (a placebo group), using appropriate methods that overcame the limitations of previous studies. The dual n-back training group improved more than the active control group on some, but not all outcome measures. Differential improvement for the training group was observed on fluid intelligence, working memory capacity, and response times on conflict trials in the Stroop task. In addition, individual differences in pre-training fluid intelligence scores and initial performance on the training task explain some of the variance in outcome measure improvements. We discuss these results in the context of previous studies, and suggest that additional work is needed in order to further understand the variables responsible for transfer from training.

Et Al 2013

“The role of individual differences in cognitive training and transfer”:

Working memory (WM) training has recently become a topic of intense interest and controversy. Although several recent studies have reported near- and far-transfer effects as a result of training WM-related skills, others have failed to show far transfer, suggesting that generalization effects are elusive. Also, many of the earlier intervention attempts have been criticized on methodological grounds. The present study resolves some of the methodological limitations of previous studies and also considers individual differences as potential explanations for the differing transfer effects across studies. We recruited intrinsically motivated participants and assessed their need for cognition (NFC; Cacioppo & Petty Journal of Personality and Social Psychology 42:116-131, 1982) and their implicit theories of intelligence (Dweck, 1999) prior to training. We assessed the efficacy of two interventions by comparing participants’ improvements on a battery of fluid intelligence tests against those of an active control group. We observed that transfer to a composite measure of fluid reasoning resulted from both WM interventions. In addition, we uncovered factors that contributed to training success, including motivation, need for cognition, preexisting ability, and implicit theories about intelligence.

This is quite a complex study, with a lot of analysis I don’t think I entirely understand. The quick summary is table 2 on pg10: the DNB group fell on APM, rose on BOMAT (neither statistically-significant); the SNB group increased on APM & BOMAT (but only BOMAT was statistically-significant).

Michael J. Kane has written some critical comments on the results.

2013

“Near and Far Transfer of Working Memory Training Related Gains in Healthy Adults”, 2013:

Enhancing intelligence through working memory training is an attractive concept, particularly for middle-aged adults. However, investigations of working memory training benefits are limited to younger or older adults, and results are inconsistent. This study investigates working memory training in middle age-range adults. Fifty healthy adults, aged 30-60, completed measures of working memory, processing speed, and fluid intelligence before and after a 5-week web-based working memory (experimental) or processing speed (active control) training program. Baseline intelligence and personality were measured as potential individual characteristics associated with change. Improved performance on working memory and processing speed tasks were experienced by both groups; however, only the working memory training group improved in fluid intelligence. Agreeableness emerged as a personality factor associated with working memory training related change. Albeit limited by power, findings suggest that dual n-back working memory training not only enhances working memory but also fluid intelligence in middle-aged healthy adults.

The personality correlations seem to differ from those reported by Studer-Luethi.

Et Al 2013