A/B testing long-form readability on Gwern.net

A log of experiments done on the site design, intended to render pages more readable, focusing on the challenge of testing a static site, page width, fonts, plugins, and effects of advertising.

- Background

- Problems With “Conversion” Metric

- Ideas For Testing

- Testing

- Resumption: ABalytics

max-widthRedux- Fonts

- Line Height

- Null Test

- Text & Background Color

- List Symbol And Font-Size

- Blockquote Formatting

- Font Size & ToC Background

- Section Header Capitalization

- ToC Formatting

- BeeLine Reader Text Highlighting

- Floating Footnotes

- Indented Paragraphs

- Sidebar Elements

- Moving Sidebar Metadata Into Page

- CSE

- Banner Ad Effect on Total Traffic

- Deep Reinforcement Learning

- Indentation + Left-Justified Text

- Appendix

To gain some statistical & web development experience and to improve my readers’ experiences, I have been running a series of CSS A/B tests since June 201214ya. As expected, most do not show any meaningful difference.

Background

https://www.google.com/analytics/siteopt/exptlist?account=18912926

http://www.pqinternet.com/196.htm

https://support.google.com/websiteoptimizer/bin/answer.py?answer=61203 “Experiment with site-wide changes”

https://support.google.com/websiteoptimizer/bin/answer.py?answer=117911 “Working with global headers”

https://support.google.com/websiteoptimizer/bin/answer.py?answer=61427

https://support.google.com/websiteoptimizer/bin/answer.py?answer=188090 “Varying page and element styles” - testing with inline CSS overriding the defaults

https://stackoverflow.com/questions/2993199/with-google-website-optimizers-multivariate-testing-can-i-vary-multiple-css-cl

http://www.xemion.com/blog/the-secret-to-painless-google-website-optimizer-70.html

https://stackoverflow.com/tags/google-website-optimizer/hot

Problems With “Conversion” Metric

https://support.google.com/websiteoptimizer/bin/answer.py?answer=74345 “Time on page as a conversion goal” - every page converts, by using a timeout (mine is 40 seconds). Problem: dichotomizing a continuous variable into a single binary variable destroys a massive amount of information. This is well-known in the statistical and psychological literature (eg. Mac et al 2002) but I’ll illustrate further with some information-theoretical observations.

According to my Analytics, the mean reading time (time on page) is 1:47 and the maximum bracket, hit by 1% of viewers, is 1,801 seconds, and the range 1–1,801 takes <10.8 bits to encode (log2(1801) → 10.81), hence each page view could be represented by <10.8 bits (less since reading time is so highly skewed). But if we dichotomize, then we learn simply that ~14% of readers will read for 40 seconds, hence each reader carries not 6 bits, nor 1 bit (if 50% read that long) but closer to 2/3 of a bit:

p=0.14; q=1-p; (-p*log2(p) - q*log2(q))

# [1] 0.5842This isn’t even an efficient dichotomization: we could improve the fractional bit to 1 bit if we could somehow dichotomize at 50% of readers:

p=0.50; q=1-p; (-p*log2(p) - q*log2(q))

# [1] 1But unfortunately, simply lowering the timeout will have minimal returns as Analytics also reports that 82% of reader spend 0-10 seconds on pages. So we are stuck with a severe loss.

Ideas For Testing

CSS

differences from readability

every declaration in default.CSS?test the suggestions in https://code.google.com/p/better-web-readability-project/ http://www.vcarrer.com/200917ya/05/how-we-read-on-web-and-how-can-we.html

Testing

max-width

CSS-3 property: set how wide the page will be in pixels if unlimited screen real estate is available. I noticed some people complained that pages were ‘too wide’ and this made it hard to read, which apparently is a real thing since lines are supposed to fit in eye saccades. So I tossed in 800px, 900px, 1,300px, and 1,400px to the first A/B test.

<script>

function utmx_section(){}function utmx(){}

(function(){var k='0520977997',d=document,l=d.location,c=d.cookie;function f(n){

if(c){var i=c.indexOf(n+'=');if(i>-1){var j=c.indexOf(';',i);return escape(c.substring(i+n.

length+1,j<0?c.length:j))}}}var x=f('__utmx'),xx=f('__utmxx'),h=l.hash;

d.write('<sc'+'ript src="'+

'http'+(l.protocol=='https:'?'s://ssl':'://www')+'.google-analytics.com'

+'/siteopt.js?v=1&utmxkey='+k+'&utmx='+(x?x:'')+'&utmxx='+(xx?xx:'')+'&utmxtime='

+new Date().valueOf()+(h?'&utmxhash='+escape(h.substr(1)):'')+

'" type="text/javascript" charset="utf-8"></sc'+'ript>')})();

</script>

<script type="text/javascript">

var _gaq = _gaq || [];

_gaq.push(['gwo._setAccount', 'UA-18912926-2']);

_gaq.push(['gwo._trackPageview', '/0520977997/test']);

(function() {

var ga = document.createElement('script'); ga.type = 'text/javascript'; ga.async = true;

ga.src = ('https:' == document.location.protocol ? 'https://ssl' : 'http://www')

+ '.google-analytics.com/ga.js';

var s = document.getElementsByTagName('script')[0]; s.parentNode.insertBefore(ga, s);

})();

</script>

<script type="text/javascript">

var _gaq = _gaq || [];

_gaq.push(['gwo._setAccount', 'UA-18912926-2']);

setTimeout(function() {

_gaq.push(['gwo._trackPageview', '/0520977997/goal']);

}, 40000);

(function() {

var ga = document.createElement('script'); ga.type = 'text/javascript'; ga.async = true;

ga.src = ('https:' == document.location.protocol ? 'https://ssl' : 'http://www') +

'.google-analytics.com/ga.js';

var s = document.getElementsByTagName('script')[0]; s.parentNode.insertBefore(ga, s);

})();

</script>

<script>utmx_section("max width")</script>

<style>

body { max-width: 800px; }

</style>

</noscript>It ran from mid-June to 2012-08-01. Unfortunately, I cannot be more specific: on 1 August, Google deleted Website Optimizer and told everyone to use ‘Experiments’ in Google Analytics - and deleted all my information. The graph over time, the exact numbers - all gone. So this is from memory.

The results were initially very promising: ‘conversion’ was defined as staying on a page for 40 seconds (I reasoned that this meant someone was actually reading the page), and had a base of around 70% of readers converting. With a few hundred hits, 900px converted at 10-20% more than the default! I was ecstatic. So when it began falling, I was only a little bothered (one had to expect some regression to the mean since the results were too good to be true). But as the hits increased into the low thousands, the effect kept shrinking all the way down to 0.4% improved conversion. At some points, 1,300px actually exceeded 900px.

The second distressing thing was that Google’s estimated chance of a particular intervention beating the default (which I believe is a Bonferroni-corrected p-value), did not increase! Even as each version received 20,000 hits, the chance stubbornly bounced around the 70-90% range for 900px and 1,300px. This remained true all the way to the bitter end. At the end, each version had racked up 93,000 hits and still was in the 80% decile. Wow.

Ironically, I was warned at the beginning about both of these possible behaviors by a paper I read on large-scale corporate A/B testing: http://www.exp-platform.com/Documents/puzzlingOutcomesInControlledExperiments.pdf and http://www.exp-platform.com/Documents/controlledExperimentDMKD.pdf and http://www.exp-platform.com/Documents/201313ya%20controlledExperimentsAtScale.pdf It covered at length how many apparent trends simply evaporated, but it also covered later a peculiar phenomenon where A/B tests did not converge even after being run on ungodly amounts of data because the standard deviations kept changing (the user composition kept shifting and rendering previous data more uncertain). And it’s a general phenomenon that even for large correlations, the trend will bounce around a lot before it stabilizes (2013).

Oy vey! When I discovered Google had deleted my results, I decided to simply switch to 900px. Running a new test would not provide any better answers.

TODO

how about a blue background? see https://www.overcomingbias.com/201016ya/06/near-far-summary.html for more design ideas

table striping

tbody tr:hover td { background-color: #f5f5f5;}

tbody tr:nth-child(odd) td { background-color: #f9f9f9;}link decoration

a { color: black; text-decoration: underline;}

a { color:#005AF2; text-decoration:none; }Resumption: ABalytics

In March 201313ya, I decided to give A/B testing another whack. Google Analytics Experiment did not seem to have improved and the commercial services continued to charge unacceptable prices, so I gave the Google Analytics custom variable integration approach another trying using ABalytics. The usual puzzling, debugging, and frustration of combining so many disparate technologies (HTML and CSS and JS and Google Analytics) aside, it seemed to work on my test page. The current downside seems to be that the ABalytics approach may be fragile, and the UI in GA is awful (you have to do the statistics yourself).

max-width Redux

The test case is to rerun the max-width test and finish it.

Implementation

The exact changes:

Sun Mar 17 11:25:39 EDT 2013 gwern@gwern.net

* default.html: setup ABalytics a/b testing https://github.com/danmaz74/ABalytics

(hope this doesn't break anything...)

addfile ./static/js/abalytics.js

hunk ./static/js/abalytics.js 1

...

hunk ./static/template/default.html 28

+ <div class="maxwidth_class1"></div>

+

...

- <noscript><p>Enable JavaScript for Disqus comments</p></noscript>

+ window.onload = function() {

+ ABalytics.applyHtml();

+ };

+ </script>

hunk ./static/template/default.html 119

+

+ ABalytics.init({

+ maxwidth: [

+ {

+ name: '800',

+ "maxwidth_class1": "<style>body { max-width: 800px; }</style>",

+ "maxwidth_class2": ""

+ },

+ {

+ name: '900',

+ "maxwidth_class1": "<style>body { max-width: 900px; }</style>",

+ "maxwidth_class2": ""

+ },

+ {

+ name: '1100',

+ "maxwidth_class1": "<style>body { max-width: 1100px; }</style>",

+ "maxwidth_class2": ""

+ },

+ {

+ name: '1200',

+ "maxwidth_class1": "<style>body { max-width: 1200px; }</style>",

+ "maxwidth_class2": ""

+ },

+ {

+ name: '1300',

+ "maxwidth_class1": "<style>body { max-width: 1300px; }</style>",

+ "maxwidth_class2": ""

+ },

+ {

+ name: '1400',

+ "maxwidth_class1": "<style>body { max-width: 1400px; }</style>",

+ "maxwidth_class2": ""

+ }

+ ],

+ }, _gaq);

+Results

I wound up the test on 2013-04-17 with the following results:

Width (px) |

Visits |

Conversion |

|---|---|---|

1,100 |

18,164 |

14.49% |

1,300 |

18,071 |

14.28% |

1,200 |

18,150 |

13.99% |

800 |

18,599 |

13.94% |

900 |

18,419 |

13.78% |

1,400 |

18,378 |

13.68% |

109,772 |

14.03% |

Analysis

1,100px is close to my original A/B test indicating 1,000px was the leading candidate, so that gives me additional confidence, as does the observation that 1,300px and 1,200px are the other leading candidates. (Curiously, the site conversion average before was 13.88%; perhaps my underlying traffic changed slightly around the time of the test? This would demonstrate why alternatives need to be tested simultaneously.) A quick and dirty R test of 1,100px vs 1,300px (prop.test(c(2632,2581),c(18164,18071))) indicates the difference isn’t statistically-significant (at p = 0.58), and we might want more data; worse, there is no clear linear relation between conversion and width (the plot is erratic, and a linear fit a dismal p = 0.89):

rates <- read.csv(stdin(),header=TRUE)

Width,N,Rate

1100,18164,0.1449

1300,18071,0.1428

1200,18150,0.1399

800,18599,0.1394

900,18419,0.1378

1400,18378,0.1368

rates$Successes <- rates$N * rates$Rate

rates$Successes <- round(rates$Successes,0)

rates$Failures <-rates$N - rates$Successes

g <- glm(cbind(Successes,Failures) ~ Width, data=rates, family="binomial")

# ...Coefficients:

# Estimate Std. Error z value Pr(>|z|)

# (Intercept) -1.82e+00 4.65e-02 -39.12 <2e-16

# Width 5.54e-06 4.10e-05 0.14 0.89

## not much better:

rates$Width <- as.factor(rates$Width)

rates$Width <- relevel(rates$Width, ref="900")

g2 <- glm(cbind(Successes,Failures) ~ Width, data=rates, family="binomial"); summary(g2)But I want to move on to the next test and by the same logic it is highly unlikely that the difference between them is large or much in 1,300px’s favor (the kind of mistake I care about: switching between 2 equivalent choices doesn’t matter, missing out on an improvement does matter - maximizing β, not minimizing α).

Fonts

The New York Times ran an informal online experiment with a large number of readers (n = 60750) and found that the Baskerville font led to more readers agreeing with a short text passage - this seems plausible enough given their very large sample size and Wikipedia’s note that “The refined feeling of the typeface makes it an excellent choice to convey dignity and tradition.”

Power Analysis

Would this font work its magic on Gwern.net too? Let’s see. The sample size is quite manageable, as over a month I will easily have 60k visits, and they tested 6 fonts, expanding their necessary sample. What sample size do I actually need? Their professor estimates the effect size of Baskerville at 1.5%; I would like my A/B test to have very high statistical power (0.9) and reach more stringent statistical-significance (p < 0.01) so I can go around and in good conscience tell people to use Baskerville. I already know the average “conversion rate” is ~13%, so I get this power calculation:

power.prop.test(p1=0.13+0.015, p2=0.13, power=0.90, sig.level=0.01)

Two-sample comparison of proportions power calculation

n = 15683

p1 = 0.145

p2 = 0.13

sig.level = 0.01

power = 0.9

alternative = two.sided

NOTE: n is number in *each* group15,000 visitors in each group seems reasonable; at ~16k visitors a week, that suggests a few weeks of testing. Of course I’m testing 4 fonts (see below), but that still fits in the ~2 months I’ve allotted for this test.

Implementation

I had previously drawn on the NYT experiment for my site design:

html {

...

font-family: Georgia, "HelveticaNeue-Light", "Helvetica Neue Light", "Helvetica Neue", Helvetica,

Arial, "Lucida Grande", garamond, palatino, verdana, sans-serif;

}I had not used Baskerville but Georgia since Georgia seemed similar and was convenient, but we’ll fix that now. Besides Baskerville & Georgia, we’ll omit Comic Sans (of course), but we can try Trebuchet for a total of 4 fonts (falling back to Georgia):

hunk ./static/template/default.html 28

+ <div class="fontfamily_class1"></div>

...

hunk ./static/template/default.html 121

+ fontfamily: [

+ {

+ name: 'Baskerville',

+ "fontfamily_class1": "<style>html { font-family: Baskerville, Georgia; }</style>",

+ "fontfamily_class2": ""

+ },

+ {

+ name: 'Georgia',

+ "fontfamily_class1": "<style>html { font-family: Georgia; }</style>",

+ "fontfamily_class2": ""

+ },

+ {

+ name: 'Trebuchet',

+ "fontfamily_class1": "<style>html { font-family: 'Trebuchet MS', Georgia; }</style>",

+ "fontfamily_class2": ""

+ },

+ {

+ name: 'Helvetica',

+ "fontfamily_class1": "<style>html { font-family: Helvetica, Georgia; }</style>",

+ "fontfamily_class2": ""

+ }

+ ],Results

Running from 2013-04-14 to 2013-06-16:

Font |

Type |

Visits |

Conversion |

|---|---|---|---|

Trebuchet |

sans |

35,473 |

13.81% |

Baskerville |

serif |

36,021 |

13.73% |

Helvetica |

sans |

35,656 |

13.43% |

Georgia |

serif |

35,833 |

13.31% |

sans |

71,129 |

13.62% |

|

serif |

71,854 |

13.52% |

|

142,983 |

13.57% |

The sample size for each font is 20k higher than I projected due to the enormous popularity of an analysis of the lifetimes of Google services I finished during the test. Regardless, it’s clear that the results - with double the total sample size of the NYT experiment, focused on fewer fonts - are disappointing and there seems to be very little difference between fonts.

Analysis

Picking the most extreme difference, between Trebuchet and Georgia, the difference is close to the usual definition of statistical-significance:

prop.test(c(0.1381*35473,0.1331*35833),c(35473,35833))

# 2-sample test for equality of proportions with continuity correction

#

# data: c(0.1381 * 35473, 0.1331 * 35833) out of c(35473, 35833)

# X-squared = 3.76, df = 1, p-value = 0.0525

# alternative hypothesis: two.sided

# 95% confidence interval:

# -5.394e-05 1.005e-02

# sample estimates:

# prop 1 prop 2

# 0.1381 0.1331Which naturally implies that the much smaller difference between Trebuchet and Baskerville is not statistically-significant:

prop.test(c(0.1381*35473,0.1373*36021), c(35473,36021))

# 2-sample test for equality of proportions with continuity correction

#

# data: c(0.1381 * 35473, 0.1373 * 36021) out of c(35473, 36021)

# X-squared = 0.0897, df = 1, p-value = 0.7645

# alternative hypothesis: two.sided

# 95% confidence interval:

# -0.00428 0.00588Since there’s only small differences between individual fonts, I wondered if there might be a difference between the two sans-serifs and the two serifs. If we lump the 4 fonts into those 2 categories and look at the small difference in mean conversion rate:

prop.test(c(0.1362*71129,0.1352*71854), c(71129,71854))

# 2-sample test for equality of proportions with continuity correction

#

# data: c(0.1362 * 71129, 0.1352 * 71854) out of c(71129, 71854)

# X-squared = 0.2963, df = 1, p-value = 0.5862

# alternative hypothesis: two.sided

# 95% confidence interval:

# -0.002564 0.004564Nothing doing there either. More generally:

rates <- read.csv(stdin(),header=TRUE)

Font,Serif,N,Rate

Trebuchet,FALSE,35473,0.1381

Baskerville,TRUE,6021,0.1373

Helvetica,FALSE,35656,0.1343

Georgia,TRUE,5833,0.1331

rates$Successes <- rates$N * rates$Rate

rates$Successes <- round(rates$Successes,0)

rates$Failures <-rates$N - rates$Successes

g <- glm(cbind(Successes,Failures) ~ Font, data=rates, family="binomial"); summary(g)

# ...Coefficients:

# Estimate Std. Error z value Pr(>|z|)

# (Intercept) -1.83745 0.03744 -49.08 <2e-16

# FontGeorgia -0.03692 0.05374 -0.69 0.49

# FontHelvetica -0.02591 0.04053 -0.64 0.52

# FontTrebuchet 0.00634 0.04048 0.16 0.88With essentially no meaningful differences between conversion rates, this suggests that however fonts matter, they don’t matter for reading duration. So I feel free to pick the font that appeals to me visually, which is Baskerville.

Line Height

I have seen complaints that lines on Gwern.net are “too closely spaced” or “run together” or “cramped”, referring to the line height (the CSS property line-height). I set the CSS to line-height: 150%; to deal with this objection, but this was a simple hack based on rough eyeballing of it, and it was done before I changed the max-width and font-family settings after the previous testing. So it’s worth testing some variants.

Most web design guides seem to suggest a safe default of 120%, rather than my current 150%. If we try to test each decile plus one on the outside, that’d give us 110, 120, 130, 140, 150, 160 or 6 options, which combined with the expected small effect, would require an unreasonable sample size (and I have nothing in the pipeline I expect might catch fire like the Google analysis and deliver an excess >50k visits). So I’ll try just 120/130/140/150, and schedule a similar block of time as fonts (ending the experiment on 2013-08-16, with presumably >70k datapoints).

Implementation

hunk ./static/template/default.html 30

- <div class="fontfamily_class1"></div>

+ <div class="linewidth_class1"></div>

hunk ./static/template/default.html 156

- fontfamily:

+ linewidth:

hunk ./static/template/default.html 158

- name: 'Baskerville',

- "fontfamily_class1": "<style>html { font-family: Baskerville, Georgia; }</style>",

- "fontfamily_class2": ""

+ name: 'Line120',

+ "linewidth_class1": "<style>div#content { line-height: 120%;}</style>",

+ "linewidth_class2": ""

hunk ./static/template/default.html 163

- name: 'Georgia',

- "fontfamily_class1": "<style>html { font-family: Georgia; }</style>",

- "fontfamily_class2": ""

+ name: 'Line130',

+ "linewidth_class1": "<style>div#content { line-height: 130%;}</style>",

+ "linewidth_class2": ""

hunk ./static/template/default.html 168

- name: 'Trebuchet',

- "fontfamily_class1": "<style>html { font-family: 'Trebuchet MS', Georgia; }</style>",

- "fontfamily_class2": ""

+ name: 'Line140',

+ "linewidth_class1": "<style>div#content { line-height: 140%;}</style>",

+ "linewidth_class2": ""

hunk ./static/template/default.html 173

- name: 'Helvetica',

- "fontfamily_class1": "<style>html { font-family: Helvetica, Georgia; }</style>",

- "fontfamily_class2": ""

+ name: 'Line150',

+ "linewidth_class1": "<style>div#content { line-height: 150%;}</style>",

+ "linewidth_class2": ""Analysis

From 2013-06-15–2013-08-1513ya:

line % |

n |

Conversion % |

|---|---|---|

130 |

18,124 |

15.26 |

150 |

17,459 |

15.22 |

120 |

17,773 |

14.92 |

140 |

17,927 |

14.92 |

71,283 |

15.08 |

Just from looking at the miserably small difference between the most extreme percentages (15.26 - 14.92 = 0.34%), we can predict that nothing here was statistically-significant:

x1 <- 18124; x2 <- 17927; prop.test(c(x1*0.1524, x2*0.1476), c(x1,x2))

# 2-sample test for equality of proportions with continuity correction

#

# data: c(x1 * 0.1524, x2 * 0.1476) out of c(x1, x2)

# X-squared = 1.591, df = 1, p-value = 0.2072I changed the 150% to 130% for the heck of it, even though the difference between 130 and 150 was trivially small:

rates <- read.csv(stdin(),header=TRUE)

Width,N,Rate

130,18124,0.1526

150,17459,0.1522

120,17773,0.1492

140,17927,0.1492

rates$Successes <- rates$N * rates$Rate

rates$Successes <- round(rates$Successes,0)

rates$Failures <-rates$N - rates$Successes

rates$Width <- as.factor(rates$Width)

g <- glm(cbind(Successes,Failures) ~ Width, data=rates, family="binomial")

# ...Coefficients:

# Estimate Std. Error z value Pr(>|z|)

# (Intercept) -1.74e+00 2.11e-02 -82.69 <2e-16

# Width130 2.65e-02 2.95e-02 0.90 0.37

# Width140 9.17e-06 2.97e-02 0.00 1.00

# Width150 2.32e-02 2.98e-02 0.78 0.44Null Test

One of the suggestions in the A/B testing papers was to run a “null” A/B test (or “A/A test”) where the payload is empty but the A/B testing framework is still measuring conversions etc. By definition, the null hypothesis of “no difference” should be true and at an alpha of 0.05, only 5% of the time would the null tests yield a p < 0.05 (which is very different from the usual situation). The interest here is that it’s possible that something is going wrong in one’s A/B setup or in general, and so if one gets a “statistically-significant” result, it may be worthwhile investigating this anomaly.

It’s easy to switch from the lineheight test to the null test; just rename the variables for Google Analytics, and empty the payloads:

hunk ./static/template/default.html 30

- <div class="linewidth_class1"></div>

+ <div class="null_class1"></div>

hunk ./static/template/default.html 158

- linewidth: [

+ null: [

+ ...]]

hunk ./static/template/default.html 160

- name: 'Line120',

- "linewidth_class1": "<style>div#content { line-height: 120%;}</style>",

+ name: 'null1',

+ "null_class1": "",

hunk ./static/template/default.html 165

- { ...

- name: 'Line130',

- "linewidth_class1": "<style>div#content { line-height: 130%;}</style>",

- "linewidth_class2": ""

- },

- {

- name: 'Line140',

- "linewidth_class1": "<style>div#content { line-height: 140%;}</style>",

- "linewidth_class2": ""

- },

- {

- name: 'Line150',

- "linewidth_class1": "<style>div#content { line-height: 150%;}</style>",

+ name: 'null2',

+ "null_class1": "",

+ ... }Since any difference due to the testing framework should be noticeable, this will be a shorter experiment, from 15 August to 29 August.

Results

While amusingly the first pair of 1k hits resulted in a dramatic 18% vs 14% result, this quickly disappeared into a much more normal-looking set of data:

option |

n |

conversion |

|---|---|---|

null2 |

7,359 |

16.23% |

null1 |

7,488 |

15.89% |

14,847 |

16.06% |

Analysis

Ah, but can we reject the null hypothesis that [] == []? In a rare victory for null-hypothesis-significance-testing, we do not commit a Type I error:

x1 <- 7359; x2 <- 7488; prop.test(c(x1*0.1623, x2*0.1589), c(x1,x2))

# 2-sample test for equality of proportions with continuity correction

#

# data: c(x1 * 0.1623, x2 * 0.1589) out of c(x1, x2)

# X-squared = 0.2936, df = 1, p-value = 0.5879

# alternative hypothesis: two.sided

# 95% confidence interval:

# -0.008547 0.015347Hurray—we controlled our false-positive error rate! But seriously, it is nice to see that ABalytics does not seem to be broken & favoring either option and any results driven by placement in the array of options.

Text & Background Color

As part of the generally monochromatic color scheme, the background was off-white (grey) and the text was black:

html { ...

background-color: #FCFCFC; /* off-white */

color: black;

... }The hyperlinks, on the other hand, make use of an off-black color: #303C3C, partially motivated by Ian Storm Taylor’s advice to “Never Use Black”. I wonder - should all the text be off-black too? And which combination is best? White/black? Off-white/black? Off-white/off-black? White/off-black? Let’s try all 4 combinations here.

Implementation

The usual:

hunk ./static/template/default.html 30

- <div class="underline_class1"></div>

+ <div class="ground_class1"></div>

hunk ./static/template/default.html 155

- underline: [

+ ground: [

hunk ./static/template/default.html 157

- name: 'underlined',

- "underline_class1": "<style>a { color: #303C3C; text-decoration: underline; }</style>",

- "underline_class2": ""

+ name: 'bw',

+ "ground_class1": "<style>html { background-color: white; color: black; }</style>",

+ "ground_class2": ""

hunk ./static/template/default.html 162

- name: 'notUnderlined',

- "underline_class1": "<style>a { color: #303C3C; text-decoration: none; }</style>",

- "underline_class2": ""

+ name: 'obw',

+ "ground_class1": "<style>html { background-color: white; color: #303C3C; }</style>",

+ "ground_class2": ""

+ },

+ {

+ name: 'bow',

+ "ground_class1": "<style>html { background-color: #FCFCFC; color: black; }</style>",

+ "ground_class2": ""

+ },

+ {

+ name: 'obow',

+ "ground_class1": "<style>html { background-color: #FCFCFC; color: #303C3C; }</style>",

+ "ground_class2": ""

... ]]Data

I am a little curious about this one, so I scheduled a full month and half: 10 September - 20 October. Due to far more traffic than anticipated from submissions to Hacker News, I cut it short by 10 days to avoid wasting traffic on a test which was done (a total n of 231,599 was more than enough). The results:

Version |

n |

Conversion |

|---|---|---|

bw |

58,237 |

12.90% |

obow |

58,132 |

12.62% |

bow |

57,576 |

12.48% |

obw |

57,654 |

12.44% |

Analysis

rates <- read.csv(stdin(),header=TRUE)

Black,White,N,Rate

TRUE,TRUE,58237,0.1290

FALSE,FALSE,58132,0.1262

TRUE,FALSE,57576,0.1248

FALSE,TRUE,57654,0.1244

rates$Successes <- rates$N * rates$Rate

rates$Successes <- round(rates$Successes,0)

rates$Failures <-rates$N - rates$Successes

g <- glm(cbind(Successes,Failures) ~ Black * White, data=rates, family="binomial")

summary(g)

# ...Coefficients:

# Estimate Std. Error z value Pr(>|z|)

# (Intercept) -1.9350 0.0125 -154.93 <2e-16

# BlackTRUE -0.0128 0.0177 -0.72 0.47

# WhiteTRUE -0.0164 0.0178 -0.92 0.36

# BlackTRUE:WhiteTRUE 0.0545 0.0250 2.17 0.03

#

# (Dispersion parameter for binomial family taken to be 1)

#

# Null deviance: 6.8625e+00 on 3 degrees of freedom

# Residual deviance: -1.1758e-11 on 0 degrees of freedom

# AIC: 50.4

summary(step(g))

# same thingSo we can estimate the net effect of the 4 possibilities:

Black, White: -0.0128 + -0.0164 + 0.0545 = 0.0253

Off-black, Off-white: 0 + 0 + 0 = 0

Black, Off-white: -0.0128 + 0 + 0 = -0.0128

Off-black, White: 0 + -0.0164 + 0 = -0.0164

The results exactly match the data’s rankings.

So, this suggests a change to the CSS: we switch the default background color from #FCFCFC to white, while leaving the default color its current black.

Reader Lucas asks in the comment sections whether, since we would expect new visitors to the website to be less likely to read a page in full than a returning visitor (who knows what they’re in for & probably wants more), whether including such a variable (which is something Google Analytics does track) might improve the analysis. It’s easy to ask GA for “New vs Returning Visitor” so I did:

rates <- read.csv(stdin(),header=TRUE)

Black,White,Type,N,Rate

FALSE,TRUE,new,36695,0.1058

FALSE,TRUE,old,21343,0.1565

FALSE,FALSE,new,36997,0.1043

FALSE,FALSE,old,21537,0.1588

TRUE,TRUE,new,36600,0.1073

TRUE,TRUE,old,22274,0.1613

TRUE,FALSE,new,36409,0.1075

TRUE,FALSE,old,21743,0.1507

rates$Successes <- rates$N * rates$Rate

rates$Successes <- round(rates$Successes,0)

rates$Failures <-rates$N - rates$Successes

g <- glm(cbind(Successes,Failures) ~ Black * White + Type, data=rates, family="binomial")

summary(g)

# Coefficients:

# Estimate Std. Error z value Pr(>|z|)

# (Intercept) -2.134459 0.013770 -155.01 <2e-16

# BlackTRUE -0.009219 0.017813 -0.52 0.60

# WhiteTRUE 0.000837 0.017798 0.05 0.96

# BlackTRUE:WhiteTRUE 0.034362 0.025092 1.37 0.17

# Typeold 0.448004 0.012603 35.55 <2e-16B/W: (-0.009219) + 0.000837 + 0.034362 = 0.02598

0 + 0 + 0 = 0

B: (-0.009219) + 0 + 0 = -0.009219

W: 0 + 0.000837 + 0 = 0.000837

And again, 0.02598 > 0.000837. So as one hopes, thank to randomization, adding a missing covariate doesn’t change our conclusion.

List Symbol And Font-Size

I make heavy use of unordered lists in articles; for no particular reason, the symbol denoting the start of each entry in a list is the little black square, rather than the more common little circle. I’ve come to find the little squares a little chunky and ugly, so I want to test that. And I just realized that I never tested font size (just type of font), even though increasing font size one of the most common CSS tweaks around. I don’t have any reason to expect an interaction between these two bits of designs, unlike the previous A/B test, but I like the idea of getting more out of my data, so I am doing another factorial design, this time not 2x2 but 3x5. The options:

ul { list-style-type: square; }

ul { list-style-type: circle; }

ul { list-style-type: disc; }

html { font-size: 100%; }

html { font-size: 105%; }

html { font-size: 110%; }

html { font-size: 115%; }

html { font-size: 120%; }Implementation

A 3x5 design, or 15 possibilities, does get a little bulkier than I’d like:

hunk ./static/template/default.html 30

- <div class="ground_class1"></div>

+ <div class="ulFontSize_class1"></div>

hunk ./static/template/default.html 146

- ground: [

+ ulFontSize: [

hunk ./static/template/default.html 148

- name: 'bw',

- "ground_class1": "<style>html { background-color: white; color: black; }</style>",

- "ground_class2": ""

+ name: 's100',

+ "ulFontSize_class1": "<style>ul { list-style-type: square; }; html { font-size: 100%; }</style>",

+ "ulFontSize_class2": ""

hunk ./static/template/default.html 153

- name: 'obw',

- "ground_class1": "<style>html { background-color: white; color: #303C3C; }</style>",

- "ground_class2": ""

+ name: 's105',

+ "ulFontSize_class1": "<style>ul { list-style-type: square; }; html { font-size: 105%; }</style>",

+ "ulFontSize_class2": ""

hunk ./static/template/default.html 158

- name: 'bow',

- "ground_class1": "<style>html { background-color: #FCFCFC; color: black; }</style>",

- "ground_class2": ""

+ name: 's110',

+ "ulFontSize_class1": "<style>ul { list-style-type: square; }; html { font-size: 110%; }</style>",

+ "ulFontSize_class2": ""

hunk ./static/template/default.html 163

- name: 'obow',

- "ground_class1": "<style>html { background-color: #FCFCFC; color: #303C3C; }</style>",

- "ground_class2": ""

+ name: 's115',

+ "ulFontSize_class1": "<style>ul { list-style-type: square; }; html { font-size: 115%; }</style>",

+ "ulFontSize_class2": ""

+ },

+ {

+ name: 's120',

+ "ulFontSize_class1": "<style>ul { list-style-type: square; }; html { font-size: 120%; }</style>",

+ "ulFontSize_class2": ""

+ },

+ {

+ name: 'c100',

+ "ulFontSize_class1": "<style>ul { list-style-type: circle; }; html { font-size: 100%; }</style>",

+ "ulFontSize_class2": ""

+ },

+ {

+ name: 'c105',

+ "ulFontSize_class1": "<style>ul { list-style-type: circle; }; html { font-size: 105%; }</style>",

+ "ulFontSize_class2": ""

+ },

+ {

+ name: 'c110',

+ "ulFontSize_class1": "<style>ul { list-style-type: circle; }; html { font-size: 110%; }</style>",

+ "ulFontSize_class2": ""

+ },

+ {

+ name: 'c115',

+ "ulFontSize_class1": "<style>ul { list-style-type: circle; }; html { font-size: 115%; }</style>",

+ "ulFontSize_class2": ""

+ },

+ {

+ name: 'c120',

+ "ulFontSize_class1": "<style>ul { list-style-type: circle; }; html { font-size: 120%; }</style>",

+ "ulFontSize_class2": ""

+ },

+ {

+ name: 'd100',

+ "ulFontSize_class1": "<style>ul { list-style-type: disc; }; html { font-size: 100%; }</style>",

+ "ulFontSize_class2": ""

+ },

+ {

+ name: 'd105',

+ "ulFontSize_class1": "<style>ul { list-style-type: disc; }; html { font-size: 105%; }</style>",

+ "ulFontSize_class2": ""

+ },

+ {

+ name: 'd110',

+ "ulFontSize_class1": "<style>ul { list-style-type: disc; }; html { font-size: 110%; }</style>",

+ "ulFontSize_class2": ""

+ },

+ {

+ name: 'd115',

+ "ulFontSize_class1": "<style>ul { list-style-type: disc; }; html { font-size: 115%; }</style>",

+ "ulFontSize_class2": ""

+ },

+ {

+ name: 'd120',

+ "ulFontSize_class1": "<style>ul { list-style-type: disc; }; html { font-size: 120%; }</style>",

+ "ulFontSize_class2": ""

... ]]Data

I halted the A/B test on 27 October because I was noticing clear damage as compared to my default CSS. The results were:

List icon |

Font zoom |

n |

Reading conversion rate |

|---|---|---|---|

square |

100% |

4,763 |

16.38% |

disc |

100% |

4,759 |

16.18% |

disc |

110% |

4,716 |

16.09% |

circle |

115% |

4,933 |

15.95% |

circle |

100% |

4,872 |

15.85% |

circle |

110% |

4,920 |

15.53% |

circle |

120% |

5,114 |

15.51% |

square |

115% |

4,815 |

15.51% |

square |

110% |

4,927 |

15.47% |

circle |

105% |

5,101 |

15.33% |

square |

105% |

4,775 |

14.85% |

disc |

115% |

4,797 |

14.78% |

disc |

105% |

5,006 |

14.72% |

disc |

120% |

4,912 |

14.56% |

square |

120% |

4,786 |

13.96% |

73,196 |

15.38% |

Analysis

Incorporating visitor type:

rates <- read.csv(stdin(),header=TRUE)

Ul,Size,Type,N,Rate

c,120,old,2673,0.1650

c,115,old,2643,0.1854

c,105,new,2636,0.1392

d,105,old,2635,0.1613

s,110,old,2596,0.1749

s,120,old,2593,0.1678

s,105,new,2582,0.1243

d,120,old,2559,0.1649

c,110,new,2558,0.1298

d,110,new,2555,0.1307

c,100,old,2553,0.2002

c,105,old,2539,0.1713

d,115,old,2524,0.1565

s,115,new,2516,0.1391

c,110,old,2505,0.1741

d,100,new,2502,0.1431

c,120,new,2500,0.1284

s,110,new,2491,0.1265

c,115,new,2483,0.1228

d,120,new,2452,0.1277

d,105,new,2448,0.1364

c,100,new,2436,0.1199

d,115,new,2435,0.1437

s,100,new,2411,0.1497

s,120,new,2411,0.1161

s,105,old,2387,0.1571

s,115,old,2365,0.1674

d,100,old,2358,0.1735

s,100,old,2329,0.1803

d,110,old,2235,0.1888

rates$Successes <- rates$N * rates$Rate

rates$Successes <- round(rates$Successes,0)

rates$Failures <-rates$N - rates$Successes

g <- glm(cbind(Successes,Failures) ~ Ul * Size + Type, data=rates, family="binomial"); summary(g)

# ...Coefficients:

# Estimate Std. Error z value Pr(>|z|)

# (Intercept) -1.389310 0.270903 -5.13 2.9e-07

# Uld -0.103201 0.386550 -0.27 0.789

# Uls 0.055036 0.389109 0.14 0.888

# Size -0.004397 0.002458 -1.79 0.074

# Uld:Size 0.000842 0.003509 0.24 0.810

# Uls:Size -0.000741 0.003533 -0.21 0.834

# Typeold 0.317126 0.020507 15.46 < 2e-16

summary(step(g))

# ...Coefficients:

# Estimate Std. Error z value Pr(>|z|)

# (Intercept) -1.40555 0.15921 -8.83 <2e-16

# Size -0.00436 0.00144 -3.02 0.0025

# Typeold 0.31725 0.02051 15.47 <2e-16

#

## examine just the list type alone, since the Size result is clear.

summary(glm(cbind(Successes,Failures) ~ Ul + Type, data=rates, family="binomial"))

# ...Coefficients:

# Estimate Std. Error z value Pr(>|z|)

# (Intercept) -1.8725 0.0208 -89.91 <2e-16

# Uld -0.0106 0.0248 -0.43 0.67

# Uls -0.0265 0.0249 -1.07 0.29

# Typeold 0.3163 0.0205 15.43 <2e-16

summary(glm(cbind(Successes,Failures) ~ Ul + Type, data=rates[rates$Size==100,], family="binomial"))

# ...Coefficients:

# Estimate Std. Error z value Pr(>|z|)

# (Intercept) -1.8425 0.0465 -39.61 < 2e-16

# Uld -0.0141 0.0552 -0.26 0.80

# Uls 0.0353 0.0551 0.64 0.52

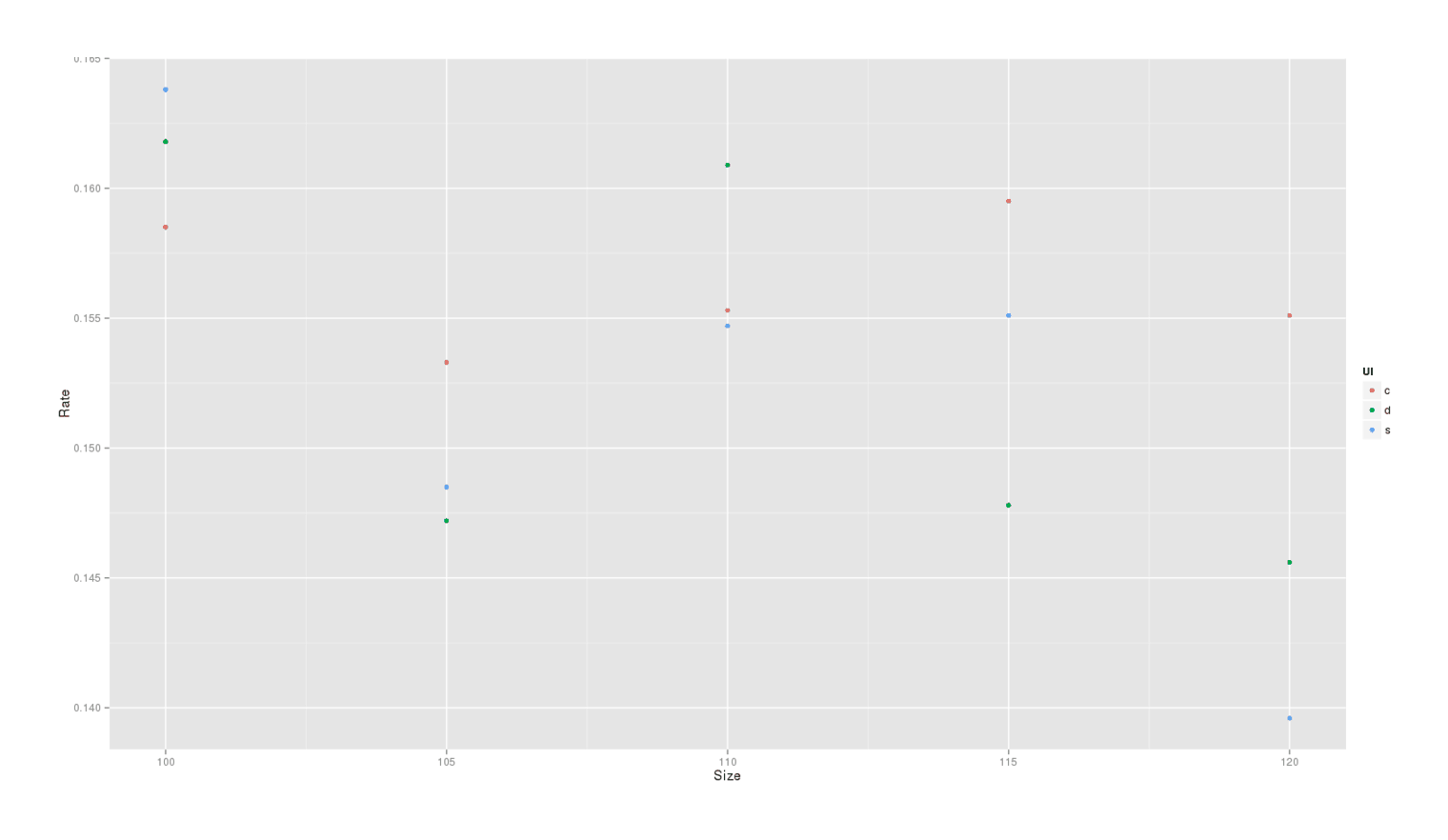

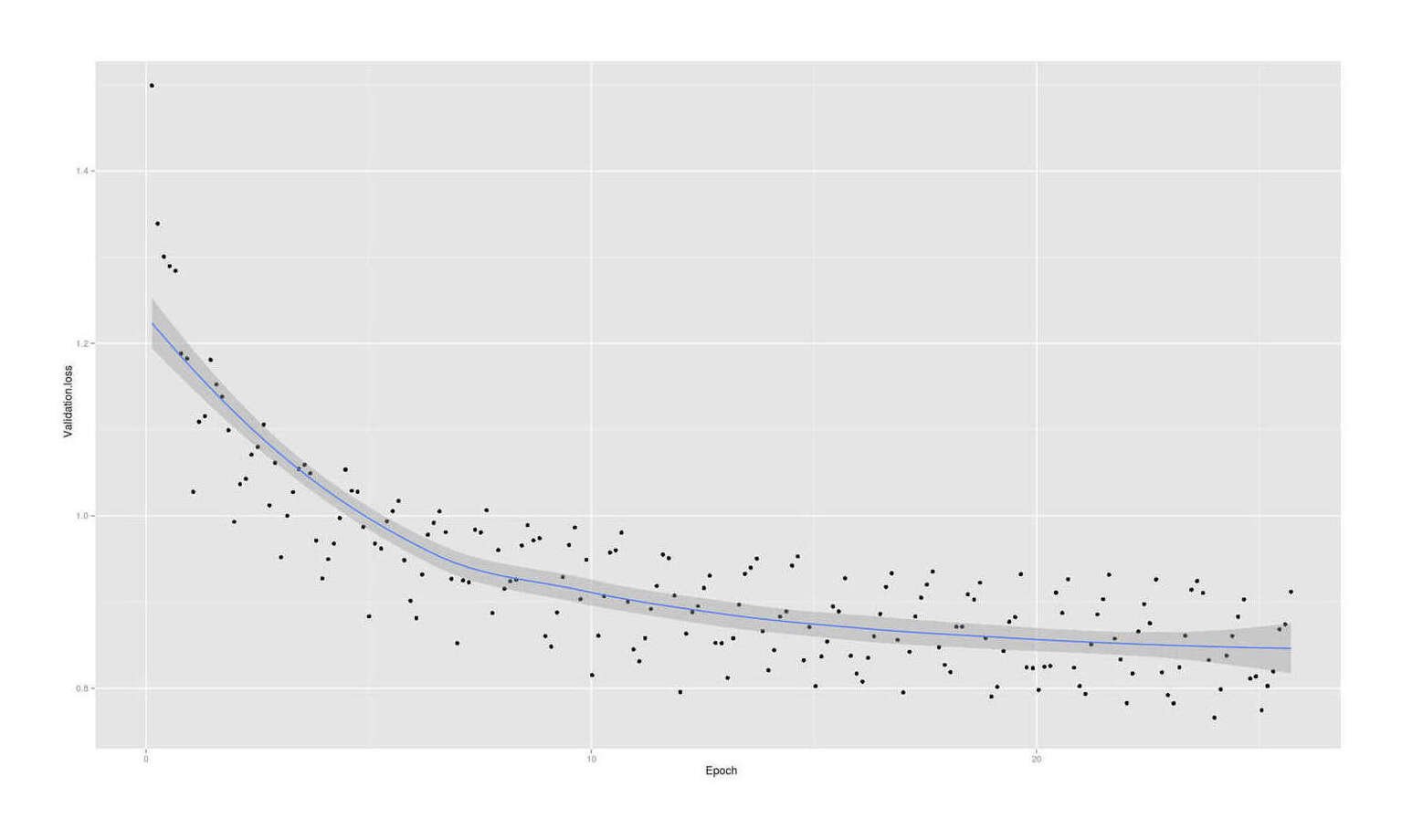

# Typeold 0.3534 0.0454 7.78 7.3e-15The results are a little confusing in factorial form: it seems pretty clear that Size is bad and that 100% performs best, but what’s going on with the list icon type? Do we have too little data or is it interacting with the font size somehow? I find it a lot clearer when plotted:

library(ggplot2)

qplot(Size,Rate,color=Ul,data=rates)

Reading rate, split by font size, then by list icon type

Immediately the negative effect of increasing the font size jumps out, but it’s easier to understand the list icon estimates: square performs the best in the 100% (the original default) font size condition but it performs poorly in the other font sizes, which is why it seems to do only medium-well compared to the others. Given how much better 100% performs than the others, I’m inclined to ignore their results and keep the squares.

100% and squares, however, were the original CSS settings, so this means I will make no changes to the existing CSS based on these results.

Blockquote Formatting

Another bit of formatting I’ve been meaning to test for a while is seeing how well Readability’s pull-quotes next to blockquotes perform, and to check whether my zebra-striping of nested blockquotes is helpful or harmful.

The Readability thing goes like this:

blockquote: : before {

content: "\201C";

filter: alpha(opacity=20);

font-family: "Constantia", Georgia, 'Hoefler Text', 'Times New Roman', serif;

font-size: 4em;

left: -0.5em;

opacity: .2;

position: absolute;

top: .25em }The current blockquote striping goes thusly:

blockquote, blockquote blockquote blockquote,

blockquote blockquote blockquote blockquote blockquote {

z-index: -2;

background-color: rgb(245, 245, 245); }

blockquote blockquote, blockquote blockquote blockquote blockquote,

blockquote blockquote blockquote blockquote blockquote blockquote {

background-color: rgb(235, 235, 235); }Implementation

This is another 2x2 design since we can use the Readability quotes or not, and the zebra-striping or not.

hunk ./static/css/default.css 271

-blockquote, blockquote blockquote blockquote,

- blockquote blockquote blockquote blockquote blockquote {

- z-index: -2;

- background-color: rgb(245, 245, 245); }

-blockquote blockquote, blockquote blockquote blockquote blockquote,

- blockquote blockquote blockquote blockquote blockquote blockquote {

- background-color: rgb(235, 235, 235); }

+/* blockquote, blockquote blockquote blockquote, */

+/* blockquote blockquote blockquote blockquote blockquote { */

+/* z-index: -2; */

+/* background-color: rgb(245, 245, 245); } */

+/* blockquote blockquote, blockquote blockquote blockquote blockquote, */

+/*blockquote blockquote blockquote blockquote blockquote blockquote { */

+/* background-color: rgb(235, 235, 235); } */

hunk ./static/template/default.html 30

- <div class="ulFontSize_class1"></div>

+ <div class="blockquoteFormatting_class1"></div>

hunk ./static/template/default.html 148

- ulFontSize: [

+ blockquoteFormatting: [

hunk ./static/template/default.html 150

- name: 's100',

- "ulFontSize_class1": "<style>ul { list-style-type: square; }; html { font-size: 100%; }</style>",

- "ulFontSize_class2": ""

+ name: 'rz',

+ "blockquoteFormatting_class1": "<style>blockquote: : before { content: '\201C';

filter: alpha(opacity=20);

font-family: 'Constantia', Georgia, 'Hoefler Text', 'Times New Roman', serif; font-size: 4em;left: -0.5em;

opacity: .2; position: absolute; top: .25em }; blockquote, blockquote blockquote blockquote,

blockquote blockquote blockquote blockquote blockquote { z-index: -2; background-color: rgb(245, 245, 245); };

blockquote blockquote, blockquote blockquote blockquote blockquote,

blockquote blockquote blockquote blockquote blockquote blockquote { background-color: rgb(235, 235, 235); }</style>",

+ "blockquoteFormatting_class2": ""

hunk ./static/template/default.html 155

- name: 's105',

- "ulFontSize_class1": "<style>ul { list-style-type: square; }; html { font-size: 105%; }</style>",

- "ulFontSize_class2": ""

+ name: 'orz',

+ "blockquoteFormatting_class1": "<style>blockquote, blockquote blockquote blockquote,

blockquote blockquote blockquote blockquote blockquote { z-index: -2; background-color: rgb(245, 245, 245); };

blockquote blockquote, blockquote blockquote blockquote blockquote,

blockquote blockquote blockquote blockquote blockquote blockquote { background-color: rgb(235, 235, 235); }</style>",

+ "blockquoteFormatting_class2": ""

hunk ./static/template/default.html 160

- name: 's110',

- "ulFontSize_class1": "<style>ul { list-style-type: square; }; html { font-size: 110%; }</style>",

- "ulFontSize_class2": ""

+ name: 'roz',

+ "blockquoteFormatting_class1": "<style>blockquote: : before { content: '\201C';

filter: alpha(opacity=20);

font-family: 'Constantia', Georgia, 'Hoefler Text', 'Times New Roman', serif; font-size: 4em;left: -0.5em;

opacity: .2; position: absolute; top: .25em }</style>",

+ "blockquoteFormatting_class2": ""

hunk ./static/template/default.html 165

- name: 's115',

- "ulFontSize_class1": "<style>ul { list-style-type: square; }; html { font-size: 115%; }</style>",

- "ulFontSize_class2": ""

- },

- {

- name: 's120',

- "ulFontSize_class1": "<style>ul { list-style-type: square; }; html { font-size: 120%; }</style>",

- "ulFontSize_class2": ""

- },

- {

- name: 'c100',

- "ulFontSize_class1": "<style>ul { list-style-type: circle; }; html { font-size: 100%; }</style>",

- "ulFontSize_class2": ""

- },

- {

- name: 'c105',

- "ulFontSize_class1": "<style>ul { list-style-type: circle; }; html { font-size: 105%; }</style>",

- "ulFontSize_class2": ""

- },

- {

- name: 'c110',

- "ulFontSize_class1": "<style>ul { list-style-type: circle; }; html { font-size: 110%; }</style>",

- "ulFontSize_class2": ""

- },

- {

- name: 'c115',

- "ulFontSize_class1": "<style>ul { list-style-type: circle; }; html { font-size: 115%; }</style>",

- "ulFontSize_class2": ""

- },

- {

- name: 'c120',

- "ulFontSize_class1": "<style>ul { list-style-type: circle; }; html { font-size: 120%; }</style>",

- "ulFontSize_class2": ""

- },

- {

- name: 'd100',

- "ulFontSize_class1": "<style>ul { list-style-type: disc; }; html { font-size: 100%; }</style>",

- "ulFontSize_class2": ""

- },

- {

- name: 'd105',

- "ulFontSize_class1": "<style>ul { list-style-type: disc; }; html { font-size: 105%; }</style>",

- "ulFontSize_class2": ""

- },

- {

- name: 'd110',

- "ulFontSize_class1": "<style>ul { list-style-type: disc; }; html { font-size: 110%; }</style>",

- "ulFontSize_class2": ""

- },

- {

- name: 'd115',

- "ulFontSize_class1": "<style>ul { list-style-type: disc; }; html { font-size: 115%; }</style>",

- "ulFontSize_class2": ""

- },

- {

- name: 'd120',

- "ulFontSize_class1": "<style>ul { list-style-type: disc; }; html { font-size: 120%; }</style>",

- "ulFontSize_class2": ""

+ name: 'oroz',

+ "blockquoteFormatting_class1": "<style></style>",

+ "blockquoteFormatting_class2": ""

... ]]Data

Readability Quote |

Blockquote highlighting |

n |

Conversion Rate |

|---|---|---|---|

no |

yes |

11,663 |

20.04% |

yes |

yes |

11,514 |

19.86% |

no |

no |

11,464 |

19.21% |

yes |

no |

10,669 |

18.51% |

45,310 |

19.42% |

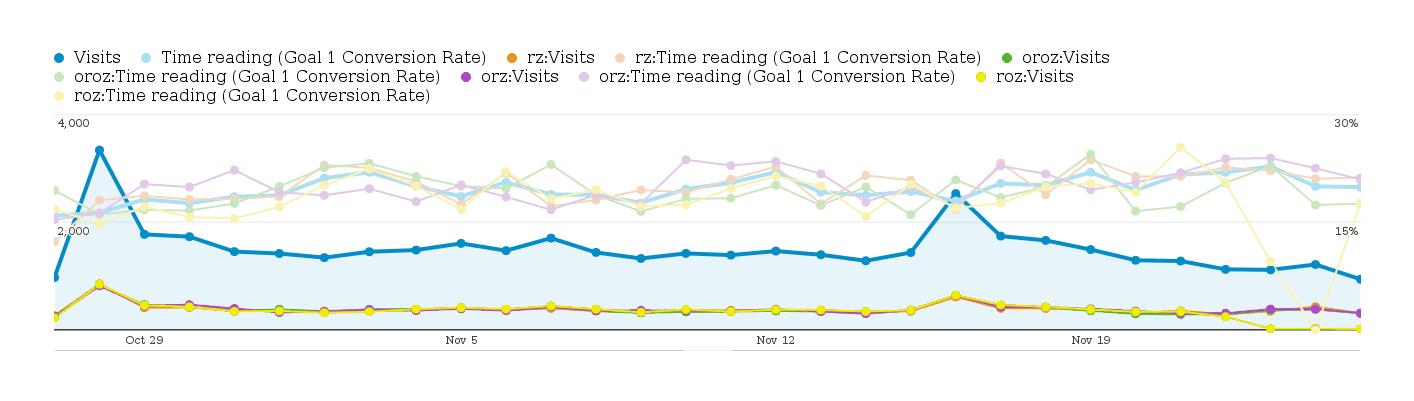

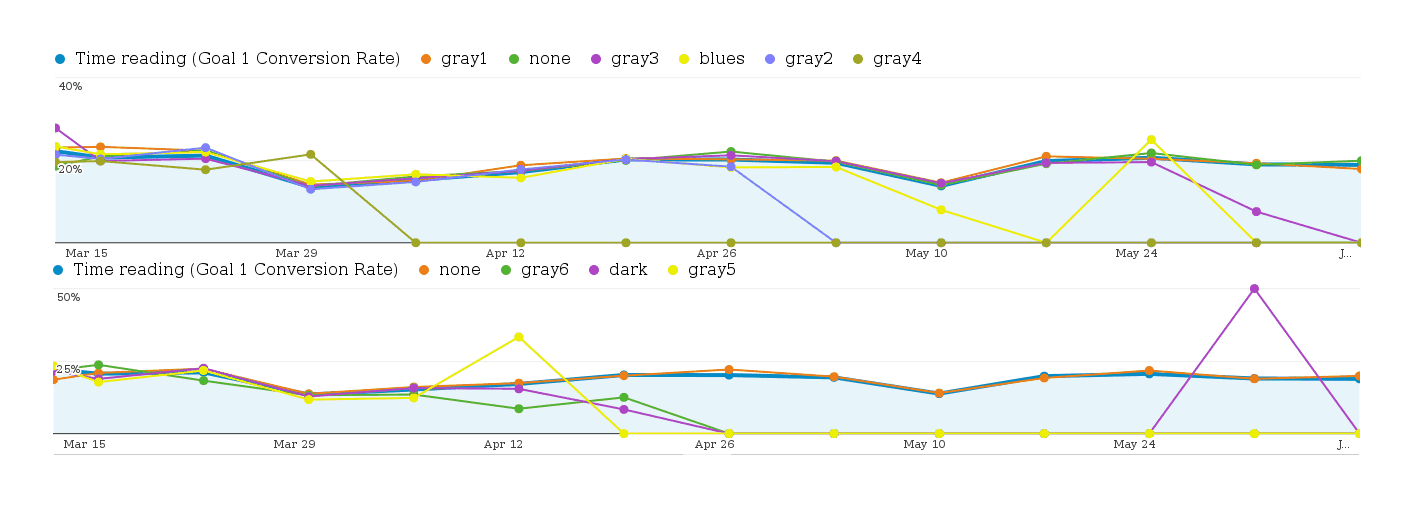

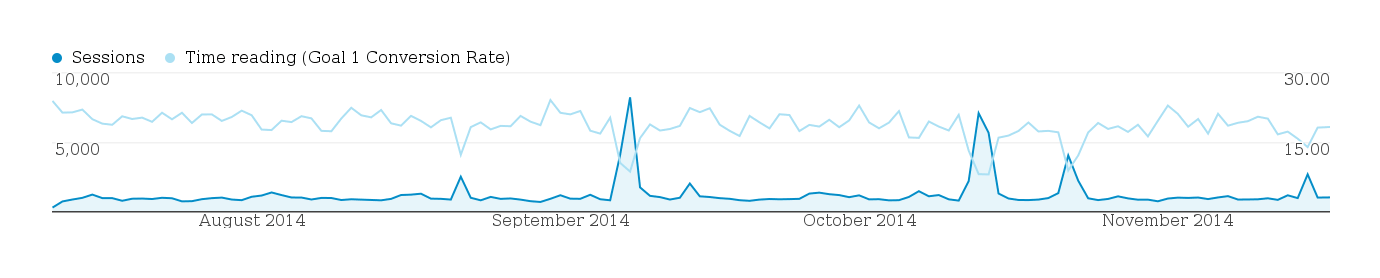

I discovered during this experiment that I could graph the conversion rate of each condition separately:

Google Analytics view on blockquote factorial test conversions, by day

What I like about this graph is how it demonstrates some basic statistical points:

the more traffic, the smaller sampling error is and the closer the 4 conditions are to their true values as they cluster together. This illustrates how even what seems like a large difference based on a large amount of data, may still be - unintuitively - dominated by sampling error

day to day, any condition can be on top; no matter which one proves superior and which version is the worst, we can spot days where the worst version looks better than the best version. This illustrates how insidious selection biases or choice of datapoints can be: we can easily lie and show black is white, if we can just manage to cherrypick a little bit.

the underlying traffic does not itself appear to be completely stable or consistent. There are a lot of movements which look like the underlying visitors may be changing in composition slightly and responding slightly. This harks back to the paper’s warning that for some tests, no answer was possible as the responses of visitors kept changing which version was performing best.

Analysis

rates <- read.csv(stdin(),header=TRUE)

Readability,Zebra,Type,N,Rate

FALSE,FALSE,new,7191,0.1837

TRUE,TRUE,new,7182,0.1910

FALSE,TRUE,new,7112,0.1800

TRUE,FALSE,new,6508,0.1804

FALSE,TRUE,old,4652,0.2236

TRUE,FALSE,old,4452,0.1995

TRUE,TRUE,old,4412,0.2201

FALSE,FALSE,old,4374,0.2046

rates$Successes <- rates$N * rates$Rate

rates$Successes <- round(rates$Successes,0)

rates$Failures <-rates$N - rates$Successes

g <- glm(cbind(Successes,Failures) ~ Readability * Zebra + Type, data=rates, family="binomial"); summary(g)

# ...Coefficients:

# Estimate Std. Error z value Pr(>|z|)

# (Intercept) -1.5095 0.0255 -59.09 <2e-16

# ReadabilityTRUE -0.0277 0.0340 -0.81 0.42

# ZebraTRUE 0.0327 0.0331 0.99 0.32

# ReadabilityTRUE:ZebraTRUE 0.0609 0.0472 1.29 0.20

# Typeold 0.1788 0.0239 7.47 8e-14

summary(step(g))

# ...Coefficients:

# Estimate Std. Error z value Pr(>|z|)

# (Intercept) -1.5227 0.0197 -77.20 < 2e-16

# ZebraTRUE 0.0627 0.0236 2.66 0.0079

# Typeold 0.1782 0.0239 7.45 9.7e-14The top-performing variant is the status quo (no Readability-style quote, zebra-striped blocks). So we keep it.

Font Size & ToC Background

It was pointed out to me that in my previous font-size test, the clear linear trend may have implied that larger fonts than 100% were bad, but that I was making an unjustified leap in implicitly assuming that 100% was best: if bigger is worse, then mightn’t the optimal font size be something smaller than 100%, like 95%?

And while the blockquote background coloring is a good idea, per the previous test, what about the other place on Gwern.net where I use a light background shading: the Table of Contents? Perhaps it would be better with the same background shading as the blockquotes, or no shading?

Finally, because I am tired of just 2 factors, I throw in a third factor to make it really multifactorial. I picked the number-sizing from the existing list of suggestions.

Each factor has 3 variants, giving 27 conditions:

.num { font-size: 85%; }

.num { font-size: 95%; }

.num { font-size: 100%; }

html { font-size: 85%; }

html { font-size: 95%; }

html { font-size: 100%; }

div#TOC { background: #fff; }

div#TOC { background: #eee; }

div#TOC { background-color: rgb(245, 245, 245); }Implementation

hunk ./static/template/default.html 30

- <div class="blockquoteFormatting_class1"></div>

+ <div class="tocFormatting_class1"></div>

hunk ./static/template/default.html 150

- blockquoteFormatting: [

+ tocFormatting: [

hunk ./static/template/default.html 152

- name: 'rz',

- "blockquoteFormatting_class1": "<style>blockquote:before { display: block; font-size: 200%; color: #ccc; content: open-quote; height: 0px; margin-left: -0.55em; position:relative; }; blockquote blockquote, blockquote blockquote blockquote blockquote, blockquote blockquote blockquote blockquote blockquote blockquote { background-color: rgb(235, 235, 235); }</style>",

- "blockquoteFormatting_class2": ""

+ name: '88f',

+ "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 85%; }; div#TOC { background: #fff; };</style>",

+ "tocFormatting_class2": ""

hunk ./static/template/default.html 157

- name: 'orz',

- "blockquoteFormatting_class1": "<style>blockquote, blockquote blockquote blockquote, blockquote blockquote blockquote blockquote blockquote { z-index: -2; background-color: rgb(245, 245, 245); }; blockquote blockquote, blockquote blockquote blockquote blockquote, blockquote blockquote blockquote blockquote blockquote blockquote { background-color: rgb(235, 235, 235); }</style>",

- "blockquoteFormatting_class2": ""

+ name: '88e',

+ "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 85%; }; div#TOC { background: #eee; }</style>",

+ "tocFormatting_class2": ""

hunk ./static/template/default.html 162

- name: 'oroz',

- "blockquoteFormatting_class1": "<style></style>",

- "blockquoteFormatting_class2": ""

+ name: '88r',

+ "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 85%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '89f',

+ "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 95%; }; div#TOC { background: #fff; }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '89e',

+ "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 95%; }; div#TOC { background: #eee; }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '89f',

+ "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 95%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '81f',

+ "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 100%; }; div#TOC { background: #fff; }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '81e',

+ "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 100%; }; div#TOC { background: #eee; }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '81r',

+ "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 100%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '98f',

+ "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 85%; }; div#TOC { background: #fff; };</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '98e',

+ "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 85%; }; div#TOC { background: #eee; }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '98r',

+ "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 85%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '99f',

+ "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 95%; }; div#TOC { background: #fff; }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '99e',

+ "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 95%; }; div#TOC { background: #eee; }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '99f',

+ "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 95%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '91f',

+ "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 100%; }; div#TOC { background: #fff; }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '91e',

+ "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 100%; }; div#TOC { background: #eee; }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '91r',

+ "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 100%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '18f',

+ "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 85%; }; div#TOC { background: #fff; };</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '18e',

+ "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 85%; }; div#TOC { background: #eee; }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '18r',

+ "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 85%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '19f',

+ "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 95%; }; div#TOC { background: #fff; }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '19e',

+ "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 95%; }; div#TOC { background: #eee; }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '19f',

+ "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 95%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '11f',

+ "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 100%; }; div#TOC { background: #fff; }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '11e',

+ "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 100%; }; div#TOC { background: #eee; }</style>",

+ "tocFormatting_class2": ""

+ },

+ {

+ name: '11r',

+ "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 100%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

+ "tocFormatting_class2": ""

... ]]Analysis

rates <- read.csv(stdin(),header=TRUE)

NumSize,FontSize,TocBg,Type,N,Rate

1,9,e,new,3060,0.1513

8,9,e,new,2978,0.1605

9,1,r,new,2965,0.1548

8,8,f,new,2941,0.1629

1,9,f,new,2933,0.1558

9,9,r,new,2932,0.1576

8,9,f,new,2906,0.1473

1,9,r,new,2901,0.1482

9,9,f,new,2901,0.1420

8,8,r,new,2885,0.1567

1,8,e,new,2876,0.1412

8,1,r,new,2869,0.1593

9,8,f,new,2846,0.1472

1,1,e,new,2844,0.1551

1,8,f,new,2841,0.1457

9,8,e,new,2834,0.1478

8,1,f,new,2833,0.1521

1,8,r,new,2818,0.1544

8,8,e,new,2818,0.1678

8,1,e,new,2810,0.1605

1,1,r,new,2806,0.1775

9,8,r,new,2801,0.1682

9,1,e,new,2799,0.1422

8,9,r,new,2764,0.1548

9,9,e,new,2753,0.1478

1,1,f,new,2750,0.1611

9,1,f,new,2700,0.1537

8,8,r,old,1551,0.2521

9,8,e,old,1519,0.2146

9,8,f,old,1505,0.2153

1,8,e,old,1489,0.2317

1,1,e,old,1475,0.2339

8,1,f,old,1416,0.2112

1,9,r,old,1390,0.2245

8,9,e,old,1388,0.2464

9,9,r,old,1379,0.2466

8,9,r,old,1374,0.1907

1,9,f,old,1361,0.2337

8,8,f,old,1348,0.2322

1,9,e,old,1347,0.2279

1,8,f,old,1340,0.2470

9,1,r,old,1336,0.2605

8,1,r,old,1326,0.2119

8,8,e,old,1321,0.2286

9,1,f,old,1318,0.2398

1,1,r,old,1293,0.2111

1,8,r,old,1293,0.2073

9,9,f,old,1261,0.2411

8,9,f,old,1254,0.2113

9,9,e,old,1240,0.2435

1,1,f,old,1232,0.2240

8,1,e,old,1229,0.2587

9,1,e,old,1182,0.2335

9,8,r,old,1032,0.2403

rates[rates$NumSize==1,]$NumSize <- 100

rates[rates$NumSize==9,]$NumSize <- 95

rates[rates$NumSize==8,]$NumSize <- 85

rates[rates$FontSize==1,]$FontSize <- 100

rates[rates$FontSize==9,]$FontSize <- 95

rates[rates$FontSize==8,]$FontSize <- 85

rates$Successes <- rates$N * rates$Rate

rates$Successes <- round(rates$Successes,0)

rates$Failures <-rates$N - rates$Successes

g <- glm(cbind(Successes,Failures) ~ NumSize * FontSize * TocBg + Type, data=rates, family="binomial"); summary(g)

# ...Coefficients:

# Estimate Std. Error z value Pr(>|z|)

# (Intercept) 0.124770 3.020334 0.04 0.97

# NumSize -0.022262 0.032293 -0.69 0.49

# FontSize -0.012775 0.032283 -0.40 0.69

# TocBgf 4.042812 4.287006 0.94 0.35

# TocBgr 5.356794 4.250778 1.26 0.21

# NumSize:FontSize 0.000166 0.000345 0.48 0.63

# NumSize:TocBgf -0.040645 0.045855 -0.89 0.38

# NumSize:TocBgr -0.054164 0.045501 -1.19 0.23

# FontSize:TocBgf -0.052406 0.045854 -1.14 0.25

# FontSize:TocBgr -0.065503 0.045482 -1.44 0.15

# NumSize:FontSize:TocBgf 0.000531 0.000490 1.08 0.28

# NumSize:FontSize:TocBgr 0.000669 0.000487 1.37 0.17

# Typeold 0.492688 0.015978 30.84 <2e-16

summary(step(g))

# ...Coefficients:

# Estimate Std. Error z value Pr(>|z|)

# (Intercept) 3.808438 1.750144 2.18 0.0295

# NumSize -0.059730 0.018731 -3.19 0.0014

# FontSize -0.052262 0.018640 -2.80 0.0051

# TocBgf -0.844664 0.285387 -2.96 0.0031

# TocBgr -0.747451 0.283304 -2.64 0.0083

# NumSize:FontSize 0.000568 0.000199 2.85 0.0044

# NumSize:TocBgf 0.008853 0.003052 2.90 0.0037

# NumSize:TocBgr 0.008139 0.003030 2.69 0.0072

# Typeold 0.492598 0.015975 30.83 <2e-16The two size tweaks turn out to be unambiguously negative compared to the status quo (with an almost negligible interaction term probably reflecting reader preference for consistency in sizes of letters and numbers - as one gets smaller, the other does better if it’s smaller too). The Table of Contents backgrounds also survive (thanks to the new vs old visitor type covariate adding power): there were 3 background types, e/f/r[gb], and f/r turn out to have negative coefficients, implying that e is best - but e is also the status quo, so no change is recommended.

Multifactorial Roundup

At this point it seems worth asking whether running multifactorials has been worthwhile. The analysis is a bit more difficult, and the more factors there are, the harder to interpret. I’m also not too keen on encoding the combinatorial explosion into a big JS array for ABalytics. In my tests so far, have there been many interactions? A quick tally of the glm()/step() results:

Text & background color:

original: 2 main, 1 two-way interaction

survived: 2 main, 1 two-way interaction

List symbol and font-size:

original: 3 main, 2 two-way interactions

survived: 1 main

Blockquote formatting:

original: 2 main, 1 two-way

survived: 1 main

Font size & ToC background:

original: 4 mains, 5 two-ways, 2 three-ways

survived: 3 mains, 2 two-way

So of the 11 main effects, 9 two-ways, & 2 three-ways, there were confirmed in the reduced models: 7 mains, 3 two-ways (22%), & 0 three-ways (0%). And of the 2 interactions, only the black/white interaction was important (and even there, if I had regressed instead cbind(Successes, Failures) ~ Black + White, black & white would still have positive coefficients, they just would not be statistically-significant, and so I would likely have made the same choice as I did with the interaction data available).

This is not a resounding endorsement so far.

Section Header Capitalization

3x3:

h1, h2, h3, h4, h5 { text-transform: uppercase; }h1, h2, h3, h4, h5 { text-transform: none; }h1, h2, h3, h4, h5 { text-transform: capitalize; }div#header h1 { text-transform: uppercase; }div#header h1 { text-transform: none; }div#header h1 { text-transform: capitalize; }

--- a/static/template/default.html

+++ b/static/template/default.html

@@ -27,7 +27,7 @@

<body>

- <div class="tocFormatting_class1"></div>

+ <div class="headerCaps_class1"></div>

<div id="main">

<div id="sidebar">

@@ -152,141 +152,51 @@

_gaq.push(['_setAccount', 'UA-18912926-1']);

ABalytics.init({

- tocFormatting: [

+ headerCaps: [

{

- name: '88f',

- "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 85%; }; div#TOC { background: #fff; };</style>",

- "tocFormatting_class2": ""

+ name: 'uu',

+ "headerCaps_class1": "<style>h1, h2, h3, h4, h5 { text-transform: uppercase; }; div#header h1 { text-transform: uppercase; }</style>",

+ "headerCaps_class2": ""

},

{

- name: '88e',

- "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 85%; }; div#TOC { background: #eee; }</style>",

- "tocFormatting_class2": ""

+ name: 'un',

+ "headerCaps_class1": "<style>div#header h1 { text-transform: uppercase; }; div#header h1 { text-transform: none; }</style>",

+ "headerCaps_class2": ""

},

{

- name: '88r',

- "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 85%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

- "tocFormatting_class2": ""

+ name: 'uc',

+ "headerCaps_class1": "<style>div#header h1 { text-transform: uppercase; }; div#header h1 { text-transform: capitalize; }</style>",

+ "headerCaps_class2": ""

},

{

- name: '89f',

- "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 95%; }; div#TOC { background: #fff; }</style>",

- "tocFormatting_class2": ""

+ name: 'nu',

+ "headerCaps_class1": "<style>h1, h2, h3, h4, h5 { text-transform: none; }; div#header h1 { text-transform: uppercase; }</style>",

+ "headerCaps_class2": ""

},

{

- name: '89e',

- "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 95%; }; div#TOC { background: #eee; }</style>",

- "tocFormatting_class2": ""

+ name: 'nn',

+ "headerCaps_class1": "<style>h1, h2, h3, h4, h5 { text-transform: none; }; div#header h1 { text-transform: none; }</style>",

+ "headerCaps_class2": ""

},

{

- name: '89r',

- "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 95%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

- "tocFormatting_class2": ""

+ name: 'nc',

+ "headerCaps_class1": "<style>h1, h2, h3, h4, h5 { text-transform: none; }; div#header h1 { text-transform: capitalize; }</style>",

+ "headerCaps_class2": ""

},

{

- name: '81f',

- "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 100%; }; div#TOC { background: #fff; }</style>",

- "tocFormatting_class2": ""

+ name: 'cu',

+ "headerCaps_class1": "<style>h1, h2, h3, h4, h5 { text-transform: capitalize; }; div#header h1 { text-transform: uppercase; }</style>",

+ "headerCaps_class2": ""

},

{

- name: '81e',

- "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 100%; }; div#TOC { background: #eee; }</style>",

- "tocFormatting_class2": ""

+ name: 'cn',

+ "headerCaps_class1": "<style>h1, h2, h3, h4, h5 { text-transform: capitalize; }; div#header h1 { text-transform: none; }</style>",

+ "headerCaps_class2": ""

},

{

- name: '81r',

- "tocFormatting_class1": "<style>.num { font-size: 85%; }; html { font-size: 100%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '98f',

- "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 85%; }; div#TOC { background: #fff; };</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '98e',

- "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 85%; }; div#TOC { background: #eee; }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '98r',

- "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 85%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '99f',

- "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 95%; }; div#TOC { background: #fff; }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '99e',

- "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 95%; }; div#TOC { background: #eee; }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '99r',

- "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 95%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '91f',

- "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 100%; }; div#TOC { background: #fff; }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '91e',

- "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 100%; }; div#TOC { background: #eee; }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '91r',

- "tocFormatting_class1": "<style>.num { font-size: 95%; }; html { font-size: 100%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '18f',

- "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 85%; }; div#TOC { background: #fff; };</style>",

- "tocFormatting_class2": ""

- {

- name: '18e',

- "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 85%; }; div#TOC { background: #eee; }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '18r',

- "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 85%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '19f',

- "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 95%; }; div#TOC { background: #fff; }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '19e',

- "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 95%; }; div#TOC { background: #eee; }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '19r',

- "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 95%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '11f',

- "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 100%; }; div#TOC { background: #fff; }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '11e',

- "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 100%; }; div#TOC { background: #eee; }</style>",

- "tocFormatting_class2": ""

- },

- {

- name: '11r',

- "tocFormatting_class1": "<style>.num { font-size: 100%; }; html { font-size: 100%; }; div#TOC { background-color: rgb(245, 245, 245); }</style>",

- "tocFormatting_class2": ""

+ name: 'cc',

+ "headerCaps_class1": "<style>h1, h2, h3, h4, h5 { text-transform: capitalize; }; div#header h1 { text-transform: capitalize; }</style>",

+ "headerCaps_class2": ""

}

],

}, _gaq);

...)}rates <- read.csv(stdin(),header=TRUE)

Sections,Title,Old,N,Rate

c,u,FALSE,2362, 0.1808

c,n,FALSE,2356,0.1855

c,c,FALSE,2342,0.2003

u,u,FALSE,2341,0.1965

u,c,FALSE,2333,0.1989

n,u,FALSE,2329,0.1928

n,c,FALSE,2323,0.1941

n,n,FALSE,2321,0.1978

u,n,FALSE,2315,0.1965

c,c,TRUE,1370,0.2190

n,u,TRUE,1302,0.2558

u,u,TRUE,1271,0.2919

c,n,TRUE,1258,0.2377

u,c,TRUE,1228,0.2272

n,c,TRUE,1211,0.2337

n,n,TRUE,1200,0.2400

c,u,TRUE,1135,0.2396

u,n,TRUE,1028,0.2442

rates$Successes <- rates$N * rates$Rate

rates$Successes <- round(rates$Successes,0)

rates$Failures <-rates$N - rates$Successes

g <- glm(cbind(Successes,Failures) ~ Sections * Title + Old, data=rates, family="binomial"); summary(g)

# ...Coefficients:

# (Intercept) -1.4552 0.0422 -34.50 <2e-16

# Sectionsn 0.0111 0.0581 0.19 0.848

# Sectionsu 0.0163 0.0579 0.28 0.779

# Titlen -0.0153 0.0579 -0.26 0.791

# Titleu -0.0318 0.0587 -0.54 0.588

# OldTRUE 0.2909 0.0283 10.29 <2e-16

# Sectionsn:Titlen 0.0429 0.0824 0.52 0.603

# Sectionsu:Titlen 0.0419 0.0829 0.51 0.613

# Sectionsn:Titleu 0.0732 0.0825 0.89 0.375

# Sectionsu:Titleu 0.1553 0.0820 1.89 0.058

summary(step(g))

# ...Coefficients:

# Estimate Std. Error z value Pr(>|z|)

# (Intercept) -1.4710 0.0263 -55.95 <2e-16

# Sectionsn 0.0497 0.0337 1.47 0.140

# Sectionsu 0.0833 0.0337 2.47 0.013

# OldTRUE 0.2920 0.0283 10.33 <2e-16Uppercase and ‘none’ beat ‘capitalize’ in both page titles & section headers (interaction does not survive). So I toss in a CSS declaration to uppercase section headers as well as the status quo of the title.

ToC Formatting

After the page title, the next thing a reader will generally see on my pages in the table of contents. It’s been tweaked over the years (particularly by suggestions from Hacker News) but still has some untested aspects, particularly the first two parts of div#TOC:

float: left;

width: 25%;I’d like to test left vs right, and 15,20,25,30,35%, so that’s a 2x5 design. Usual implementation:

diff --git a/static/template/default.html b/static/template/default.html

index 83c6f9c..11c4ada 100644

--- a/static/template/default.html

+++ b/static/template/default.html

@@ -27,7 +27,7 @@

<body>

- <div class="headerCaps_class1"></div>

+ <div class="tocAlign_class1"></div>

<div id="main">

<div id="sidebar">

@@ -152,51 +152,56 @@

_gaq.push(['_setAccount', 'UA-18912926-1']);

ABalytics.init({

- headerCaps: [

+ tocAlign: [

{

- name: 'uu',

- "headerCaps_class1": "<style>h1, h2, h3, h4, h5 { text-transform: uppercase; }; div#header h1 { text-transform: uppercase; }</style>",

- "headerCaps_class2": ""

+ name: 'l15',

+ "tocAlign_class1": "<style>div#TOC { float: left; width: 15%; }</style>",

+ "tocAlign_class2": ""

},

{

- name: 'un',

- "headerCaps_class1": "<style>div#header h1 { text-transform: uppercase; }; div#header h1 { text-transform: none; }</style>",

- "headerCaps_class2": ""

+ name: 'l20',

+ "tocAlign_class1": "<style>div#TOC { float: left; width: 20%; }</style>",

+ "tocAlign_class2": ""

},

{

- name: 'uc',

- "headerCaps_class1": "<style>div#header h1 { text-transform: uppercase; }; div#header h1 { text-transform: capitalize; }</style>",

- "headerCaps_class2": ""

+ name: 'l25',

+ "tocAlign_class1": "<style>div#TOC { float: left; width: 25%; }</style>",

+ "tocAlign_class2": ""

},

{

- name: 'nu',

- "headerCaps_class1": "<style>h1, h2, h3, h4, h5 { text-transform: none; }; div#header h1 { text-transform: uppercase; }</style>",

- "headerCaps_class2": ""

+ name: 'l30',

+ "tocAlign_class1": "<style>div#TOC { float: left; width: 30%; }</style>",

+ "tocAlign_class2": ""

},

{

- name: 'nn',

- "headerCaps_class1": "<style>h1, h2, h3, h4, h5 { text-transform: none; }; div#header h1 { text-transform: none; }</style>",

- "headerCaps_class2": ""

+ name: 'l35',

+ "tocAlign_class1": "<style>div#TOC { float: left; width: 35%; }</style>",

+ "tocAlign_class2": ""

},

{

- name: 'nc',

- "headerCaps_class1": "<style>h1, h2, h3, h4, h5 { text-transform: none; }; div#header h1 { text-transform: capitalize; }</style>",

- "headerCaps_class2": ""

+ name: 'r15',

+ "tocAlign_class1": "<style>div#TOC { float: right; width: 15%; }</style>",

+ "tocAlign_class2": ""

},

{

- name: 'cu',

- "headerCaps_class1": "<style>h1, h2, h3, h4, h5 { text-transform: capitalize; }; div#header h1 { text-transform: uppercase; }</style>",

- "headerCaps_class2": ""

+ name: 'r20',

+ "tocAlign_class1": "<style>div#TOC { float: right; width: 20%; }</style>",

+ "tocAlign_class2": ""

},

{

- name: 'cn',

- "headerCaps_class1": "<style>h1, h2, h3, h4, h5 { text-transform: capitalize; }; div#header h1 { text-transform: none; }</style>",

- "headerCaps_class2": ""

+ name: 'r25',

+ "tocAlign_class1": "<style>div#TOC { float: right; width: 25%; }</style>",

+ "tocAlign_class2": ""

},

{

- name: 'cc',

- "headerCaps_class1": "<style>h1, h2, h3, h4, h5 { text-transform: capitalize; }; div#header h1 { text-transform: capitalize; }</style>",

- "headerCaps_class2": ""

+ name: 'r30',